This post is part of a series about distributed AI across multiple GPUs.

Introduction

Before diving into advanced parallelism techniques, we need to understand the key technologies that enable GPUs to communicate with each other.

But why do GPUs need to communicate in the first place? When training AI models across multiple GPUs, each GPU processes different data batches, but they all need to stay synchronized by sharing gradients during backpropagation or exchanging model weights. The specifics of what gets communicated and when depend on your parallelism strategy, which we’ll explore in depth in the next blog posts. For now, just know that modern AI training is communication-intensive, making efficient GPU-to-GPU data transfer critical for performance.

The Communication Stack

PCIe

PCIe (Peripheral Component Interconnect Express) connects expansion cards like GPUs to the motherboard using independent point-to-point serial lanes. Here’s what different PCIe generations offer for a GPU using 16 lanes:

- Gen4 x16: ~32 GB/s in each direction

- Gen5 x16: ~64 GB/s in each direction

- Gen6 x16: ~128 GB/s in each direction (16 lanes × 8 GB/s per lane = 128 GB/s)

High-end server CPUs typically offer 128 PCIe lanes, and modern GPUs need 16 lanes for optimal bandwidth. This is why you usually see 8 GPUs per server (128 = 16 × 8). Power consumption and physical space in the server chassis also make it impractical to go beyond 8 GPUs in a single node.
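The arithmetic behind these figures can be sketched in a few lines of Python. The per-lane rates are approximations: the Gen6 value is the 8 GB/s per lane quoted above, and the Gen4 and Gen5 values assume each generation doubles the previous one. The function and constant names are made up for illustration.

```python
# Approximate per-lane PCIe throughput in GB/s.
# Gen6 (8 GB/s per lane) is the figure quoted in the text; Gen4 and Gen5
# are assumed to be half and a quarter of that, since each generation doubles.
PER_LANE_GB_PER_S = {"Gen4": 2, "Gen5": 4, "Gen6": 8}


def link_bandwidth(gen: str, lanes: int = 16) -> int:
    """Approximate link bandwidth in GB/s for a device using `lanes` lanes."""
    return PER_LANE_GB_PER_S[gen] * lanes


def max_full_width_gpus(cpu_lanes: int = 128, lanes_per_gpu: int = 16) -> int:
    """How many x16 GPUs a given PCIe lane budget can feed at full width."""
    return cpu_lanes // lanes_per_gpu


for gen in PER_LANE_GB_PER_S:
    print(f"{gen} x16: ~{link_bandwidth(gen)} GB/s")
print("GPUs per 128-lane server:", max_full_width_gpus())
```

In practice some lanes also go to network adapters and storage, so the lane budget is only one of the constraints on GPU count.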

NVLink

NVLink enables direct GPU-to-GPU communication within the same server (node), bypassing the CPU entirely. This NVIDIA-proprietary interconnect creates a direct memory-to-memory pathway between GPUs with huge bandwidth:

- NVLink 3 (A100): ~600 GB/s per GPU

- NVLink 4 (H100): ~900 GB/s per GPU

- NVLink 5 (Blackwell): Up to 1.8 TB/s per GPU

Note on NVLink for CPU-GPU communication

Certain CPU architectures support NVLink as a PCIe replacement, dramatically accelerating CPU-GPU communication by overcoming the PCIe bottleneck in data transfers, such as moving training batches from CPU to GPU. This CPU-GPU NVLink capability makes CPU offloading (a technique that saves VRAM by storing data in RAM instead) practical for real-world AI applications. Since scaling RAM is typically cheaper than scaling VRAM, this approach offers significant economic advantages.

CPUs with NVLink support include IBM POWER8, POWER9, and NVIDIA Grace.

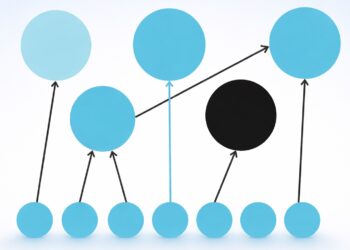

However, there’s a catch. In a server with 8x H100s, each GPU needs to communicate with 7 others, splitting that 900 GB/s into seven point-to-point connections of about 128 GB/s each. That’s where NVSwitch comes in.
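As a quick check of that split, here is a minimal sketch that divides one GPU's NVLink budget evenly across its peers. The even split and the function name are assumptions for illustration; real topologies may distribute links differently.

```python
def per_peer_bandwidth(total_gb_per_s: float, num_gpus: int) -> float:
    """Bandwidth per point-to-point link when one GPU's total NVLink
    bandwidth is divided evenly among all other GPUs in the node."""
    if num_gpus < 2:
        raise ValueError("need at least two GPUs to form a link")
    peers = num_gpus - 1
    return total_gb_per_s / peers


# 8x H100 node, 900 GB/s of NVLink bandwidth per GPU
print(f"{per_peer_bandwidth(900, 8):.1f} GB/s per peer")
```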

NVSwitch

NVSwitch acts as a central hub for GPU communication, dynamically routing (switching, if you will) data between GPUs as needed. With NVSwitch, every Hopper GPU can communicate at 900 GB/s with all other Hopper GPUs simultaneously, i.e. peak bandwidth doesn’t depend on how many GPUs are communicating. This is what makes NVSwitch “non-blocking”. Each GPU connects to several NVSwitch chips via multiple NVLink connections, ensuring maximum bandwidth.

While NVSwitch started as an intra-node solution, it’s been extended to interconnect multiple nodes, creating GPU clusters that support up to 256 GPUs with all-to-all communication at near-local NVLink speeds.

The generations of NVSwitch are:

- First-Generation: Supports up to 16 GPUs per server (compatible with Tesla V100)

- Second-Generation: Also supports up to 16 GPUs with improved bandwidth and lower latency

- Third-Generation: Designed for H100 GPUs, supports up to 256 GPUs

InfiniBand

InfiniBand handles inter-node communication. While much slower (and cheaper) than NVSwitch, it’s commonly used in datacenters to scale to thousands of GPUs. Modern InfiniBand supports NVIDIA GPUDirect® RDMA (Remote Direct Memory Access), letting network adapters access GPU memory directly without CPU involvement (no expensive copying to host RAM).

Current InfiniBand speeds include:

- HDR: ~25 GB/s per port

- NDR: ~50 GB/s per port

- XDR: ~100 GB/s per port

These speeds are significantly slower than intra-node NVLink due to network protocol overhead and the need for two PCIe traversals (one at the sender and one at the receiver).
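To get a feel for the gap, the sketch below converts InfiniBand line rates from Gb/s to GB/s and estimates an ideal transfer time for a payload over each link. The 10 GB payload is a hypothetical value, and the model ignores latency and protocol overhead, so real transfers will be slower.

```python
def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a line rate in Gb/s to GB/s (8 bits per byte)."""
    return gbit_per_s / 8


# HDR and NDR are 200 Gb/s and 400 Gb/s ports; the NVLink figure is the
# per-GPU H100 number from the NVLink section.
LINKS_GB_PER_S = {
    "InfiniBand HDR": gbit_to_gbyte(200),
    "InfiniBand NDR": gbit_to_gbyte(400),
    "NVLink 4 (H100)": 900.0,
}


def ideal_transfer_ms(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Lower bound on transfer time in milliseconds: payload / bandwidth."""
    return payload_gb / bandwidth_gb_per_s * 1000


PAYLOAD_GB = 10.0  # hypothetical payload size, chosen only for illustration
for name, bandwidth in LINKS_GB_PER_S.items():
    print(f"{name}: {ideal_transfer_ms(PAYLOAD_GB, bandwidth):.1f} ms")
```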

Key Design Principles

Understanding Linear Scaling

Linear scaling is the holy grail of distributed computing. In simple terms, it means doubling your GPUs should double your throughput and halve your training time. This happens when communication overhead is minimal compared to computation time, allowing each GPU to operate at full capacity. However, perfect linear scaling is rare in AI workloads because communication requirements grow with the number of devices, and it’s usually impossible to achieve perfect compute-communication overlap (explained next).

The Importance of Compute-Communication Overlap
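One common way to quantify how close a setup is to linear scaling is scaling efficiency: the measured speedup divided by the number of GPUs. The sketch below shows the calculation; the throughput numbers are hypothetical, not measurements.

```python
def speedup(throughput_1gpu: float, throughput_ngpu: float) -> float:
    """How many times faster the multi-GPU run is than the single-GPU run."""
    return throughput_ngpu / throughput_1gpu


def scaling_efficiency(throughput_1gpu: float, throughput_ngpu: float, n: int) -> float:
    """Fraction of ideal linear scaling achieved (1.0 means perfectly linear)."""
    return speedup(throughput_1gpu, throughput_ngpu) / n


# Hypothetical numbers: 1,000 samples/s on 1 GPU, 7,200 samples/s on 8 GPUs
single, multi, gpus = 1000.0, 7200.0, 8
print(f"speedup: {speedup(single, multi):.1f}x")
print(f"efficiency: {scaling_efficiency(single, multi, gpus):.0%}")
```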

When a GPU sits idle waiting for data to be transferred before it can be processed, you’re wasting resources. Communication operations should overlap with computation as much as possible. When that’s not possible, we call that communication an “exposed operation”.

Intra-Node vs. Inter-Node: The Performance Cliff
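A simplified model makes the cost of exposed communication concrete. It assumes a training step has a fixed compute time and a fixed communication time, and that some fraction of the communication can be hidden behind compute. The function, its parameters, and the timings are illustrative assumptions, not a profiler's definition.

```python
def step_time_ms(compute_ms: float, comm_ms: float, overlap_fraction: float) -> float:
    """Step time when `overlap_fraction` (0..1) of communication runs
    concurrently with compute. Whatever is not hidden is exposed and
    adds directly to the step time."""
    if not 0.0 <= overlap_fraction <= 1.0:
        raise ValueError("overlap_fraction must be between 0 and 1")
    # Communication can only hide behind compute that actually exists.
    hidden = min(comm_ms * overlap_fraction, compute_ms)
    exposed = comm_ms - hidden
    return compute_ms + exposed


# Hypothetical step: 100 ms of compute, 40 ms of communication
for fraction in (0.0, 0.5, 1.0):
    print(f"overlap {fraction:.0%}: {step_time_ms(100, 40, fraction):.0f} ms")
```

With no overlap the GPU pays for compute plus all communication. With full overlap the step costs only the compute time, as long as communication is shorter than compute.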

Modern server-grade motherboards support up to 8 GPUs. Within this range, you can often achieve near-linear scaling thanks to high-bandwidth, low-latency intra-node communication.

Once you scale beyond 8 GPUs and start using multiple nodes connected via InfiniBand, you’ll see a significant performance degradation. Inter-node communication is much slower than intra-node NVLink, introducing network protocol overhead, higher latency, and bandwidth limitations. As you add more GPUs, each GPU must coordinate with more peers, spending more time idle waiting for data transfers to complete.
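The sketch below illustrates where the cliff appears: it classifies each pair of GPUs by whether they share a node. It assumes 8 GPUs per node and global ranks numbered consecutively within each node, which is a hypothetical layout used here only for illustration.

```python
GPUS_PER_NODE = 8  # assumed node size, matching the 8-GPU servers above


def node_of(rank: int) -> int:
    """Node index for a global GPU rank, assuming consecutive numbering."""
    return rank // GPUS_PER_NODE


def link_between(rank_a: int, rank_b: int) -> str:
    """Which interconnect a pair of GPUs would use in this simple model."""
    if rank_a == rank_b:
        raise ValueError("a GPU does not need a link to itself")
    return "NVLink" if node_of(rank_a) == node_of(rank_b) else "InfiniBand"


def peer_counts(rank: int, world_size: int) -> dict:
    """Count how many peers of `rank` sit behind each interconnect."""
    counts = {"NVLink": 0, "InfiniBand": 0}
    for other in range(world_size):
        if other != rank:
            counts[link_between(rank, other)] += 1
    return counts


print(peer_counts(0, 8))   # single node
print(peer_counts(0, 16))  # two nodes
```

In this model, going from 8 to 16 GPUs leaves the number of fast intra-node peers unchanged while every newly added peer sits behind the slower inter-node link.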

Conclusion

Follow me on X for more free AI content: @l_cesconetto

Congratulations on making it to the end! In this post you learned about:

- The CPU-GPU and GPU-GPU communication fundamentals: PCIe, NVLink, NVSwitch, and InfiniBand

- Key design principles for distributed GPU computing

You’re now able to make much more informed decisions when designing your AI workloads.

In the next blog post, we’ll dive into our first parallelism technique, Distributed Data Parallelism.