What makes a language model good? Is it predicting the next word in a sentence, or handling tough reasoning tasks that challenge even bright humans? Today's Large Language Models (LLMs) produce fluent text and solve simple problems, but they struggle with challenges that need careful thought, such as hard math or abstract problem-solving.

This limitation comes from how LLMs process information. Most models use System 1-like thinking: fast, pattern-based reactions similar to intuition. While this works for many tasks, it fails when problems require logical reasoning, trying different approaches, and checking results. Enter System 2 thinking, the human method for tackling hard challenges: careful and step-by-step, often needing backtracking to refine conclusions.

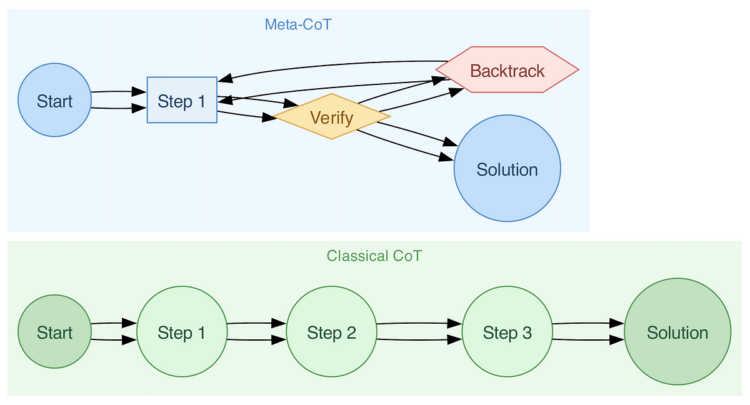

To close this gap, researchers introduced Meta Chain-of-Thought (Meta-CoT). Building on the popular Chain-of-Thought (CoT) method, Meta-CoT lets LLMs model not just the steps of reasoning but the whole process of “thinking through a problem.” This shift mirrors how humans tackle tough questions: by exploring, evaluating, and iterating toward answers.