An astounding number of videos are available on the Web, covering a variety of content from everyday moments people share to historical moments to scientific observations, each of which contains a unique record of the world. The right tools could help researchers analyze these videos, transforming how we understand the world around us.

Videos offer dynamic visual content far richer than static images, capturing movement, changes, and dynamic relationships between entities. Analyzing this complexity, along with the immense diversity of publicly available video data, demands models that go beyond traditional image understanding. Consequently, many of the approaches that perform best on video understanding still rely on specialized models tailored for particular tasks. Recently, there has been exciting progress in this area using video foundation models (ViFMs), such as VideoCLIP, InternVideo, VideoCoCa, and UMT. However, building a ViFM that handles the sheer diversity of video data remains a challenge.

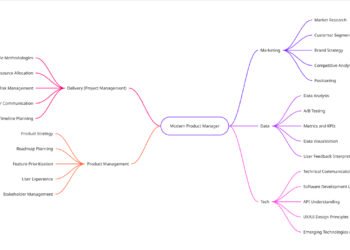

With the goal of building a single model for general-purpose video understanding, we introduce “VideoPrism: A Foundational Visual Encoder for Video Understanding”. VideoPrism is a ViFM designed to handle a wide spectrum of video understanding tasks, including classification, localization, retrieval, captioning, and question answering (QA). We propose innovations in both the pre-training data and the modeling strategy. We pre-train VideoPrism on a massive and diverse dataset: 36 million high-quality video-text pairs and 582 million video clips with noisy or machine-generated parallel text. Our pre-training approach is designed for this hybrid data, learning both from video-text pairs and from the videos themselves. VideoPrism is incredibly easy to adapt to new video understanding challenges, and achieves state-of-the-art performance using a single frozen model.

VideoPrism is a general-purpose video encoder that enables state-of-the-art results over a wide spectrum of video understanding tasks, including classification, localization, retrieval, captioning, and question answering, by producing video representations from a single frozen model.

Pre-training data

A powerful ViFM needs a very large collection of videos on which to train, similar to other foundation models (FMs) such as those for large language models (LLMs). Ideally, we would want the pre-training data to be a representative sample of all the videos in the world. While most of these videos naturally do not have perfect captions or descriptions, even imperfect text can provide useful information about the semantic content of the video.

To give our model the best possible starting point, we put together a massive pre-training corpus consisting of several public and private datasets, including YT-Temporal-180M, InternVid, VideoCC, WTS-70M, etc. This includes 36 million carefully selected videos with high-quality captions, along with an additional 582 million clips with varying levels of noisy text (like auto-generated transcripts). To our knowledge, this is the largest and most diverse video training corpus of its kind.

Statistics on the video-text pre-training data. The large variations of the CLIP similarity scores (the higher, the better) demonstrate the diverse caption quality of our pre-training data, which is a byproduct of the various ways used to harvest the text.

Two-stage training

The VideoPrism model architecture stems from the standard vision transformer (ViT), with a factorized design that sequentially encodes spatial and temporal information following ViViT. Our training approach leverages both the high-quality video-text data and the video data with noisy text mentioned above. To start, we use contrastive learning (an approach that minimizes the distance between positive video-text pairs while maximizing the distance between negative video-text pairs) to teach our model to match videos with their own text descriptions, including imperfect ones. This builds a foundation for matching semantic language content to visual content.
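As a rough illustration of the contrastive step described above, the sketch below computes a symmetric InfoNCE-style loss (the CLIP-style formulation) over a small batch of precomputed embeddings, where row i of the video batch and row i of the text batch form a positive pair and every other pairing is a negative. The function names, the temperature of 0.07, and the use of cosine similarity are assumptions for illustration; this post does not specify VideoPrism's exact loss.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def log_softmax_at(logits, index):
    """Numerically stable log-softmax of `logits`, evaluated at position `index`."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return logits[index] - log_sum


def contrastive_loss(video_embs, text_embs, temperature=0.07):
    """Hypothetical symmetric InfoNCE loss over a batch of video/text pairs."""
    v = [l2_normalize(e) for e in video_embs]
    t = [l2_normalize(e) for e in text_embs]
    n = len(v)
    # sim[i][j]: temperature-scaled cosine similarity of video i and text j.
    sim = [[sum(a * b for a, b in zip(v[i], t[j])) / temperature for j in range(n)]
           for i in range(n)]
    # Video-to-text: each video should pick out its own caption.
    v2t = -sum(log_softmax_at(sim[i], i) for i in range(n)) / n
    # Text-to-video: each caption should pick out its own video.
    cols = [[sim[i][j] for i in range(n)] for j in range(n)]
    t2v = -sum(log_softmax_at(cols[j], j) for j in range(n)) / n
    return (v2t + t2v) / 2


# Toy usage: correctly paired embeddings give a much lower loss than swapped ones.
matched = contrastive_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])
swapped = contrastive_loss([[1, 0], [0, 1]], [[0, 1], [1, 0]])
```

Pulling positives together and pushing in-batch negatives apart in this way is what lets even noisy captions contribute: a caption only has to be more similar to its own video than to the other videos in the batch.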

After video-text contrastive training, we leverage the collection of videos without text descriptions. Here, we build on the masked video modeling framework to predict masked patches in a video, with a few improvements. We train the model to predict both the video-level global embedding and the token-wise embeddings from the first-stage model, to effectively leverage the knowledge acquired in that stage. We then randomly shuffle the predicted tokens to prevent the model from learning shortcuts.
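A minimal sketch of the second-stage objective, under stated assumptions: a frozen first-stage model (the "teacher") supplies per-token embeddings and a global embedding for the full video, and the second-stage model (the "student") sees a masked video and must regress the teacher's embeddings at the masked positions plus the teacher's global embedding. Mean squared error, mean pooling for the global embedding, the equal loss weighting, and every function name here are hypothetical choices for illustration. The token-shuffling step is deliberately left out, since this post does not say exactly where in the pipeline it is applied.

```python
import random


def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def mean_pool(tokens):
    """Average token embeddings into one video-level (global) embedding."""
    dim = len(tokens[0])
    return [sum(tok[d] for tok in tokens) / len(tokens) for d in range(dim)]


def random_mask(num_tokens, mask_ratio, seed=0):
    """Pick the token positions hidden from the student (hypothetical helper)."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(num_tokens), int(num_tokens * mask_ratio)))


def stage2_loss(student_tokens, student_global, teacher_tokens, teacher_global,
                masked_positions, global_weight=1.0):
    """Hypothetical distillation loss: token-wise targets plus a global target."""
    token_loss = sum(mse(student_tokens[i], teacher_tokens[i])
                     for i in masked_positions) / len(masked_positions)
    global_loss = mse(student_global, teacher_global)
    return token_loss + global_weight * global_loss


# Toy usage with 4 tokens of dimension 2; positions 1 and 2 are masked.
teacher = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
student = [[1.0, 0.0], [0.5, 0.5], [1.0, 1.0], [0.0, 0.0]]
loss = stage2_loss(student, mean_pool(student), teacher, mean_pool(teacher),
                   masked_positions=[1, 2])
```

Using the first-stage model's embeddings as regression targets, rather than raw pixels, is one way to carry the semantics learned from text into training on video-only data.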

What is unique about VideoPrism's setup is that we use two complementary pre-training signals: text descriptions and the visual content within a video. Text descriptions often focus on what things look like, while the video content provides information about movement and visual dynamics. This enables VideoPrism to excel in tasks that demand an understanding of both appearance and motion.

Results

We conduct extensive evaluation on VideoPrism across four broad categories of video understanding tasks: video classification and localization, video-text retrieval, video captioning and question answering, and scientific video understanding. VideoPrism achieves state-of-the-art performance on 30 out of 33 video understanding benchmarks, all with minimal adaptation of a single, frozen model.

VideoPrism compared to the previous best-performing FMs.

Classification and localization

We evaluate VideoPrism on an existing large-scale video understanding benchmark (VideoGLUE) covering classification and localization tasks. We find that (1) VideoPrism outperforms all of the other state-of-the-art FMs, and (2) no other single model consistently came in second place. This tells us that VideoPrism has learned to effectively pack a variety of video signals into one encoder, from semantics at different granularities to appearance and motion cues, and that it works well across a variety of video sources.
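The "single frozen model" setup means the encoder's weights are never updated; only a small task-specific head is trained on top of its embeddings. The sketch below illustrates that split with a hypothetical `frozen_encode` stand-in (it just averages per-frame feature vectors and has no trainable parameters) and a nearest-centroid classifier as the lightweight head. Neither is VideoPrism or the heads used in VideoGLUE, which this post does not describe; the point is only that all learning happens in the head.

```python
import math


def frozen_encode(video):
    """Hypothetical stand-in for a frozen video encoder.

    A 'video' here is a list of per-frame feature vectors, and its embedding
    is their average. Nothing in this function is ever trained.
    """
    dim = len(video[0])
    return [sum(frame[d] for frame in video) / len(video) for d in range(dim)]


def fit_centroids(videos, labels):
    """Train the lightweight head: one mean frozen embedding per class."""
    sums, counts = {}, {}
    for video, label in zip(videos, labels):
        emb = frozen_encode(video)
        acc = sums.setdefault(label, [0.0] * len(emb))
        for d, x in enumerate(emb):
            acc[d] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [x / counts[label] for x in acc] for label, acc in sums.items()}


def predict(centroids, video):
    """Assign the class whose centroid is closest to the frozen embedding."""
    emb = frozen_encode(video)
    return min(centroids, key=lambda label: math.dist(emb, centroids[label]))


# Toy usage with two-dimensional "frame features".
train_videos = [[[1.0, 0.0], [1.0, 0.2]], [[0.9, 0.1]],
                [[0.0, 1.0]], [[0.1, 0.9], [0.0, 1.0]]]
train_labels = ["run", "run", "sit", "sit"]
head = fit_centroids(train_videos, train_labels)
```

Because the encoder is shared and untouched, adding a new task costs only a new head, which is what makes a single frozen encoder practical across many benchmarks.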

Combining with LLMs

We further explore combining VideoPrism with LLMs to unlock its ability to handle various video-language tasks. In particular, when paired with a text encoder (following LiT) or a language decoder (such as PaLM-2), VideoPrism can be utilized for video-text retrieval, video captioning, and video QA tasks. We compare the combined models on a broad and challenging set of vision-language benchmarks. VideoPrism sets the new state of the art on most benchmarks. From the visual results, we find that VideoPrism is capable of understanding complex motions and appearances in videos (e.g., the model can recognize the different colors of spinning objects on the window in the visual examples below). These results demonstrate that VideoPrism is strongly compatible with language models.

We show qualitative results using VideoPrism with a text encoder for video-text retrieval (first row) and adapted to a language decoder for video QA (second and third rows). For the video-text retrieval examples, the blue bars indicate the embedding similarities between the videos and the text queries.

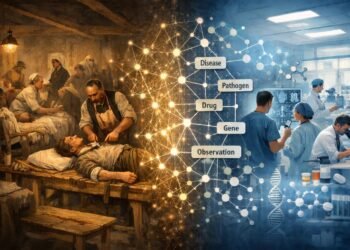
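The retrieval examples score each video by the similarity of its embedding to the text query's embedding. A minimal sketch of that ranking step, assuming the video embeddings (from the video encoder) and the query embedding (from the paired text encoder) already live in a shared space and that cosine similarity is the score; the vectors below are toy values, not real model outputs, and the function names are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def rank_videos(text_emb, video_embs):
    """Return (video_id, similarity) pairs, most similar first."""
    scores = [(vid, cosine(text_emb, emb)) for vid, emb in video_embs.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy usage: one text query against three candidate videos.
query = [1.0, 0.0]
videos = {"a": [0.9, 0.1], "b": [0.0, 1.0], "c": [0.5, 0.5]}
ranking = rank_videos(query, videos)
```

Since the video embeddings do not depend on the query, they can be computed once with the frozen encoder and reused for every new text query.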

Scientific applications

Finally, we test VideoPrism on datasets used by scientists across domains, including fields such as ethology, behavioral neuroscience, and ecology. These datasets typically require domain expertise to annotate, so we leverage existing scientific datasets open-sourced by the community, including Fly vs. Fly, CalMS21, ChimpACT, and KABR. VideoPrism not only performs exceptionally well, but actually surpasses models designed specifically for those tasks. This suggests that tools like VideoPrism have the potential to transform how scientists analyze video data across different fields.

Conclusion

With VideoPrism, we introduce a powerful and versatile video encoder that sets a new standard for general-purpose video understanding. Our emphasis on both building a massive and varied pre-training dataset and developing innovative modeling techniques has been validated through our extensive evaluations. Not only does VideoPrism consistently outperform strong baselines, but its unique ability to generalize positions it well for tackling an array of real-world applications. Because of its potential broad use, we are committed to continuing further responsible research in this space, guided by our AI Principles. We hope VideoPrism paves the way for future breakthroughs at the intersection of AI and video analysis, helping to realize the potential of ViFMs across domains such as scientific discovery, education, and healthcare.

Acknowledgements

This blog post is made on behalf of all the VideoPrism authors: Long Zhao, Nitesh B. Gundavarapu, Liangzhe Yuan, Hao Zhou, Shen Yan, Jennifer J. Sun, Luke Friedman, Rui Qian, Tobias Weyand, Yue Zhao, Rachel Hornung, Florian Schroff, Ming-Hsuan Yang, David A. Ross, Huisheng Wang, Hartwig Adam, Mikhail Sirotenko, Ting Liu, and Boqing Gong. We sincerely thank David Hendon for their product management efforts, and Alex Siegman, Ramya Ganeshan, and Victor Gomes for their program and resource management efforts. We also thank Hassan Akbari, Sherry Ben, Yoni Ben-Meshulam, Chun-Te Chu, Sam Clearwater, Yin Cui, Ilya Figotin, Anja Hauth, Sergey Ioffe, Xuhui Jia, Yeqing Li, Lu Jiang, Zu Kim, Dan Kondratyuk, Bill Mark, Arsha Nagrani, Caroline Pantofaru, Sushant Prakash, Cordelia Schmid, Bryan Seybold, Mojtaba Seyedhosseini, Amanda Sadler, Rif A. Saurous, Rachel Stigler, Paul Voigtlaender, Pingmei Xu, Chaochao Yan, Xuan Yang, and Yukun Zhu for the discussions, support, and feedback that greatly contributed to this work. We are grateful to Jay Yagnik, Rahul Sukthankar, and Tomas Izo for their enthusiastic support for this project. Lastly, we thank Tom Small, Jennifer J. Sun, Hao Zhou, Nitesh B. Gundavarapu, Luke Friedman, and Mikhail Sirotenko for their tremendous help with making this blog post.
