largely a

It’s not essentially the most thrilling subject, however increasingly corporations are paying consideration. So it’s value digging into which metrics to trace to really measure that efficiency.

It additionally helps to have correct evals in place anytime you push modifications, to ensure issues don’t go haywire.

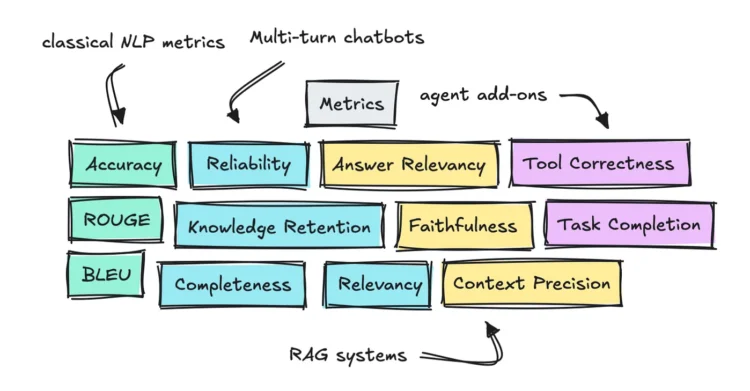

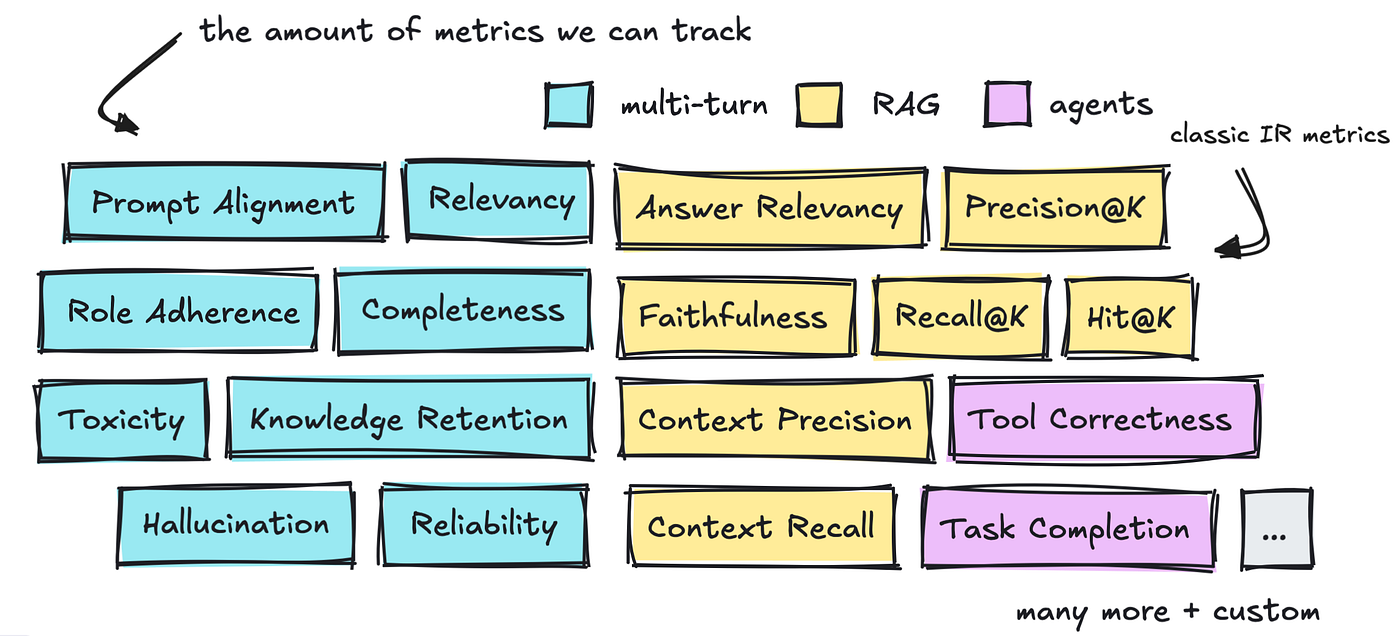

So, for this text I’ve executed some analysis on frequent metrics for multi-turn chatbots, RAG, and agentic purposes.

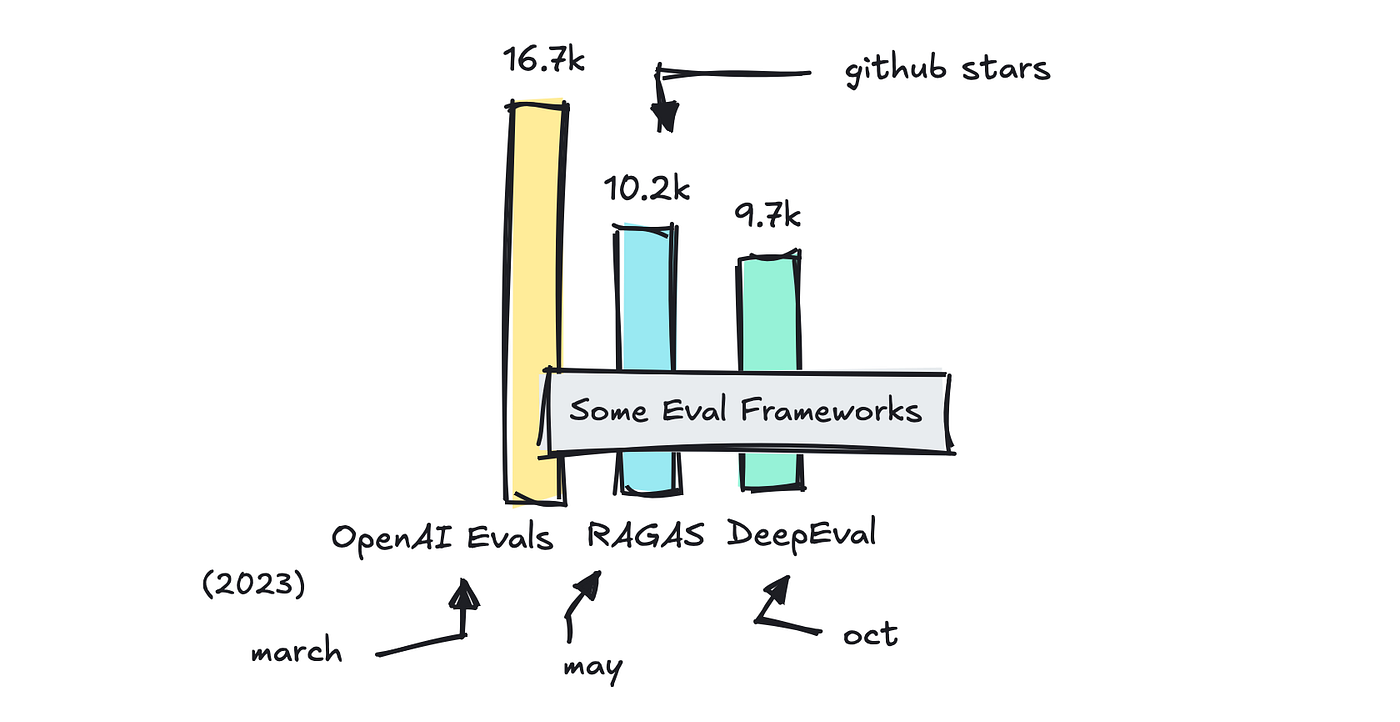

I’ve additionally included a fast overview of frameworks like DeepEval, RAGAS, and OpenAI’s Evals library, so you understand when to choose what.

This text is break up in two. If you happen to’re new, Half 1 talks a bit about conventional metrics like BLEU and ROUGE, touches on LLM benchmarks, and introduces the concept of utilizing an LLM as a choose in evals.

If this isn’t new to you, you possibly can skip this. Half 2 digs into evaluations of various sorts of LLM purposes.

What we did earlier than

If you happen to’re effectively versed in how we consider NLP duties and the way public benchmarks work, you possibly can skip this primary half.

If you happen to’re not, it’s good to know what the sooner metrics like accuracy and BLEU have been initially used for and the way they work, together with understanding how we take a look at for public benchmarks like MMLU.

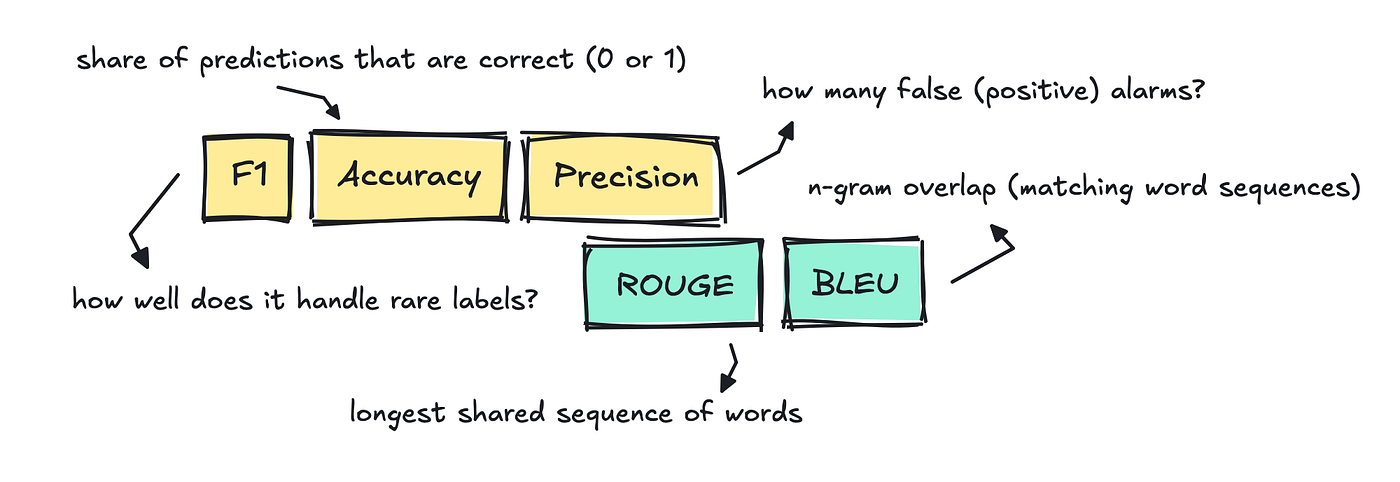

Evaluating NLP duties

After we consider conventional NLP duties equivalent to classification, translation, summarization, and so forth, we flip to conventional metrics like accuracy, precision, F1, BLEU, and ROUGE

These metrics are nonetheless used in the present day, however largely when the mannequin produces a single, simply comparable “proper” reply.

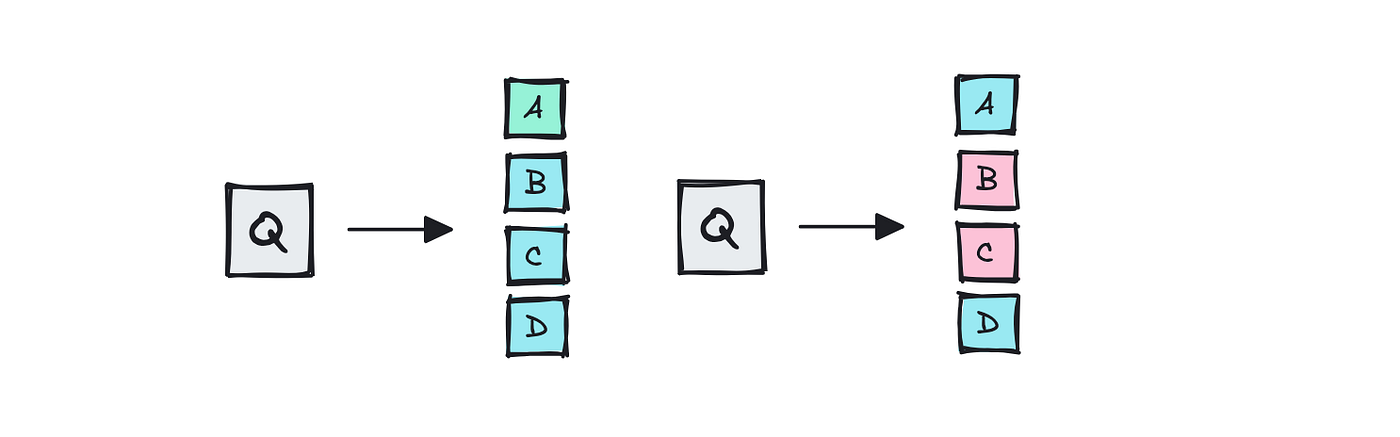

Take classification, for instance, the place the duty is to assign every textual content a single label. To check this, we will use accuracy by evaluating the label assigned by the mannequin to the reference label within the eval dataset to see if it received it proper.

It’s very clear-cut: if it assigns the mistaken label, it will get a 0; if it assigns the proper label, it will get a 1.

This implies if we construct a classifier for a spam dataset with 1,000 emails, and the mannequin labels 910 of them accurately, the accuracy could be 0.91.

For textual content classification, we frequently additionally use F1, precision, and recall.

On the subject of NLP duties like summarization and machine translation, individuals typically used ROUGE and BLEU to see how intently the mannequin’s translation or abstract traces up with a reference textual content.

Each scores depend overlapping n-grams, and whereas the path of the comparability is totally different, basically it simply means the extra shared phrase chunks, the upper the rating.

That is fairly simplistic, since if the outputs use totally different wording, it would rating low.

All of those metrics work greatest when there’s a single proper reply to a response and are sometimes not the best alternative for the LLM purposes we construct in the present day.

LLM benchmarks

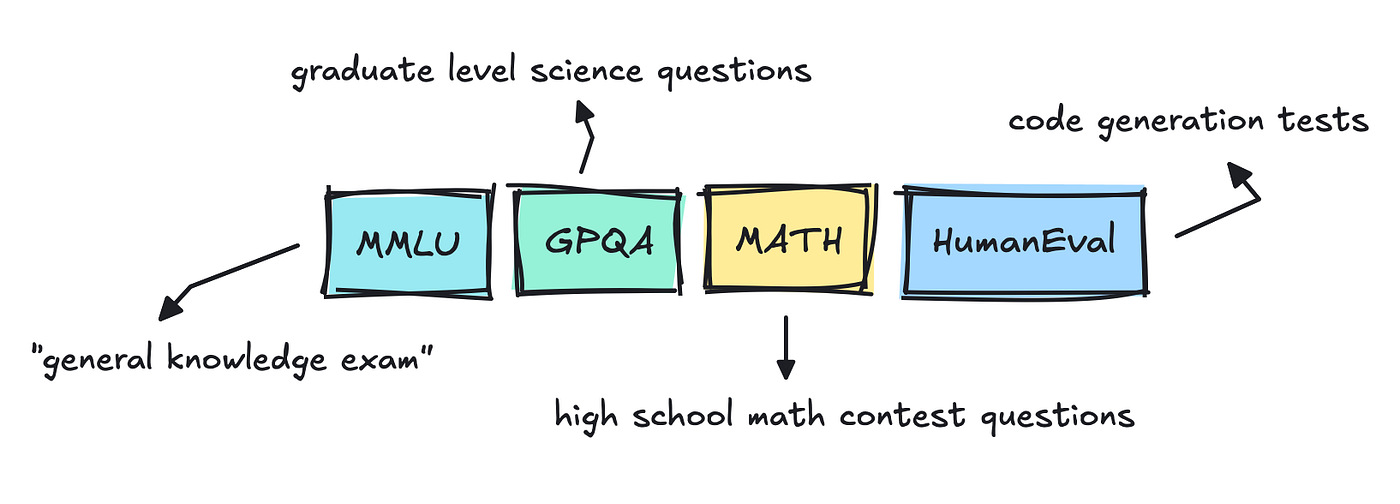

If you happen to’ve watched the information, you’ve in all probability seen that each time a brand new model of a big language mannequin will get launched, it follows just a few benchmarks: MMLU Professional, GPQA, or Massive-Bench.

These are generic evals for which the right time period is basically “benchmark” and never evals (which we’ll cowl later).

Though there’s quite a lot of different evaluations executed for every mannequin, together with for toxicity, hallucination, and bias, those that get many of the consideration are extra like exams or leaderboards.

Datasets like MMLU are multiple-choice and have been round for fairly a while. I’ve truly skimmed via it earlier than and seen how messy it’s.

Some questions and solutions are fairly ambiguous, which makes me assume that LLM suppliers will attempt to prepare their fashions on these datasets simply to ensure they get them proper.

This creates some concern in most people that the majority LLMs are simply overfitting after they do effectively on these benchmarks and why there’s a necessity for newer datasets and impartial evaluations.

LLM scorers

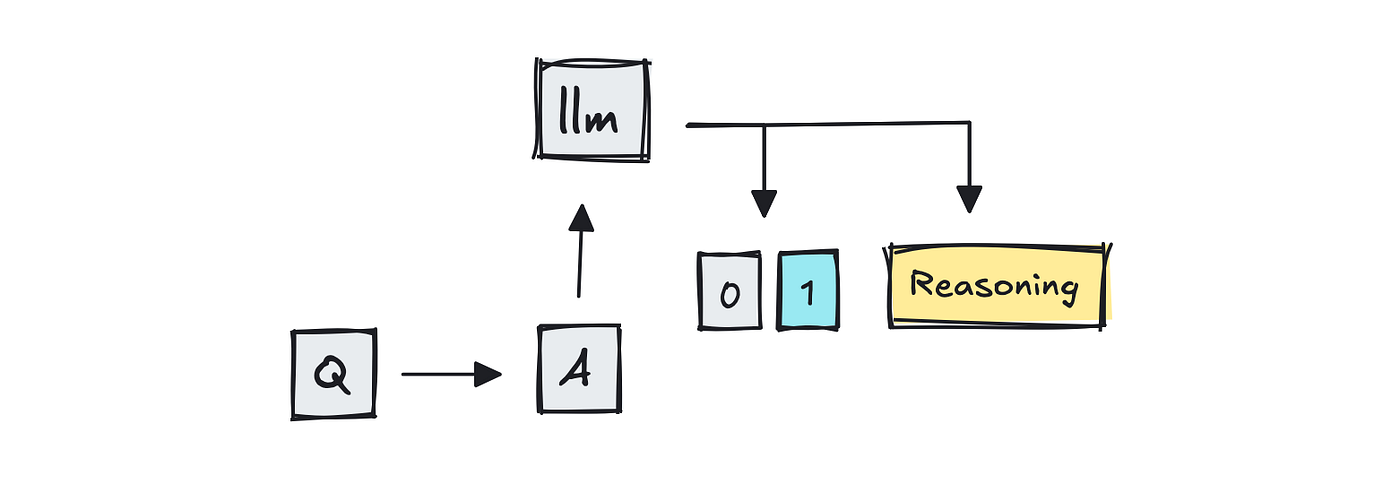

To run evaluations on these datasets, you possibly can normally use accuracy and unit exams. Nevertheless, what’s totally different now could be the addition of one thing known as LLM-as-a-judge.

To benchmark the fashions, groups will largely use conventional strategies.

So so long as it’s a number of alternative or there’s only one proper reply, there’s no want for anything however to check the reply to the reference for a precise match.

That is the case for datasets equivalent to MMLU and GPQA, which have a number of alternative solutions.

For the coding exams (HumanEval, SWE-Bench), the grader can merely run the mannequin’s patch or operate. If each take a look at passes, the issue counts as solved, and vice versa.

Nevertheless, as you possibly can think about, if the questions are ambiguous or open-ended, the solutions might fluctuate. This hole led to the rise of “LLM-as-a-judge,” the place a big language mannequin like GPT-4 scores the solutions.

MT-Bench is without doubt one of the benchmarks that makes use of LLMs as scorers, because it feeds GPT-4 two competing multi-turn solutions and asks which one is healthier.

Chatbot Enviornment, which use human raters, I believe now scales up by additionally incorporating using an LLM-as-a-judge.

For transparency, it’s also possible to use semantic rulers equivalent to BERTScore to check for semantic similarity. I’m glossing over what’s on the market to maintain it condensed.

So, groups should still use overlap metrics like BLEU or ROUGE for fast sanity checks, or depend on exact-match parsing when doable, however what’s new is to have one other giant language mannequin choose the output.

What we do with LLM apps

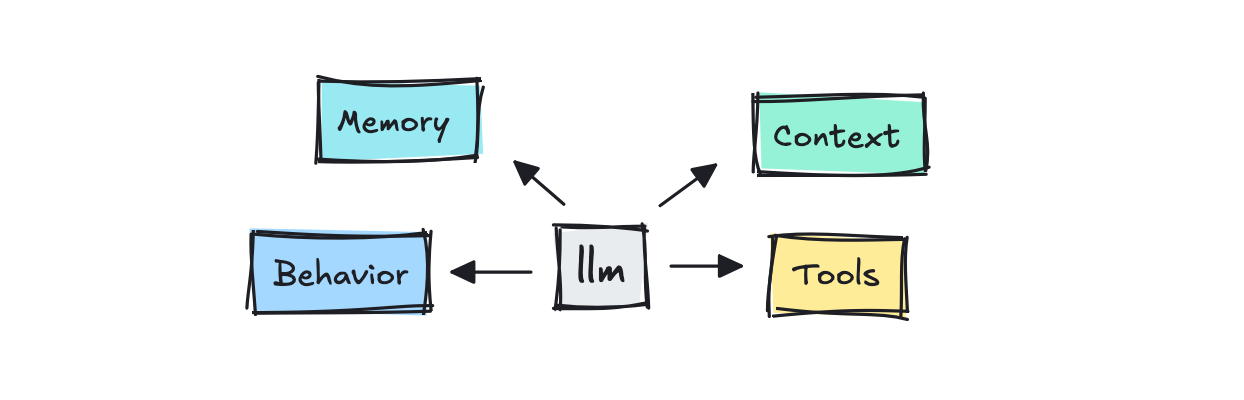

The first factor that modifications now could be that we’re not simply testing the LLM itself however all the system.

After we can, we nonetheless use programmatic strategies to judge, identical to earlier than.

For extra nuanced outputs, we will begin with one thing low cost and deterministic like BLEU or ROUGE to have a look at n-gram overlap, however most fashionable frameworks on the market will now use LLM scorers to judge.

There are three areas value speaking about: easy methods to consider multi-turn conversations, RAG, and brokers, by way of the way it’s executed and what sorts of metrics we will flip to.

We’ll discuss all of those metrics which have already been outlined briefly earlier than transferring on to the totally different frameworks that assist us out.

Multi-turn conversations

The primary a part of that is about constructing evals for multi-turn conversations, those we see in chatbots.

After we work together with a chatbot, we wish the dialog to really feel pure, skilled, and for it to recollect the best bits. We wish it to remain on subject all through the dialog and really reply the factor we requested.

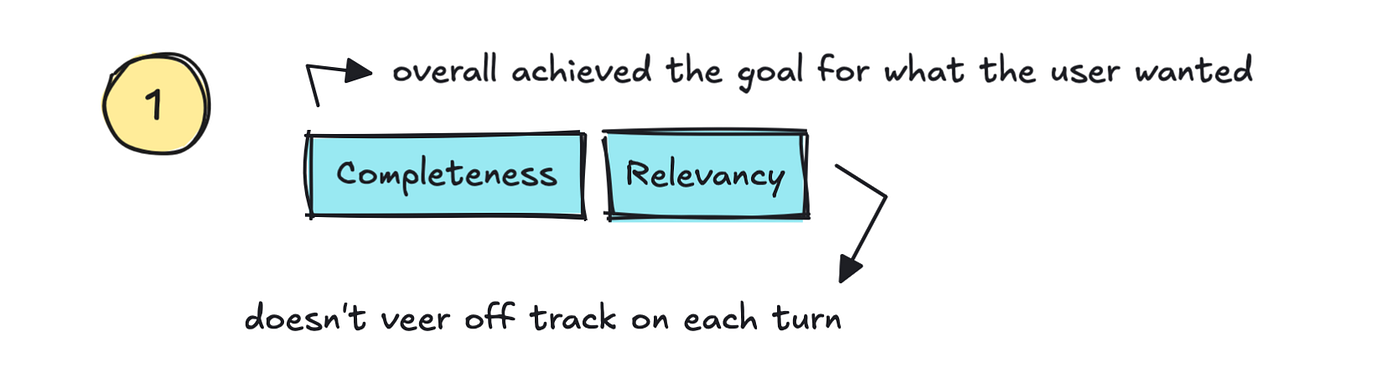

There are fairly just a few commonplace metrics which have already been outlined right here. The primary we will discuss are Relevancy/Coherence and Completeness.

Relevancy is a metric that ought to observe if the LLM appropriately addresses the person’s question and stays on subject, whereas Completeness is excessive if the ultimate final result truly addresses the person’s objective.

That’s, if we will observe satisfaction throughout all the dialog, we will additionally observe whether or not it actually does “scale back help prices” and enhance belief, together with offering excessive “self-service charges.”

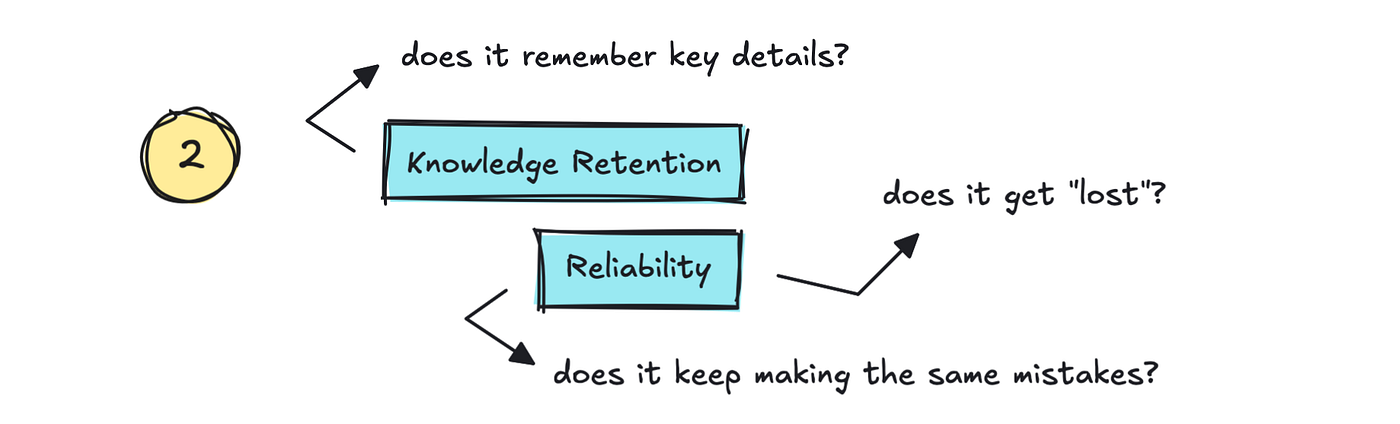

The second half is Information Retention and Reliability.

That’s: does it keep in mind key particulars from the dialog, and may we belief it to not get “misplaced”? It’s not simply sufficient that it remembers particulars. It additionally wants to have the ability to right itself.

That is one thing we see in vibe coding instruments. They overlook the errors they’ve made after which hold making them. We must be monitoring this as low Reliability or Stability.

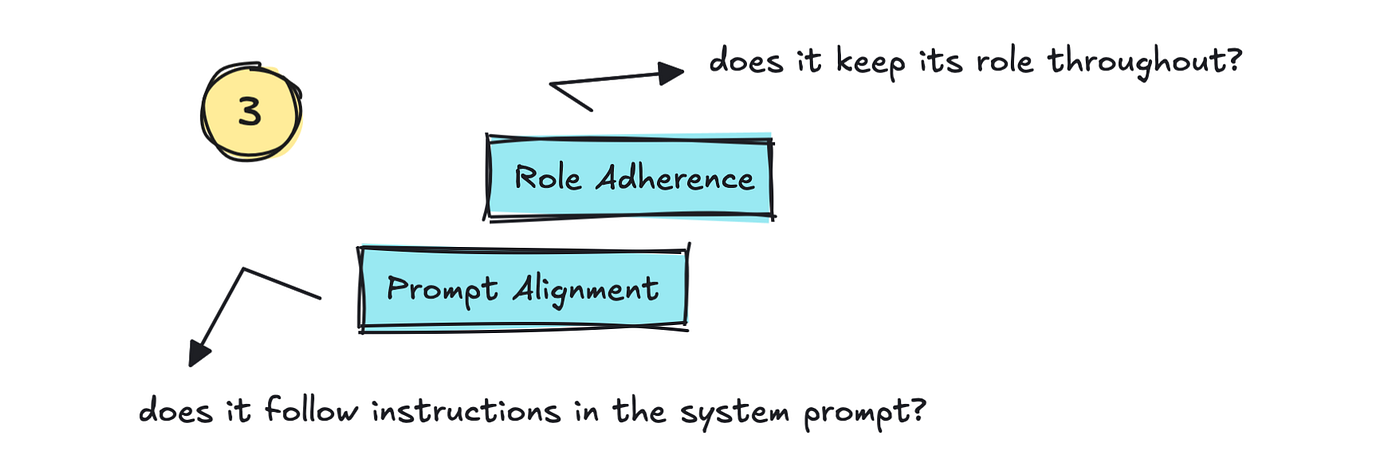

The third half we will observe is Position Adherence and Immediate Alignment. This tracks whether or not the LLM sticks to the position it’s been given and whether or not it follows the directions within the system immediate.

Subsequent are metrics round security, equivalent to Hallucination and Bias/Toxicity.

Hallucination is necessary to trace but in addition fairly troublesome. Folks might attempt to arrange net search to judge the output, or they break up the output into totally different claims which can be evaluated by a bigger mannequin (LLM-as-a-judge type).

There are additionally different strategies, equivalent to SelfCheckGPT, which checks the mannequin’s consistency by calling it a number of occasions on the identical immediate to see if it sticks to its authentic reply and what number of occasions it diverges.

For Bias/Toxicity, you need to use different NLP strategies, equivalent to a fine-tuned classifier.

Different metrics it’s possible you’ll need to observe might be customized to your utility, for instance, code correctness, safety vulnerabilities, JSON correctness, and so forth.

As for easy methods to do the evaluations, you don’t at all times have to make use of an LLM, though in most of those circumstances the usual options do.

In circumstances the place we will extract the proper reply, equivalent to parsing JSON, we naturally don’t want to make use of an LLM. As I mentioned earlier, many LLM suppliers additionally benchmark with unit exams for code-related metrics.

It goes with out saying that utilizing an LLM as a choose isn’t at all times tremendous dependable, identical to the purposes they measure, however I don’t have any numbers for you right here, so that you’ll should hunt for that by yourself.

Retrieval Augmented Era (RAG)

To proceed constructing on what we will observe for multi-turn conversations, we will flip to what we have to measure when utilizing Retrieval Augmented Era (RAG).

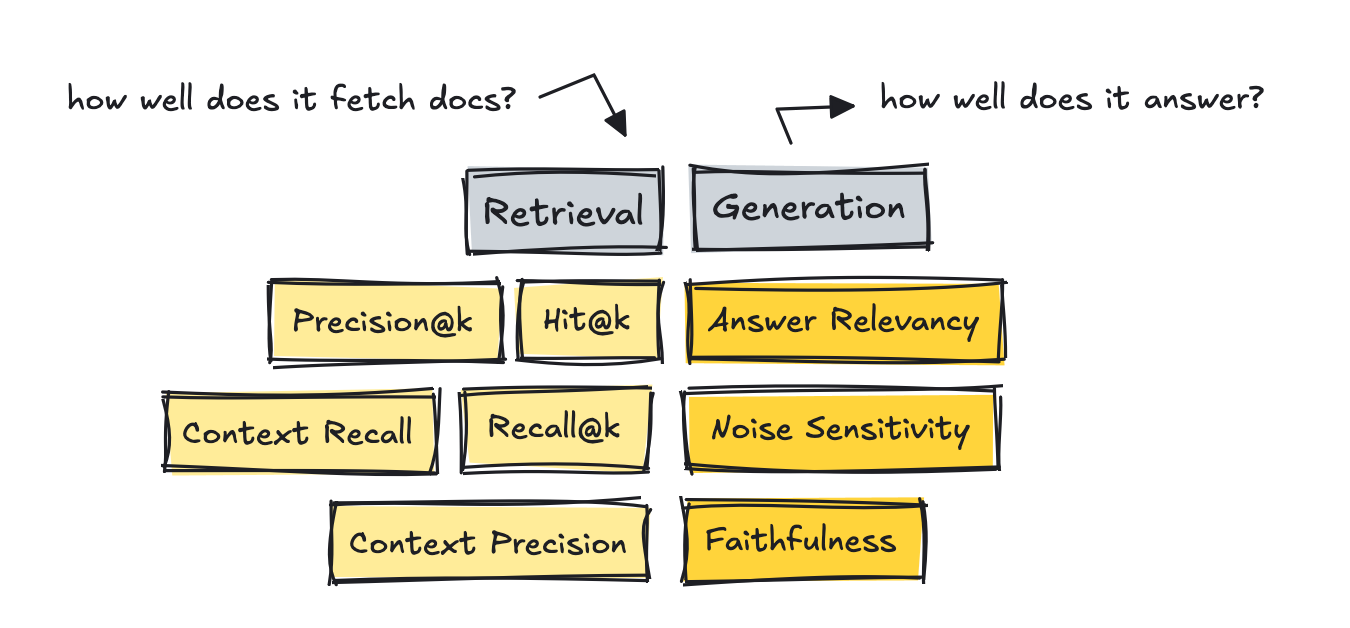

With RAG programs, we have to break up the method into two: measuring retrieval and technology metrics individually.

The primary half to measure is retrieval and whether or not the paperwork which can be fetched are the proper ones for the question.

If we get low scores on the retrieval aspect, we will tune the system by establishing higher chunking methods, altering the embedding mannequin, including strategies equivalent to hybrid search and re-ranking, filtering with metadata, and comparable approaches.

To measure retrieval, we will use older metrics that depend on a curated dataset, or we will use reference-free strategies that use an LLM as a choose.

I would like to say the basic IR metrics first as a result of they have been the primary on the scene. For these, we want “gold” solutions, the place we arrange a question after which rank every doc for that specific question.

Though you need to use an LLM to construct these datasets, we don’t use an LLM to measure, since we have already got scores within the dataset to check towards.

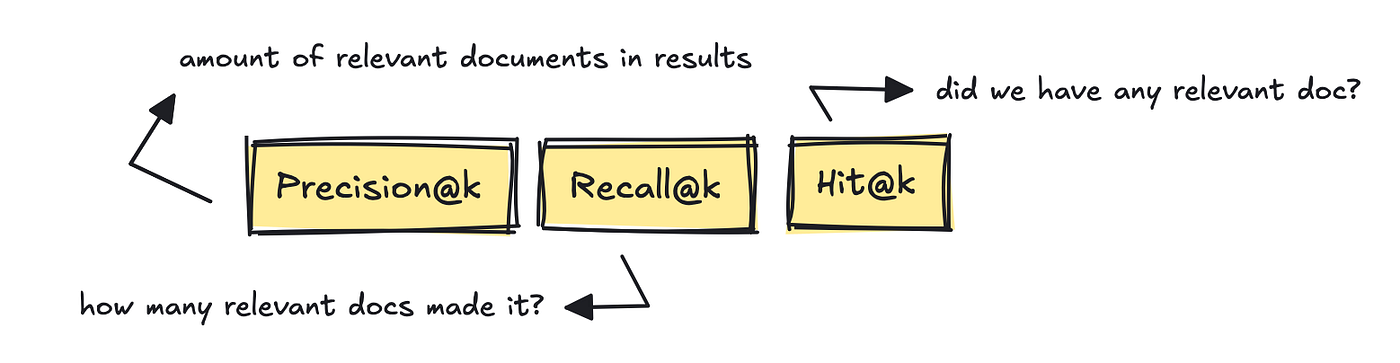

Probably the most well-known IR metrics are Precision@okay, Recall@okay, and Hit@okay.

These measure the quantity of related paperwork within the outcomes, what number of related paperwork have been retrieved primarily based on the gold reference solutions, and whether or not not less than one related doc made it into the outcomes.

The newer frameworks equivalent to RAGAS and DeepEval introduces reference-free, LLM-judge type metrics like Context Recall and Context Precision.

These depend how most of the really related chunks made it into the highest Okay checklist primarily based on the question, utilizing an LLM to guage.

That’s, primarily based on the question, did the system truly return any related paperwork primarily based on the reply, or are there too many irrelevant ones to reply the query correctly?

To construct datasets for evaluating retrieval, you possibly can mine questions from actual logs after which use a human to curate them.

You may as well use dataset turbines with the assistance of an LLM, which exist in most frameworks or as standalone instruments like YourBench.

If you happen to have been to arrange your personal dataset generator utilizing an LLM, you could possibly do one thing like beneath.

# Immediate to generate questions

qa_generate_prompt_tmpl = """

Context info is beneath.

---------------------

{context_str}

---------------------

Given the context info and no prior data

generate solely {num} questions and {num} solutions primarily based on the above context.

...

"""Nevertheless it must be a bit extra superior.

If we flip to the technology a part of the RAG system, we are actually measuring how effectively it solutions the query utilizing the supplied docs.

If this half isn’t performing effectively, we will regulate the immediate, tweak the mannequin settings (temperature, and so on.), substitute the mannequin completely, or fine-tune it for area experience. We will additionally drive it to “motive” utilizing CoT-style loops, examine for self-consistency, and so forth.

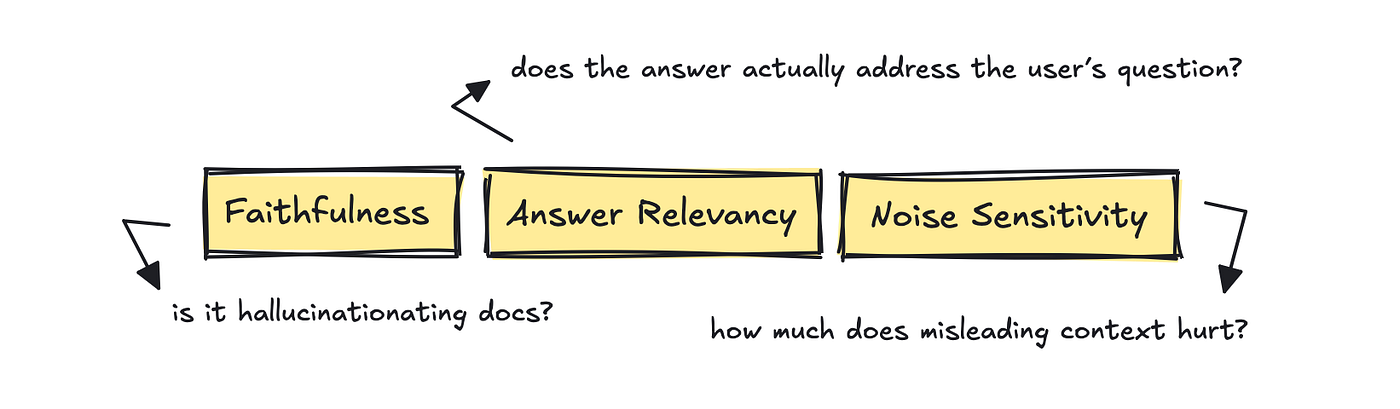

For this half, RAGAS is helpful with its metrics: Reply Relevancy, Faithfulness, and Noise Sensitivity.

These metrics ask whether or not the reply truly addresses the person’s query, whether or not each declare within the reply is supported by the retrieved docs, and whether or not a little bit of irrelevant context throws the mannequin off track.

If we have a look at RAGAS, what they possible do for the primary metric is ask the LLM to “Price from 0 to 1 how straight this reply addresses the query,” offering it with the query, reply, and retrieved context. This returns a uncooked 0–1 rating that can be utilized to compute averages.

So, to conclude we break up the system into two to judge, and though you need to use strategies that depend on the IR metrics it’s also possible to use reference free strategies that depend on an LLM to attain.

The very last thing we have to cowl is how brokers are increasing the set of metrics we now want to trace, past what we’ve already lined.

Brokers

With brokers, we’re not simply wanting on the output, the dialog, and the context.

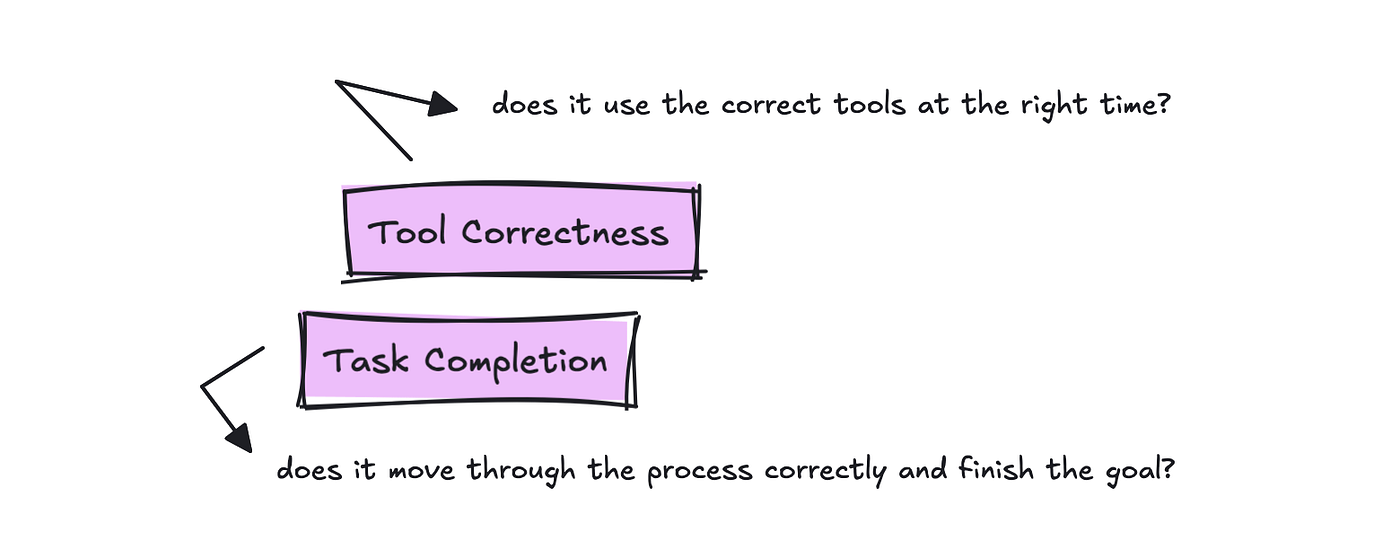

Now we’re additionally evaluating the way it “strikes”: whether or not it will possibly full a job or workflow, how successfully it does so, and whether or not it calls the best instruments on the proper time.

Frameworks will name these metrics in another way, however basically the highest two you need to observe are Process Completion and Software Correctness.

For monitoring device utilization, we need to know if the proper device was used for the person’s question.

We do want some sort of gold script with floor reality in-built to check every run, however you possibly can writer that after after which use it every time you make modifications.

For Process Completion, the analysis is to learn all the hint and the objective, and return a quantity between 0 and 1 with a rationale. This could measure how efficient the agent is at carrying out the duty.

For brokers, you’ll nonetheless want to check different issues we’ve already lined, relying in your utility

I simply have to notice: even when there are fairly just a few outlined metrics out there, your use case will differ, so it’s value registering what the frequent ones are however don’t assume they’re the perfect ones to trace.

Subsequent, let’s flip to get an outline of the favored frameworks on the market that may make it easier to out.

Eval frameworks

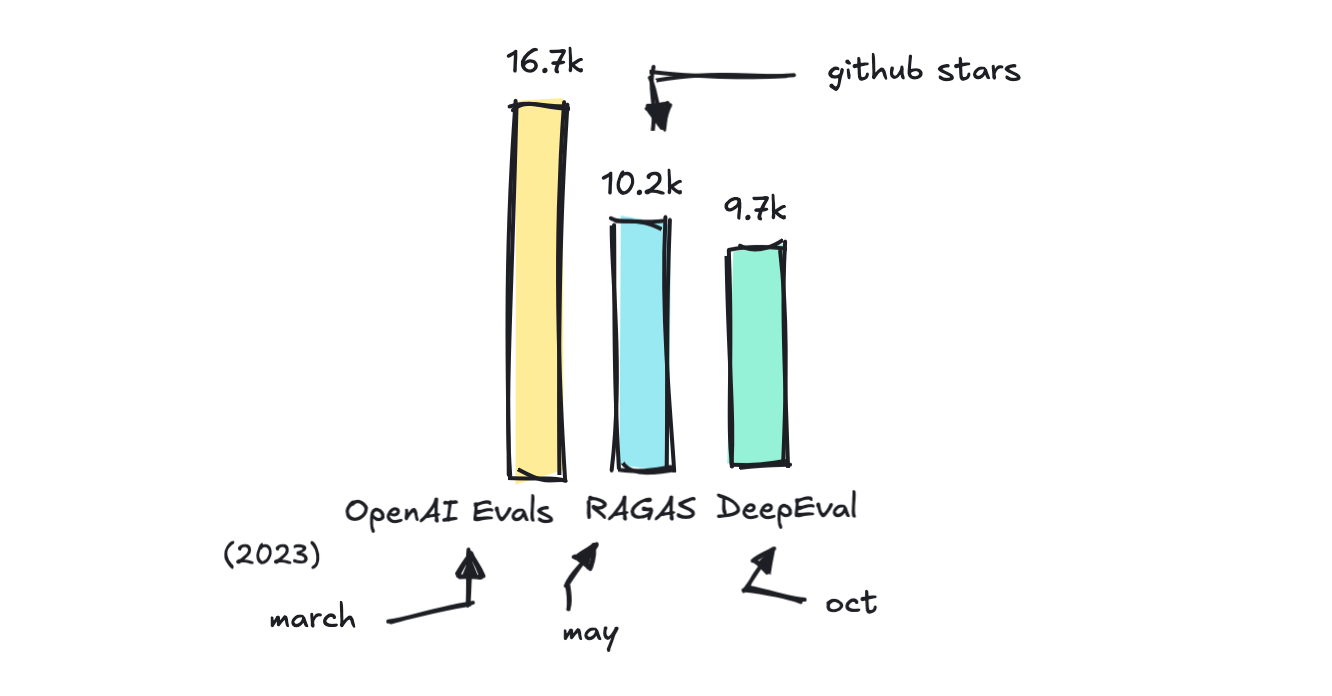

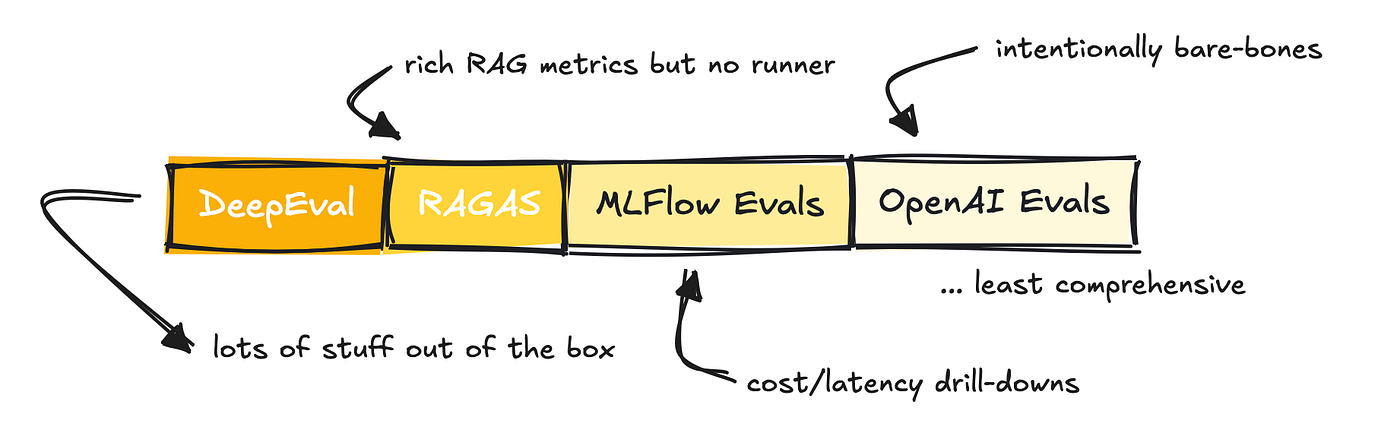

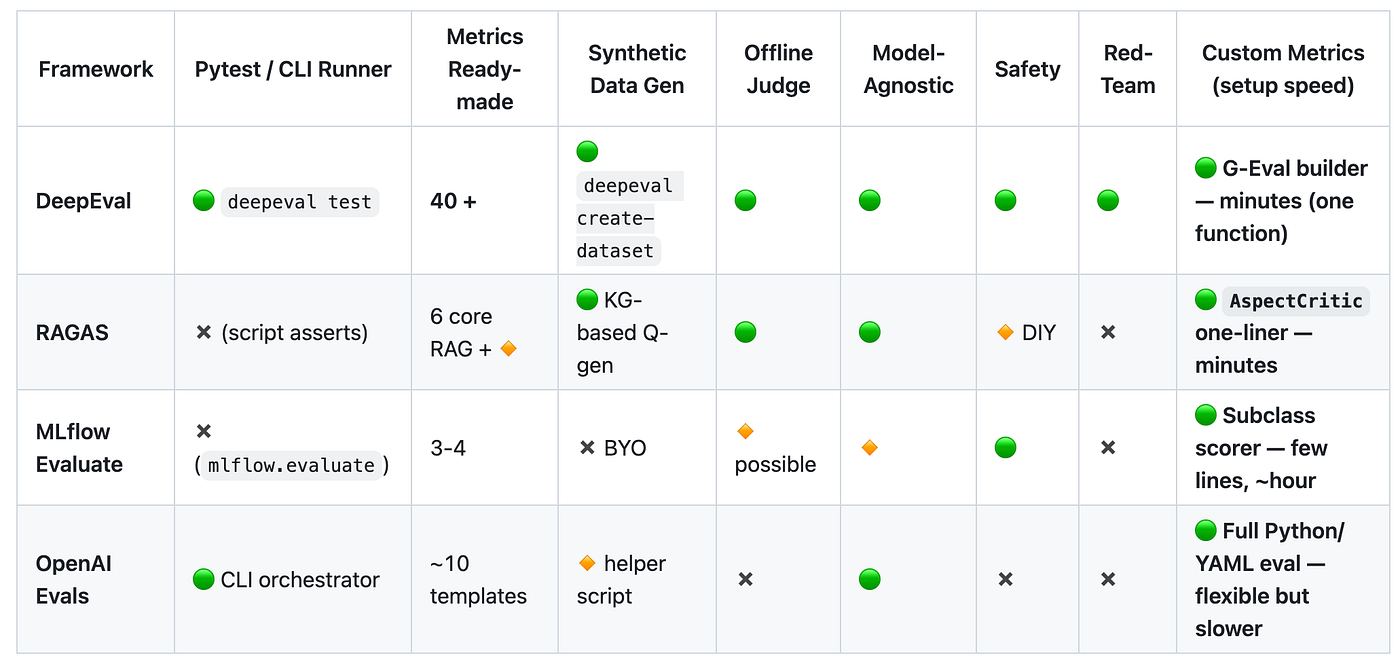

There are fairly just a few frameworks that make it easier to out with evals, however I need to discuss just a few widespread ones: RAGAS, DeepEval, OpenAI’s and MLFlow’s Evals, and break down what they’re good at and when to make use of what.

You’ll find the complete checklist of various eval frameworks I’ve present in this repository.

You may as well use fairly just a few framework-specific eval programs, equivalent to LlamaIndex, particularly for fast prototyping.

OpenAI and MLFlow’s Evals are add-ons quite than stand-alone frameworks, whereas RAGAS was primarily constructed as a metric library for evaluating RAG purposes (though they provide different metrics as effectively).

DeepEval is presumably essentially the most complete analysis library out of all of them.

Nevertheless, it’s necessary to say that all of them provide the power to run evals by yourself dataset, work for multi-turn, RAG, and brokers indirectly or one other, help LLM-as-a-judge, permit establishing customized metrics, and are CI-friendly.

They differ, as talked about, in how complete they’re.

MLFlow was primarily constructed to judge conventional ML pipelines, so the variety of metrics they provide is decrease for LLM-based apps. OpenAI is a really light-weight answer that expects you to arrange your personal metrics, though they supply an instance library that will help you get began.

RAGAS gives fairly just a few metrics and integrates with LangChain so you possibly can run them simply.

DeepEval presents so much out of the field, together with the RAGAS metrics.

You’ll be able to see the repository with the comparisons right here.

If we have a look at the metrics being provided, we will get a way of how in depth these options are.

It’s value noting that those providing metrics don’t at all times comply with a typical in naming. They might imply the identical factor however name it one thing totally different.

For instance, faithfulness in a single might imply the identical as groundedness in one other. Reply relevancy often is the similar as response relevance, and so forth.

This creates a whole lot of pointless confusion and complexity round evaluating programs normally.

However, DeepEval stands out with over 40 metrics out there and in addition presents a framework known as G-Eval, which helps you arrange customized metrics shortly making it the quickest approach from concept to a runnable metric.

OpenAI’s Evals framework is healthier suited while you need bespoke logic, not while you simply want a fast choose.

In line with the DeepEval workforce, customized metrics are what builders arrange essentially the most, so don’t get caught on who presents what metric. Your use case might be distinctive, and so will the way you consider it.

So, which do you have to use for what scenario?

Use RAGAS while you want specialised metrics for RAG pipelines with minimal setup. Choose DeepEval while you desire a full, out-of-the-box eval suite.

MLFlow is an efficient alternative in case you’re already invested in MLFlow or favor built-in monitoring and UI options. OpenAI’s Evals framework is essentially the most barebones, so it’s greatest in case you’re tied into OpenAI infrastructure and wish flexibility.

Lastly, DeepEval additionally gives pink teaming by way of their DeepTeam framework, which automates adversarial testing of LLM programs. There are different frameworks on the market that do that too, though maybe not as extensively.

I’ll should do one thing on adversarial testing of LLM programs and immediate injections sooner or later. It’s an fascinating subject.

The dataset enterprise is profitable enterprise which is why it’s nice that we’re now at this level the place we will use different LLMs to annotate knowledge, or rating exams.

Nevertheless, LLM judges aren’t magic and the evals you’ll arrange you’ll in all probability discover a bit flaky, simply as with every different LLM utility you construct. In line with the world extensive net, most groups and firms sample-audit with people each few weeks to remain actual.

The metrics you arrange to your app will possible be customized, so although I’ve now put you thru listening to about fairly many you’ll in all probability construct one thing by yourself.

It’s good to know what the usual ones are although.

Hopefully it proved academic anyhow.

If you happen to appreciated this one, make sure to learn a few of my different articles right here on TDS, or on Medium.

You’ll be able to comply with me right here, LinkedIn or my web site if you wish to get notified after I launch one thing new.

❤