This is the second in a short series on developing data dashboards using the latest Python-based GUI development tools: Streamlit, Gradio, and Taipy.

The source dataset for each dashboard will be the same, but stored in different formats. As much as possible, I'll also try to make the actual dashboard layouts for each tool resemble each other and have the same functionality.

In the first part of this series, I created a Streamlit version of the dashboard that retrieves its data from a local PostgreSQL database. You can view that article here.

This time, we're exploring the use of the Gradio library.

The data for this dashboard will be in a local CSV file, and Pandas will be our primary data processing engine.

If you want to see a quick demo of the app, I've deployed it to Hugging Face Spaces. You can run it using the link below, but note that the two input date picker pop-ups don't work because of a known bug in the Hugging Face environment. This is only the case for apps deployed on HF, and you can still change the dates manually. Running the app locally works fine and doesn't have this issue.

What’s Gradio?

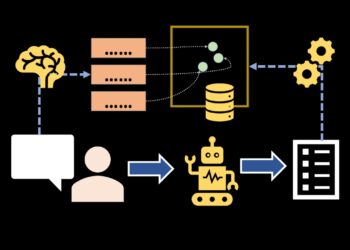

Gradio is an open-source Python package that simplifies the process of building demos or web applications for machine learning models, APIs, or any Python function. With it, you can create demos or web applications without needing JavaScript, CSS, or web hosting experience. By writing just a few lines of Python code, you can showcase your machine learning models to a broader audience.

Gradio simplifies the development process by providing an intuitive framework that eliminates the complexities associated with building user interfaces from scratch. Whether you're a machine learning developer, researcher, or enthusiast, Gradio allows you to create attractive, interactive demos that enhance the understanding and accessibility of your machine learning models.

This open-source Python package helps you bridge the gap between your machine learning expertise and a broader audience, making your models accessible and actionable.

What we’ll develop

We're developing a data dashboard. Our source data will be a single CSV file containing 100,000 synthetic sales records.

The actual source of the data isn't that important. It could just as easily be a text file, an Excel file, SQLite, or any database you can connect to.

This is what our final dashboard will look like.

There are four main sections.

- The top row enables the user to select specific start and end dates and/or product categories using date pickers and a drop-down list, respectively.

- The second row, Key metrics, shows a top-level summary of the selected data.

- The Visualisation section allows the user to select one of three graphs to display the input dataset.

- The raw data section is exactly what it claims to be. This tabular representation of the selected data effectively shows a snapshot of the underlying CSV data file.

Using the dashboard is easy. Initially, stats for the whole data set are displayed. The user can then narrow the data focus using the three filter fields at the top of the display. The graphs, key metrics, and raw data sections dynamically update to reflect the user's choices in the filter fields.

The underlying data

As mentioned, the dashboard's source data is contained in a single comma-separated values (CSV) file. The data consists of 100,000 synthetic sales-related records. Here are the first ten records of the file to give you an idea of what it looks like.

+----------+------------+-------------+----------------+------------+---------------+-------------+----------+-------+--------+
| order_id | order_date | customer_id | customer_name  | product_id | product_names | categories  | quantity | price | total  |
+----------+------------+-------------+----------------+------------+---------------+-------------+----------+-------+--------+
| 0        | 01/08/2022 | 245         | Customer_884   | 201        | Smartphone    | Electronics | 3        | 90.02 | 270.06 |
| 1        | 19/02/2022 | 701         | Customer_1672  | 205        | Printer       | Electronics | 6        | 12.74 | 76.44  |
| 2        | 01/01/2017 | 184         | Customer_21720 | 208        | Notebook      | Stationery  | 8        | 48.35 | 386.8  |
| 3        | 09/03/2013 | 275         | Customer_23770 | 200        | Laptop        | Electronics | 3        | 74.85 | 224.55 |
| 4        | 23/04/2022 | 960         | Customer_23790 | 210        | Cabinet       | Office      | 6        | 53.77 | 322.62 |
| 5        | 10/07/2019 | 197         | Customer_25587 | 202        | Desk          | Office      | 3        | 47.17 | 141.51 |
| 6        | 12/11/2014 | 510         | Customer_6912  | 204        | Monitor       | Electronics | 5        | 22.5  | 112.5  |
| 7        | 12/07/2016 | 150         | Customer_17761 | 200        | Laptop        | Electronics | 9        | 49.33 | 443.97 |
| 8        | 12/11/2016 | 997         | Customer_23801 | 209        | Coffee Maker  | Electronics | 7        | 47.22 | 330.54 |
| 9        | 23/01/2017 | 151         | Customer_30325 | 207        | Pen           | Stationery  | 6        | 3.5   | 21     |
+----------+------------+-------------+----------------+------------+---------------+-------------+----------+-------+--------+

And here is some Python code you can use to generate a similar dataset. Make sure that both the NumPy and Polars libraries are installed first.

# Generate the 100K-record CSV file
import polars as pl
import numpy as np
from datetime import datetime, timedelta


def generate(nrows: int, filename: str):
    # Product names; the position in this array is the product's index
    names = np.asarray(
        [
            "Laptop",
            "Smartphone",
            "Desk",
            "Chair",
            "Monitor",
            "Printer",
            "Paper",
            "Pen",
            "Notebook",
            "Coffee Maker",
            "Cabinet",
            "Plastic Cups",
        ]
    )
    # Category of each product, aligned by index with names
    categories = np.asarray(
        [
            "Electronics",
            "Electronics",
            "Office",
            "Office",
            "Electronics",
            "Electronics",
            "Stationery",
            "Stationery",
            "Stationery",
            "Electronics",
            "Office",
            "Sundry",
        ]
    )
    product_id = np.random.randint(len(names), size=nrows)
    quantity = np.random.randint(1, 11, size=nrows)
    price = np.random.randint(199, 10000, size=nrows) / 100

    # Generate random dates between 2010-01-01 and 2023-12-31
    start_date = datetime(2010, 1, 1)
    end_date = datetime(2023, 12, 31)
    date_range = (end_date - start_date).days

    # Create random dates as np.array and convert to string format
    order_dates = np.array([
        (start_date + timedelta(days=int(np.random.randint(0, date_range)))).strftime('%Y-%m-%d')
        for _ in range(nrows)
    ])

    # Define columns
    columns = {
        "order_id": np.arange(nrows),
        "order_date": order_dates,
        "customer_id": np.random.randint(100, 1000, size=nrows),
        "customer_name": [f"Customer_{i}" for i in np.random.randint(2**15, size=nrows)],
        "product_id": product_id + 200,
        "product_names": names[product_id],
        "categories": categories[product_id],
        "quantity": quantity,
        "price": price,
        "total": price * quantity,
    }

    # Create Polars DataFrame and write to CSV with an explicit delimiter
    df = pl.DataFrame(columns)
    df.write_csv(filename, separator=',', include_header=True)  # Ensure a comma is used as the delimiter


# Generate 100,000 rows of data with random order_date and save to CSV
generate(100_000, "/mnt/d/sales_data/sales_data.csv")

Installing and using Gradio

Installing Gradio is easy using pip, but for coding, the best practice is to set up a separate Python environment for all your work. I use Miniconda for that purpose, but feel free to use whatever method suits your work practice.

If you want to go down the conda route and don't already have it, you must install Miniconda (recommended) or Anaconda first.

Please note that, at the time of writing, Gradio needs at least Python 3.8 installed to work correctly.

Once the environment is created, switch to it using the 'activate' command, and then run 'pip install' to install our required Python libraries.

# Create our test environment
(base) C:\Users\thoma>conda create -n gradio_dashboard python=3.12 -y

# Now activate it
(base) C:\Users\thoma>conda activate gradio_dashboard

# Install Python libraries, etc.
(gradio_dashboard) C:\Users\thoma>pip install gradio pandas matplotlib cachetools

Key differences between Streamlit and Gradio

As I'll demonstrate in this article, it's possible to produce very similar data dashboards using Streamlit and Gradio. However, their ethos differs in several key ways.

Focus

- Gradio specialises in creating interfaces for machine learning models, whilst Streamlit is designed more for general-purpose data applications and visualisations.

Ease of use

- Gradio is known for its simplicity and rapid prototyping capabilities, making it easier for beginners to use. Streamlit offers more advanced features and customisation options, which may come with a steeper learning curve.

Interactivity

- Streamlit uses a reactive programming model where any input change triggers a complete script rerun, updating all components immediately. Gradio, by default, updates only when a user clicks a submit button, though it can be configured for live updates.

Customization

- Gradio focuses on pre-built components for quickly demonstrating AI models. Streamlit provides more extensive customisation options and flexibility for complex projects.

Deployment

- Having deployed both a Streamlit and a Gradio app, I'd say it's easier to deploy a Streamlit app than a Gradio app. In Streamlit, deployment can be done with a single click via the Streamlit Community Cloud. This functionality is built into any Streamlit app you create. Gradio offers deployment using Hugging Face Spaces, but it involves more work. Neither method is particularly complex, though.

Use cases

- Streamlit excels in creating data-centric applications and interactive dashboards for complex projects. Gradio is ideal for quickly showcasing machine learning models and building simpler applications.

The Gradio Dashboard Code

I'll break down the code into sections and explain each one as we proceed.

We begin by importing the required external libraries and loading the full dataset from the CSV file into a Pandas DataFrame.

import gradio as gr

import pandas as pd

import matplotlib.pyplot as plt

import datetime

import warnings

import os

import tempfile

from cachetools import cached, TTLCache

warnings.filterwarnings("ignore", class=FutureWarning, module="seaborn")

# ------------------------------------------------------------------

# 1) Load CSV information as soon as

# ------------------------------------------------------------------

csv_data = None

def load_csv_data():

world csv_data

# Non-obligatory: specify column dtypes if identified; regulate as vital

dtype_dict = {

"order_id": "Int64",

"customer_id": "Int64",

"product_id": "Int64",

"amount": "Int64",

"value": "float",

"complete": "float",

"customer_name": "string",

"product_names": "string",

"classes": "string"

}

csv_data = pd.read_csv(

"d:/sales_data/sales_data.csv",

parse_dates=["order_date"],

dayfirst=True, # in case your dates are DD/MM/YYYY format

low_memory=False,

dtype=dtype_dict

)

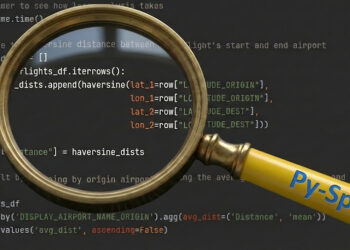

load_csv_data()Subsequent, we configure a time-to-live cache with a most of 128 objects and an expiration of 300 seconds. That is used to retailer the outcomes of pricy perform calls and velocity up repeated lookups
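To make the time-to-live idea concrete, here is a minimal, standard-library-only sketch of what such a cache does. The `ttl_cached` decorator and the `slow_lookup` function are hypothetical names invented for this illustration; the dashboard itself relies on the `TTLCache` class from cachetools, whose internals and eviction policy differ from this simplified version.

```python
import time
from functools import wraps


def ttl_cached(ttl_seconds: float, maxsize: int = 128):
    """Hypothetical decorator that imitates a time-to-live cache."""
    def decorator(fn):
        store = {}  # maps call arguments -> (expiry_time, result)

        @wraps(fn)
        def wrapper(*args):
            now = time.monotonic()
            hit = store.get(args)
            if hit is not None and hit[0] > now:
                return hit[1]  # entry is still fresh: skip the real call
            result = fn(*args)
            if len(store) >= maxsize:
                store.pop(next(iter(store)))  # crude eviction: drop the oldest insert
            store[args] = (now + ttl_seconds, result)
            return result
        return wrapper
    return decorator


calls = []  # records every real execution of slow_lookup


@ttl_cached(ttl_seconds=0.2)
def slow_lookup(key):
    calls.append(key)
    return key.upper()


slow_lookup("office")  # computed
slow_lookup("office")  # served from the cache
time.sleep(0.3)        # wait until the entry has expired
slow_lookup("office")  # computed again
```

After the three calls, `calls` holds two entries: the second call never reached the function body because the cached value had not yet expired.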

The get_unique_categories function returns a list of unique, cleaned (capitalised) categories from the `csv_data` DataFrame, caching the result for quicker access.

The get_date_range function returns the minimum and maximum order dates from the dataset, or None if the data is unavailable.

The filter_data function filters the csv_data DataFrame based on a specified date range and optional category, returning the filtered DataFrame.
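As a rough sketch of that filtering idea, the snippet below applies a date-range mask and an optional category mask to a tiny, made-up DataFrame. The `sales` frame and the `filter_sales` helper are assumptions for illustration only, not the dashboard's own data or function.

```python
import pandas as pd

# Toy stand-in for csv_data; the values are invented for illustration.
sales = pd.DataFrame({
    "order_date": pd.to_datetime(["2022-01-05", "2022-03-10", "2022-06-20", "2023-01-15"]),
    "categories": ["Electronics", "Office", "Electronics", "Stationery"],
    "price": [10.0, 20.0, 30.0, 5.0],
    "quantity": [2, 1, 3, 4],
})


def filter_sales(df, start, end, category="All Categories"):
    """Keep rows dated within [start, end], optionally limited to one category."""
    mask = df["order_date"].between(pd.to_datetime(start), pd.to_datetime(end))
    if category != "All Categories":
        mask &= df["categories"].str.capitalize() == category
    return df.loc[mask].copy()


in_2022 = filter_sales(sales, "2022-01-01", "2022-12-31")
electronics_2022 = filter_sales(sales, "2022-01-01", "2022-12-31", "Electronics")
```

Returning a copy means later steps can add columns such as revenue without touching the shared source frame.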

The get_dashboard_stats function retrieves summary metrics (total revenue, total orders, average order value, and top category) for the given filters. Internally, it uses filter_data() to scope the dataset and then calculates these key statistics.
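The arithmetic behind those four metrics can be sketched on a small, invented set of orders. The `orders` frame is purely illustrative, and this version picks the top category with `idxmax()` rather than the sort-and-take-first approach used in the dashboard code; both should give the same answer when there is a single clear leader.

```python
import pandas as pd

# Made-up orders used only to illustrate the calculations.
orders = pd.DataFrame({
    "order_id": [0, 1, 2, 3],
    "categories": ["Electronics", "Office", "Electronics", "Stationery"],
    "price": [10.0, 20.0, 30.0, 5.0],
    "quantity": [2, 1, 3, 4],
})

# Revenue per line is unit price multiplied by quantity.
orders["revenue"] = orders["price"] * orders["quantity"]

total_revenue = orders["revenue"].sum()
total_orders = orders["order_id"].nunique()
# Guard against division by zero when the filter returns no orders.
avg_order_value = total_revenue / total_orders if total_orders else 0
# Category whose summed revenue is largest.
top_category = orders.groupby("categories")["revenue"].sum().idxmax()
```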

The get_data_for_table function returns a detailed DataFrame of filtered sales data, sorted by order_id and order_date, including an additional revenue column for each sale.

The get_plot_data function formats data for generating a plot by summing revenue over time, grouped by date.

The get_revenue_by_category function aggregates and returns revenue by category, sorted by revenue, within the specified date range and category.

The get_top_products function returns the top 10 products by revenue, filtered by date range and category.
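The aggregation functions above all follow the same group, sum, and sort pattern. Here is a small sketch of the top-products case on invented data; the `lines` frame and the `top_n` value of 2 are assumptions chosen to keep the example short (the dashboard keeps the top 10).

```python
import pandas as pd

# Invented order lines for illustration.
lines = pd.DataFrame({
    "product_names": ["Laptop", "Pen", "Laptop", "Desk", "Pen", "Monitor"],
    "price": [50.0, 2.0, 50.0, 40.0, 2.0, 30.0],
    "quantity": [1, 10, 2, 1, 5, 1],
})
lines["revenue"] = lines["price"] * lines["quantity"]

top_n = 2  # the dashboard uses 10
top_products = (
    lines.groupby("product_names", as_index=False)["revenue"].sum()  # revenue per product
    .sort_values(by="revenue", ascending=False)                      # best sellers first
    .head(top_n)                                                     # keep only the leaders
)
```

Swapping `product_names` for `categories` and dropping the `head()` call gives the revenue-by-category variant.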

Based on the orientation argument, the create_matplotlib_figure function generates a vertical or horizontal bar plot from the data and saves it as an image file.
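As a simplified illustration of the plot-to-file approach, the sketch below draws a bar chart and writes it to a temporary PNG. It is not the dashboard's function: `save_bar_chart` is a hypothetical helper, it uses Matplotlib's object-oriented API with the headless Agg backend, and it creates the file with `tempfile.mkstemp` instead of `NamedTemporaryFile`.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # headless backend, so no display is required
import matplotlib.pyplot as plt


def save_bar_chart(labels, values, title):
    """Draw a vertical bar chart and return the path of the saved PNG."""
    fig, ax = plt.subplots(figsize=(6, 4))
    ax.bar(labels, values)
    ax.set_title(title)
    fig.tight_layout()
    # mkstemp returns an open file descriptor; close it before Matplotlib writes.
    fd, path = tempfile.mkstemp(suffix=".png")
    os.close(fd)
    fig.savefig(path)
    plt.close(fig)  # release the figure so repeated calls do not accumulate memory
    return path


png_path = save_bar_chart(["Electronics", "Office"], [110.0, 20.0], "Revenue by Category")
```

Returning a file path suits an image component that displays files from disk; closing each figure matters in a dashboard because every filter change produces new plots.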

cache = TTLCache(maxsize=128, ttl=300)


@cached(cache)
def get_unique_categories():
    global csv_data
    if csv_data is None:
        return []
    cats = sorted(csv_data['categories'].dropna().unique().tolist())
    cats = [cat.capitalize() for cat in cats]
    return cats


def get_date_range():
    global csv_data
    if csv_data is None or csv_data.empty:
        return None, None
    return csv_data['order_date'].min(), csv_data['order_date'].max()


def filter_data(start_date, end_date, category):
    global csv_data
    # Accept dates passed as 'YYYY-MM-DD' strings as well as date objects
    if isinstance(start_date, str):
        start_date = datetime.datetime.strptime(start_date, '%Y-%m-%d').date()
    if isinstance(end_date, str):
        end_date = datetime.datetime.strptime(end_date, '%Y-%m-%d').date()

    df = csv_data.loc[
        (csv_data['order_date'] >= pd.to_datetime(start_date)) &
        (csv_data['order_date'] <= pd.to_datetime(end_date))
    ].copy()

    if category != "All Categories":
        df = df.loc[df['categories'].str.capitalize() == category].copy()
    return df

def get_dashboard_stats(start_date, end_date, category):
    df = filter_data(start_date, end_date, category)
    if df.empty:
        return (0, 0, 0, "N/A")

    df['revenue'] = df['price'] * df['quantity']
    total_revenue = df['revenue'].sum()
    total_orders = df['order_id'].nunique()
    avg_order_value = total_revenue / total_orders if total_orders else 0

    cat_revenues = df.groupby('categories')['revenue'].sum().sort_values(ascending=False)
    top_category = cat_revenues.index[0] if not cat_revenues.empty else "N/A"
    return (total_revenue, total_orders, avg_order_value, top_category.capitalize())


def get_data_for_table(start_date, end_date, category):
    df = filter_data(start_date, end_date, category)
    if df.empty:
        return pd.DataFrame()

    df = df.sort_values(by=["order_id", "order_date"], ascending=[True, False]).copy()
    columns_order = [
        "order_id", "order_date", "customer_id", "customer_name",
        "product_id", "product_names", "categories", "quantity",
        "price", "total"
    ]
    # Keep only the expected columns that are actually present
    columns_order = [col for col in columns_order if col in df.columns]
    df = df[columns_order].copy()
    df['revenue'] = df['price'] * df['quantity']
    return df


def get_plot_data(start_date, end_date, category):
    df = filter_data(start_date, end_date, category)
    if df.empty:
        return pd.DataFrame()

    df['revenue'] = df['price'] * df['quantity']
    plot_data = df.groupby(df['order_date'].dt.date)['revenue'].sum().reset_index()
    plot_data.rename(columns={'order_date': 'date'}, inplace=True)
    return plot_data


def get_revenue_by_category(start_date, end_date, category):
    df = filter_data(start_date, end_date, category)
    if df.empty:
        return pd.DataFrame()

    df['revenue'] = df['price'] * df['quantity']
    cat_data = df.groupby('categories')['revenue'].sum().reset_index()
    cat_data = cat_data.sort_values(by='revenue', ascending=False)
    return cat_data


def get_top_products(start_date, end_date, category):
    df = filter_data(start_date, end_date, category)
    if df.empty:
        return pd.DataFrame()

    df['revenue'] = df['price'] * df['quantity']
    prod_data = df.groupby('product_names')['revenue'].sum().reset_index()
    prod_data = prod_data.sort_values(by='revenue', ascending=False).head(10)
    return prod_data

def create_matplotlib_figure(data, x_col, y_col, title, xlabel, ylabel, orientation='v'):
    plt.figure(figsize=(10, 6))
    if data.empty:
        plt.text(0.5, 0.5, 'No data available', ha='center', va='center')
    else:
        if orientation == 'v':
            plt.bar(data[x_col], data[y_col])
            plt.xticks(rotation=45, ha='right')
        else:
            plt.barh(data[x_col], data[y_col])
            plt.gca().invert_yaxis()  # largest bar at the top

    plt.title(title)
    plt.xlabel(xlabel)
    plt.ylabel(ylabel)
    plt.tight_layout()

    # Save the figure to a temporary PNG and return its path
    with tempfile.NamedTemporaryFile(delete=False, suffix=".png") as tmpfile:
        plt.savefig(tmpfile.name)
    plt.close()
    return tmpfile.name

The update_dashboard function retrieves key sales statistics (total revenue, total orders, average order value, and top category) by calling the get_dashboard_stats function. It gathers data for three distinct visualisations (revenue over time, revenue by category, and top products), then uses create_matplotlib_figure to generate the plots. It prepares and returns a data table (via the get_data_for_table() function) along with all generated plots and stats so they can be displayed in the dashboard.

The create_dashboard function sets the date boundaries (minimum and maximum dates) and establishes the initial default filter values. It uses Gradio to construct a user interface (UI) featuring date pickers, a category drop-down, key metric displays, plot tabs, and a data table. It then wires up the filters so that changing any of them triggers a call to the update_dashboard function, ensuring the dashboard visuals and metrics are always in sync with the selected filters. Finally, it returns the assembled Gradio interface, which is then launched as a web application.

def update_dashboard(start_date, end_date, category):
    total_revenue, total_orders, avg_order_value, top_category = get_dashboard_stats(start_date, end_date, category)

    # Generate plots
    revenue_data = get_plot_data(start_date, end_date, category)
    category_data = get_revenue_by_category(start_date, end_date, category)
    top_products_data = get_top_products(start_date, end_date, category)

    revenue_over_time_path = create_matplotlib_figure(
        revenue_data, 'date', 'revenue',
        "Revenue Over Time", "Date", "Revenue"
    )
    revenue_by_category_path = create_matplotlib_figure(
        category_data, 'categories', 'revenue',
        "Revenue by Category", "Category", "Revenue"
    )
    top_products_path = create_matplotlib_figure(
        top_products_data, 'product_names', 'revenue',
        "Top Products", "Revenue", "Product Name", orientation='h'
    )

    # Data table
    table_data = get_data_for_table(start_date, end_date, category)

    return (
        revenue_over_time_path,
        revenue_by_category_path,
        top_products_path,
        table_data,
        total_revenue,
        total_orders,
        avg_order_value,
        top_category
    )

def create_dashboard():
    min_date, max_date = get_date_range()
    if min_date is None or max_date is None:
        min_date = datetime.datetime.now()
        max_date = datetime.datetime.now()

    default_start_date = min_date
    default_end_date = max_date

    with gr.Blocks(css="""
        footer {display: none !important;}
        .tabs {border: none !important;}
        .gr-plot {border: none !important; box-shadow: none !important;}
    """) as dashboard:

        gr.Markdown("# Sales Performance Dashboard")

        # Filters row
        with gr.Row():
            start_date = gr.DateTime(
                label="Start Date",
                value=default_start_date.strftime('%Y-%m-%d'),
                include_time=False,
                type="datetime"
            )
            end_date = gr.DateTime(
                label="End Date",
                value=default_end_date.strftime('%Y-%m-%d'),
                include_time=False,
                type="datetime"
            )
            category_filter = gr.Dropdown(
                choices=["All Categories"] + get_unique_categories(),
                label="Category",
                value="All Categories"
            )

        gr.Markdown("# Key Metrics")

        # Stats row
        with gr.Row():
            total_revenue = gr.Number(label="Total Revenue", value=0)
            total_orders = gr.Number(label="Total Orders", value=0)
            avg_order_value = gr.Number(label="Average Order Value", value=0)
            top_category = gr.Textbox(label="Top Category", value="N/A")

        gr.Markdown("# Visualisations")

        # Tabs for plots
        with gr.Tabs():
            with gr.Tab("Revenue Over Time"):
                revenue_over_time_image = gr.Image(label="Revenue Over Time", container=False)
            with gr.Tab("Revenue by Category"):
                revenue_by_category_image = gr.Image(label="Revenue by Category", container=False)
            with gr.Tab("Top Products"):
                top_products_image = gr.Image(label="Top Products", container=False)

        gr.Markdown("# Raw Data")

        # Data table (below the plots)
        data_table = gr.DataFrame(
            label="Sales Data",
            type="pandas",
            interactive=False
        )

        # When filters change, update everything
        for f in [start_date, end_date, category_filter]:
            f.change(
                fn=lambda s, e, c: update_dashboard(s, e, c),
                inputs=[start_date, end_date, category_filter],
                outputs=[
                    revenue_over_time_image,
                    revenue_by_category_image,
                    top_products_image,
                    data_table,
                    total_revenue,
                    total_orders,
                    avg_order_value,
                    top_category
                ]
            )

        # Initial load
        dashboard.load(
            fn=lambda: update_dashboard(default_start_date, default_end_date, "All Categories"),
            outputs=[
                revenue_over_time_image,
                revenue_by_category_image,
                top_products_image,
                data_table,
                total_revenue,
                total_orders,
                avg_order_value,
                top_category
            ]
        )

    return dashboard


if __name__ == "__main__":
    dashboard = create_dashboard()
    dashboard.launch(share=False)

Running the program

Create a Python file, e.g. gradio_test.py, and insert all of the above code snippets. Save it, and run it like this:

(gradio_dashboard) $ python gradio_test.py

* Running on local URL: http://127.0.0.1:7860

To create a public link, set `share=True` in `launch()`.

Click on the local URL shown, and the dashboard will open full screen in your browser.

Summary

This article provides a comprehensive guide to building an interactive sales performance dashboard using Gradio and a CSV file as its source data.

Gradio is a modern, Python-based open-source framework that simplifies the creation of data-driven dashboards and GUI applications. The dashboard I developed allows users to filter data by date ranges and product categories, view key metrics such as total revenue and top-performing categories, explore visualisations like revenue trends and top products, and navigate through raw data with pagination.

I also mentioned some key differences between developing visualisation tools using Gradio and Streamlit, another popular front-end Python library.

This guide provides a comprehensive implementation of a Gradio data dashboard, covering the entire process from creating sample data to developing Python functions for querying data, generating plots, and handling user input. This step-by-step approach demonstrates how to leverage Gradio's capabilities to create user-friendly and dynamic dashboards, making it ideal for data engineers and scientists who want to build interactive data applications.

Although I used a CSV file for my data, modifying the code to use another data source, such as a relational database management system (RDBMS) like SQLite, should be straightforward. For example, in my other article in this series on creating a similar dashboard using Streamlit, the data source is a PostgreSQL database.
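As a rough sketch of what that swap might look like for SQLite, the snippet below loads a table into a Pandas DataFrame with `pd.read_sql_query`. The in-memory database, the `sales` table name, and the two sample rows are all assumptions made for illustration; in a real setup you would connect to your own database file and adjust the query.

```python
import sqlite3

import pandas as pd

# In-memory database standing in for a real SQLite file; table and rows are made up.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales ("
    "order_id INTEGER, order_date TEXT, categories TEXT, price REAL, quantity INTEGER)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?, ?, ?)",
    [
        (0, "2022-08-01", "Electronics", 90.02, 3),
        (1, "2022-02-19", "Electronics", 12.74, 6),
    ],
)
conn.commit()

# Replaces the pd.read_csv call: the result is an ordinary DataFrame,
# so the filtering and aggregation functions could stay unchanged.
csv_data = pd.read_sql_query("SELECT * FROM sales", conn, parse_dates=["order_date"])
conn.close()
```

Because only the loading step changes, the rest of the dashboard code should not need to know where the data came from.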