, I current my newest open-source undertaking — Authorities Funding Graph.

The inspiration for this undertaking got here from a want to make higher tooling for grant writing, specifically to counsel analysis matters, funding our bodies, analysis establishments, and researchers. I’ve made Innovate UK grant functions prior to now, so I’ve had an curiosity within the authorities funding panorama for a while.

Concretely, loads of the latest political discourse focuses on authorities spending, specifically Elon Musk’s Division of Authorities Effectivity (DOGE) in america and comparable sentiments echoed right here within the UK, as Kier Starmer seems to be to combine AI into authorities.

Maybe the discharge of this undertaking is kind of well timed. Albeit not the unique intention, I hope as a secondary final result of this text is that it evokes extra exploration into open supply datasets for public spending.

I’ve used Networkx & PyVis to visualise the graph of UKRI API knowledge. Then, I element a LlamaIndex graph RAG implementation. For completeness, I’ve additionally included my preliminary LangChain-based answer. The online framework is Streamlit, the demo is hosted on Streamlit group cloud.

This text incorporates the next sections.

- Definitions

- UKRI API

- Assemble NetworkX Graph

- Filter a NetworkX Graph

- Graph Visualisation Utilizing PyVis

- Graph RAG Utilizing LlamaIndex

- Linting With Pylint

- Streamlit Neighborhood Cloud Demo App (on the very finish of the article)

1. Definitions

What’s UKRI?

UK Analysis and Innovation is a non-departmental public physique sponsored by the Division for Science, Innovation and Expertise (DSIT) that allocates funding for analysis and growth. Typically, funding is awarded to analysis establishments and companies.

“We make investments £8 billion of taxpayers’ cash every year into analysis and innovation and the individuals who make it occur. We work throughout an enormous vary of fields — from biodiversity conservation to quantum computing, and from area telescopes to progressive well being care. We give everybody the chance to contribute and to profit, bringing collectively folks and organisations nationally and globally to create, develop and deploy new concepts and applied sciences.” — UKRI Web site

What’s a Graph?

A graph is a handy knowledge construction displaying the relationships between completely different entities (nodes) and their relationships to one another (edges). In some cases, we additionally affiliate these relationships with a numerical worth.

“In laptop science, a graph is an summary knowledge kind that’s meant to implement the undirected graph and directed graph ideas from the sphere of graph principle inside arithmetic.

A graph knowledge construction consists of a finite (and probably mutable) set of vertices (additionally known as nodes or factors), along with a set of unordered pairs of those vertices for an undirected graph or a set of ordered pairs for a directed graph. These pairs are often called edges (additionally known as hyperlinks or traces), and for a directed graph are also called edges but additionally generally arrows or arcs.” — Wikipedia

What’s NetworkX?

NetworkX is a helpful library on this undertaking to assemble and retailer our graph. Particularly, a digraph although the library helps many graph variants similar to multigraphs, the library additionally helps graph-related utility capabilities.

“NetworkX is a Python bundle for the creation, manipulation, and examine of the construction, dynamics, and capabilities of complicated networks.” — NetworkX Web site

What’s PyVis?

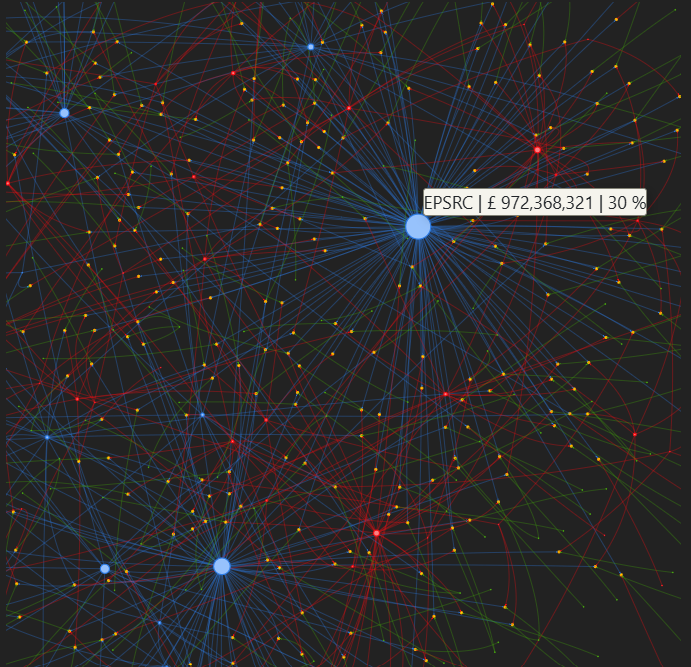

We use the PyVis Python bundle to create dynamic community views for our graph, screenshots of those will be discovered all through the article.

“The pyvis library is supposed for fast technology of visible community graphs with minimal python code. It’s designed as a wrapper across the well-liked Javascript visJS library” — PyVis Docs

What’s LlamaIndex?

LlamaIndex is a well-liked library for LLM functions, together with help for agentic workflows, we use it to carry out the graph RAG part of this undertaking.

“LlamaIndex (GPT Index) is a knowledge framework in your LLM software. Constructing with LlamaIndex sometimes entails working with LlamaIndex core and a selected set of integrations (or plugins).” — LlamaIndex Github

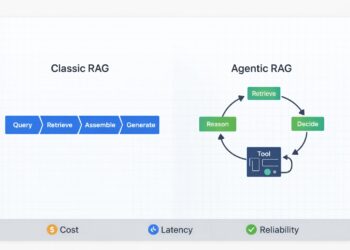

What’s Graph RAG?

Retrieval-augmented technology, or RAG as it’s generally recognized, is an AI framework for which extra context from an exterior data base is used to floor LLM solutions. Graph RAG, by extension, pertains to using a Graph to supply this extra context.

“GraphRAG is a strong retrieval mechanism that improves GenAI functions by benefiting from the wealthy context in graph knowledge constructions… Primary RAG programs rely solely on semantic search in vector databases to retrieve and rank units of remoted textual content fragments. Whereas this strategy can floor some related data, it fails to seize the context connecting these items. Because of this, fundamental RAG programs are ill-equipped to reply complicated, multi-hop questions. That is the place GraphRAG is available in. It makes use of data graphs to signify and join data to seize not solely extra knowledge factors but additionally their relationships. Thus, graph-based retrievers can present extra correct and related outcomes by uncovering hidden connections that aren’t usually apparent however are essential for correlating data.” — Neo4j Web site

What’s Streamlit?

Streamlit is a light-weight Python internet framework we are going to use to create the online software for this undertaking.

“Streamlit is an open-source Python framework for knowledge scientists and AI/ML engineers to ship dynamic knowledge apps with only some traces of code. Construct and deploy highly effective knowledge apps in minutes.” — Streamlit web site

2. UKRI API

The UKRI API is a service that facilitates entry to the general public UKRI grant funding dataset, authentication is just not required and the docs will be discovered right here. I exploit solely two endpoints for our software, they’re the Search initiatives endpoint and the Tasks endpoint. This enables a person to seek for initiatives based mostly on a key phrase search and retrieve all project-specific data.

A search time period, web page measurement and web page quantity are offered as question string parameters. The question string parameters;

selectedSortableField=professional.am&selectedSortOrder=DESC

Be sure that the outcomes are returned by funded worth descending.

I’ve additionally included the code I used for asynchronous pagination.

import math

import requests

import concurrent.futures

import os

from itertools import chain

import urllib.parse

import logging

def search_ukri_projects(args):

"""

Search UKRI initiatives based mostly on a search time period web page measurement and web page quantity.

Extra particulars will be discovered right here: https://gtr.ukri.org/assets/api.html

"""

search_term, page_size, page_number = args

attempt:

encoded_search_term = urllib.parse.quote(search_term)

if (

(

response := requests.get(

f"https://gtr.ukri.org/api/search/undertaking?time period={encoded_search_term}&web page={page_number}&fetchSize={page_size}&selectedSortableField=professional.am&selectedSortOrder=DESC&selectedFacets=&fields=undertaking.abs",

timeout=10,

)

)

and (response.status_code == 200)

and (

objects := response.json()

.get("facetedSearchResultBean", {})

.get("outcomes")

)

):

return objects

besides Exception as error:

logging.exception("ERROR search_ukri_projects: %s", error)

return []

def search_ukri_paginate(search_term, number_of_results, page_size=100):

"""

Asynchronous pagination requests for undertaking lookup.

"""

args = [

(search_term, page_size, page_number + 1)

for page_number in range(int(math.ceil(number_of_results / page_size)))

]

with concurrent.futures.ThreadPoolExecutor(os.cpu_count()) as executor:

future = executor.map(search_ukri_projects, args)

outcomes = [result for result in future if result]

return checklist(chain.from_iterable(outcomes))[:number_of_results]The next operate is used to get project-specific knowledge utilizing the distinctive UKRI undertaking reference. The undertaking reference is derived from the aforementioned undertaking search outcomes.

import requests

import logging

def get_ukri_project_data(project_grant_reference):

"""

Search UKRI undertaking knowledge based mostly on grant reference.

"""

attempt:

if (

(

response := requests.get(

f"https://gtr.ukri.org/api/initiatives?ref={project_grant_reference}",

timeout=10,

)

)

and (response.status_code == 200)

and (objects := response.json().get("projectOverview", {}))

):

return objects

besides Exception as error:

logging.exception("ERROR get_ukri_project_data: %s", error)Equally, we parse out the related knowledge for the development of the graph and take away superfluous data.

def parse_data(initiatives):

"""

Parse undertaking knowledge right into a usable format and validate.

"""

knowledge = []

for undertaking in initiatives:

project_composition = undertaking.get("projectComposition", {})

project_data = project_composition.get("undertaking", {})

fund = project_data.get("fund", {})

funder = fund.get("funder")

value_pounds = fund.get("valuePounds")

lead_research_organisation = project_composition.get("leadResearchOrganisation")

person_roles = project_composition.get("personRoles")

if all(

[

project_composition,

project_data,

fund,

funder,

value_pounds,

lead_research_organisation,

]

):

file = {}

file["funder_name"] = funder.get("title")

file["funder_link"] = funder.get("resourceUrl")

file["project_title"] = project_data.get("title")

file["project_grant_reference"] = project_data.get("grantReference")

file["value"] = value_pounds

file["lead_research_organisation"] = lead_research_organisation.get(

"title", ""

)

file["lead_research_organisation_link"] = lead_research_organisation.get(

"resourceUrl", ""

)

file["people"] = person_roles

file["project_url"] = project_data.get("resourceUrl")

knowledge.append(file)

return knowledge3. Assemble NetworkX Graph

There are several types of graphs, and I elected for a directed graph the place the path of the sides are necessary. Extra formally;

“A DiGraph shops nodes and edges with non-obligatory knowledge, or attributes. DiGraphs maintain directed edges. Self loops are allowed however a number of (parallel) edges usually are not.” — NetworkX Web site

To assemble the NetworkX graph, we should add nodes and edges — together with the sequential updating of node attributes.

The usual attributes, appropriate with PyVis graph rendering for nodes are as follows;

- Title (The label that seems on hover over)

- Group (The color coding)

- Measurement (How giant the nodes seem within the graph)

We additionally use the customized attribute “funding”, which we are going to use to sum the entire funding for analysis and funding organizations. This can be normalized to set the node measurement in accordance with the proportion of complete funding for a specific group.

For our graph, now we have nodes from 4 teams. They’re categorised as: funder_name, lead_research_organisation, project_title and person_name.

HTML hyperlinks can be utilized within the node title to permit the person to simply click on by way of to a URL. I’ve included a helper operate to do that under. There are undertaking, folks, and analysis organisation-specific hyperlinks that, if redirected to supply extra data to the person.

The code to assemble the NetworkX graph will be seen under. The DiGraph class has strategies to test if a graph already has a node and equally for edges. There are additionally strategies for including nodes and edges. As we iterate by way of initiatives, we wish to sum the overall funding quantity for the funding group and lead analysis establishment. There are strategies to each get an attribute from a node within the graph and set an attribute on a node. Relying on the supply and vacation spot node, we additionally apply completely different titles and labels to mirror that particular predicate. These will be seen within the code under.

import networkx as nx

def get_link_html(hyperlink, textual content):

"""

Helper operate to assemble a HTML hyperlink.

"""

return f"""{textual content}"""

def set_networkx_attribute(graph, node_label, attribute_name, worth):

"""

Helper to set attribute for networkx graph.

"""

attrs = {node_label: {attribute_name: worth}}

nx.set_node_attributes(graph, attrs)

def append_networkx_value(graph, node_label, attribute_name, worth):

"""

Helper to append worth to present node attribute scalar worth.

"""

current_map = nx.get_node_attributes(graph, attribute_name, default=0)

current_value = current_map[node_label]

current_value = current_value + worth

set_networkx_attribute(graph, node_label, attribute_name, current_value)

def create_networkx(knowledge):

"""

Create networkx graph from UKRI knowledge.

"""

graph = nx.DiGraph()

for row in knowledge:

if (

(funder_name := row.get("funder_name"))

and (project_title := row.get("project_title"))

and (lead_research_organisation := row.get("lead_research_organisation"))

):

project_data_lookup = row.get("project_data_lookup", {})

if not graph.has_node(funder_name):

graph.add_node(

funder_name, title=funder_name, group="funder_name", measurement=100

)

if not graph.has_node(project_title):

link_html = get_link_html(

row.get("project_url", "").exchange("api/", ""), project_title

)

graph.add_node(

project_title,

title=link_html,

group="project_title",

project_data_lookup=project_data_lookup,

measurement=25,

)

if not graph.has_edge(funder_name, project_title):

graph.add_edge(

funder_name,

project_title,

worth=row.get("worth"),

title=f"{'£{:,.2f}'.format(row.get('worth'))}",

label=f"{'£{:,.2f}'.format(row.get('worth'))}",

)

if not graph.has_node(lead_research_organisation):

link_html = get_link_html(

row.get("lead_research_organisation_link").exchange("api/", ""),

lead_research_organisation,

)

graph.add_node(

lead_research_organisation,

title=link_html,

group="lead_research_organisation",

measurement=50,

)

if not graph.has_edge(lead_research_organisation, project_title):

graph.add_edge(

lead_research_organisation, project_title, title="RELATES TO"

)

append_networkx_value(graph, funder_name, "funding", row.get("worth", 0))

append_networkx_value(graph, project_title, "funding", row.get("worth", 0))

append_networkx_value(

graph, lead_research_organisation, "funding", row.get("worth", 0)

)

person_roles = row.get(

"folks", []

)

for individual in person_roles:

if (

(person_name := individual.get("fullName"))

and (person_link := individual.get("resourceUrl"))

and (project_title := row.get("project_title"))

and (roles := individual.get("roles"))

):

if not graph.has_node(person_name):

link_html = get_link_html(

person_link.exchange("api/", ""), person_name

)

graph.add_node(

person_name, title=link_html, group="person_name", measurement=10

)

for position in roles:

if (not graph.has_edge(person_name, project_title)) or (

not graph[person_name][project_title]["title"]

== position.get("title")

):

graph.add_edge(

person_name,

project_title,

title=position.get("title"),

label=position.get("title"),

)

return graphAs soon as the graph has been constructed and as beforehand described, I wished to normalize the node sizes relying on the proportion of the overall quantity of funding for explicit teams. I additionally append the overall funding, each as a summation and as a proportion to the node label so it may be extra simply seen by a person.

The size issue is only a a number of utilized for aesthetic causes, such that the node sizes seem relative to the opposite node teams current.

import networkx as nx

import math

import utils.config as config # pylint: disable=consider-using-from-import, import-error

def set_networkx_attribute(graph, node_label, attribute_name, worth):

"""

Helper to set attribute for networkx graph.

"""

attrs = {node_label: {attribute_name: worth}}

nx.set_node_attributes(graph, attrs)

def calculate_total_funding_from_group(graph, group):

"""

Helper to calculate complete funding for a bunch.

"""

return sum(

[

data.get("funding")

for node_label, data in graph.nodes(data=True)

if data.get("funding") and data.get("group") == group

]

)

def set_weighted_size_helper(graph, node_label, totals, knowledge):

"""

Create normalized weights based mostly on proportion funding quantity.

"""

if (

(group := knowledge.get("group"))

and (total_funding := totals.get(group))

and (funding := knowledge.get("funding"))

):

div = funding / total_funding

funding_percentage = math.ceil(((100.0 * div)))

set_networkx_attribute(graph, node_label, "measurement", funding_percentage)

def annotate_value_on_graph(graph):

"""

Calculate normalized graph sizes and append to title.

"""

totals = {}

for group in ["lead_research_organisation", "funder_name"]:

totals[group] = calculate_total_funding_from_group(graph, group)

for node_label, knowledge in graph.nodes(knowledge=True):

if (

(funding := knowledge.get("funding"))

and (group := knowledge.get("group"))

and (title := knowledge.get("title"))

):

new_title = f"{title} | {'£ {:,.0f}'.format(funding)}"

if total_funding := totals.get(group):

div = funding / total_funding

funding_percentage = math.ceil(((100.0 * div)))

set_networkx_attribute(

graph,

node_label,

"measurement",

config.NODE_SIZE_SCALE_FACTOR * funding_percentage,

)

new_title += f" | {' {:,.0f}'.format(funding_percentage)} %"

set_networkx_attribute(graph, node_label, "title", new_title)4. Filter a NetworkX Graph

I enable the person to filter nodes by way of the UI to create a subgraph. The shape to do that in Streamlit is under. I additionally discover the neighbors of neighbors for the filtered nodes. I had some points with Pylint elevating pointless comprehension errors from the generator, which I’ve disabled — extra on Pylint later within the article. A smaller graph will take much less time to render and can be sure that irrelevant context can be excluded.

import networkx as nx

import streamlit as st

def find_neighbor_nodes_helper(node_list, graph):

"""

Discover distinctive node neighbors and flatten.

"""

successors_generator_array = [

# pylint: disable=unnecessary-comprehension

[item for item in graph.successors(node)]

for node in node_list

]

predecessors_generator_array = [

# pylint: disable=unnecessary-comprehension

[item for item in graph.predecessors(node)]

for node in node_list

]

neighbors = successors_generator_array + predecessors_generator_array

flat = sum(neighbors, [])

return checklist(set(flat))

def render_filter_form(annotated_node_data, graph):

"""

Render type to permit the person to outline search nodes.

"""

st.session_state["filter"] = st.radio(

"Filter", ["No filter", "Filter results"], index=0, horizontal=True

)

if (filter_determinant := st.session_state.get("filter")) and (

filter_determinant == "Filter outcomes"

):

st.session_state["node_group"] = st.selectbox(

"Entity kind", checklist(annotated_node_data.keys())

)

if node_group := st.session_state.get("node_group"):

ordered_lookup = dict(

sorted(

annotated_node_data[node_group].objects(),

key=lambda merchandise: merchandise[1].get("neighbor_len"),

reverse=True,

)

)

st.session_state["search_nodes_label"] = st.multiselect(

"Filter initiatives", checklist(ordered_lookup.keys())

)

if search_nodes_label := st.session_state.get("search_nodes_label"):

filter_nodes = [

ordered_lookup[label].get("label") for label in search_nodes_label

]

search_nodes_neighbors = find_neighbor_nodes_helper(filter_nodes, graph)

search_nodes = find_neighbor_nodes_helper(search_nodes_neighbors, graph)

st.session_state["search_nodes"] = checklist(

set(search_nodes + filter_nodes + search_nodes_neighbors)

)NetworkX makes it simple to create a subgraph from a listing of nodes with the subgraph_view operate, which takes a callable as a parameter. The callable takes a graph node as a parameter and if the boolean True worth is returned, the node could be included within the subgraph.

import networkx as nx

import streamlit as st

def filter_node(node):

"""

Verify to see if the filter time period is within the nodes chosen.

"""

if (

(filter_term := st.session_state.get("filter"))

and (filter_term == "Filter outcomes")

and (search_nodes := st.session_state.get("search_nodes"))

):

if node not in search_nodes:

return False

return True

graph = nx.subgraph_view(graph, filter_node=filter_node)5. Graph Visualisation Utilizing PyVis

To supply the visualizations I’ve introduced earlier within the article, we should first convert the NetworkX graph to a PyVis community after which render the HTML file throughout the Streamlit UI.

If you’re unfamiliar with Streamlit, you possibly can see one in every of my different articles that discover the subject right here.

Changing a NetworkX graph to PyVis format is comparatively trivial and will be achieved with the code under. The Community class is the principle class for visualization performance, first we instantiate the category and on this instance, the graph is directed. The barnes_hut technique is then known as, which is a gravity mannequin. The from_nx technique takes an current NetworkX graph as an argument and interprets it to PyVis, which is known as in place.

from pyvis.community import Community

def convert_graph(graph):

"""

Convert networkx to pyvis graph.

"""

internet = Community(

top="700px",

width="100%",

bgcolor="#222222",

font_color="white",

directed=True,

)

internet.barnes_hut()

internet.from_nx(graph)

return internetTo render the Graph to the UI, we first create a singular person ID as we use the PyVis save_graph technique to save lots of the HTML file for the graph on the server. The uuid ensures a singular file title, which is then learn into the streamlit UI and after the file is deleted.

import uuid

import contextlib

import os

import streamlit as st

def render_graphs(internet):

"""

Helper to render graph visualization from pyvis graph.

"""

uuid4 = uuid.uuid4()

file_name = f"./output/{uuid4}.html"

with contextlib.suppress(FileNotFoundError):

os.take away(file_name)

internet.save_graph(file_name)

with open(file_name, "r", encoding="utf-8") as html_file:

source_code = html_file.learn()

st.elements.v1.html(source_code, top=650, width=650)

os.take away(file_name)6. Graph RAG Utilizing LlamaIndex

Via graph retrieval-augmented technology, we are able to question our graph knowledge instantly, an instance will be seen within the prior screenshot. Extracted entities from the person question are seemed up within the graph to provide particular context to the AI to floor its response, as this data would possible not have been within the coaching corpus, and therefore any reply given would have had an elevated chance of being a hallucination.

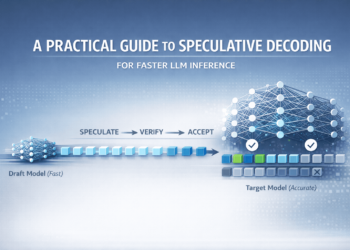

We create a chat engine to cross a person’s earlier question historical past into the mannequin. Normally, the Open AI API secret is learn as an setting variable inside LlamaIndex — nonetheless, since that is user-submitted for our software and we don’t wish to save customers’ Open AI credentials, we have to cross credentials to the LLM and embedding mannequin courses as key phrase arguments.

We then create an empty LlamaIndex Information Graph Index and populate the data graph by inserting triples. The triples come from traversing the sides of our NetworkX graph and calling the upsert_triplet_and_node technique, which can create the triple and node in the event that they don’t exist already.

For the reason that graph is directed, we are able to interchange the themes and objects in order that the graph is traversable in both path. The chat engine makes use of the tree_summarize possibility for the response builder.

“Tree summarize response builder. This response builder recursively merges textual content chunks and summarizes them in a bottom-up trend (i.e. constructing a tree from leaves to root). Extra concretely, at every recursively step: 1. we repack the textual content chunks so that every chunk fills the context window of the LLM 2. if there is just one chunk, we give the ultimate response 3. in any other case, we summarize every chunk and recursively summarize the summaries.”— LlamaIndex Web site

Calling the chat technique with the person’s question and setting up the chat historical past from the Streamlit state object is included right here.

from llama_index.core import KnowledgeGraphIndex

from llama_index.core.schema import TextNode

from llama_index.embeddings.openai import OpenAIEmbedding

from llama_index.llms.openai import OpenAI

from llama_index.core.llms import ChatMessage, MessageRole

import streamlit as st

import utils.ui_utils as ui_utils # pylint: disable=consider-using-from-import, import-error

def init_llama_index_graph(graph_nx, open_ai_api_key):

"""

Assemble a data graph utilizing llama index.

"""

llm = OpenAI(mannequin="gpt-3.5-turbo", api_key=open_ai_api_key)

embed_model = OpenAIEmbedding(api_key=open_ai_api_key)

graph = KnowledgeGraphIndex(

[], llm=llm, embed_model=embed_model, api_key=open_ai_api_key

)

for subject_entity, object_entity in graph_nx.edges():

predicate = graph_nx[subject_entity][object_entity].get("label", "pertains to")

graph.upsert_triplet_and_node(

(subject_entity, predicate, object_entity), TextNode(textual content=subject_entity)

)

graph.upsert_triplet_and_node(

(object_entity, predicate, subject_entity), TextNode(textual content=subject_entity)

)

chat_engine = graph.as_chat_engine(

include_text=True,

response_mode="tree_summarize",

embedding_mode="hybrid",

similarity_top_k=5,

verbose=True,

llm=llm,

)

return chat_engine

def add_result_to_state(query, response):

"""

Add mannequin output to state.

"""

if response:

graph_answers = st.session_state.get("graph_answers") or []

graph_answers.append((query, response))

st.session_state["graph_answers"] = graph_answers

else:

st.error("Question failed, please attempt once more later.", icon="⚠️")

def query_llama_index_graph(query_engine, query):

"""

Question llama index data graph utilizing graph RAG.

"""

graph_answers = st.session_state.get("graph_answers", [])

chat_history = []

for question, reply in graph_answers:

chat_history.append(ChatMessage(position=MessageRole.USER, content material=question))

chat_history.append(

ChatMessage(position=MessageRole.ASSISTANT, content material=reply)

)

if response := query_engine.chat(query, chat_history):

add_result_to_state(query, response.response)Equally, I initially explored a LangChain implementation, although throughout some experimentation, I made a decision to proceed wth the LlamaIndex-based strategy beforehand demonstrated. For reference, I’ve included this under whether it is helpful to you.

Within the curiosity of brevity, the reason is omitted, although it must be self-explanatory for the reader.

from langchain_community.chains.graph_qa.base import GraphQAChain

from langchain_community.graphs import NetworkxEntityGraph

from langchain_community.graphs.networkx_graph import KnowledgeTriple

from langchain_openai import ChatOpenAI

import streamlit as st

def add_result_to_state(query, response):

"""

Add mannequin output to state.

"""

if response:

graph_answers = st.session_state.get("graph_answers") or []

graph_answers.append((query, response))

st.session_state["graph_answers"] = graph_answers

else:

st.error("Question failed, please attempt once more later.", icon="⚠️")

def construct_graph_langchain(graph_nx, open_ai_api_key, query):

"""

Assemble a data graph in Langchain and preform graph RAG.

"""

graph = NetworkxEntityGraph()

for node in graph_nx:

graph.add_node(node)

for subject_entity, object_entity in graph_nx.edges():

predicate = graph_nx[subject_entity][object_entity].get("label", "pertains to")

graph.add_triple(KnowledgeTriple(subject_entity, predicate, object_entity))

llm = ChatOpenAI(

api_key=open_ai_api_key, mannequin="gpt-4", temperature=0, max_retries=2

)

chain = GraphQAChain.from_llm(llm=llm, graph=graph, verbose=True)

if response := chain.invoke({"question": query}):

reply = response.get("consequence")

add_result_to_state(query, reply)7. Linting With Pylint

Since I’ve left some feedback within the code to disable the linter within the examples above (examples are referenced from the GitHub repo), I believed I’d cowl the subject of linting briefly.

For these unfamiliar, linting helps to test your code for potential bugs and stylistic points. Linters routinely implement coding requirements.

To get began, set up Pylint by working the command.

pip set up pylintSecondly, we have to create a .pylintrc file on the root of the undertaking (we are able to additionally set default world and user-specific settings relying on the place we create the .pylintrc file). To do that, you’ll need to run.

pylint --generate-rcfile > .pylintrcWe are able to configure this file to suit our preferences by updating the default values throughout the .pylintrc file.

To run the linter manually, you need to use.

pylint ./most important.py && pylint ./**/*.pyWhen the Docker picture is constructed, it should routinely run Pylint and lift an error ought to it detect a problem with the code. This may be seen within the Dockerfile.

FROM python:3.10.16 AS base

WORKDIR /app

COPY necessities.txt .

RUN pip set up --upgrade pip

RUN pip set up -r necessities.txt

COPY . .

RUN mkdir -p /app/output

RUN pylint ./most important.py && pylint ./**/*.py

RUN python -m unittest -v checks.test_ukri_utils.Testing

CMD ["streamlit", "run", "./main.py"]A preferred formatter that you may additionally discover helpful is Black —

“Black is a PEP 8 compliant opinionated formatter. Black reformats whole information in place.”

Operating Black will routinely resolve among the points that might be raised by the linter.

8. Streamlit Neighborhood Cloud Demo App

With Streamlit Neighborhood Cloud, anybody can host their software at no cost. You probably have an software you’d wish to deploy, you possibly can comply with this tutorial.

To see the hosted demo, please click on the hyperlink under.

https://governmentfundinggraph.streamlit.app

Thanks for studying my article — as promised, you could find all of the code within the GitHub repo right here.

Any and all suggestions is efficacious to me because it gives path for my future initiatives. In the event you discovered this text helpful, please let me know.

You may also discover me over on LinkedIn when you’ve got particular questions.

All for open-source AI grant writing initiatives? Join our mailing checklist right here.

*All pictures, until in any other case famous, are by the creator.