Hands on How much can reinforcement learning – and a bit of extra verification – improve large language models, aka LLMs? Alibaba’s Qwen team aims to find out with its latest release, QwQ.

Despite having a fraction of DeepSeek R1’s claimed 671 billion parameters, Alibaba touts its comparatively compact 32-billion-parameter “reasoning” model as outperforming R1 in select math, coding, and function-calling benchmarks.

Much like R1, the Qwen team fine-tuned QwQ using reinforcement learning to improve its chain-of-thought reasoning for problem analysis and breakdown. This approach typically reinforces stepwise reasoning by rewarding models for correct answers, encouraging more accurate responses. However, for QwQ, the team also integrated a so-called accuracy verifier and a code execution server to ensure rewards were given only for correct math solutions and functional code.
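To make the idea of verified rewards concrete, here is a minimal Python sketch of what such checks could look like. It is not Alibaba's pipeline, whose internals aren't described here; the function names `math_reward` and `code_reward` are hypothetical, and a real code execution server would sandbox the generated code far more carefully.

```python
import os
import subprocess
import sys
import tempfile


def math_reward(model_answer: str, reference: str) -> float:
    """Return 1.0 only when the model's final answer matches the reference number."""
    try:
        return 1.0 if abs(float(model_answer) - float(reference)) < 1e-9 else 0.0
    except ValueError:
        return 0.0  # unparseable answers earn nothing


def code_reward(candidate_source: str, test_source: str, timeout: float = 5.0) -> float:
    """Return 1.0 only when the generated code passes the supplied assertions."""
    # Write the candidate plus its tests to a temporary script.
    with tempfile.NamedTemporaryFile("w", suffix=".py", delete=False) as handle:
        handle.write(candidate_source + "\n\n" + test_source + "\n")
        path = handle.name
    try:
        # Run it in a separate interpreter; a non-zero exit code means a test failed.
        result = subprocess.run([sys.executable, path], capture_output=True, timeout=timeout)
        return 1.0 if result.returncode == 0 else 0.0
    except subprocess.TimeoutExpired:
        return 0.0  # hung or too-slow code counts as a failure
    finally:
        os.unlink(path)
```

The point of the design is that the reward is binary and externally checked: a plausible-looking answer that fails verification earns nothing.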

The result, the Qwen team claims, is a model that punches far above its weight class, achieving performance on par with and, in some cases, edging out far larger models.

Here’s how Alibaba claims QwQ stacks up against the competition in benchmarks

However, AI benchmarks aren’t always what they seem. So, let’s take a look at how these claims hold up in the real world, and then we’ll show you how to get QwQ up and running so you can test it out for yourself.

How does it stack up?

We ran QwQ through a slate of test prompts ranging from general knowledge to spatial reasoning, problem solving, mathematics, and other questions known to trip up even the best LLMs.

Because the full model requires substantial memory, we ran our tests in two configurations to cater to those of you who have a lot of RAM and those of you who don’t. First, we evaluated the full model using the QwQ demo on Hugging Face. Then, we tested a 4-bit quantized version on a 24 GB GPU (Nvidia 3090 or AMD Radeon RX 7900XTX) to assess the impact of quantization on accuracy.

For most general knowledge questions, we found that QwQ performed similarly to DeepSeek’s 671-billion-parameter R1 and other reasoning models like OpenAI’s o3-mini, spending a few seconds composing its thoughts before spitting out the answer to the query.

Where the model stands out, perhaps unsurprisingly, is when it’s tasked with solving more complex logic, coding, or mathematics challenges, so we’ll focus on these before addressing some of its weak points.

Spatial reasoning

For fun, we decided to start with a relatively new spatial-reasoning test developed by the folks at Homebrew Research as part of their AlphaMaze project.

The test, illustrated above, presents the model with a maze in the form of a text prompt, like the one below. The model’s objective is then to navigate from the origin “O” to the target “T.”

You are a helpful assistant that solves mazes. You will be given a maze represented by a series of tokens. The tokens represent:

- Coordinates: <|row-col|> (e.g., <|0-0|>, <|2-4|>)

- Walls: <|no_wall|>, <|up_wall|>, <|down_wall|>, <|left_wall|>, <|right_wall|>, <|up_down_wall|>, etc.

- Origin: <|origin|>

- Target: <|target|>

- Movement: <|up|>, <|down|>, <|left|>, <|right|>, <|blank|>

Your task is to output the sequence of movements (<|up|>, <|down|>, <|left|>, <|right|>) required to navigate from the origin to the target, based on the provided maze representation. Think step by step. At each step, predict only the next movement token. Output only the move tokens, separated by spaces.

MAZE:
<|0-0|><|up_left_right_wall|><|blank|><|0-1|><|up_down_left_wall|><|blank|><|0-2|><|up_down_wall|><|blank|><|0-3|><|up_wall|><|blank|><|0-4|><|up_right_wall|><|blank|>
<|1-0|><|down_left_wall|><|blank|><|1-1|><|up_right_wall|><|blank|><|1-2|><|up_left_wall|><|blank|><|1-3|><|down_right_wall|><|target|><|1-4|><|down_left_right_wall|><|blank|>
<|2-0|><|up_left_right_wall|><|blank|><|2-1|><|left_right_wall|><|blank|><|2-2|><|down_left_wall|><|blank|><|2-3|><|up_down_wall|><|blank|><|2-4|><|up_right_wall|><|blank|>
<|3-0|><|left_right_wall|><|blank|><|3-1|><|down_left_wall|><|origin|><|3-2|><|up_down_wall|><|blank|><|3-3|><|up_down_wall|><|blank|><|3-4|><|right_wall|><|blank|>
<|4-0|><|down_left_wall|><|blank|><|4-1|><|up_down_wall|><|blank|><|4-2|><|up_down_wall|><|blank|><|4-3|><|up_down_wall|><|blank|><|4-4|><|down_right_wall|><|blank|>
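If you want to check a model's answer to a maze like this rather than eyeball it, a small reference solver helps. The sketch below is our own illustration, not part of AlphaMaze: it assumes each cell is encoded as a coordinate token, a wall token, and a marker token named `<|origin|>`, `<|target|>`, or `<|blank|>`, and it uses a breadth-first search to find the shortest route.

```python
import re
from collections import deque

# Each cell is three tokens: coordinates, walls, and a marker (assumed token names).
CELL = re.compile(r"<\|(\d+)-(\d+)\|><\|([a-z_]+)_wall\|><\|(origin|target|blank)\|>")
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}

MAZE = (
    "<|0-0|><|up_left_right_wall|><|blank|><|0-1|><|up_down_left_wall|><|blank|><|0-2|><|up_down_wall|><|blank|><|0-3|><|up_wall|><|blank|><|0-4|><|up_right_wall|><|blank|> "
    "<|1-0|><|down_left_wall|><|blank|><|1-1|><|up_right_wall|><|blank|><|1-2|><|up_left_wall|><|blank|><|1-3|><|down_right_wall|><|target|><|1-4|><|down_left_right_wall|><|blank|> "
    "<|2-0|><|up_left_right_wall|><|blank|><|2-1|><|left_right_wall|><|blank|><|2-2|><|down_left_wall|><|blank|><|2-3|><|up_down_wall|><|blank|><|2-4|><|up_right_wall|><|blank|> "
    "<|3-0|><|left_right_wall|><|blank|><|3-1|><|down_left_wall|><|origin|><|3-2|><|up_down_wall|><|blank|><|3-3|><|up_down_wall|><|blank|><|3-4|><|right_wall|><|blank|> "
    "<|4-0|><|down_left_wall|><|blank|><|4-1|><|up_down_wall|><|blank|><|4-2|><|up_down_wall|><|blank|><|4-3|><|up_down_wall|><|blank|><|4-4|><|down_right_wall|><|blank|>"
)


def parse_maze(text):
    """Map each (row, col) cell to the set of sides that are walled off."""
    walls, origin, target = {}, None, None
    for row, col, wall_names, marker in CELL.findall(text):
        cell = (int(row), int(col))
        walls[cell] = set(wall_names.split("_")) - {"no"}  # "no_wall" means no walls
        if marker == "origin":
            origin = cell
        elif marker == "target":
            target = cell
    return walls, origin, target


def solve(walls, origin, target):
    """Breadth-first search; returns the shortest list of moves, or None."""
    queue = deque([(origin, [])])
    seen = {origin}
    while queue:
        cell, path = queue.popleft()
        if cell == target:
            return path
        for move, (d_row, d_col) in MOVES.items():
            nxt = (cell[0] + d_row, cell[1] + d_col)
            if move in walls[cell] or nxt not in walls or nxt in seen:
                continue
            seen.add(nxt)
            queue.append((nxt, path + [move]))
    return None


walls, origin, target = parse_maze(MAZE)
print(" ".join(f"<|{move}|>" for move in solve(walls, origin, target)))
```

For the maze above, the solver reports eight moves: right, right, right, up, left, left, up, right.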

Both our locally hosted QwQ instance and the full-sized model were able to solve these puzzles successfully every time, though each run did take a few minutes to finish.

The same couldn’t be said of DeepSeek’s R1 and its 32B distill. Both models were able to solve the first maze, but R1 struggled to complete the second, while the 32B distill solved it correctly nine times out of ten. This level of variation isn’t too surprising considering R1 and the distill use completely different base models.

While QwQ outperformed DeepSeek in this test, we did observe some strange behavior with our 4-bit model, which initially required nearly twice as many “thought” tokens to complete the test. At first, it looked as if this might be due to quantization-related losses – a challenge we explored here. But, as it turned out, the quantized model was just broken out of the box. After we adjusted the hyperparameters – don’t worry, we’ll show you how to fix these in a bit – and ran the tests again, the problem disappeared.

A one-shot code champ?

Since its launch, QwQ has garnered a lot of interest from netizens curious as to whether the model can generate usable code on the first attempt in a so-called one-shot test. And this particular challenge certainly seems to be a bright spot for the model.

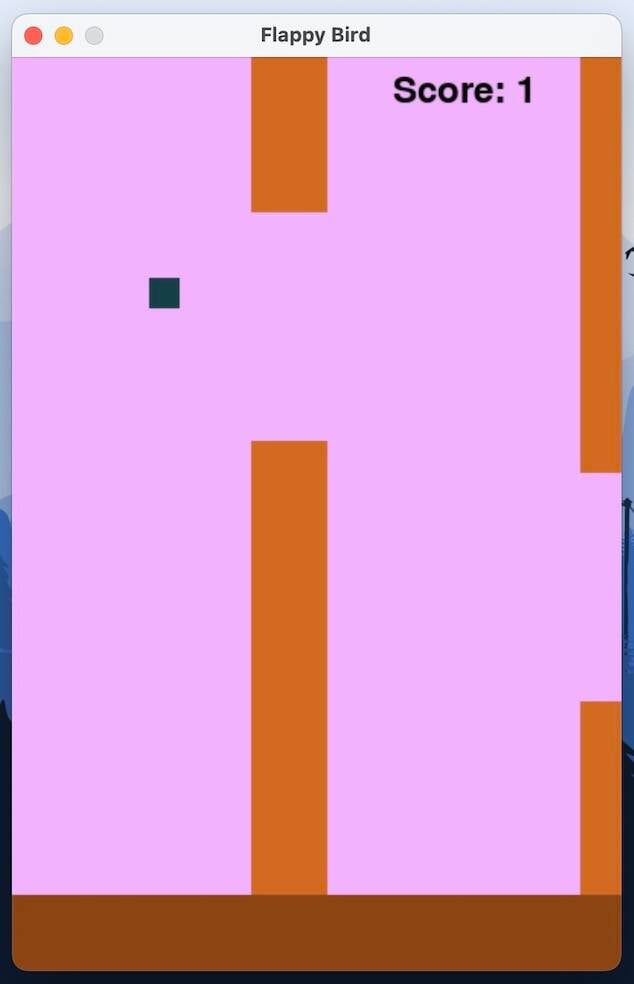

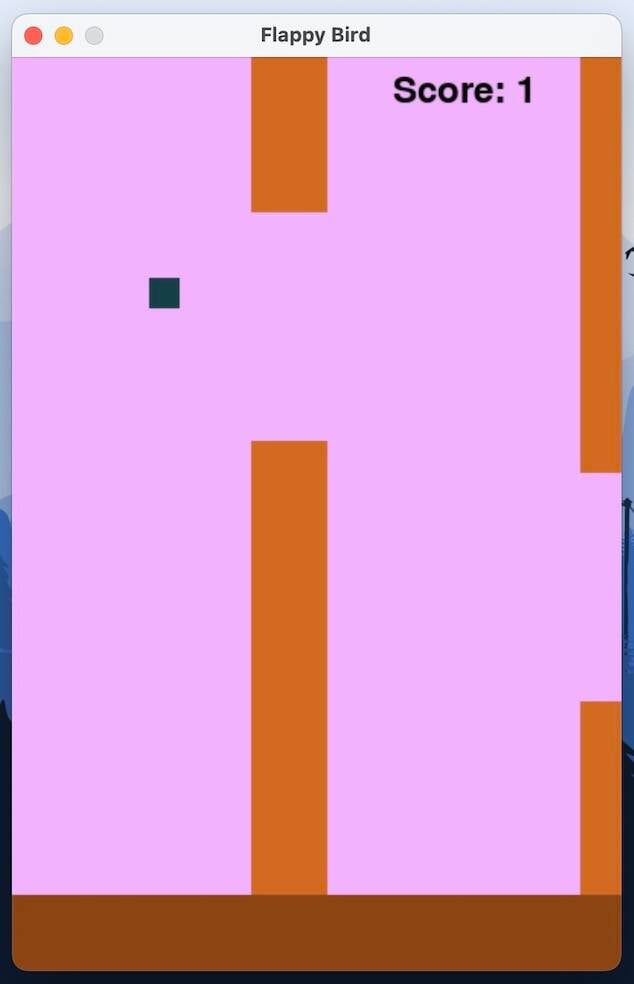

We asked the model to recreate a number of relatively simple games, namely Pong, Breakout, Asteroids, and Flappy Bird, in Python using the pygame library.

Pong and Breakout weren’t much of a challenge for QwQ. After a few minutes of work, the model spat out working versions of each.

In our testing, QwQ was able to recreate classic arcade games like Breakout in one shot with relative ease

Tasked with recreating Asteroids, however, QwQ fell on its face. While the code ran, both the graphics and game mechanics were frequently distorted and buggy. By comparison, on its first attempt, R1 faithfully recreated the classic arcade shooter.

Some folks have even managed to get R1 and QwQ to one-shot code a minimalist version of Flappy Bird, which we can confirm also worked without issue. If you’re interested, you can find the prompt we tested here.

It has occurred to us that these models were trained on a huge set of openly available source code, which no doubt included reproductions of classic games. Aren’t the models therefore just remembering what they learned during training rather than independently figuring out game mechanics from scratch? That’s the whole illusion of these massive neural networks.

Here you can see the minimalist version QwQ wrote in Python in one shot

At least when it comes to recreating classic arcade games, QwQ performs well beyond what its parameter count might suggest, even if it can’t match R1 in every test. To borrow a phrase from the automotive world, there’s no replacement for displacement. This might explain why Alibaba isn’t stopping with QwQ 32B and has a “Max” version in the works. Not that we expect to be running that locally anytime soon.

With all that said, compared to DeepSeek’s similarly sized R1 Qwen 2.5 32B distill, Alibaba’s decision to integrate a code execution server into its reinforcement learning pipeline may have given it an edge in programming-related challenges.

Can it do math? Sure, but please don’t

Historically, LLMs have been really bad at mathematics – unsurprising given their language-focused training. While newer models have improved, QwQ still faces challenges, but not for the reasons you might think.

QwQ was able to solve all of the mathematics problems we threw at R1 in our earlier deep dive. So, QwQ can handle basic arithmetic and even some algebra – it just takes forever to do it. Asking an LLM to do math seems bonkers to us; calculators and direct computation still work in 2025.

For example, to solve a simple equation like “What is 7*43?”, QwQ required generating more than 1,000 tokens over about 23 seconds on an RTX 3090 Ti – all for a problem that would have taken less time to punch into a pocket calculator than to type the prompt.

And the inefficiency doesn’t stop there. To solve 3394*35979, a much more challenging multiplication problem beyond the capabilities of most non-reasoning models we’ve tested, our local instance of QwQ needed three minutes and more than 5,000 tokens to arrive at an answer.

That’s when it’s configured correctly. Before we applied the hyperparameter fix, that same equation needed nine minutes and nearly 12,000 tokens to solve.
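For comparison, here are the same two problems computed directly in Python. Integer arithmetic is exact, so there is nothing to second-guess and no tokens to generate.

```python
# Direct integer arithmetic: exact, and effectively instantaneous.
small = 7 * 43
large = 3394 * 35979

print(small)  # 301
print(large)  # 122112726
```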

The takeaway here is that just because a model can brute force its way to the right answer doesn’t mean it’s the right tool for the job. Instead, we recommend giving QwQ access to a Python calculator. If you’re new to LLM function calling, check out our guide here.

When we gave QwQ the same 3394*35979 equation with tooling available, its response time dropped to eight seconds, as the calculator handled all the heavy lifting.
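As a rough idea of what such a calculator tool might look like, here is a minimal sketch. The `calculate` function and the `CALCULATOR_TOOL` definition are hypothetical names of our own; the schema follows the JSON-schema style used by OpenAI-compatible chat APIs, and we can't promise it matches the exact tooling used in our tests. Rather than calling `eval`, it walks the parsed expression and only permits basic arithmetic.

```python
import ast
import operator

# Whitelisted operators; anything else in the expression is rejected.
_BINARY = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Mod: operator.mod,
}
_UNARY = {ast.USub: operator.neg, ast.UAdd: operator.pos}


def calculate(expression: str):
    """Evaluate a plain arithmetic expression without executing arbitrary code."""

    def evaluate(node):
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            return _BINARY[type(node.op)](evaluate(node.left), evaluate(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY:
            return _UNARY[type(node.op)](evaluate(node.operand))
        raise ValueError("unsupported expression")

    return evaluate(ast.parse(expression, mode="eval").body)


# Hypothetical tool description to pass alongside the chat request.
CALCULATOR_TOOL = {
    "type": "function",
    "function": {
        "name": "calculate",
        "description": "Evaluate an arithmetic expression and return the exact result.",
        "parameters": {
            "type": "object",
            "properties": {
                "expression": {
                    "type": "string",
                    "description": "Arithmetic using +, -, *, / and % only, e.g. 3394*35979",
                }
            },
            "required": ["expression"],
        },
    },
}

print(calculate("3394*35979"))  # 122112726
```

When the model emits a tool call naming `calculate`, the host application runs the function and feeds the result back as a tool message, so the model only has to decide what to compute, not how.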

But ‘wait’…

If you wade through QwQ’s “thoughts,” you’re bound to run into the word “wait” a lot, particularly on complex tasks or word problems, as the model checks its work against alternative outcomes.

This kind of behavior is common for reasoning models, but it’s particularly frustrating when QwQ generates the wrong answer – even after demonstrating that it recognized the correct one during its “thought” process.
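If you'd like to quantify this for your own prompts, a few lines of Python will do. This sketch assumes the model wraps its reasoning in `<think>...</think>` tags ahead of the final answer, which is how QwQ's output appeared to us; the function names are our own.

```python
import re


def split_reasoning(response: str):
    """Separate the model's reasoning from its final answer (assumes <think> tags)."""
    match = re.search(r"<think>(.*?)</think>", response, flags=re.DOTALL)
    if match is None:
        return "", response.strip()
    return match.group(1).strip(), response[match.end():].strip()


def count_waits(thoughts: str) -> int:
    """Count standalone occurrences of the word 'wait', ignoring case."""
    return len(re.findall(r"\bwait\b", thoughts, flags=re.IGNORECASE))


sample = "<think>7*43... Wait, 7*40 is 280. wait, plus 21 makes 301.</think>\n\n301"
thoughts, answer = split_reasoning(sample)
print(count_waits(thoughts), answer)  # 2 301
```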

We ran into this problem a fair bit with QwQ. However, one of the prompts that demonstrated this most clearly was AutoGen AI’s take on the classic wolf, goat, and cabbage problem. The prompt is a spin on a classic transportation optimization puzzle and it goes like this:

The trick is that the answer is embedded in the prompt. With three secure compartments, the farmer can transport both animals and his produce in a single trip. But, because it mirrors the classic puzzle so closely, models often overlook the compartments.

In our testing, QwQ consistently got this puzzle wrong, and, peering into its thought process, it wasn’t because it missed the three compartments. In fact, it recognized them but decided that that would be too easy:

Wait, if the farmer can take all three in one trip, then he can just do that and be done. But that would make the problem trivial, which is unlikely. So perhaps the compartments are separate but the boat can only carry two items plus the farmer?

Regardless of whether we ran this test on the full model in the cloud or locally on our machine, QwQ just couldn’t solve this consistently.

Hypersensitive hyperparameters

Compared to other models we’ve tested, we found QwQ to be particularly twitchy when it comes to its configuration. Initially, Alibaba recommended setting the following sampling parameters:

- Temperature: 0.6

- TopP: 0.95

- TopK: between 20 and 40

Since then, it’s updated its recommendations to also set:

- MinP: 0

- Presence Penalty: between 0 and 2
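For readers unfamiliar with what these knobs do, the following toy Python sketch shows how temperature, top-k, top-p, and min-p each narrow the pool of candidate tokens before one is drawn. It illustrates the general technique in one possible order, not Llama.cpp's or Ollama's actual implementation, and the function name is our own.

```python
import math


def filter_candidates(logits, temperature=0.6, top_k=40, top_p=0.95, min_p=0.0):
    """Return the renormalised probabilities of tokens that survive filtering."""
    # Temperature: divide logits before the softmax; lower values sharpen the distribution.
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(weights.values())
    probs = sorted(((w / total, tok) for tok, w in weights.items()), reverse=True)

    # Top-k: keep only the k most likely tokens.
    probs = probs[:top_k]

    # Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for p, tok in probs:
        kept.append((p, tok))
        cumulative += p
        if cumulative >= top_p:
            break

    # Min-p: drop tokens below min_p times the top probability (0 disables the filter).
    floor = min_p * kept[0][0]
    kept = [(p, tok) for p, tok in kept if p >= floor]

    # Renormalise so the surviving probabilities sum to 1.
    norm = sum(p for p, _ in kept)
    return {tok: p / norm for p, tok in kept}


toy_logits = {"a": 2.0, "b": 1.0, "c": 0.0, "d": -5.0}
print(filter_candidates(toy_logits, temperature=1.0, top_k=3, top_p=0.9))
```

With the toy logits above, top-k removes "d" and top-p then removes "c", leaving only "a" and "b" to sample from. Because each filter acts on the output of the previous one, the order in which they are applied can change which tokens survive.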

Due to what appears to be a bug in Llama.cpp’s handling of sampling parameters – we use Llama.cpp for running inference on models – we found it was also necessary to disable the repeat penalty by setting it to 1.

As we mentioned earlier, the results were pretty dramatic – more than halving the number of “thinking” tokens needed to arrive at an answer. However, this bug appears to be specific to GGUF-quantized versions of the model when running on the Llama.cpp inference engine, which is used by popular apps such as Ollama and LM Studio.

If you plan to use Llama.cpp, we recommend checking out Unsloth’s guide to correcting the sampling order.

Try it for yourself

If you’d like to try out QwQ for yourself, it’s fairly easy to get up and running in Ollama. Unfortunately, it does require a GPU with a fair bit of vRAM. We managed to get the model running on a 24 GB 3090 Ti with a large enough context window to be useful.

Technically speaking, you could run the model on your CPU and system memory, but unless you’ve got a high-end workstation or server lying around, there’s a good chance you’ll end up waiting half an hour or more for it to respond.

Prerequisites

- You’ll need a machine that’s capable of running medium-sized LLMs at 4-bit quantization. For this, we recommend a compatible GPU with at least 24 GB of vRAM. You can find a full list of supported cards here.

- For Apple Silicon Macs, we recommend one with at least 32 GB of memory.

This guide also assumes some familiarity with a Linux-world command-line interface as well as Ollama. If this is your first time using the latter, you can find our guide here.

Installing Ollama

Ollama is a popular model runner that provides an easy method for downloading and serving LLMs on consumer hardware. For those running Windows or macOS, head over to ollama.com and download and install it like any other application.

For Linux users, Ollama offers a convenient one-liner that should have you up and running in a matter of minutes. Alternatively, Ollama provides manual installation instructions for those who don’t want to run shell scripts straight from the source, which can be found here.

That one-liner to install Ollama on Linux is:

curl -fsSL https://ollama.com/install.sh | sh

Deploying QwQ

In order to deploy QwQ without running out of memory on our 24 GB card, we need to launch Ollama with a couple of additional flags. Start by closing Ollama if it’s already running. For Mac and Windows users, this is as simple as right-clicking on the Ollama icon in the taskbar and clicking close.

Those running systemd-based operating systems, such as Ubuntu, should terminate it by running:

sudo systemctl stop ollama

From there, spin Ollama back up with our special flags using the following command:

OLLAMA_FLASH_ATTENTION=true OLLAMA_KV_CACHE_TYPE=q4_0 ollama serve

In case you’re wondering, OLLAMA_FLASH_ATTENTION=true enables a technology called Flash Attention for supported GPUs that helps to keep memory consumption in check when using large context windows. OLLAMA_KV_CACHE_TYPE=q4_0, meanwhile, compresses the key-value cache used to store our context to 4 bits.

Together, these flags should allow us to fit QwQ along with a decent-sized context window into less than 24 GB of vRAM.

Next, we’ll open a second terminal window and pull down our model by running the command below. Depending on the speed of your internet connection, this could take a few minutes, as the model files are around 20 GB in size.

ollama pull qwq

At this point, we’d normally be able to run the model in our terminal. Unfortunately, at the time of writing, only the model’s temperature parameter has been set correctly.

To resolve this, we’ll create a custom version of the model with a few tweaks that appear to correct the issue. To do this, create a new file in your home directory called Modelfile and paste in the following:

FROM qwq
PARAMETER temperature 0.6
PARAMETER repeat_penalty 1
PARAMETER top_k 40
PARAMETER top_p 0.95
PARAMETER min_p 0
PARAMETER num_ctx 10240

This will configure QwQ to run with optimized parameters by default and tell Ollama to run the model with a 10,240 token context length by default. If you run into issues with Ollama offloading part of the model onto the CPU, you may need to reduce this to 8,192 tokens.

A word on context length

If you’re not familiar with it, you can think of a model’s context window a bit like its short-term memory. Set it too low, and eventually the model will start forgetting details. This is problematic for reasoning models, as their “thought” or “reasoning” tokens can burn through the context window pretty quickly.

To remedy this, QwQ supports a fairly large 131,072 (128K) token context window. Unfortunately for anyone interested in running the model at home, you probably don’t have enough memory to get anywhere close to that.
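To get a feel for why, here is a back-of-the-envelope estimate of the key-value cache alone. The architecture figures (64 layers, 8 key-value heads, a head dimension of 128) are our assumptions for a Qwen2.5-32B-class model and should be checked against the model's published config. The per-value sizes reflect GGML's block formats, where q4_0 stores 32 values in 18 bytes. The estimate ignores the weights themselves and any runtime overhead.

```python
# Assumed architecture for a Qwen2.5-32B-class model; verify against the model config.
LAYERS = 64
KV_HEADS = 8
HEAD_DIM = 128

# Average bytes per cached value, including block scales for the quantized formats.
BYTES_PER_VALUE = {"f16": 2.0, "q8_0": 34 / 32, "q4_0": 18 / 32}


def kv_cache_bytes(context_tokens: int, cache_type: str = "f16") -> int:
    """Estimate KV cache size: keys plus values, for every layer and KV head."""
    values_per_token = 2 * LAYERS * KV_HEADS * HEAD_DIM
    return int(context_tokens * values_per_token * BYTES_PER_VALUE[cache_type])


for tokens in (8192, 10240, 131072):
    for cache_type in ("f16", "q4_0"):
        gib = kv_cache_bytes(tokens, cache_type) / 2**30
        print(f"{tokens:>7} tokens, {cache_type:>4}: {gib:6.2f} GiB")
```

Under these assumptions, a 10,240-token context needs about 2.5 GiB at 16-bit precision but roughly 0.7 GiB at q4_0, while the full 131,072-token window would need around 32 GiB at 16 bits, or 9 GiB even when quantized, on top of roughly 20 GB of model weights.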

Still in your home directory, run the following command to generate a new model with the fixes applied. We’ll name the new model qwq-fixed:

ollama create qwq-fixed

We’ll then test it’s working by loading up the model and chatting with it in the terminal:

ollama run qwq-fixed

If you’d like to tweak any of the hyperparameters we set earlier, for example, the context length or top_k settings, you can do so by entering the following after the model has loaded:

/set parameter top_k
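The same parameters can also be overridden per request through Ollama's local REST API, which is handy for scripted testing. The sketch below assumes Ollama is listening on its default address and that you created the model under the name qwq-fixed; `build_request` and `chat` are helper names of our own.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local address


def build_request(prompt: str, model: str = "qwq-fixed", **overrides) -> dict:
    """Build a chat payload; keyword arguments override the default sampling options."""
    options = {
        "temperature": 0.6,
        "top_k": 40,
        "top_p": 0.95,
        "min_p": 0.0,
        "repeat_penalty": 1.0,
        "num_ctx": 10240,
    }
    options.update(overrides)
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # return one JSON object instead of a token stream
        "options": options,
    }


def chat(prompt: str, **overrides) -> str:
    """Send the prompt to a running Ollama server and return the reply text."""
    body = json.dumps(build_request(prompt, **overrides)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]
```

With the server running, something like `chat("What is 7*43?", top_k=20)` would send the prompt with a narrower top_k for that one request, leaving the defaults baked into the Modelfile untouched.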

Finally, if you’d prefer to use QwQ in a more ChatGPT-style user interface, we recommend checking out our retrieval-augmented generation guide, which will walk you through the process of deploying a model using Open WebUI in a Docker container.

The Register aims to bring you more on using LLMs and other AI technologies – without the hype – soon. We want to pull back the curtain and show how this stuff really fits together. If you have any burning questions on AI infrastructure, software, or models, we’d love to hear about them in the comments section below. ®

Editor’s note: The Register was provided an RTX 6000 Ada Generation graphics card by Nvidia, an Arc A770 GPU by Intel, and a Radeon Pro W7900 DS by AMD to support stories like this. None of these vendors had any input as to the content of this or other articles.

Fingers on How a lot can reinforcement studying – and a bit of additional verification – enhance massive language fashions, aka LLMs? Alibaba’s Qwen staff goals to search out out with its newest launch, QwQ.

Regardless of having a fraction of DeepSeek R1’s claimed 671 billion parameters, Alibaba touts its comparatively compact 32-billion “reasoning” mannequin as outperforming R1 in choose math, coding, and function-calling benchmarks.

Very like R1, the Qwen staff fine-tuned QwQ utilizing reinforcement studying to enhance its chain-of-thought reasoning for drawback evaluation and breakdown. This strategy sometimes reinforces stepwise reasoning by rewarding fashions for proper solutions, encouraging extra correct responses. Nevertheless, for QwQ, the staff additionally built-in a so-called accuracy verifier and a code execution server to make sure rewards got just for appropriate math options and practical code.

The consequence, the Qwen staff claims, is a mannequin that punches far above its weight class, attaining efficiency on par with and, in some circumstances, edging out far bigger fashions.

Here is how Alibaba claims QwQ stacks up in opposition to the competitors in benchmarks – click on to enlarge

Nevertheless, AI benchmarks aren’t all the time what they appear to be. So, let’s check out how these claims maintain up in the true world, after which we’ll present you get QwQ up and working so you may check it out for your self.

How does it stack up?

We ran QwQ by way of a slate of take a look at prompts starting from common data to spatial reasoning, drawback fixing, arithmetic, and different questions identified to journey up even the very best LLMs.

As a result of the total mannequin requires substantial reminiscence, we ran our checks in two configurations to cater to these of you who’ve a variety of RAM and people of you who do not. First, we evaluated the total mannequin utilizing the QwQ demo on Hugging Face. Then, we examined a 4-bit quantized model on a 24 GB GPU (Nvidia 3090 or AMD Radeon RX 7900XTX) to evaluate the affect of quantization on accuracy.

As for many common data questions, we discovered that QwQ carried out equally to DeepSeek’s 671 billion parameter R1 and different reasoning fashions like OpenAI’s o3-mini, spending a number of seconds to compose its ideas earlier than spitting out the reply to the question.

The place the mannequin stands out, maybe unsurprisingly, is when it is tasked with fixing extra complicated logic, coding, or arithmetic challenges, so we’ll deal with these earlier than addressing a few of its weak factors.

Spatial reasoning

For enjoyable, we determined to start out with a comparatively new spatial-reasoning take a look at developed by the oldsters at Homebrew Analysis as a part of their AlphaMaze challenge.

The take a look at, illustrated above, presents the mannequin with a maze within the type of a textual content immediate, just like the one under. The mannequin’s goal is then to navigate from the origin “O” to the goal “T.”

You're a useful assistant that solves mazes. You may be given a maze represented by a sequence of tokens. The tokens characterize: - Coordinates: <|row-col|> (e.g., <|0-0|>, <|2-4|>) - Partitions: <|no_wall|>, <|up_wall|>, <|down_wall|>, <|left_wall|>, <|right_wall|>, <|up_down_wall|>, and many others. - Origin: <|origin|> - Goal: <|goal|> - Motion: <|up|>, <|down|>, <|left|>, <|proper|>, <|clean|> Your process is to output the sequence of actions (<|up|>, <|down|>, <|left|>, <|proper|>) required to navigate from the origin to the goal, primarily based on the offered maze illustration. Assume step-by-step. At every step, predict solely the subsequent motion token. Output solely the transfer tokens, separated by areas. MAZE: <|0-0|><|up_left_right_wall|><|clean|><|0-1|><|up_down_left_wall|><|clean|><|0-2|><|up_down_wall|><|clean|><|0-3|><|up_wall|><|clean|><|0-4|><|up_right_wall|><|clean|> <|1-0|><|down_left_wall|><|clean|><|1-1|><|up_right_wall|><|clean|><|1-2|><|up_left_wall|><|clean|><|1-3|><|down_right_wall|><|goal|><|1-4|><|down_left_right_wall|><|clean|> <|2-0|><|up_left_right_wall|><|clean|><|2-1|><|left_right_wall|><|clean|><|2-2|><|down_left_wall|><|clean|><|2-3|><|up_down_wall|><|clean|><|2-4|><|up_right_wall|><|clean|> <|3-0|><|left_right_wall|><|clean|><|3-1|><|down_left_wall|><|origin|><|3-2|><|up_down_wall|><|clean|><|3-3|><|up_down_wall|><|clean|><|3-4|><|right_wall|><|clean|> <|4-0|><|down_left_wall|><|clean|><|4-1|><|up_down_wall|><|clean|><|4-2|><|up_down_wall|><|clean|><|4-3|><|up_down_wall|><|clean|><|4-4|><|down_right_wall|><|clean|>

Each our regionally hosted QwQ occasion and the full-sized mannequin have been capable of clear up these puzzles efficiently each time, although every run did take a couple of minutes to complete.

The identical could not be stated of DeepSeek’s R1 and its 32B distill. Each fashions have been capable of clear up the primary maze, however R1 struggled to finish the second, whereas the 32B distill solved it accurately 9 occasions out of ten. This stage of variation is not too stunning contemplating R1 and the distill use utterly totally different base fashions.

Whereas QwQ outperformed DeepSeek on this take a look at, we did observe some unusual conduct with our 4-bit mannequin, which required practically twice as many “thought” tokens to finish the take a look at. At first, it seemed as if this can be as a result of quantization-related losses – a problem we explored right here. However, because it turned out, the quantized mannequin was simply damaged out of the field. After adjusting the hyperparameters – don’t be concerned, we’ll present you repair these in a bit – and the checks run once more, the issue disappeared.

A one-shot code champ?

Since its launch, QwQ has garnered a variety of curiosity from netizens curious as as to whether the mannequin can generate usable code on the primary try in a so-called one-shot take a look at. And this specific problem actually appears to be a brilliant spot for the mannequin.

We requested the mannequin to recreate various comparatively easy video games, particularly Pong, Breakout, Asteroids, and Flappy Chook, in Python utilizing the pygame library.

Pong and Breakout weren’t a lot of a problem for QwQ. After a couple of minutes of labor, the mannequin spat out working variations of every.

In our testing, QwQ was capable of recreate traditional arcade video games like Breakout in a single shot with relative ease – click on to enlarge

Tasked with recreating Asteroids, nevertheless, QwQ fell on its face. Whereas the code ran, each the graphics and sport mechanics have been continuously distorted and buggy. By comparability, on its first try, R1 faithfully recreated the traditional arcade shooter.

Some of us have even managed to get R1 and QwQ to one-shot code a minimalist model of Flappy Chook, which we are able to verify additionally labored with out difficulty. In the event you’re , you will discover the immediate we examined right here.

It has occurred to us that these fashions have been educated on an enormous set of overtly out there supply code, which little question included reproductions of traditional video games. Aren’t the fashions due to this fact simply remembering what they discovered throughout coaching moderately than independently determining sport mechanics from scratch? That is the entire phantasm of those huge neural networks.

Right here you may see the minimalist model QwQ wrote in Python in a single shot – click on to enlarge

No less than in terms of recreating traditional arcade video games, QwQ performs properly past what its parameter depend would possibly recommend, even when it will possibly’t match R1 in each take a look at. To borrow a phrase from the automotive world, there is no alternative for displacement. This would possibly clarify why Alibaba is not stopping with QwQ 32B and has a “Max” model within the works. Not that we count on to be working that regionally anytime quickly.

With all that stated, in comparison with DeepSeek’s equally sized R1 Qwen 2.5 32B distill, Alibaba’s choice to combine a code execution server into its reinforcement studying pipeline could have given it an edge in programming-related challenges.

Can it do math? Certain, however please do not

Traditionally, LLMs have been actually unhealthy at arithmetic – unsurprising given their language-focused coaching. Whereas newer fashions have improved, QwQ nonetheless faces challenges, however not for the explanations you would possibly assume.

QwQ was capable of clear up the entire arithmetic issues we threw at R1 in our earlier deep dive. So, QwQ can deal with primary arithmetic and even some algebra – it simply takes eternally to do it. Asking an LLM to do math appears bonkers to us; calculators and direct computation nonetheless work in 2025.

For instance, to unravel a easy equation like what's 7*43?, QwQ required producing greater than 1,000 tokens over about 23 seconds on an RTX 3090 Ti – all for an issue that may have taken much less time to punch right into a pocket calculator than to sort the immediate.

And the inefficiency does not cease there. To resolve 3394*35979, a much more difficult multiplication drawback past the capabilities of most non-reasoning fashions we have examined, our native occasion of QwQ wanted three minutes and greater than 5,000 tokens to reach at a solution.

That is when it is configured accurately. Earlier than we utilized the hyperparameter repair, that very same equation wanted 9 minutes and practically 12,000 tokens to unravel.

The takeaway right here is that simply because a mannequin can brute pressure its strategy to the precise reply doesn’t suggest it is the precise instrument for the job. As a substitute, we suggest giving QwQ entry to a Python calculator. In the event you’re new to LLM perform calling, try our information right here.

Tasked with fixing the identical 3394*35979 equation utilizing tooling, QwQ’s response time dropped to eight seconds because the calculator dealt with all of the heavy lifting.

However ‘wait’…

In the event you wade by way of QwQ’s “ideas,” you are certain to run into the phrase “wait” lots, notably on complicated duties or phrase issues, because the mannequin checks its work in opposition to various outcomes.

This sort of conduct is frequent for reasoning fashions, however it’s notably irritating when QwQ generates the mistaken reply – even after demonstrating that it acknowledged the proper one throughout its “thought” course of.

We bumped into this drawback a good bit with QwQ. Nevertheless, one of many prompts that demonstrated this most clearly was AutoGen AI’s tackle the traditional wolf, goat, and cabbage drawback. The immediate is a spin on a traditional transportation optimization puzzle and it goes like this:

The trick is that the reply is embedded within the immediate. With three safe compartments, the farmer can transport each animals and his produce in a single journey. However, as a result of it mirrors the traditional puzzle so carefully, fashions typically overlook the compartments.

In our testing, QwQ persistently received this puzzle mistaken, and, peering into its thought course of, it wasn’t as a result of it missed the three compartments. Actually, it acknowledged them however determined that that may be too simple:

Wait, if the farmer can take all three in a single journey, then he can simply do this and be accomplished. However that may make the issue trivial, which is unlikely. So maybe the compartments are separate however the boat can solely carry two objects plus the farmer?

No matter whether or not we ran this take a look at on the total mannequin within the cloud or regionally on our machine, QwQ simply could not clear up this persistently.

Hypersensitive hyperparameters

In comparison with different fashions we have examined, we discovered QwQ to be notably twitchy in terms of its configuration. Initially, Alibaba really useful setting the next sampling parameters:

- Temperature: 0.6

- TopP: 0.95

- TopK: between 20 and 40

Since then, it is up to date its suggestions to additionally set:

- MinP: 0

- Presence Penalty: between 0 and a couple of

Due to what appears to be a bug in Llama.cpp’s handling of sampling parameters – we use Llama.cpp for running inference on models – we found it was also necessary to disable the repeat penalty by setting it to 1.

As we mentioned earlier, the results were pretty dramatic – more than halving the number of “thinking” tokens needed to arrive at an answer. However, this bug appears to be specific to GGUF-quantized versions of the model when running on the Llama.cpp inference engine, which is used by popular apps such as Ollama and LM Studio.

If you plan to use Llama.cpp, we recommend checking out Unsloth’s guide to correcting the sampling order.

Try it for yourself

If you'd like to try out QwQ for yourself, it's fairly easy to get up and running in Ollama. Unfortunately, it does require a GPU with a fair bit of vRAM. We managed to get the model running on a 24 GB 3090 Ti with a large enough context window to be useful.

Technically speaking, you could run the model on your CPU and system memory, but unless you've got a high-end workstation or server lying around, there's a good chance you'll end up waiting half an hour or more for it to respond.

Prerequisites

- You'll need a machine that's capable of running medium-sized LLMs at 4-bit quantization. For this, we recommend a compatible GPU with at least 24 GB of vRAM. You can find a full list of supported cards here.

- For Apple Silicon Macs, we recommend one with at least 32 GB of memory.

This guide also assumes some familiarity with a Linux-world command-line interface as well as Ollama. If this is your first time using the latter, you can find our guide here.

Installing Ollama

Ollama is a popular model runner that provides an easy method for downloading and serving LLMs on consumer hardware. For those running Windows or macOS, head over to ollama.com and download and install it like any other application.

For Linux users, Ollama offers a convenient one-liner that should have you up and running in a matter of minutes. Alternatively, Ollama provides manual installation instructions, which can be found here, for those who don't want to run shell scripts straight from the source.

That one-liner to install Ollama on Linux is:

curl -fsSL https://ollama.com/install.sh | sh

Deploying QwQ

In order to deploy QwQ without running out of memory on our 24 GB card, we need to launch Ollama with a couple of additional flags. Start by closing Ollama if it's already running. For Mac and Windows users, this is as simple as right-clicking on the Ollama icon in the taskbar and clicking close.

Those running systemd-based operating systems, such as Ubuntu, should terminate it by running:

sudo systemctl stop ollama

From there, spin Ollama back up with our special flags using the following command:

OLLAMA_FLASH_ATTENTION=true OLLAMA_KV_CACHE_TYPE=q4_0 ollama serve

In case you're wondering, OLLAMA_FLASH_ATTENTION=true enables a technology called Flash Attention for supported GPUs that helps to keep memory consumption in check when using large context windows. OLLAMA_KV_CACHE_TYPE=q4_0, meanwhile, compresses the key-value cache used to store our context to 4 bits.

Together, these flags should allow us to fit QwQ along with a decent-sized context window into less than 24 GB of vRAM.
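If you'd rather not retype those variables every time, one option on systemd-based distributions is a service drop-in. The sketch below only prints the drop-in contents; the function name is ours, and the install path in the comments assumes the unit is named ollama.service, which matches the systemctl command used earlier but is worth confirming on your system.

```shell
# Print a systemd drop-in that sets the same two variables for the Ollama service.
# ollama_dropin is a hypothetical helper name used for illustration.
ollama_dropin() {
  cat <<'EOF'
[Service]
Environment="OLLAMA_FLASH_ATTENTION=true"
Environment="OLLAMA_KV_CACHE_TYPE=q4_0"
EOF
}

ollama_dropin

# To apply it (needs root; assumes the unit is called ollama.service):
#   sudo mkdir -p /etc/systemd/system/ollama.service.d
#   ollama_dropin | sudo tee /etc/systemd/system/ollama.service.d/override.conf
#   sudo systemctl daemon-reload && sudo systemctl restart ollama
```

With the drop-in in place, the service itself would carry the flags, so there should be no need to stop it and run ollama serve by hand.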

Next, we’ll open a second terminal window and pull down our model by running the command below. Depending on the speed of your internet connection, this could take a few minutes, as the model files are around 20 GB in size.

ollama pull qwq

At this point, we'd normally be able to run the model in our terminal. Unfortunately, at the time of writing, only the model’s temperature parameter has been set correctly.

To resolve this, we’ll create a custom version of the model with a few tweaks that appear to correct the issue. To do this, create a new file in your home directory called Modelfile and paste in the following:

FROM qwq
PARAMETER temperature 0.6
PARAMETER repeat_penalty 1
PARAMETER top_k 40
PARAMETER top_p 0.95
PARAMETER min_p 0
PARAMETER num_ctx 10240

This will configure QwQ to run with optimized parameters and tell Ollama to run the model with a 10,240-token context length by default. If you run into issues with Ollama offloading part of the model onto the CPU, you may need to reduce this to 8,192 tokens.
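If you'd rather generate the file from the terminal, a heredoc does the job. This is a minimal sketch: the MODELFILE_PATH and NUM_CTX variable names are our own, the default path follows the home-directory location described above, and it will overwrite any existing file at that path.

```shell
# Where to write the file; defaults to the home directory used in this guide.
MODELFILE_PATH="${MODELFILE_PATH:-$HOME/Modelfile}"
# Context length; set NUM_CTX=8192 if the model spills onto the CPU.
NUM_CTX="${NUM_CTX:-10240}"

# Unquoted EOF so that ${NUM_CTX} is expanded into the file.
cat > "$MODELFILE_PATH" <<EOF
FROM qwq
PARAMETER temperature 0.6
PARAMETER repeat_penalty 1
PARAMETER top_k 40
PARAMETER top_p 0.95
PARAMETER min_p 0
PARAMETER num_ctx ${NUM_CTX}
EOF

# Print the result so typos are easy to spot.
cat "$MODELFILE_PATH"
```

Running it as NUM_CTX=8192 sh make-modelfile.sh (the script name is just an example) would write the smaller context length instead.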

A word on context length

If you’re not familiar with it, you can think of a model’s context window a bit like its short-term memory. Set it too low, and eventually the model will start forgetting details. This is problematic for reasoning models, as their “thought” or “reasoning” tokens can burn through the context window pretty quickly.

To remedy this, QwQ supports a fairly large 131,072 (128K) token context window. Unfortunately for anyone interested in running the model at home, you probably don’t have enough memory to get anywhere close to that.
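For a rough sense of why, here's a back-of-the-envelope estimate of the key-value cache on its own. The layer count, KV head count, and head dimension are our assumptions about QwQ's architecture rather than figures from this article, so check them against the model's config.json. The block sizes reflect the f16 format (2 bytes per value) and the q4_0 format (18 bytes per block of 32 values).

```shell
# Assumed architecture values (not taken from the article); verify before relying on them.
LAYERS=64
KV_HEADS=8
HEAD_DIM=128

# kv_cache_mib TOKENS BLOCK_BYTES BLOCK_ELEMS
# Prints the approximate KV cache size in MiB for the given context length.
kv_cache_mib() {
  tokens=$1
  block_bytes=$2
  block_elems=$3
  # Keys and values are both cached, hence the factor of 2.
  elems_per_token=$((2 * LAYERS * KV_HEADS * HEAD_DIM))
  bytes=$((tokens * elems_per_token * block_bytes / block_elems))
  echo $((bytes / 1024 / 1024))
}

for tokens in 10240 131072; do
  echo "$tokens tokens: f16 $(kv_cache_mib "$tokens" 2 1) MiB, q4_0 $(kv_cache_mib "$tokens" 18 32) MiB"
done
# 10240 tokens: f16 2560 MiB, q4_0 720 MiB
# 131072 tokens: f16 32768 MiB, q4_0 9216 MiB
```

Under those assumptions, the full 131,072-token window would need 32 GiB of cache at f16 or 9 GiB at q4_0, and that's before counting the roughly 20 GB of model files. The estimate ignores compute buffers and other overhead, so treat it as a lower bound.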

Still in your home directory, run the following command to generate a new model with the fixes applied. We’ll name the new model qwq-fixed:

ollama create qwq-fixed

We’ll then test that it's working by loading up the model and chatting with it in the terminal:

ollama run qwq-fixed
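To confirm the new model actually picked up the settings, you can ask Ollama to print a model's parameters. The wrapper below is a small sketch; show_params is a name we made up, and it simply bails out if the ollama binary isn't on your PATH.

```shell
# show_params MODEL
# Prints the parameters Ollama has stored for MODEL, or returns 127 if ollama is missing.
show_params() {
  model=$1
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found on PATH" >&2
    return 127
  fi
  ollama show "$model" --parameters
}

# Inspect the custom model; '|| true' keeps the script going if the check fails.
show_params qwq-fixed || true
```

Running show_params qwq as well gives you a way to compare the stock model's defaults against the custom one.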

If you'd like to tweak any of the hyperparameters we set earlier, for example, the context length or top_k settings, you can do so by entering the following after the model has loaded:

/set parameter top_k <value>
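The same kind of override can also be made per request through Ollama's HTTP API, which accepts an options object alongside the prompt. This is a sketch under a few assumptions: the server is on Ollama's default address, the prompt is a placeholder, and the top_k and num_ctx values are just examples drawn from the ranges discussed above.

```shell
# Ollama's default listen address; override OLLAMA_URL if yours differs.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Request body: per-request settings go under "options".
payload=$(cat <<'EOF'
{
  "model": "qwq-fixed",
  "prompt": "Why is the sky blue?",
  "stream": false,
  "options": { "top_k": 20, "num_ctx": 8192 }
}
EOF
)

printf '%s\n' "$payload"

# Only send the request if a server is actually answering.
if curl -fsS "$OLLAMA_URL/api/version" >/dev/null 2>&1; then
  curl -fsS "$OLLAMA_URL/api/generate" -d "$payload" || echo "request failed" >&2
fi
```

Anything left out of options falls back to whatever the model's Modelfile specifies, so you only need to list the values you want to change.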

Finally, if you’d prefer to use QwQ in a more ChatGPT-style user interface, we recommend checking out our retrieval-augmented generation guide, which will walk you through the process of deploying a model using Open WebUI in a Docker container.

The Register aims to bring you more on using LLMs and other AI technologies – without the hype – soon. We want to pull back the curtain and show how this stuff really fits together. If you have any burning questions on AI infrastructure, software, or models, we'd love to hear about them in the comments section below. ®

Editor’s note: The Register was provided an RTX 6000 Ada Generation graphics card by Nvidia, an Arc A770 GPU by Intel, and a Radeon Pro W7900 DS by AMD to support stories like this. None of these vendors had any input as to the content of this or other articles.