Deep Learning (DL) applications often require processing video data for tasks such as object detection, classification, and segmentation. However, conventional video processing pipelines are often inefficient for deep learning inference, leading to performance bottlenecks. In this post, we will leverage PyTorch and FFmpeg with NVIDIA hardware acceleration to remove these bottlenecks.

The inefficiency comes from how video frames are typically decoded and transferred between CPU and GPU. The standard workflow found in the majority of tutorials follows this structure:

- Decode Frames on CPU: Video files are first decoded into raw frames using CPU-based decoding tools (e.g., OpenCV, FFmpeg without GPU support).

- Transfer to GPU: These frames are then transferred from CPU to GPU memory to perform deep learning inference using frameworks like TensorFlow, PyTorch, ONNX Runtime, etc.

- Inference on GPU: Once the frames are in GPU memory, the model performs inference.

- Transfer Back to CPU (if needed): Some post-processing steps may require data to be moved back to the CPU.

This CPU-GPU transfer process introduces a significant performance bottleneck, especially when processing high-resolution videos at high frame rates. The unnecessary memory copies and context switches slow down the overall inference speed, limiting real-time processing capabilities.
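To get a feel for the volume of data involved, here is a back-of-the-envelope calculation of how many bytes cross the CPU-GPU boundary per second for uncompressed RGB frames. The resolutions and frame rates are illustrative assumptions, not measurements from this post, and raw volume is only one part of the per-frame cost.

```python
def frame_bytes(width, height, channels=3, bytes_per_value=1):
    """Size in bytes of one uncompressed frame."""
    return width * height * channels * bytes_per_value


def transfer_rate_mb_s(width, height, fps, bytes_per_value=1):
    """Megabytes per second copied to the GPU if every frame is transferred."""
    return frame_bytes(width, height, bytes_per_value=bytes_per_value) * fps / 1e6


# Illustrative resolutions and frame rates (assumptions, not benchmark inputs).
for name, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
    for fps in (30, 60):
        as_uint8 = transfer_rate_mb_s(w, h, fps)
        as_float32 = transfer_rate_mb_s(w, h, fps, bytes_per_value=4)
        print(f"{name} @ {fps} fps: {as_uint8:.0f} MB/s (uint8), {as_float32:.0f} MB/s (float32)")
```

A single 1080p RGB frame is about 6.2 MB as `uint8`, and four times that if it is converted to `float32` before the copy.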

For example, the following snippet shows the typical video processing pipeline that you come across when you are starting to learn deep learning:
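A minimal sketch of that pipeline, assuming OpenCV for CPU decoding and PyTorch for inference. The helper names `decode_on_cpu` and `run_typical_pipeline` are illustrative, and `model` stands in for any `torch.nn.Module` that returns a tensor:

```python
import numpy as np
import torch


def decode_on_cpu(video_path):
    """Yield frames as (H, W, 3) uint8 BGR arrays decoded on the CPU."""
    import cv2  # imported lazily so the inference helper works without OpenCV

    cap = cv2.VideoCapture(video_path)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            yield frame
    finally:
        cap.release()


@torch.inference_mode()
def run_typical_pipeline(frames, model, device="cuda"):
    """Copy each CPU frame to `device`, run the model, copy the result back."""
    model = model.to(device).eval()
    results = []
    for frame in frames:
        rgb = np.ascontiguousarray(frame[..., ::-1])  # BGR -> RGB
        tensor = torch.from_numpy(rgb).permute(2, 0, 1).float().div(255).unsqueeze(0)
        tensor = tensor.to(device)    # host-to-device copy, once per frame
        output = model(tensor)        # inference on the target device
        results.append(output.cpu())  # device-to-host copy for post-processing
    return results
```

It would be called as `run_typical_pipeline(decode_on_cpu("video.mp4"), model)`, where `video.mp4` is a placeholder path. Note the two copies inside the loop: they run once per frame.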

The Solution: GPU-Based Video Decoding and Inference

A more efficient approach is to keep the entire pipeline on the GPU, from video decoding to inference, eliminating redundant CPU-GPU transfers. This can be achieved using FFmpeg with NVIDIA GPU hardware acceleration.

Key Optimisations

- GPU-Accelerated Video Decoding: Instead of using CPU-based decoding, we leverage FFmpeg with NVIDIA GPU acceleration (NVDEC) to decode video frames directly on the GPU.

- Zero-Copy Frame Processing: The decoded frames remain in GPU memory, avoiding unnecessary memory transfers.

- GPU-Optimized Inference: Once the frames are decoded, we perform inference directly using any model on the same GPU, significantly reducing latency.

Hands on!

Prerequisites

In order to achieve the aforementioned improvements, we will be using the following dependencies:

Installation

For a deeper insight into how FFmpeg is installed with NVIDIA GPU acceleration, please follow these instructions.

Tested with:

- System: Ubuntu 22.04

- NVIDIA Driver Version: 550.120

- CUDA Version: 12.4

- Torch: 2.4.0

- Torchaudio: 2.4.0

- Torchvision: 0.19.0

1. Install the NV-Codecs

2. Clone and configure FFmpeg

3. Validate whether the installation was successful with torchaudio.utils
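For the validation step, one possible check is to ask torchaudio which video decoders its FFmpeg build exposes and look for the NVDEC-backed ones, whose names end in `_cuvid`. This is a sketch that assumes a torchaudio version shipping `torchaudio.utils.ffmpeg_utils` (such as the 2.4.0 listed above); the helper name `nvdec_decoders` is illustrative.

```python
try:
    from torchaudio.utils import ffmpeg_utils
except ImportError:  # torchaudio missing, or a version without these utilities
    ffmpeg_utils = None


def nvdec_decoders(decoders=None):
    """Return the sorted names of NVDEC-backed (`*_cuvid`) video decoders.

    `decoders` is a mapping of decoder name to description. When omitted,
    it is queried from the FFmpeg build that torchaudio has loaded.
    """
    if decoders is None:
        if ffmpeg_utils is None:
            raise RuntimeError("torchaudio with FFmpeg support is required")
        decoders = ffmpeg_utils.get_video_decoders()
    return sorted(name for name in decoders if name.endswith("_cuvid"))
```

On a working install, `nvdec_decoders()` would be expected to include entries such as `h264_cuvid`. An empty list suggests that FFmpeg was built without NVDEC support.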

Time to code an optimised pipeline!

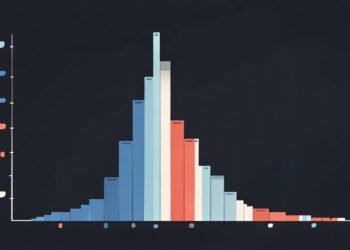
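In text form, a minimal sketch of such a pipeline might look like the following. It rests on several assumptions: torchaudio's `StreamReader` is used as the bridge to FFmpeg, the input is H.264 (hence the `h264_cuvid` decoder), and hardware-decoded frames arrive as YUV rather than RGB, so a conversion runs on the GPU. The helper names `yuv_to_rgb` and `run_gpu_pipeline` are illustrative, and the full-range BT.601 coefficients are an assumption that may not match every video.

```python
import torch


def yuv_to_rgb(frames: torch.Tensor) -> torch.Tensor:
    """Convert a uint8 (N, 3, H, W) YUV batch to float RGB in [0, 1].

    Runs on whatever device `frames` lives on. Assumes full-range BT.601.
    """
    frames = frames.to(torch.float32)
    y = frames[:, 0]
    u = frames[:, 1] - 128.0
    v = frames[:, 2] - 128.0
    r = y + 1.402 * v
    g = y - 0.344136 * u - 0.714136 * v
    b = y + 1.772 * u
    return (torch.stack([r, g, b], dim=1) / 255.0).clamp(0.0, 1.0)


@torch.inference_mode()
def run_gpu_pipeline(video_path, model, device="cuda:0", batch_size=8):
    """Decode with NVDEC and run inference without leaving GPU memory."""
    from torchaudio.io import StreamReader  # needs FFmpeg built with NVDEC

    model = model.to(device).eval()
    reader = StreamReader(video_path)
    reader.add_video_stream(
        frames_per_chunk=batch_size,
        decoder="h264_cuvid",  # assumption: the input video is H.264
        hw_accel=device,       # decoded frames are returned as CUDA tensors
    )
    outputs = []
    for (chunk,) in reader.stream():  # one (N, 3, H, W) chunk per iteration
        outputs.append(model(yuv_to_rgb(chunk)))
    return outputs
```

Compared with the conventional loop, there is no per-frame `.to(device)` call here: the decoder writes straight into GPU memory, and the colour conversion and inference operate on those tensors in place.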

Benchmarking

To benchmark whether it's making any difference, we will be using this video from Pexels by Pawel Perzanowski. Since most videos there are really short, I've stacked the same video several times to provide results with different video lengths. The original video is 32 seconds long, which gives us a total of 960 frames. The new modified videos have 5520 and 9300 frames respectively.

Original video

- typical workflow: 28.51s

- optimised workflow: 24.2s

Okay… it doesn't look like a real improvement, right? Let's test it with longer videos.

Modified video v1 (5520 frames)

- typical workflow: 118.72s

- optimised workflow: 100.23s

Modified video v2 (9300 frames)

- typical workflow: 292.26s

- optimised workflow: 240.85s

As the video duration increases, the benefits of the optimisation become more evident: the time saved grows from roughly 15% on the original clip to about 18% in the longest test case (292.26s down to 240.85s). These performance gains are particularly important when handling large video datasets or in real-time video analysis tasks, where small efficiency improvements accumulate into substantial time savings.
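The percentages can be reproduced from the timings listed above. The short script below computes both the relative time saved and the equivalent speedup factor for each test case; the function names are illustrative.

```python
# (typical workflow, optimised workflow) wall-clock times in seconds, from the results above.
timings = {
    "original (960 frames)": (28.51, 24.20),
    "modified v1 (5520 frames)": (118.72, 100.23),
    "modified v2 (9300 frames)": (292.26, 240.85),
}


def time_saved_pct(baseline, optimised):
    """Percentage of the baseline time that the optimised run saves."""
    return (baseline - optimised) / baseline * 100


def speedup(baseline, optimised):
    """How many times faster the optimised run is."""
    return baseline / optimised


for name, (baseline, optimised) in timings.items():
    print(f"{name}: {time_saved_pct(baseline, optimised):.1f}% less time, "
          f"{speedup(baseline, optimised):.2f}x speedup")
```

Note that "time saved" and "speedup" are different measures: saving about 18% of the processing time corresponds to running roughly 1.21 times faster.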

Conclusion

In today's post, we have explored two video processing pipelines: the typical one, where frames are copied from CPU to GPU, introducing noticeable bottlenecks, and an optimised one, in which frames are decoded on the GPU and passed directly to inference, saving a considerable amount of time as video duration increases.