The ever-changing nature of the world around us poses a significant challenge for the development of AI models. Often, models are trained on longitudinal data in the hope that the training data will accurately represent the inputs the model may receive in the future. More generally, the default assumption that all training data are equally relevant often breaks in practice. For example, the figure below shows images from the CLEAR nonstationary learning benchmark. It illustrates how the visual features of objects evolve significantly over a 10-year span (a phenomenon we refer to as slow concept drift), which poses a challenge for object categorization models.

Figure: Sample images from the CLEAR benchmark. (Adapted from Lin et al.)

Alternative approaches, such as online and continual learning, repeatedly update a model with small amounts of recent data in order to keep it current. This implicitly prioritizes recent data, because what was learned from past data is gradually erased by subsequent updates. However, in the real world, different kinds of information lose relevance at different rates, so there are two key issues: 1) by design, these approaches focus exclusively on the most recent data and lose any signal from older data once it is erased; and 2) contributions from data instances decay uniformly over time, irrespective of the contents of the data.

In our recent work, "Instance-Conditional Timescales of Decay for Non-Stationary Learning", we propose to assign each instance an importance score during training in order to maximize model performance on future data. To accomplish this, we employ an auxiliary model that produces these scores from the training instance as well as its age. This auxiliary model is learned jointly with the primary model. We address both of the above challenges and achieve significant gains over other robust learning methods on a range of benchmark datasets for nonstationary learning. For instance, on a recent large-scale benchmark for nonstationary learning (~39M photos over a 10-year period), we show up to 15% relative accuracy gains through learned reweighting of the training data.

The challenge of concept drift for supervised learning

To gain quantitative insight into slow concept drift, we built classifiers for a recent photo categorization task comprising roughly 39M photographs sourced from social media websites over a 10-year period. We compared two regimes. Offline training iterated over all the training data multiple times in random order. Continual training iterated multiple times over each month of data in sequential (temporal) order. We measured model accuracy both during the training period and during a subsequent period in which both models were frozen, i.e., not updated further on new data (shown below). At the end of the training period (left panel, x-axis = 0), both approaches have seen the same amount of data but show a large performance gap. This is due to catastrophic forgetting, a problem in continual learning where a model's knowledge of data from early in the training sequence is diminished in an uncontrolled manner. On the other hand, forgetting has its advantages: over the test period (shown on the right), the continually trained model degrades much less rapidly than the offline model because it is less dependent on older data. The decay of both models' accuracy in the test period confirms that the data is indeed evolving over time and that both models become increasingly less relevant.

Figure: Comparing offline and continually trained models on the photo classification task.
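The two training regimes compared above differ only in the order in which examples are visited. The sketch below assumes the data is already grouped by month. The `months` dictionary and both function names are hypothetical and are not taken from the paper. Each function returns the sequence of example ids that its regime would train on; the model update itself is omitted.

```python
import random
from typing import Dict, List


def offline_order(months: Dict[int, List[int]], epochs: int, seed: int = 0) -> List[int]:
    """Offline training: several passes over *all* examples, each pass in random order."""
    rng = random.Random(seed)
    all_ids = [i for m in sorted(months) for i in months[m]]
    order: List[int] = []
    for _ in range(epochs):
        ids = all_ids[:]
        rng.shuffle(ids)  # time information is ignored
        order.extend(ids)
    return order


def continual_order(months: Dict[int, List[int]], epochs_per_month: int, seed: int = 0) -> List[int]:
    """Continual training: months in temporal order, several passes over each month."""
    rng = random.Random(seed)
    order: List[int] = []
    for m in sorted(months):  # never revisit an earlier month
        for _ in range(epochs_per_month):
            ids = months[m][:]
            rng.shuffle(ids)
            order.extend(ids)
    return order


months = {0: [0, 1, 2], 1: [3, 4], 2: [5, 6, 7]}  # toy data: month index -> example ids
off = offline_order(months, epochs=2)
cont = continual_order(months, epochs_per_month=2)
```

Both orders visit every example the same number of times, which is why the two models have seen the same amount of data at the end of training. The difference is that the continual order ends with repeated passes over the most recent month, so the last updates come only from recent data.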

Time-sensitive reweighting of training data

We design a method that combines the benefits of offline learning (the flexibility to effectively reuse all available data) and continual learning (the ability to downplay older data) to address slow concept drift. We build upon offline learning, then add careful control over the influence of past data and an optimization objective, both designed to reduce model decay in the future.

Suppose we wish to train a model, M, given some training data collected over time. We propose to also train a helper model that assigns a weight to each data point based on its contents and age. This weight scales the contribution of that data point to the training objective for M. The weights are chosen to improve the performance of M on future data.
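Concretely, the weight enters M's objective as a per-example multiplier on the loss. The minimal NumPy sketch below assumes a per-example loss vector. For the gradient it assumes a linear model M with squared error. The function names are hypothetical, and the paper may normalize the objective differently.

```python
import numpy as np


def weighted_objective(per_example_loss: np.ndarray, weights: np.ndarray) -> float:
    """Training objective for M: each example's loss is scaled by its helper-assigned weight.

    Assumption: weights are non-negative and the objective is averaged over examples.
    """
    per_example_loss = np.asarray(per_example_loss, dtype=float)
    weights = np.asarray(weights, dtype=float)
    if per_example_loss.shape != weights.shape:
        raise ValueError("expected exactly one weight per example")
    if np.any(weights < 0):
        raise ValueError("weights must be non-negative")
    return float(np.mean(weights * per_example_loss))


def weighted_linear_grad(theta: np.ndarray, X: np.ndarray, y: np.ndarray,
                         weights: np.ndarray) -> np.ndarray:
    """Gradient of mean_i(w_i * 0.5 * (x_i . theta - y_i)^2) w.r.t. theta, for a linear M."""
    residual = X @ theta - y
    return X.T @ (weights * residual) / len(y)


losses = np.array([1.0, 4.0, 9.0])
print(weighted_objective(losses, np.ones(3)))                 # uniform weights: plain average
print(weighted_objective(losses, np.array([1.0, 0.5, 0.0])))  # third example is ignored
```

An example with weight 0 contributes nothing to the objective or its gradient. Uniform weights recover ordinary offline training.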

In our work, we describe how the helper model can be meta-learned, i.e., learned alongside M in a manner that helps the learning of M itself. A key design choice is that the helper model separates instance-related and age-related contributions in a factored manner. Specifically, we set the weight by combining contributions from multiple fixed timescales of decay, and we learn an approximate "assignment" of each instance to its most suitable timescales. In our experiments, this form of the helper model outperforms many other alternatives we considered, ranging from unconstrained joint functions to a single timescale of decay (exponential or linear), owing to its combination of simplicity and expressivity. Full details can be found in the paper.
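The sketch below is one plausible reading of the factored helper, written in NumPy under our own assumptions:

- M is a linear regressor.
- The decay curves are exponential, with hand-picked timescales `taus`.
- The assignment is a softmax over a linear function of the input.
- Meta-learning is approximated by a one-step lookahead: the loss on more recent held-out data is differentiated through a single weighted gradient step of M.

All names and choices here are hypothetical, and the exact parametrization in the paper may differ.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Row-wise softmax."""
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def helper_weights(V, b, taus, X, ages):
    """Factored weight w(x, a) = sum_k pi_k(x) * exp(-a / tau_k).

    pi(x) = softmax(V x + b) softly assigns instance x to K *fixed* decay
    timescales `taus`; only V (K x d) and b (K,) are learned.
    Returns the weights plus the intermediates needed for gradients.
    """
    pi = softmax(X @ V.T + b)                       # (N, K) instance-conditional assignment
    decay = np.exp(-ages[:, None] / taus[None, :])  # (N, K) age-only decay curves
    return (pi * decay).sum(axis=1), pi, decay


def val_loss_after_step(V, b, taus, theta, X, y, ages, Xv, yv, eta):
    """Meta-objective: squared error on later data after one weighted GD step on linear M."""
    w, _, _ = helper_weights(V, b, taus, X, ages)
    theta_new = theta - eta * X.T @ (w * (X @ theta - y)) / len(y)
    return 0.5 * np.mean((Xv @ theta_new - yv) ** 2)


def meta_grads(V, b, taus, theta, X, y, ages, Xv, yv, eta):
    """Analytic gradient of val_loss_after_step with respect to the helper's V and b."""
    w, pi, decay = helper_weights(V, b, taus, X, ages)
    r = X @ theta - y
    theta_new = theta - eta * X.T @ (w * r) / len(y)
    g_val = Xv.T @ (Xv @ theta_new - yv) / len(yv)      # dL_val / dtheta_new
    dL_dw = -eta * r * (X @ g_val) / len(y)             # chain rule through the GD step
    dL_dz = dL_dw[:, None] * pi * (decay - w[:, None])  # dw_i/dz_ij = pi_ij (decay_ij - w_i)
    return dL_dz.T @ X, dL_dz.sum(axis=0)


def joint_step(V, b, taus, theta, X, y, ages, Xv, yv, eta=0.1, meta_lr=0.1):
    """Update the helper by its meta-gradient, then update M with the refreshed weights."""
    dV, db = meta_grads(V, b, taus, theta, X, y, ages, Xv, yv, eta)
    V, b = V - meta_lr * dV, b - meta_lr * db
    w, _, _ = helper_weights(V, b, taus, X, ages)
    theta = theta - eta * X.T @ (w * (X @ theta - y)) / len(y)
    return V, b, theta
```

In a training loop, `joint_step` would be called repeatedly. Under this sketch's assumption, `(Xv, yv)` is drawn from the most recent slice of the training period as a stand-in for "future" data. Because the decay curves are fixed and only the assignment is learned, the helper stays small.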

Instance weight scoring

The top figure below shows that our learned helper model indeed up-weights more modern-looking objects in the CLEAR object recognition challenge, while older-looking objects are correspondingly down-weighted. On closer examination (bottom figure below, a gradient-based feature importance analysis), we see that the helper model focuses on the primary object within the image. It does not rely on, e.g., background features that may be spuriously correlated with instance age.

Figure: Sample images from the CLEAR benchmark (camera and computer categories) assigned the highest and lowest weights, respectively, by our helper model.

Figure: Feature importance analysis of our helper model on sample images from the CLEAR benchmark.
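Gradient-based feature importance asks how sensitive the helper's score is to each input feature. The sketch below estimates the absolute gradient of the score with respect to each feature using central differences, so that it runs with NumPy alone. For real image models one would use automatic differentiation instead. `toy_score` is a hypothetical stand-in for the helper model: by construction, it looks only at the central 2x2 patch of a 4x4 "image".

```python
from typing import Callable

import numpy as np


def gradient_saliency(score_fn: Callable[[np.ndarray], float], x: np.ndarray,
                      eps: float = 1e-4) -> np.ndarray:
    """Absolute gradient |d score / d x_j| per input feature, via central differences."""
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for j in np.ndindex(x.shape):  # iterate over every pixel/feature index
        step = np.zeros_like(x)
        step[j] = eps
        grad[j] = (score_fn(x + step) - score_fn(x - step)) / (2 * eps)
    return np.abs(grad)


def toy_score(img: np.ndarray) -> float:
    """Hypothetical helper score that depends only on the central 'object' region."""
    return 1.0 / (1.0 + np.exp(-img[1:3, 1:3].sum()))


saliency = gradient_saliency(toy_score, np.zeros((4, 4)))
```

Background pixels receive zero importance because the toy score ignores them. The analysis shown above applies a gradient-based version of this idea to the learned helper.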

Results

Gains on large-scale data

We first study the large-scale photo categorization task (PCAT) on the YFCC100M dataset discussed earlier, using the first five years of data for training and the next five years as test data. Our method (shown in red below) improves substantially over the no-reweighting baseline (black) as well as over many other robust learning techniques. Interestingly, our method deliberately trades off accuracy on the distant past (training data unlikely to recur in the future) in exchange for marked improvements in the test period. Also, as desired, our method degrades less than the other baselines in the test period.

Figure: Comparison of our method and relevant baselines on the PCAT dataset.
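The evaluation protocol above splits the data by time rather than at random. The small sketch below assumes that each example carries a year index counted from the start of the dataset, so `first_test_year=5` reproduces the five-years-train, five-years-test split. The function names are hypothetical.

```python
import numpy as np


def temporal_split(years, first_test_year: int):
    """Boolean masks: train on everything before `first_test_year`, test on the rest."""
    years = np.asarray(years)
    return years < first_test_year, years >= first_test_year


def accuracy_by_year(years, y_true, y_pred):
    """Accuracy per year, to track how a frozen model degrades over the test period."""
    years, y_true, y_pred = map(np.asarray, (years, y_true, y_pred))
    return {int(t): float(np.mean(y_true[years == t] == y_pred[years == t]))
            for t in np.unique(years)}


train_mask, test_mask = temporal_split(np.array([0, 1, 4, 5, 6, 9]), first_test_year=5)
```

Plotting `accuracy_by_year` for a frozen model over the test years gives the kind of degradation curve compared in the figure above.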

Broad applicability

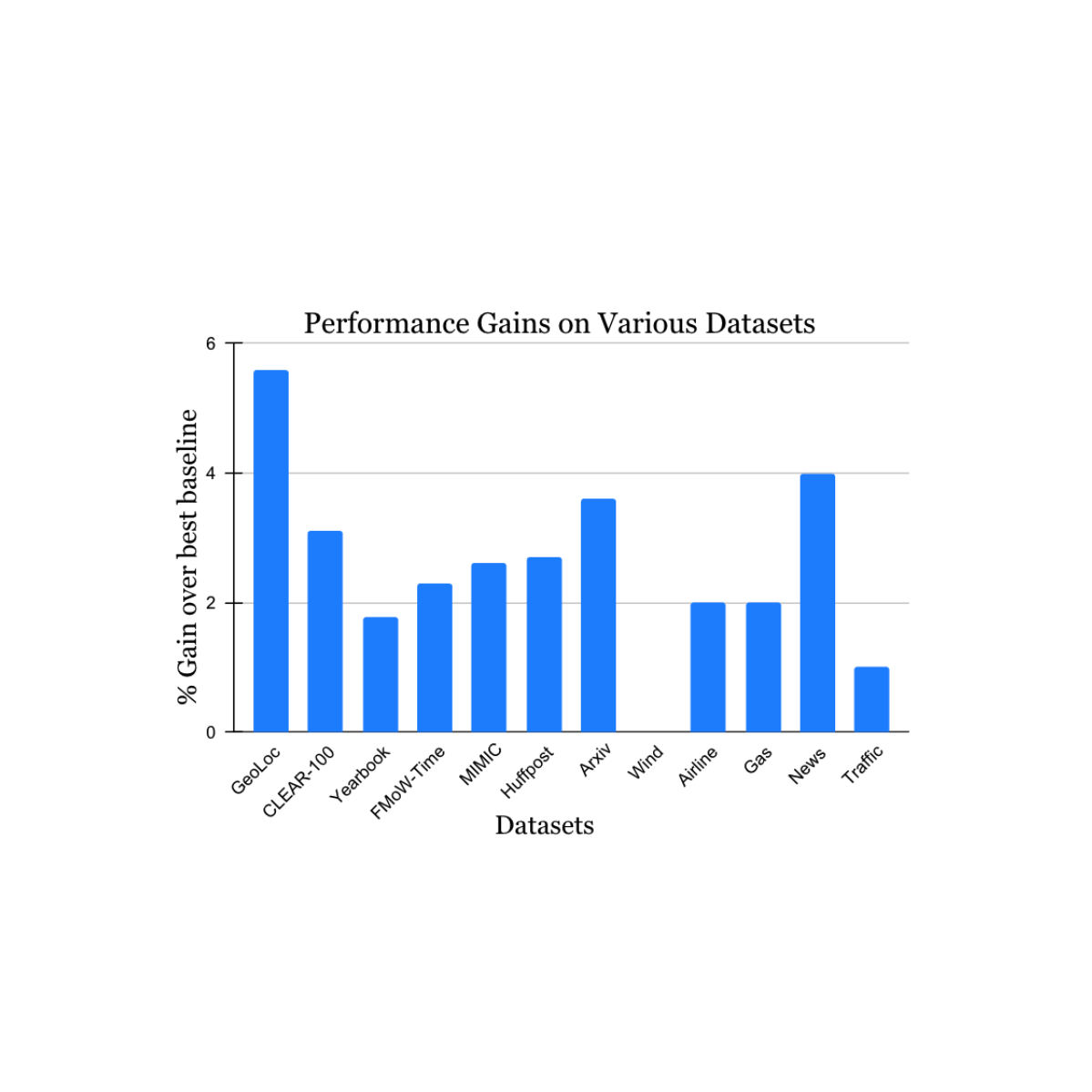

We validated our findings on a wide range of nonstationary learning challenge datasets sourced from the academic literature (see 1, 2, 3, 4 for details). These datasets span a variety of data sources and modalities (photos, satellite images, social media text, medical records, sensor readings, tabular data) and sizes (ranging from 10k to 39M instances). We report significant gains in the test period compared to the closest published benchmark method for each dataset (shown below). Note that the previous best-known method may differ from dataset to dataset. These results showcase the broad applicability of our approach.

Figure: Performance gain of our method on a variety of tasks exhibiting natural concept drift. Reported gains are relative to the previous best-known method for each dataset.

Extensions to continual learning

Finally, we consider an interesting extension of our work. The work above described how offline learning can be extended to handle concept drift using ideas inspired by continual learning. However, offline learning is sometimes infeasible, for example when the amount of available training data is too large to maintain or process. We adapted our approach to continual learning in a straightforward manner by applying temporal reweighting within each bucket of data used to sequentially update the model. This variant still retains some limitations of continual learning. For example, model updates are performed only on the most recent data, and all optimization decisions (including our reweighting) are made over that data alone. Nevertheless, our approach consistently beats standard continual learning as well as a wide range of other continual learning algorithms on the photo categorization benchmark (see below). Since our approach is complementary to the ideas behind many of the baselines compared here, we anticipate even larger gains when it is combined with them.

Figure: Results of our method adapted to continual learning, compared to the latest baselines.
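The continual variant can be sketched as a loop over time-ordered buckets, in which the weights are computed from, and applied to, the current bucket only. This minimal sketch assumes a linear model M and plain gradient descent. It also assumes a hypothetical `weight_fn(X, ages)` that plays the role of the helper model, i.e., any callable returning one non-negative weight per example. It is not the paper's implementation.

```python
import numpy as np


def continual_reweighted_training(buckets, weight_fn, theta, eta=0.1, steps_per_bucket=10):
    """Update a linear model bucket by bucket, reweighting only within the current bucket.

    `buckets` is an iterable of (X, y, ages) tuples in temporal order; `weight_fn`
    is a hypothetical stand-in for the helper model.
    """
    theta = np.array(theta, dtype=float)
    for X, y, ages in buckets:
        w = weight_fn(X, ages)  # weights are computed from the current bucket only
        for _ in range(steps_per_bucket):
            residual = X @ theta - y
            theta = theta - eta * X.T @ (w * residual) / len(y)  # weighted GD step
    return theta
```

Because each bucket is visited once, in order, the sketch inherits the limitation noted above: both the model update and the reweighting see only the most recent bucket.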

Conclusion

We addressed the challenge of data drift in learning by combining the strengths of previous approaches: offline learning, with its effective reuse of data, and continual learning, with its emphasis on more recent data. We hope that our work helps improve model robustness to concept drift in practice, and that it generates increased interest and new ideas for addressing the ubiquitous problem of slow concept drift.

Acknowledgements

We thank Mike Mozer for many interesting discussions in the early phase of this work, as well as for very helpful advice and feedback during its development.