Image by Author

# Introduction

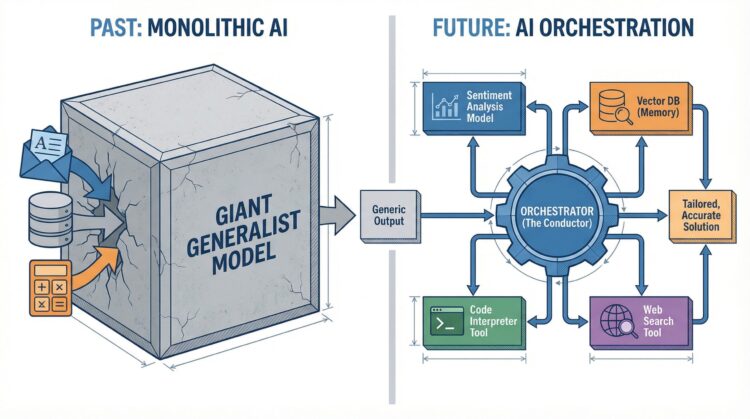

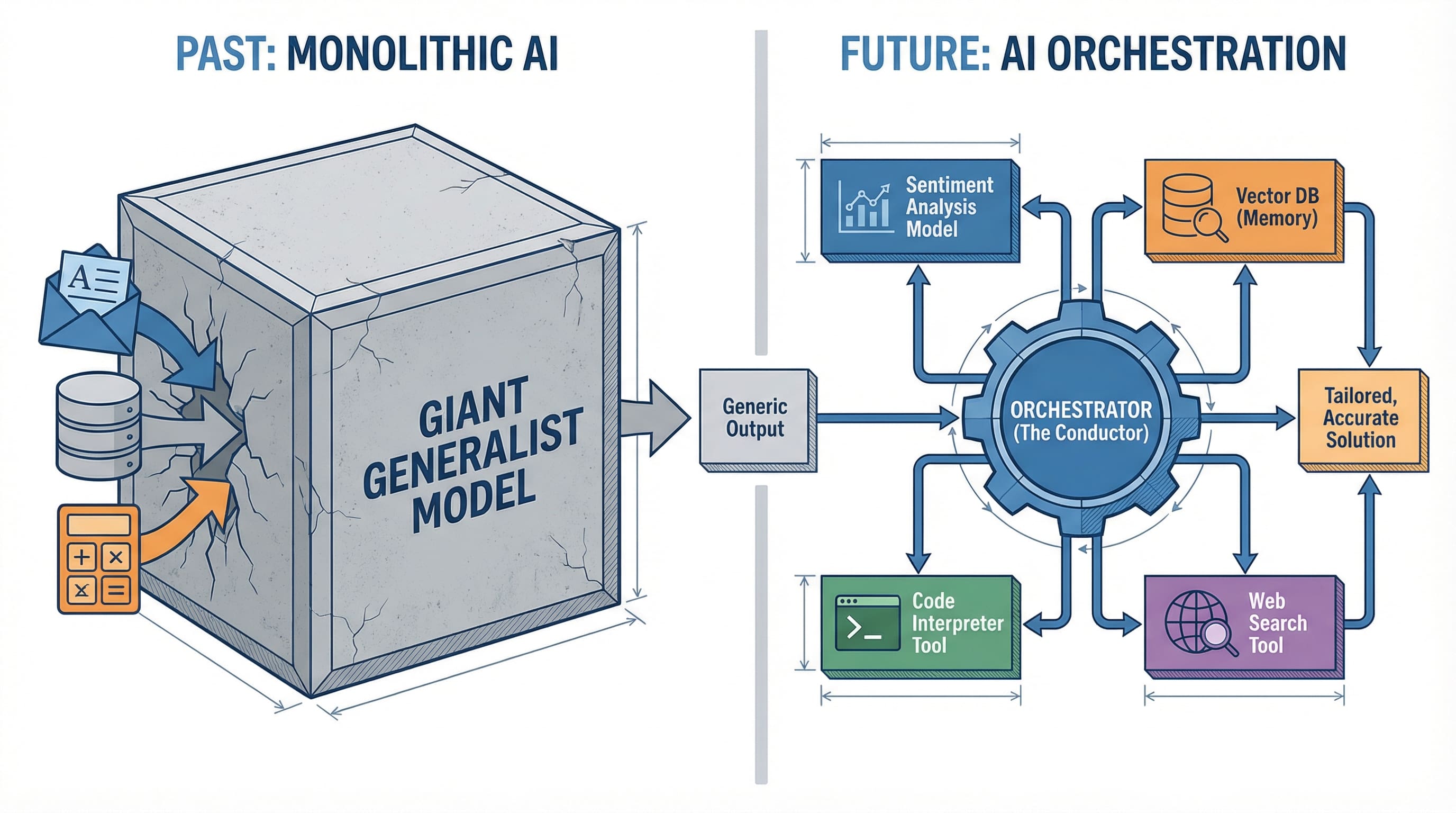

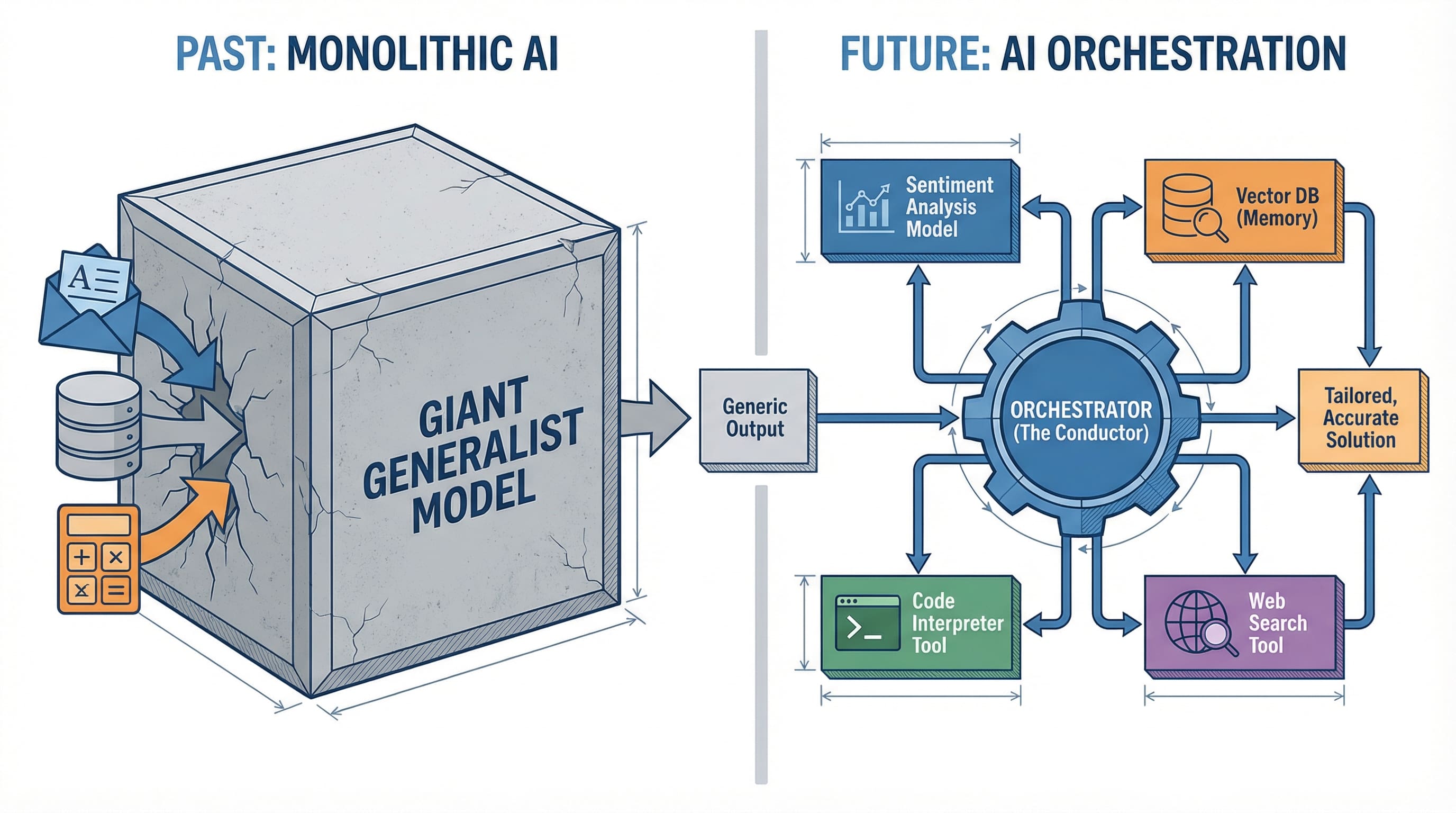

For the past two years, the AI industry has been locked in a race to build ever-larger language models. GPT-4, Claude, Gemini: each promised to be the singular solution to every AI problem. But while companies competed to create the biggest brain, a quiet revolution was happening in production environments. Developers stopped asking “which model is best?” and started asking “how do I make multiple models work together?”

This shift marks the rise of AI orchestration, and it’s changing how we build intelligent applications.

# Why One AI Can’t Rule Them All

The dream of a single, omnipotent AI model is appealing. One API call, one response, one bill. But reality has proven more complex.

Consider a customer service application. You need sentiment analysis to gauge customer emotion, knowledge retrieval to find relevant information, response generation to craft replies, and quality checking to ensure accuracy. While GPT-4 can technically handle all these tasks, each requires different optimization. A model trained to excel at sentiment analysis makes different architectural tradeoffs than one optimized for text generation.

The breakthrough isn’t in building one model to rule them all. It’s in coordinating multiple specialists.

This mirrors a pattern we’ve seen before in software architecture. Microservices replaced monolithic applications not because any single microservice was superior, but because coordinated specialized services proved more maintainable, scalable, and effective. AI is having its microservices moment.

# The Three-Layer Stack

Understanding modern AI applications requires thinking in layers. The architecture that has emerged from production deployments looks remarkably consistent.

// The Model Layer

The Model Layer sits at the foundation. This includes your LLMs, whether GPT-4, Claude, local models like Llama, or specialized models for vision, code, or analysis. Each model brings specific capabilities: reasoning, generation, classification, or transformation. The key insight is that you’re not choosing one model. You’re composing a set.

// The Tool Layer

The Tool Layer enables action. Language models can think but can’t do anything on their own. They need tools to interact with the world. This layer includes web search, database queries, API calls, code execution environments, and file systems. When Claude “searches the web” or ChatGPT “runs Python code,” they’re using tools from this layer. The Model Context Protocol (MCP), recently released by Anthropic, is standardizing how models connect to tools, making this layer increasingly plug-and-play.

// The Orchestration Layer

The Orchestration Layer coordinates everything. This is where the intelligence of your system actually lives. The orchestrator decides which model to invoke for which task, when to call tools, how to chain operations together, and how to handle failures. It’s the conductor of your AI symphony.

Models are musicians, tools are instruments, and orchestration is the sheet music that tells everyone when to play.
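Here is a minimal Python sketch that makes the three layers concrete. Several pieces are hypothetical stand-ins: the model functions, the `web_search` tool, and the `orchestrate` signature. Real models would be API calls, and real tools would hit external services. The point is only to show where each responsibility lives.

```python
from typing import Callable, Dict


# Model layer: hypothetical stand-ins for real LLM calls.
def fast_model(prompt: str) -> str:
    return f"[fast] {prompt}"


def reasoning_model(prompt: str) -> str:
    return f"[reasoning] {prompt}"


# Tool layer: hypothetical tools that let models act on the outside world.
def web_search(query: str) -> str:
    return f"results for '{query}'"


TOOLS: Dict[str, Callable[[str], str]] = {"web_search": web_search}


# Orchestration layer: picks the model, decides when to call tools, handles failures.
def orchestrate(task: str, needs_search: bool, is_hard: bool) -> str:
    context = ""
    if needs_search:
        try:
            context = TOOLS["web_search"](task)
        except Exception:
            # Degrade gracefully: answer without search rather than failing the request.
            context = ""
    model = reasoning_model if is_hard else fast_model
    prompt = f"{context}\n{task}" if context else task
    return model(prompt)


print(orchestrate("Summarize this ticket", needs_search=False, is_hard=False))
# [fast] Summarize this ticket
```

In a production system, `needs_search` and `is_hard` would themselves be decided by the orchestrator, usually by a router like the one discussed later.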

# Orchestration Frameworks: Understanding the Patterns

Just as React and Vue standardized frontend development, orchestration frameworks are standardizing how we build AI systems. But before we discuss specific tools, we need to understand the architectural patterns they represent. Tools come and go. Patterns endure.

// The Chain Pattern (Sequential Logic)

The Chain Pattern (Sequential Logic) is orchestration’s most fundamental pattern. Think of it as a data pipeline where each step’s output becomes the next step’s input: user question, retrieve context, generate response, validate output. Each operation happens in sequence, with the orchestrator managing the handoffs. LangChain popularized this pattern and built an entire framework around making chains composable and reusable.

The strength of chains lies in their simplicity: you can reason about the flow, debug step by step, and optimize individual stages. The limitation is rigidity. Chains don’t adapt based on intermediate results. If step two discovers the question is unanswerable, the chain still marches through steps three and four. But for predictable workflows with clear stages, chains work well.
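The sketch below shows a chain, and its rigidity, in plain Python. Each step takes a state dictionary and returns a new one. The steps, the one-entry `DOCS` knowledge base, and the `allow_early_exit` flag are hypothetical illustrations, not LangChain’s API.

```python
from typing import Callable, Dict, List

State = Dict[str, str]
Step = Callable[[State], State]

# Hypothetical knowledge base; a real chain would call a retriever here.
DOCS = {"refund": "Refunds are issued within 14 days."}


def retrieve_context(state: State) -> State:
    question = state["question"].lower()
    hit = next((text for key, text in DOCS.items() if key in question), "")
    new_state = {**state, "context": hit}
    if not hit:
        new_state["stop"] = "unanswerable"  # step two discovers there is nothing to answer from
    return new_state


def generate_response(state: State) -> State:
    # Stand-in for an LLM call that uses the retrieved context.
    return {**state, "answer": f"Based on our policy: {state['context']}"}


def validate_output(state: State) -> State:
    ok = bool(state["context"]) and state["context"] in state["answer"]
    return {**state, "valid": "yes" if ok else "no"}


def run_chain(steps: List[Step], state: State, allow_early_exit: bool = False) -> State:
    for step in steps:
        state = step(state)  # each step's output becomes the next step's input
        if allow_early_exit and "stop" in state:
            break
    return state


CHAIN = [retrieve_context, generate_response, validate_output]
```

With the default settings, an unanswerable question still flows through generation and validation, which is the rigidity described above. The `allow_early_exit` flag shows the usual escape hatch. Once you branch on intermediate results, though, you are drifting from a pure chain toward routing.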

// The RAG Pattern (Retrieval-First Logic)

The RAG Pattern (Retrieval-First Logic), short for retrieval-augmented generation, emerged from a specific problem: language models hallucinate when they lack information. The solution is simple: retrieve relevant information first, then generate responses grounded in that data.

But architecturally, RAG represents something deeper: Just-in-Time Context Injection. Think of it as the separation of Compute (the LLM) from Memory (the Vector Store). The model itself stays static. It doesn’t learn new facts. Instead, you swap what’s in the model’s “RAM” by injecting relevant context into its context window. You’re not retraining the brain. You’re giving it access to the exact information it needs, precisely when it needs it.

This architectural principle (Query, Search the knowledge base, Rank results by relevance, Inject into context, Generate response) works because it turns a generation problem into a retrieval-plus-synthesis problem, and retrieval is more reliable than generation.

What makes this a lasting pattern rather than just a technique is this separation of concerns. The model handles reasoning and synthesis. The vector store handles memory and recall. The orchestrator manages the injection timing. LlamaIndex built its entire framework around optimizing this pattern, handling the hard parts of document chunking, embedding generation, vector storage, and retrieval ranking. You can see how RAG works in practice even with simple no-code tools.
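Here is a dependency-free sketch of the Query, Search, Rank, Inject steps. It assumes Jaccard word overlap as a stand-in for vector similarity and a three-sentence in-memory knowledge base in place of a vector store. Both are illustrative simplifications, not how LlamaIndex or any vector database works. The final Generate step is left as a comment because it is simply an LLM call.

```python
from typing import List, Set, Tuple

# Hypothetical knowledge base; a real system would store embeddings in a vector store.
KNOWLEDGE_BASE = [
    "Orders ship within 2 business days.",
    "Refunds are processed within 14 days of receiving the item.",
    "Support is available Monday to Friday.",
]


def tokenize(text: str) -> Set[str]:
    return {word.strip(".,?!").lower() for word in text.split()}


def search_and_rank(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Search and rank; Jaccard word overlap stands in for vector similarity."""
    q = tokenize(query)
    scored = []
    for doc in docs:
        d = tokenize(doc)
        score = len(q & d) / len(q | d)
        if score > 0:
            scored.append((score, doc))
    scored.sort(reverse=True)  # most relevant first
    return scored[:k]


def inject_context(query: str, ranked: List[Tuple[float, str]]) -> str:
    """Build the prompt: the retrieved text is the model's 'RAM' for this one call."""
    context = "\n".join(f"- {doc}" for _, doc in ranked) or "- (no relevant documents found)"
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


def rag_prompt(query: str) -> str:
    return inject_context(query, search_and_rank(query, KNOWLEDGE_BASE))


# The Generate step would send rag_prompt(...) to an LLM of your choice.
print(rag_prompt("How long do refunds take?"))
```

Note that the model never changes here. Only the context injected into its prompt does, which is the compute/memory separation described above.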

// The Multi-Agent Pattern (Delegation Logic)

The Multi-Agent Pattern (Delegation Logic) represents orchestration’s most sophisticated evolution. Instead of one sequential flow or one retrieval step, you create specialized agents that delegate to each other. A “planner” agent breaks down complex tasks. “Researcher” agents gather information. “Analyst” agents process data. “Writer” agents produce output. “Critic” agents review quality.

CrewAI exemplifies this pattern, but the concept predates the tool. The architectural insight is that complex intelligence emerges from coordination between specialists, not from one generalist trying to do everything. Each agent has a narrow responsibility, clear success criteria, and the ability to request help from other agents. The orchestrator manages the delegation graph, ensuring agents don’t loop infinitely and work progresses toward the goal. If you want to dive deeper into how agents work together, check out key agentic AI concepts.
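The following minimal sketch shows delegation with a loop guard. The planner, researcher, writer, and critic are hypothetical toy functions standing in for LLM-backed agents, and `max_rounds` is an assumed cap. This illustrates the pattern in general, not CrewAI’s API.

```python
from typing import Dict, List


# Hypothetical agents with narrow responsibilities; real ones would each wrap an LLM call.
def planner(task: str) -> List[str]:
    return [f"{task} background", f"{task} examples"]


def researcher(topic: str) -> str:
    return f"notes on {topic}"


def writer(notes: List[str], feedback: str) -> str:
    draft = "; ".join(notes)
    return f"{draft} (revised)" if feedback else draft


def critic(draft: str) -> str:
    """Return feedback, or an empty string to approve. This toy critic asks for one revision."""
    return "" if draft.endswith("(revised)") else "add more detail"


def run_crew(task: str, max_rounds: int = 3) -> Dict[str, object]:
    # The orchestrator owns the delegation graph: plan -> research -> write <-> critique.
    notes = [researcher(topic) for topic in planner(task)]
    draft, feedback = "", ""
    for round_no in range(1, max_rounds + 1):
        draft = writer(notes, feedback)
        feedback = critic(draft)
        if not feedback:
            return {"draft": draft, "rounds": round_no, "approved": True}
    # Loop guard: stop after max_rounds so writer and critic cannot bounce forever.
    return {"draft": draft, "rounds": max_rounds, "approved": False}
```

With this toy critic, `run_crew("MCP")` is approved in round two. `run_crew("MCP", max_rounds=1)` returns an unapproved draft instead of looping.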

The choice between patterns isn’t about which is “best.” It’s about matching pattern to problem. Simple, predictable workflows? Use chains. Knowledge-intensive applications? Use RAG. Complex, multi-step reasoning requiring different specializations? Use multi-agent. Production systems often combine all three: a multi-agent system where each agent uses RAG internally and communicates through chains.

The Model Context Protocol deserves special mention as the emerging standard beneath these patterns. MCP isn’t a pattern itself but a universal protocol for how models connect to tools and data sources. Released by Anthropic in late 2024, it’s becoming the foundation layer that frameworks build upon, the HTTP of AI orchestration. As MCP adoption grows, we’re moving toward standardized interfaces where any pattern can use any tool, regardless of which framework you’ve chosen.
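To see what a “standardized interface” means on the wire, start with the message format. MCP messages are JSON-RPC 2.0. Based on our reading of the published specification, a client invokes a server tool with a `tools/call` request carrying the tool’s `name` and `arguments`. The sketch below only builds such a message using Python’s standard library. The `search_docs` tool and its arguments are hypothetical. A real client would also handle the initialization handshake and the transport, for example through an official MCP SDK.

```python
import json
from typing import Any, Dict


def make_tool_call(request_id: int, tool_name: str, arguments: Dict[str, Any]) -> str:
    """Build a JSON-RPC 2.0 request asking an MCP server to run one of its tools."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",  # tools are discovered beforehand with "tools/list"
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


print(make_tool_call(1, "search_docs", {"query": "refund policy"}))
```

The message shape doesn’t depend on which orchestration framework produced it, which is what lets different frameworks, in principle, share the same tool servers.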

# From Prompt to Pipeline: The Router Changes Everything

Understanding orchestration conceptually is one thing. Seeing it in production reveals why it matters and exposes the component that determines success or failure.

Consider a coding assistant that helps developers debug issues. A single-model approach would send code and error messages to GPT-4 and hope for the best. An orchestrated system works differently, and its success hinges on one critical component: the Router.

The Router is the decision-making engine at the heart of every orchestrated system. It examines incoming requests and determines which pathway through your system they should take. This isn’t just plumbing. Routing accuracy determines whether your orchestrated system outperforms a single model or wastes time and money on unnecessary complexity.

Let’s return to our debugging assistant. When a developer submits a problem, the Router must decide: Is this a syntax error? A runtime error? A logic error? Each type requires different handling.

How an intelligent Router acts as a decision engine to direct inputs to specialized pathways | Image by Author

Syntax errors route to a specialized code analyzer, a lightweight model fine-tuned to detect parsing violations. Runtime errors trigger the debugger tool to examine program state, then pass the findings to a reasoning model that understands execution context. Logic errors require a different path entirely: search Stack Overflow for similar issues, retrieve relevant context, then invoke a reasoning model to synthesize solutions.

But how does the Router decide? Three approaches dominate production systems.

Semantic routing uses embedding similarity. Convert the user’s question into a vector, compare it to embeddings of example questions for each route, and send it down the path with the highest similarity. It’s fast and effective for clearly distinct categories. The debugger uses this when error types are well defined and examples are plentiful.
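Here is a minimal sketch of semantic routing for the debugging assistant. So that it runs with no dependencies, it assumes a toy “embedding”: bag-of-words counts compared with cosine similarity. In practice you would call a real embedding model and probably a vector index. The route names and example phrases are hypothetical. The router returns its best score so that a low-confidence result can trigger a fallback.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


# Stand-in for an embedding model: a bag-of-words count vector (illustration only).
def embed(text: str) -> Counter:
    return Counter(w.strip(".,:?!'\"()").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical example questions for each route.
ROUTE_EXAMPLES: Dict[str, List[str]] = {
    "syntax": ["invalid syntax unexpected token", "missing colon or bracket parse error"],
    "runtime": ["program crashes with exception at runtime", "null reference crash stack trace"],
    "logic": ["code runs but returns the wrong result", "output is incorrect no error"],
}


def semantic_route(question: str) -> Tuple[str, float]:
    """Return (best route, similarity); an empty route means nothing matched."""
    q = embed(question)
    best_route, best_score = "", 0.0
    for route, examples in ROUTE_EXAMPLES.items():
        score = max(cosine(q, embed(ex)) for ex in examples)
        if score > best_score:
            best_route, best_score = route, score
    return best_route, best_score
```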

Keyword routing examines explicit signals. If the error message contains “SyntaxError,” route to the parser. If it contains “NullPointerException,” route to the runtime handler. It’s simple, fast, and surprisingly robust when you have reliable indicators. Many production systems start here before adding complexity.
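Keyword routing fits in a few lines using Python’s `re` module. The rule list is an illustrative assumption: the first matching pattern wins, and `None` signals that no reliable indicator was found.

```python
import re
from typing import Optional

# Ordered (pattern, route) rules; the first match wins. Patterns are illustrative assumptions.
KEYWORD_RULES = [
    (re.compile(r"\b(?:SyntaxError|IndentationError)\b"), "syntax"),
    (re.compile(r"\b(?:NullPointerException|TypeError|KeyError)\b"), "runtime"),
]


def keyword_route(error_message: str) -> Optional[str]:
    for pattern, route in KEYWORD_RULES:
        if pattern.search(error_message):
            return route
    return None  # no reliable indicator: hand off to another routing strategy
```

Word boundaries (`\b`) keep a rule from firing on partial matches inside longer identifiers, which is part of why keyword routing stays robust when the signals are explicit.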

LLM-decision routing uses a small, fast model as the Router itself. Send the request to a specialized classification model that has been trained or prompted to make routing decisions. It’s more flexible than keywords and more reliable than pure semantic similarity, but it adds latency and cost. GitHub Copilot and similar tools use variations of this approach.
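The following is a hedged sketch of LLM-decision routing. The model is passed in as a plain function, so `small_model` is a hypothetical wrapper around whatever classifier you use, and `fake_model` exists only so the block runs. One design choice is worth copying: never trust the model’s reply blindly. Normalize it and accept only a known label.

```python
from typing import Callable, Optional

ROUTES = ("syntax", "runtime", "logic")


def build_router_prompt(request: str) -> str:
    return (
        "Classify the developer's problem into exactly one category: "
        + ", ".join(ROUTES)
        + ".\nReply with the category name only.\n\nProblem:\n"
        + request
    )


def llm_route(request: str, small_model: Callable[[str], str]) -> Optional[str]:
    """Ask a small classifier model for a route; `small_model` is a hypothetical wrapper."""
    reply = small_model(build_router_prompt(request))
    label = reply.strip().lower().rstrip(".")
    # Only accept a known label; anything else goes to the fallback path.
    return label if label in ROUTES else None


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in so the sketch runs; swap in a real model call.
    return "Logic." if "wrong" in prompt else "not sure, maybe runtime?"
```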

Here’s the insight that matters: the success of your orchestrated system depends far more on Router accuracy than on the sophistication of your downstream models. A perfect GPT-4 response sent down the wrong path helps no one. A decent response from a specialized model that was routed correctly solves the problem.

This creates an unexpected optimization target. Teams obsess over which LLM to use for generation but neglect Router engineering. They should invert that priority. A simple Router making correct decisions beats a complex Router that’s frequently wrong. Production teams measure routing accuracy religiously. It’s the metric that predicts system success.
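Measuring routing accuracy requires only a labeled set of requests. The minimal sketch below assumes you have hand-labeled (request, expected route) pairs. `toy_router` and the four examples are hypothetical and exist only to exercise the metric.

```python
from typing import Callable, List, Optional, Tuple

Router = Callable[[str], Optional[str]]


def routing_accuracy(router: Router, labeled: List[Tuple[str, str]]) -> float:
    """Fraction of labeled requests the router sends down the expected path."""
    if not labeled:
        raise ValueError("need at least one labeled example")
    correct = sum(1 for request, expected in labeled if router(request) == expected)
    return correct / len(labeled)


def toy_router(message: str) -> Optional[str]:
    # Hypothetical router, used only to demonstrate the metric.
    return "syntax" if "SyntaxError" in message else "runtime"


# Hypothetical hand-labeled evaluation set: (request, expected route).
EVAL_SET = [
    ("SyntaxError: invalid syntax", "syntax"),
    ("TypeError: 'NoneType' object is not subscriptable", "runtime"),
    ("KeyError: 'user_id'", "runtime"),
    ("Totals are off by one, no error raised", "logic"),
]

print(routing_accuracy(toy_router, EVAL_SET))  # 0.75
```

The miss here is the logic error, which this toy router has no path for. Breaking accuracy down per route is the natural next step.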

The Router also handles failures and fallbacks. What if semantic routing isn’t confident? What if the web search returns nothing? Production Routers implement decision trees: try semantic routing first, fall back to keyword matching if confidence is low, escalate to LLM-decision routing for edge cases, and always maintain a default path for truly ambiguous inputs.
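The fallback order above translates into a short cascade. In this sketch the three routers are passed in as functions so the block stays self-contained. `fake_semantic`, `fake_keyword`, and `fake_llm` are hypothetical stand-ins. The 0.5 confidence threshold and the `general` default route are assumptions to tune against your own traffic.

```python
from typing import Callable, Optional, Tuple

DEFAULT_ROUTE = "general"  # hypothetical catch-all path for truly ambiguous inputs


def cascade_route(
    request: str,
    semantic: Callable[[str], Tuple[str, float]],
    keyword: Callable[[str], Optional[str]],
    llm: Callable[[str], Optional[str]],
    min_confidence: float = 0.5,  # assumed threshold; tune on labeled traffic
) -> Tuple[str, str]:
    """Return (route, name of the stage that decided)."""
    route, confidence = semantic(request)
    if route and confidence >= min_confidence:
        return route, "semantic"
    keyword_choice = keyword(request)  # fall back when semantic routing isn't confident
    if keyword_choice:
        return keyword_choice, "keyword"
    llm_choice = llm(request)  # slower and costlier, so reserved for edge cases
    if llm_choice:
        return llm_choice, "llm"
    return DEFAULT_ROUTE, "default"


# Hypothetical stand-ins for the three routers.
def fake_semantic(req: str) -> Tuple[str, float]:
    return ("logic", 0.8) if "wrong result" in req else ("runtime", 0.3)


def fake_keyword(req: str) -> Optional[str]:
    return "syntax" if "SyntaxError" in req else None


def fake_llm(req: str) -> Optional[str]:
    return "runtime" if "crash" in req else None
```

Returning the deciding stage alongside the route makes it easy to log how often each fallback fires, which feeds straight back into the routing-accuracy work.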

This explains why orchestrated systems often outperform single models despite the added complexity. It’s not that orchestration magically makes models smarter. It’s that accurate routing ensures specialized models only see problems they’re optimized to solve. A syntax analyzer only analyzes syntax. A reasoning model only reasons. Each component operates in its zone of excellence because the Router shields it from problems it can’t handle.

The architecture pattern is universal: Router at the front, specialized processors behind it, orchestrator managing the flow. Whether you’re building a customer service bot, a research assistant, or a coding tool, getting the Router right determines whether your orchestrated system succeeds or becomes an expensive, slow alternative to GPT-4.

# When to Orchestrate, When to Keep It Simple

Not every AI application needs orchestration. A chatbot that answers FAQs? Single model. A system that classifies support tickets? Single model. Generating product descriptions? Single model.

Orchestration makes sense when you need:

- **Multiple capabilities that no single model handles well.** Customer service requiring sentiment analysis, knowledge retrieval, and response generation benefits from orchestration. Simple Q&A doesn’t.
- **External data or actions.** If your AI needs to search databases, call APIs, or execute code, orchestration manages those tool interactions better than trying to prompt a single model to “pretend” it can access data.
- **Reliability through redundancy.** Production systems often chain a fast, cheap model for initial processing with a capable, expensive model for complex cases. The orchestrator routes based on difficulty.
- **Cost optimization.** Using GPT-4 for everything is expensive. Orchestration lets you route simple tasks to cheaper models and reserve expensive models for hard problems.
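The cheap-then-expensive escalation described in the last two items can be sketched as follows. Both model functions are hypothetical stubs that return (answer, confidence). Where that confidence comes from (a verifier, log-probabilities, a heuristic) is an open design choice and an assumption here, since an LLM’s own stated confidence is not always reliable. The 0.7 threshold is arbitrary.

```python
from typing import Callable, Tuple

ModelFn = Callable[[str], Tuple[str, float]]  # returns (answer, confidence in [0, 1])


def answer_with_escalation(
    task: str, cheap: ModelFn, expensive: ModelFn, threshold: float = 0.7
) -> Tuple[str, str]:
    """Try the cheap model first; escalate to the expensive one only when confidence is low."""
    answer, confidence = cheap(task)
    if confidence >= threshold:
        return answer, "cheap"
    answer, _ = expensive(task)
    return answer, "expensive"


# Hypothetical stubs; real versions would call two differently priced models.
def cheap_model(task: str) -> Tuple[str, float]:
    return ("4", 0.95) if task == "2 + 2" else ("not sure", 0.2)


def expensive_model(task: str) -> Tuple[str, float]:
    return (f"detailed answer to: {task}", 0.9)


tasks = ["2 + 2", "Explain this race condition"]
used = [answer_with_escalation(t, cheap_model, expensive_model)[1] for t in tasks]
print(used)  # ['cheap', 'expensive']
```

Tallying `used` over real traffic shows what share of requests actually needs the expensive model, which is the number that decides whether this kind of orchestration pays for itself.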

The decision framework is straightforward: start simple. Use a single model until you hit clear limitations. Add orchestration when the complexity pays for itself in better results, lower costs, or new capabilities.

# Final Thoughts

AI orchestration represents a maturation of the field. We’re moving from “which model should I use?” to “how should I architect my AI system?” This mirrors the evolution of many technologies: from monolithic to distributed, from choosing the best tool to composing the right tools.

The frameworks exist. The patterns are emerging. The question now is whether you’ll build AI applications the old way (hoping one model can do everything) or the new way: orchestrating specialized models and tools into systems that are greater than the sum of their parts.

The future of AI isn’t in finding the perfect model. It’s in learning to conduct the orchestra.

Vinod Chugani is an AI and data science educator who bridges the gap between emerging AI technologies and practical application for working professionals. His focus areas include agentic AI, machine learning applications, and automation workflows. Through his work as a technical mentor and instructor, Vinod has supported data professionals through skill development and career transitions. He brings analytical expertise from quantitative finance to his hands-on teaching approach. His content emphasizes actionable strategies and frameworks that professionals can apply immediately.
