In this article, you will learn how to move beyond Andrew Ng’s machine learning course by rebuilding your mental model for neural networks, shifting from algorithms to architectures, and practicing with real, messy data and language models.

Topics we will cover include:

- Reframing representation learning and mastering backpropagation as information flow.

- Understanding architectures and pipelines as composable systems.

- Working at data scale, instrumenting experiments, and selecting projects that stretch you.

Let’s break it down.

Leveling Up Your Machine Learning: What To Do After Andrew Ng’s Course

Image by Editor

Getting Started

Finishing Andrew Ng’s machine learning course can feel like an odd moment. You understand linear regression, logistic regression, bias–variance trade-offs, and why gradient descent works, yet modern machine learning conversations can seem like they are happening in another universe.

Transformers, embeddings, fine-tuning, diffusion, large language model (LLM) agents: none of that was on the syllabus. The gap isn’t a failure of the course; it’s a mismatch between foundational education and where the field jumped next.

What you need now is not another grab bag of algorithms, but a deliberate progression that turns classical intuition into neural fluency. This is where machine learning stops being a set of formulas and starts behaving like a system you can reason about, debug, and extend.

Rebuilding Your Mental Model for Neural Networks

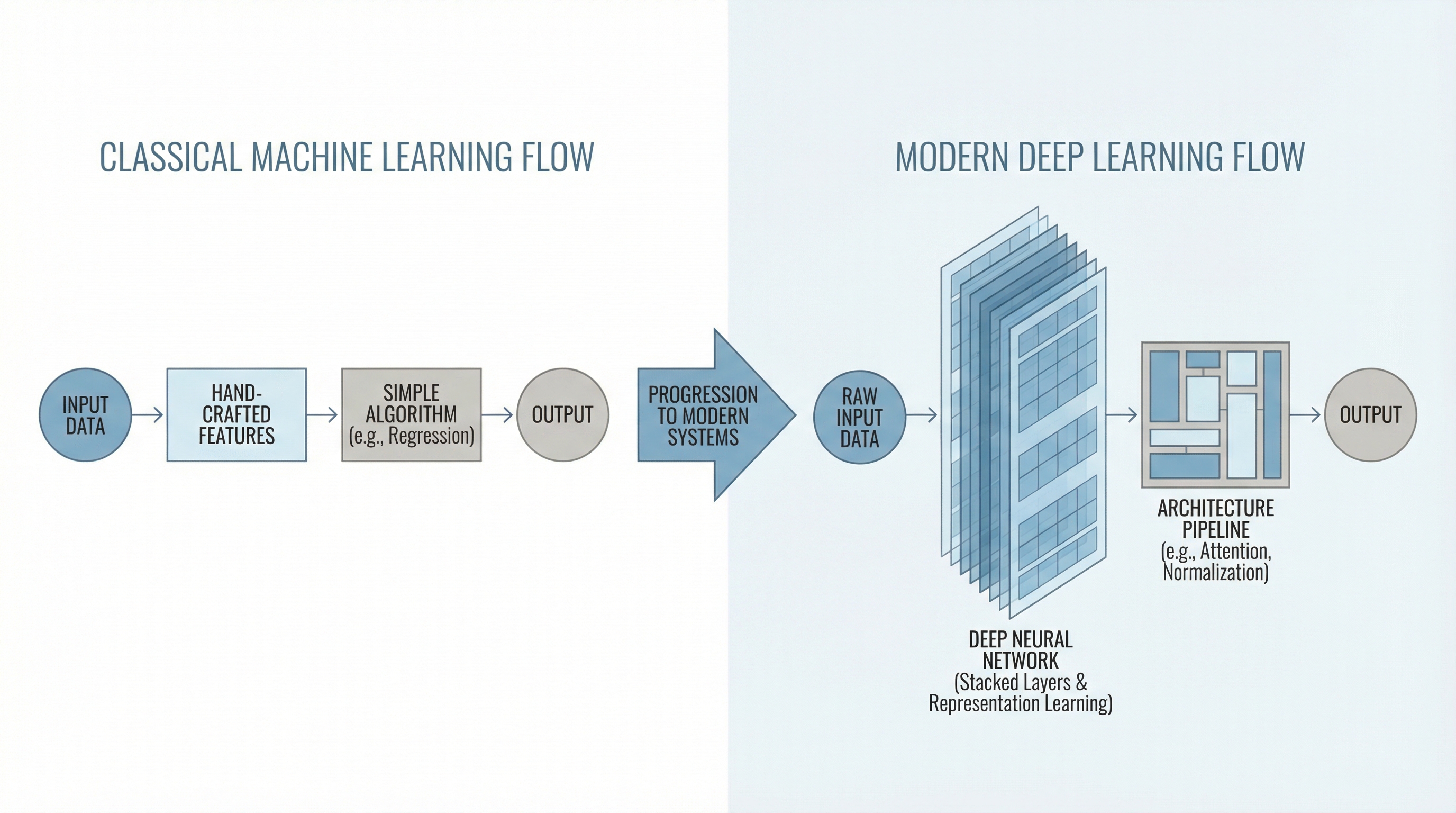

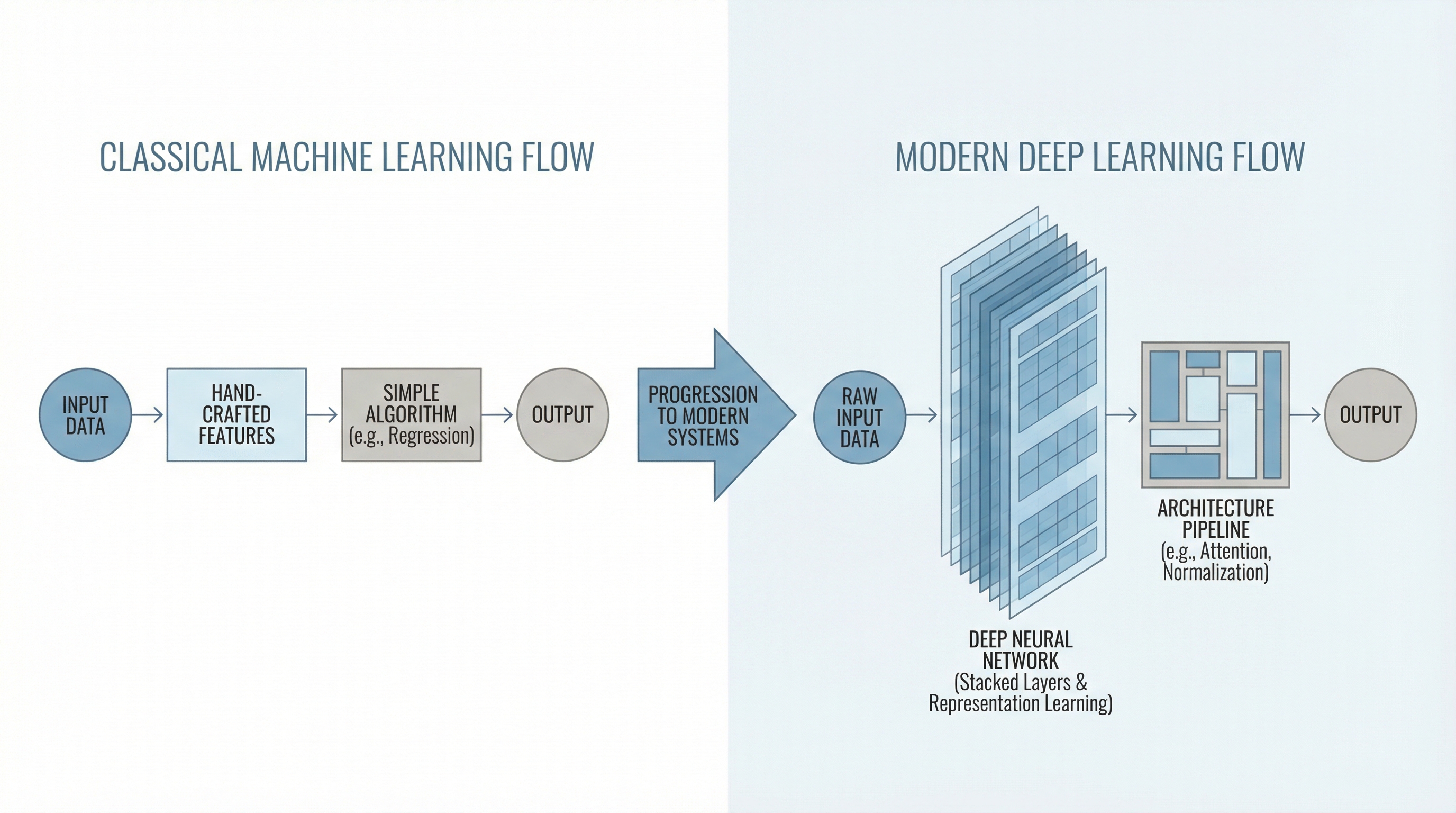

Traditional machine learning teaches you to think in terms of features, objective functions, and optimization. Neural networks ask you to hold the same ideas, but at a different scale and with more abstraction.

The first step forward is not memorizing architectures, but reframing how representation learning works. Instead of hand-engineering features, you are learning transformations that invent features for you, layer by layer. This shift sounds obvious, but it changes how you debug, evaluate, and improve models.

Spend time deeply understanding backpropagation in multilayer networks, not just as an algorithm but as a flow of information and blame assignment. When a network fails, the question is less often “Which model should I use?” and more often “Where did learning collapse?” Vanishing gradients, dead neurons, saturation, and initialization issues all live here. If this layer is opaque, everything built on top of it stays mysterious.
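To make the “blame assignment” picture concrete, here is a minimal sketch in plain Python. It is an illustrative assumption, not code from any framework. It chains scalar sigmoid units and multiplies their local derivatives together, which is the same chain-rule product that backpropagation computes. Because the sigmoid’s derivative never exceeds 0.25, the gradient reaching the input shrinks geometrically with depth: one concrete face of the vanishing-gradient problem. The function names are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x == 0


def input_gradient(depth: int, weight: float = 1.0, x0: float = 0.0) -> float:
    """d(output)/d(input) for a chain of `depth` units, each computing sigmoid(weight * a).

    Hypothetical toy model: one scalar unit per layer, all sharing the same weight.
    """
    a = x0
    grad = 1.0
    for _ in range(depth):
        z = weight * a
        # Chain rule: multiply in this layer's local derivative dz/da * da_next/dz
        grad *= weight * sigmoid_grad(z)
        a = sigmoid(z)
    return grad
```

Printing `input_gradient(d)` for `d` in 1, 5, 10, and 20 shows the signal fading toward zero. With the default weight of 1.0, ten layers already push it below 0.25**10, which is roughly 1e-6. Trying a larger `weight` shows saturation instead: bigger pre-activations land in the sigmoid’s flat tails, where the local derivative is tiny.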

Frameworks like PyTorch help, but they can also hide critical mechanics. Reimplementing a small neural network from scratch, even once, forces clarity. Suddenly, tensor shapes matter. Activation choices stop being arbitrary. Loss curves become diagnostic tools instead of charts you simply hope go down. This is where intuition begins to form.
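As one example of that exercise, the sketch below trains a tiny network on XOR using only Python’s standard library. The layer sizes, learning rate, epoch count, and the choice of tanh hidden units with a sigmoid output are assumptions made for illustration. Every gradient is written out by hand, so you can see exactly which shapes line up and how the output error `p - y` flows back into each weight.

```python
import math
import random


def train_xor(hidden=4, lr=0.5, epochs=2000, seed=0):
    """Train a 2-input, `hidden`-unit, 1-output network on XOR with hand-written backprop."""
    rng = random.Random(seed)
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
    W1 = [[rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)] for _ in range(hidden)]  # hidden x 2
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]  # hidden
    b2 = 0.0

    def forward(x):
        h = [math.tanh(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(hidden)]
        z = sum(W2[j] * h[j] for j in range(hidden)) + b2
        return h, 1.0 / (1.0 + math.exp(-z))  # sigmoid output = P(y = 1)

    losses = []
    for _ in range(epochs):
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gW2 = [0.0] * hidden
        gb2 = 0.0
        total = 0.0
        for x, y in data:
            h, p = forward(x)
            # Binary cross-entropy; the small epsilon avoids log(0)
            total -= y * math.log(p + 1e-12) + (1.0 - y) * math.log(1.0 - p + 1e-12)
            dz = p - y  # dL/dz for a sigmoid output with binary cross-entropy
            gb2 += dz
            for j in range(hidden):
                gW2[j] += dz * h[j]
                dpre = dz * W2[j] * (1.0 - h[j] ** 2)  # chain rule through tanh
                gb1[j] += dpre
                gW1[j][0] += dpre * x[0]
                gW1[j][1] += dpre * x[1]
        n = len(data)
        losses.append(total / n)
        # Full-batch gradient descent on the averaged gradients
        for j in range(hidden):
            W2[j] -= lr * gW2[j] / n
            b1[j] -= lr * gb1[j] / n
            W1[j][0] -= lr * gW1[j][0] / n
            W1[j][1] -= lr * gW1[j][1] / n
        b2 -= lr * gb2 / n

    return losses, lambda x: forward(x)[1]
```

Calling `losses, predict = train_xor()` and plotting `losses` gives you a loss curve to read diagnostically. `predict((1.0, 0.0))` returns the network’s probability for that input. Setting `hidden=1` is a quick way to watch the model fail, since a single hidden unit cannot represent XOR.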

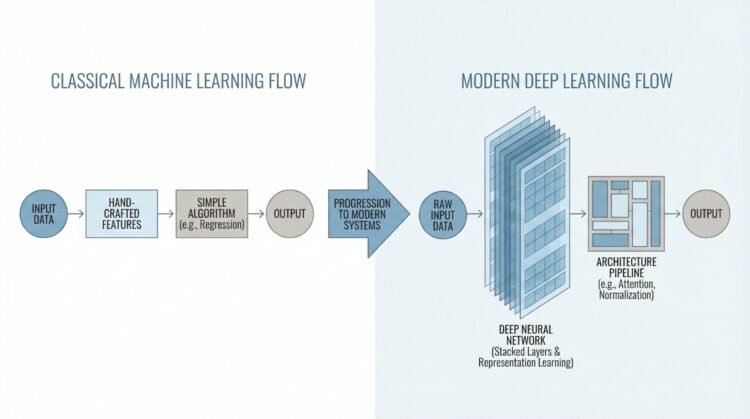

Moving From Algorithms to Architectures

Andrew Ng’s course trains you to select algorithms based on data properties. Modern machine learning shifts decision-making toward architectures. Convolutional networks encode spatial assumptions. Recurrent models encode sequence dependencies. Transformers assume attention is the primitive worth scaling. Understanding these assumptions is more important than memorizing model diagrams.

Start by studying why certain architectures replaced others. CNNs didn’t win because they were novel, but because weight sharing and locality aligned with visual structure. Transformers didn’t dominate language because recurrence was broken, but because attention scaled better and parallelized learning. Every architecture is a hypothesis about structure in data. Learn to read them that way.
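Weight sharing and locality are easy to see in one dimension. The sketch below uses plain Python and hypothetical helper names. It slides a single small kernel across a signal, which is the cross-correlation operation that deep learning libraries usually call convolution. It then compares that kernel’s parameter count with a fully connected layer producing the same number of outputs.

```python
def conv1d(signal, kernel, bias=0.0):
    """'Valid' 1D cross-correlation: the same kernel weights are reused at every position."""
    k = len(kernel)
    return [sum(kernel[i] * signal[t + i] for i in range(k)) + bias
            for t in range(len(signal) - k + 1)]


def param_counts(input_len, kernel_size):
    """Parameters needed to map input_len values to the same number of outputs as conv1d."""
    out_len = input_len - kernel_size + 1
    dense = input_len * out_len + out_len  # one weight per (input, output) pair, plus biases
    conv = kernel_size + 1  # one shared kernel, plus one bias
    return dense, conv
```

`param_counts(100, 3)` gives 9,898 parameters for the dense layer versus 4 for the shared kernel. The edge-detecting kernel `[-1, 1]` fires wherever a step occurs. Shifting the step in the input shifts the response in the output, and that translation equivariance is the structural hypothesis a CNN bakes in.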

This is also the moment to stop thinking in terms of single models and start thinking in terms of pipelines. Tokenization, embeddings, optimizing cloud costs, positional encoding, normalization, and decoding strategies are all part of the system. Performance gains often come from adjusting these components, not swapping out the core model. Once you see architectures as composable systems, the field starts to feel navigable rather than overwhelming.
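The sketch below strings several of those pipeline stages together in plain Python:

- a whitespace tokenizer with an `<unk>` fallback,
- a randomly initialized embedding table,
- the sinusoidal positional encoding from the original Transformer paper,
- a layer normalization.

Real systems use subword tokenizers and learned weights, and where normalization sits varies by architecture. Treat every helper here as a hypothetical stand-in. The point is that each stage is a separate, swappable decision.

```python
import math
import random


def build_vocab(corpus):
    """Map each lowercase whitespace token to an integer id; id 0 is reserved for unknowns."""
    vocab = {"<unk>": 0}
    for tok in sorted({t for line in corpus for t in line.lower().split()}):
        vocab[tok] = len(vocab)
    return vocab


def tokenize(text, vocab):
    return [vocab.get(t, vocab["<unk>"]) for t in text.lower().split()]


def embedding_table(vocab_size, dim, seed=0):
    """Random embeddings; in a trained model these rows are learned parameters."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(vocab_size)]


def positional_encoding(pos, dim):
    """Sinusoidal encoding: sin on even indices, cos on odd ones, one frequency per pair."""
    return [math.sin(pos / 10000 ** (i / dim)) if i % 2 == 0
            else math.cos(pos / 10000 ** ((i - 1) / dim))
            for i in range(dim)]


def layer_norm(vec, eps=1e-5):
    """Normalize to zero mean and unit variance (learned scale and shift omitted)."""
    mean = sum(vec) / len(vec)
    var = sum((x - mean) ** 2 for x in vec) / len(vec)
    return [(x - mean) / math.sqrt(var + eps) for x in vec]


def encode(text, vocab, table):
    """Tokenize, look up embeddings, add positions, normalize: one vector per token."""
    dim = len(table[0])
    return [layer_norm([e + p for e, p in zip(table[tok_id], positional_encoding(pos, dim))])
            for pos, tok_id in enumerate(tokenize(text, vocab))]
```

Swapping the tokenizer or the positional scheme changes what the model sees without touching anything you would call “the model.” That is why these components deserve the same scrutiny as the architecture.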

Learning to Work With Real Data at Scale

Classic coursework often uses clean, preprocessed datasets where the hard parts are politely removed. Real-world machine learning is the opposite. Data is messy, biased, incomplete, and constantly shifting. The sooner you confront this, the sooner you level up.

Modern neural models are sensitive to data distribution in ways linear models rarely are. Small preprocessing decisions can quietly dominate results. Normalization choices, sequence truncation, class imbalance handling, and augmentation strategies are not peripheral concerns. They are central to performance and stability. Learning to inspect data statistically and visually becomes a core skill, not a hygiene step.
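Two of those decisions, normalization and class imbalance handling, fit in a few lines. This is a minimal sketch that assumes a single numeric column and hashable class labels. The standardizer computes its statistics from the training split only, so validation data cannot leak into preprocessing. The class weights use the inverse-frequency formula `n_samples / (n_classes * count)`, which is the same formula scikit-learn documents for `class_weight="balanced"`.

```python
import math
from collections import Counter


def fit_standardizer(train_values):
    """Learn mean and std from the training split only; return a function that applies them."""
    mean = sum(train_values) / len(train_values)
    std = math.sqrt(sum((x - mean) ** 2 for x in train_values) / len(train_values))
    std = std if std > 0 else 1.0  # a constant column would otherwise divide by zero
    return lambda values: [(x - mean) / std for x in values]


def inverse_frequency_weights(labels):
    """weight_c = n_samples / (n_classes * count_c): rarer classes get larger weights."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * count) for c, count in counts.items()}
```

Fit the standardizer once on the training split, for example `scale = fit_standardizer(train_col)`, then apply `scale` unchanged to the validation and test columns. With 90 examples of class 0 and 10 of class 1, the weights come out to about 0.56 and 5.0. Both classes then contribute equally to a weighted loss.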

You also need to get comfortable with experiments that don’t converge cleanly. Training runs that diverge, stall, or behave inconsistently are normal. Instrumentation matters. Logging gradients, activations, and intermediate metrics helps you distinguish between data problems, optimization problems, and architectural limits. This is where machine learning starts to resemble engineering more than math.
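A small monitor like the one below is one starting point for that kind of instrumentation. It assumes you can extract each layer’s gradients as a flat list of floats after the backward pass. The layer names and the thresholds for “vanishing” and “exploding” are illustrative guesses that you would tune for your own model.

```python
import math


def grad_norm(grads):
    """L2 norm of a flat list of gradient values (overflow to inf counts as non-finite)."""
    return math.sqrt(sum(g * g for g in grads))


def diagnose(step_grads, vanish=1e-6, explode=1e3):
    """Classify each layer's gradient norm for one training step.

    step_grads maps a layer name to a flat list of gradient values.
    The thresholds are illustrative defaults, not recommended values.
    """
    report = {}
    for name, grads in step_grads.items():
        norm = grad_norm(grads)
        if not math.isfinite(norm):
            status = "non-finite"
        elif norm < vanish:
            status = "vanishing"
        elif norm > explode:
            status = "exploding"
        else:
            status = "ok"
        report[name] = (norm, status)
    return report
```

Logging this report every few hundred steps, next to the loss, can help separate the cases the paragraph describes. A flat loss alongside vanishing norms points toward optimization or initialization. Non-finite norms often trace back to an overly high learning rate or bad values in the data.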

Understanding Language Models Without Treating Them as Magic

Language models can feel like a cliff after traditional machine learning. The math looks familiar, but the behavior feels alien. The key is to ground LLMs in concepts you already know. They are neural networks trained with maximum-likelihood objectives over token sequences. Nothing supernatural is happening, even when the outputs feel uncanny.
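The maximum-likelihood objective itself is small enough to write out. The sketch below uses a bigram count model as a deliberately tiny stand-in for a neural network. For a count model, normalized counts are the maximum-likelihood estimate, and the quantity being scored is the average negative log-likelihood of each next token. The `floor` value for unseen pairs is an assumption that stands in for proper smoothing.

```python
import math
from collections import Counter, defaultdict


def fit_bigram(tokens):
    """Maximum-likelihood bigram model: P(next | prev) = count(prev, next) / count(prev, *)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    model = {}
    for prev, nxt_counts in counts.items():
        total = sum(nxt_counts.values())
        model[prev] = {nxt: c / total for nxt, c in nxt_counts.items()}
    return model


def avg_nll(model, tokens, floor=1e-9):
    """Average next-token negative log-likelihood (natural log) over the sequence."""
    pairs = list(zip(tokens, tokens[1:]))
    nll = [-math.log(model.get(prev, {}).get(nxt, floor)) for prev, nxt in pairs]
    return sum(nll) / len(nll)
```

An LLM replaces the count table with a network that conditions on the whole prefix. Its training loss is still this same average next-token cross-entropy, and `math.exp(avg_nll(...))` gives the familiar perplexity.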

Focus first on embeddings and attention. Embeddings translate discrete symbols into continuous spaces where similarity becomes geometric. Attention learns which parts of a sequence matter for predicting the next token. Once these ideas click, transformers stop feeling like black boxes and start looking like very large, very general neural networks.
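Both ideas fit in a short, dependency-free sketch. `cosine` measures how closely two embedding vectors point in the same direction. `attention` implements scaled dot-product attention with an optional causal mask, so each position only sees itself and earlier tokens. For brevity, the sketch feeds the same vectors in as queries, keys, and values. A real transformer first passes them through learned linear projections and runs several heads in parallel.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cosine(u, v):
    """Cosine similarity: geometric closeness of two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def attention(queries, keys, values, causal=True):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) weights a sum of value vectors."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for t, q in enumerate(queries):
        # With causal=True, position t attends only to positions 0..t
        visible = range(t + 1) if causal else range(len(keys))
        scores = [sum(a * b for a, b in zip(q, keys[s])) / math.sqrt(d) for s in visible]
        w = softmax(scores)
        out = [sum(w_s * values[s][j] for w_s, s in zip(w, visible))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(w)
    return outputs, all_weights
```

Each row of the returned weights is a probability distribution over the visible positions. That is what “learning which parts of a sequence matter” means mechanically.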

Fine-tuning and prompting should come later. Before adapting models, understand pretraining objectives, scaling laws, and failure modes like hallucination and bias. Treat language models as probabilistic systems with strengths and blind spots, not oracles. This mindset makes you far more effective when you eventually deploy them.
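One concrete way to see a language model as a probabilistic system is to look at how text is decoded from it. The model outputs a score (logit) for each vocabulary entry, and a decoding strategy turns those scores into a choice. The sketch below shows temperature sampling, which is one common strategy among several; greedy decoding, top-k, and nucleus sampling are others. Any logits you pass in are made-up numbers, not output from a real model.

```python
import math
import random


def softmax_with_temperature(logits, temperature=1.0):
    """Convert logits to probabilities; temperature < 1 sharpens, > 1 flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(logits, temperature=1.0, rng=None):
    """Draw one token index from the temperature-adjusted distribution."""
    rng = rng or random.Random()
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]
```

Lower temperatures concentrate probability on the top-scoring token, and higher ones spread it out. As a result, the same prompt can yield different continuations from run to run. That variability is a property of the system to reason about, not a malfunction.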

Building Projects That Actually Stretch You

Projects are the bridge between knowledge and capability, but only if they are chosen carefully. Reimplementing tutorials teaches familiarity, not fluency. The goal is to encounter problems where the solution is not already written out for you.

Good projects involve trade-offs. Training instability, limited data, computational constraints, or unclear evaluation metrics force you to make decisions. Those decisions are where learning happens. A modest model you understand deeply beats a massive one you copied blindly.

Treat each project as an experiment. Document assumptions, failures, and surprising behaviors. Over time, this creates a personal knowledge base that no course can provide. When you can explain why a model failed and what you would try next, you are no longer just learning machine learning. You are practicing it.
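A lightweight way to build that knowledge base is an append-only log of structured records. The sketch below serializes a dataclass to JSON Lines. The field names are a hypothetical schema, so adapt them to whatever your projects need to remember.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ExperimentRecord:
    """One entry in a personal experiment log (hypothetical schema)."""
    name: str
    hypothesis: str
    config: dict
    assumptions: list = field(default_factory=list)
    metrics: dict = field(default_factory=dict)
    surprises: list = field(default_factory=list)
    next_step: str = ""


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(asdict(r), sort_keys=True) + "\n" for r in records)


def from_jsonl(text):
    """Parse JSON Lines back into records, skipping blank lines."""
    return [ExperimentRecord(**json.loads(line)) for line in text.splitlines() if line.strip()]
```

After each run, append the output of `to_jsonl([record])` to a file, and read the file back with `from_jsonl`. This keeps hypotheses, failures, and next steps searchable long after the raw training logs are gone.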

Conclusion

The path beyond Andrew Ng’s course is not about abandoning fundamentals, but about extending them into systems that learn representations, scale with data, and behave probabilistically in the real world. Neural networks, architectures, and language models are not a separate discipline.

They are the continuation of the same ideas, pushed to their limits. Progress comes from rebuilding intuition layer by layer, confronting messy data, and resisting the temptation to treat modern models as magic. Once you make that shift, the field stops feeling like a moving target and starts feeling like a landscape you can explore with confidence.
