In the event you improvement environments (IDEs) paired with coding brokers, you’ve probably seen code strategies and edits which can be surprisingly correct and related.

This degree of high quality and precision comes from the brokers being grounded in a deep understanding of your codebase.

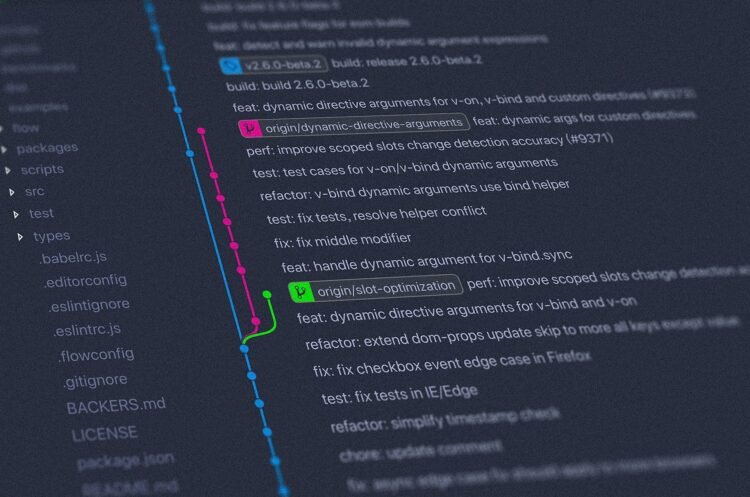

Take Cursor for example. Within the Index & Docs tab, you may see a bit exhibiting that Cursor has already “ingested” and listed your venture’s codebase:

So how will we construct a complete understanding of a codebase within the first place?

At its core, the reply is retrieval-augmented era (RAG), an idea many readers might already be accustomed to. Like most RAG-based programs, these instruments depend on semantic search as a key functionality.

Slightly than organizing information purely by uncooked textual content, the codebase is listed and retrieved based mostly on that means.

This permits natural-language queries to fetch probably the most related codes, which coding brokers can then use to motive, modify, and generate responses extra successfully.

On this article, we discover the RAG pipeline in Cursor that permits coding brokers to do its work utilizing contextual consciousness of the codebase.

Contents

(1) Exploring the Codebase RAG Pipeline

(2) Preserving Codebase Index As much as Date

(3) Wrapping It Up

(1) Exploring the Codebase RAG Pipeline

Let’s discover the steps in Cursor’s RAG pipeline for indexing and contextualizing codebases:

Step 1 — Chunking

In most RAG pipelines, we first must handle knowledge loading, textual content preprocessing, and doc parsing from a number of sources.

Nonetheless, when working with a codebase, a lot of this effort could be prevented. Supply code is already effectively structured and cleanly organized inside a venture repo, permitting us to skip the customary doc parsing and transfer straight into chunking.

On this context, the aim of chunking is to interrupt code into significant, semantically coherent models (e.g., capabilities, lessons, and logical code blocks) moderately than splitting code textual content arbitrarily.

Semantic code chunking ensures that every chunk captures the essence of a selected code part, resulting in extra correct retrieval and helpful era downstream.

To make this extra concrete, let’s have a look at how code chunking works. Think about the next instance Python script (don’t fear about what the code does; the main target right here is on its construction):

After making use of code chunking, the script is cleanly divided into 4 structurally significant and coherent chunks:

As you may see, the chunks are significant and contextually related as a result of they respect code semantics. In different phrases, chunking avoids splitting code in the midst of a logical block except required by dimension constraints.

In apply, it means chunk splits are usually created between capabilities moderately than inside them, and between statements moderately than mid-line.

For the instance above, I used Chonkie, a light-weight open-source framework designed particularly for code chunking. It supplies a easy and sensible method to implement code chunking, amongst many different chunking methods obtainable.

[Optional Reading] Below the Hood of Code Chunking

The code chunking above isn’t unintentional, neither is it achieved by naively splitting code utilizing character counts or common expressions.

It begins with an understanding of the code’s syntax. The method sometimes begins by utilizing a supply code parser (resembling tree-sitter) to transform the uncooked code into an summary syntax tree (AST).

An summary syntax tree is basically a tree-shaped illustration of code that captures its construction, and never the precise textual content. As an alternative of seeing code as a string, the system now sees it as logical models of code like capabilities, lessons, strategies, and blocks.

Think about the next line of Python code:

x = a + bSlightly than being handled as plain textual content, the code is transformed right into a conceptual construction like this:

Project

├── Variable(x)

└── BinaryExpression(+)

├── Variable(a)

└── Variable(b)This structural understanding is what allows efficient code chunking.

Every significant code assemble, resembling a perform, block, or assertion, is represented as a node within the syntax tree.

As an alternative of working on uncooked textual content, the chunking works instantly on the syntax tree.

The chunker will traverse these nodes and teams adjoining ones collectively till a token restrict is reached, producing chunks which can be semantically coherent and size-bounded.

Right here is an instance of a barely extra sophisticated code and the corresponding summary syntax tree:

whereas b != 0:

if a > b:

a := a - b

else:

b := b - a

return

Step 2 — Producing Embeddings and Metadata

As soon as the chunks are ready, an embedding mannequin is utilized to generate a vector illustration (aka embeddings) for every code chunk.

These embeddings seize the semantic that means of the code, enabling retrieval for consumer queries and era prompts to be matched with semantically associated code, even when precise key phrases don’t overlap.

This considerably improves retrieval high quality for duties resembling code understanding, refactoring, and debugging.

Past producing embeddings, one other crucial step is enriching every chunk with related metadata.

For instance, metadata such because the file path and the corresponding code line vary for every chunk is saved alongside its embedding vector.

This metadata not solely supplies necessary context about the place a bit comes from, but in addition allows metadata-based key phrase filtering throughout retrieval.

Step 3 — Enhancing Knowledge Privateness

As with all RAG-based system, knowledge privateness is a main concern. This naturally raises the query of whether or not file paths themselves might comprise delicate info.

In apply, file and listing names usually reveal greater than anticipated, resembling inner venture constructions, product codenames, shopper identifiers, or possession boundaries inside a codebase.

In consequence, file paths are handled as delicate metadata and require cautious dealing with.

To handle this, Cursor applies file path obfuscation (aka path masking) on the shopper aspect earlier than any knowledge is transmitted. Every part of the trail, break up by / and ., is masked utilizing a secret key and a small fastened nonce.

This method hides the precise file and folder names whereas preserving sufficient listing construction to help efficient retrieval and filtering.

For instance, src/funds/invoice_processor.py could also be reworked into a9f3/x72k/qp1m8d.f4.

Be aware: Customers can management which elements of their codebase are shared with Cursor by using a

.cursorignorefile. Cursor makes a finest effort to stop the listed content material from being transmitted or referenced in LLM requests.

Step 4— Storing Embeddings

As soon as generated, the chunk embeddings (with the corresponding metadata) are saved in a vector database utilizing Turbopuffer, which is optimized for quick semantic search throughout hundreds of thousands of code chunks.

Turbopuffer is a serverless, high-performance search engine that mixes vector and full-text search and is backed by low-cost object storage.

To hurry up re-indexing, embeddings are additionally cached in AWS and keyed by the hash of every chunk, permitting unchanged code to be reused throughout subsequent indexing execution.

From an information privateness perspective, it is very important word that solely embeddings and metadata are saved within the cloud. It implies that our unique supply code stays on our native machine and is by no means saved on Cursor servers or in Turbopuffer.

Step 5 — Operating Semantic Search

After we submit a question in Cursor, it’s first transformed right into a vector utilizing the identical embedding mannequin for the chunk embeddings era. It ensures that each queries and code chunks dwell in the identical semantic house.

From the angle of semantic search, the method unfolds as follows:

- Cursor compares the question embedding towards code embeddings within the vector database to determine probably the most semantically related code chunks.

- These candidate chunks are returned by Turbopuffer in ranked order based mostly on their similarity scores.

- Since uncooked supply code isn’t saved within the cloud or the vector database, the search outcomes consist solely of metadata, particularly the masked file paths and corresponding code line ranges.

- By resolving the metadata of decrypted file paths and line ranges, the native shopper is then in a position to retrieve the precise code chunks from the native codebase.

- The retrieved code chunks, in its unique textual content type, are then offered as context alongside the question to the LLM to generate a context-aware response.

As a part of a hybrid search (semantic + key phrase) technique, the coding agent also can use instruments resembling grep and ripgrep to find code snippets based mostly on precise string matches.

OpenCode is a well-liked open-source coding agent framework obtainable within the terminal, IDEs, and desktop environments.

Not like Cursor, it really works instantly on the codebase utilizing textual content search, file matching, and LSP-based navigation moderately than embedding-based semantic search.

In consequence, OpenCode supplies sturdy structural consciousness however lacks the deeper semantic retrieval capabilities present in Cursor.

As a reminder, our unique supply code is not saved on Cursor servers or in Turbopuffer.

Nonetheless, when answering a question, Cursor nonetheless must briefly go the related unique code chunks to the coding agent so it may produce an correct response.

It is because the chunk embeddings can’t be used to instantly reconstruct the unique code.

Plain textual content code is retrieved solely at inference time and just for the precise recordsdata and features wanted. Outdoors of this short-lived inference runtime, the codebase isn’t saved or persevered remotely.

(2) Preserving Codebase Index As much as Date

Overview

Our codebase evolves shortly as we both settle for the agent-generated edits or as we make handbook code modifications.

To maintain semantic retrieval correct, Cursor routinely synchronizes the code index by periodic checks, sometimes each 5 minutes.

Throughout every sync, the system securely detects modifications and refreshes solely the affected recordsdata by eradicating outdated embeddings and producing new ones.

As well as, recordsdata are processed in batches to optimize efficiency and decrease disruption to our improvement workflow.

Utilizing Merkle Timber

So how does Cursor make this work so seamlessly? It scans the opened folder and computes a Merkle tree of file hashes, which permits the system to effectively detect and observe modifications throughout the codebase.

Alright, so what’s a Merkle tree?

It’s a knowledge construction that works like a system of digital cryptographic fingerprints, permitting modifications throughout a big set of recordsdata to be tracked effectively.

Every code file is transformed into a brief fingerprint, and these fingerprints are mixed hierarchically right into a single top-level fingerprint that represents the whole folder.

When a file modifications, solely its fingerprint and a small variety of associated fingerprints have to be up to date.

The Merkle tree of the codebase is synced to the Cursor server, which periodically checks for fingerprint mismatches to determine what has modified.

In consequence, it may pinpoint which recordsdata have been modified and replace solely these recordsdata throughout index synchronization, preserving the method quick and environment friendly.

Dealing with Totally different File Varieties

Right here is how Cursor effectively handles completely different file sorts as a part of the indexing course of:

- New recordsdata: Mechanically added to index

- Modified recordsdata: Previous embeddings eliminated, recent ones created

- Deleted recordsdata: Promptly faraway from index

- Giant/complicated recordsdata: Could also be skipped for efficiency

Be aware: Cursor’s codebase indexing begins routinely everytime you open a workspace.

(3) Wrapping It Up

On this article, we appeared past LLM era to discover the pipeline behind instruments like Cursor that builds the suitable context by RAG.

By chunking code alongside significant boundaries, indexing it effectively, and constantly refreshing that context because the codebase evolves, coding brokers are in a position to ship way more related and dependable strategies.