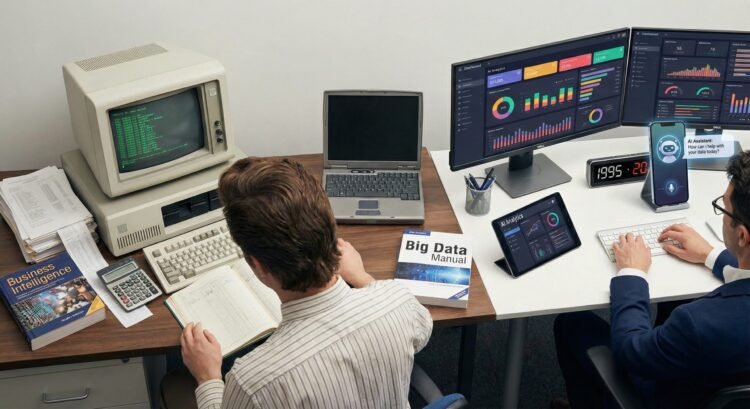

I’ve been working within the Analytics area for over 20 years. Again then, it was not referred to as “analytics”, it was “Enterprise Intelligence” and even “Resolution Help Methods” in older instances. The phrases change, from knowledge warehouses to Large Knowledge, to lakehouses, and now with AI, the essence and the everlasting promise of self-service Analytics stays the identical: extracting fact from knowledge to empower customers with out counting on somebody from the information group. AI with out people within the loop? That sounds controversial.

With the appearance of Giant Language Fashions (LLMs), one use case I discover fascinating is growing conversational interfaces to speak with databases (Textual content-to-SQL). The potential right here is immense, promising to democratize knowledge entry throughout organizations.

Nonetheless, for this particular use case, the answer must be binary. It both works or it doesn’t.

An accuracy of 80% and even 90% is, sadly, not sufficient. Giving your end-users an AI analytical software that hallucinates tables or misinterprets filters is not any joke. You can’t compromise on accuracy as a result of it instantly erodes belief. And what occurs when a system loses belief? It won’t be used. Adoption will decline, with out forgetting the catastrophic danger of enterprise choices being made primarily based on the improper knowledge.

The Complexity of the RAG Pipeline

I began my analysis on this matter over one 12 months and a half in the past and it shortly grew to become clear that orchestrating a strong Textual content-to-SQL RAG (Retrieval-Augmented Technology) software will not be trivial. You want a number of elements in your pipeline, working in excellent concord:

- An intent classifier to detect the purpose of the query.

- A vector database to retailer extra context (like enterprise definitions) that the language fashions want.

- An embeddings mannequin to vectorize this extra data.

- A retrieval mechanism for the saved knowledge.

- Entry to the database.

- The power to generate SQL within the particular dialect of the database.

- And the flexibility to consider the outcomes.

This final half, analysis, I consider is usually omitted or handled as an afterthought, however it’s maybe essentially the most essential part for making certain the reliability wanted in an enterprise setting.

BigQuery: A Case Examine in Native AI Integration

Managing this complicated pipeline typically requires integrating a number of platforms. I used to be lately impressed by how BigQuery has launched the merger of Analytics and Generative AI natively of their platform.

You will have the flexibility to work along with your SQL within the BigQuery IDE and use Gen AI instantly with out going to a different platform or product. For instance: you may question the database and the retrieved outcomes will be instantly despatched to Gemini (or by means of Vertex you may also add different fashions). You should use Gemini to categorise intent, create embeddings and retailer them in BigQuery’s vector database capabilities, do a semantic search, and generate SQL.

All of that with just one platform, with out the trouble of managing a number of subscriptions.

After all, like every thing in life, it has its drawbacks.

One of many predominant cons is that BigQuery may not be the most affordable database, and I’ve heard tales of startups the place a single improper question can drain your bank card. It has not occurred to me, however I can relate to how this may occur. One other con could be that you simply get fully locked-in with Google. Possibly that’s not a nasty factor; the identical means we’re all locked in with Gmail. Maybe sooner or later, AI will probably be a commodity, the way in which emails at the moment are.

One other downside is the shortage of granular traceability of the price of the tokens and a form of “mock LLM” for growth; you don’t want to essentially use the true costly LLM at your growth stage.

If you’re okay with the cons above, you get an impressive product that mixes a number of instruments into one single cloud platform which might deal with massive knowledge massively.

I’ve created the next repo which was a part of the Kaggle hackathon, the place I explored these BigQuery native capabilities additional. For more information please go to the repo right here:

https://github.com/garyzava/bigq-ethereum-rag

The Lacking Piece: Rigorous Analysis

Now, going again to eval frameworks. Platforms like BigQuery simplify the structure, however they don’t mechanically clear up the accuracy drawback. I see a number of options on the market, however most of them lack sturdy analysis capabilities.

If we settle for that Textual content-to-SQL should be binary (appropriate or incorrect), we’d like analysis methods that replicate the messy actuality of enterprise knowledge, not the pristine environments of educational or demo datasets.

Evaluating a Textual content-to-SQL system is notoriously tough because of the declarative nature of SQL and the way complicated your database schema is. Does it have hundreds of tables? Are these tables properly documented? In all probability not. Are naming conventions constant throughout all tables?. Two queries can look utterly completely different syntactically (e.g., completely different be a part of orders, aliasing, or use of CTEs) but produce similar outcomes.

To really benchmark your RAG software throughout growth and in manufacturing, you need to use the proper metrics.

Metrics That Matter

Going again to the promise of self-service BI or analytics, this implies the end-user is relying 100% on themselves; sadly, there isn’t any human-in-the-loop or knowledge skilled to validate the outcomes. Due to this, we have to set up an explainable AI or analysis framework with a set of metrics to measure the standard of the generated SQL.

- The Shift to Execution Accuracy (EX): Early benchmarks relied on Precise Match (EM), which in contrast the expected SQL string to the bottom fact. This was deeply flawed, because it penalized legitimate syntactic variations. The trendy normal is Execution Accuracy (EX). This metric executes each the expected SQL and the “Gold” (floor fact) SQL towards the precise database and compares the returned outcome units. This appropriately validates queries no matter how they’re written.

- Targeted Analysis: In enterprise contexts, a question would possibly return additional, non-essential columns (e.g., an ID column used for a be a part of). Strict execution accuracy would possibly mark this as a failure. “Execution-based centered analysis” permits for a extra nuanced comparability, checking if the goal columns and values are appropriate, whereas being extra lenient on extraneous knowledge or row ordering.

- The “Comfortable-F1” Metric: To mitigate the binary nature of Execution Accuracy (the place one improper cell fails your complete take a look at), Comfortable-F1 is more and more used. This metric supplies partial credit score by calculating the overlap between the expected and gold outcomes. If a question returns 99 out of 100 appropriate rows, Comfortable-F1 displays excessive efficiency, whereas EX would return 0. That is essential for debugging.

- LLM-as-a-Decide: Typically execution is not possible (e.g., lacking non-public knowledge, setting errors). In these circumstances, a complicated LLM will be prompted to match the semantic logic of the expected SQL towards the Gold SQL. Whereas much less goal than execution, it correlates extremely with human judgment.

Spider 2.0: The Enterprise Actuality Examine

Presently there are three exceptional analysis frameworks: Spider 2.0, BIRD (BIg Bench for LaRge-scale Database Grounded Textual content-to-SQL) and SynSQL (primarily based on artificial knowledge). Nonetheless, the business has been affected by a false sense of safety created by outdated benchmarks. For years, the business relied on Spider 1.0. It centered on small, clear SQLite databases (averaging fewer than 10 tables). Fashions had been reaching 90%+ accuracy, main many to consider the issue was “solved.”

The framework that I at all times emphasize, which includes these trendy metrics and really checks enterprise readiness, is Spider 2.0.

Spider 2.0 (launched along side ICLR 2025) is a paradigm shift, designed to deal with this “actuality hole” by introducing the complexities that break LLMs in manufacturing:

- Huge Scale: Enterprise schemas are big. Spider 2.0 databases common 812 columns, with some exceeding 3,000. This scale typically exceeds the LLM’s context limits, forcing fashions to make use of “Schema Linking” (retrieval) methods simply to establish the related tables earlier than producing SQL.

- Dialect Variety: Actual corporations use Snowflake, BigQuery, and T-SQL, not simply SQLite. Spider 2.0 enforces dialect range, requiring fashions to grasp particular syntax (e.g., dealing with nested JSON knowledge utilizing UNNEST or FLATTEN).

- Exterior Information: Enterprise logic (just like the definition of “Churn Charge”) resides in documentation or challenge codebases (like DBT), not the schema. Spider 2.0 simulates this by offering exterior information (Markdown, YAML) the mannequin should learn to floor its reasoning.

- The Agentic Workflow: Crucially, Spider 2.0 fashions the workflow of a contemporary knowledge engineer. It strikes past static translation, evaluating the mannequin’s skill to discover the file system, learn documentation, work together with dwell database cases, and debug errors iteratively.

The distinction in problem is stark. Fashions that dominate Spider 1.0 see their success charges drop to 10-20% on the total Spider 2.0 benchmark, highlighting the deficiencies of present LLMs when confronted with real-world complexity.

Conclusion: The Binary Bar for Enterprise Knowledge

The journey from Enterprise Intelligence to AI-driven analytics has been marked by growing abstraction, however the elementary requirement for knowledge integrity stays unchanged. Whereas the promise of Textual content-to-SQL is nearer than ever, we should resist the attract of excessive scores on outdated benchmarks.

Reaching 90% accuracy may be academically attention-grabbing, however within the enterprise, it’s industrially ineffective. The bar is binary: it really works or it breaks belief.

As platforms like BigQuery simplify the combination of AI and knowledge, it’s crucial that we concurrently undertake refined analysis methodologies and rigorous benchmarks like Spider 2.0. Solely by testing towards the messy actuality of enterprise knowledge can we develop Textual content-to-SQL purposes dependable sufficient to guess the enterprise on.

Till subsequent time, I hope you will have discovered this matter as fascinating as I do.

Additional Studying

Spider 2.0: Evaluating Language Fashions on Actual-World Enterprise Textual content-to-SQL Workflows Authors: Fangyu Lei, Jixuan Chen, Yuxiao Ye, et al. Revealed: arXiv (Nov 2024), Accepted to ICLR 2025 (Oral). Hyperlink: https://arxiv.org/abs/2411.07763

Actually, like something with AI these days, this dialog doesn’t have to finish right here. I might love to listen to your inputs and perspective at www.gyza.org