Image by Editor

# Introduction

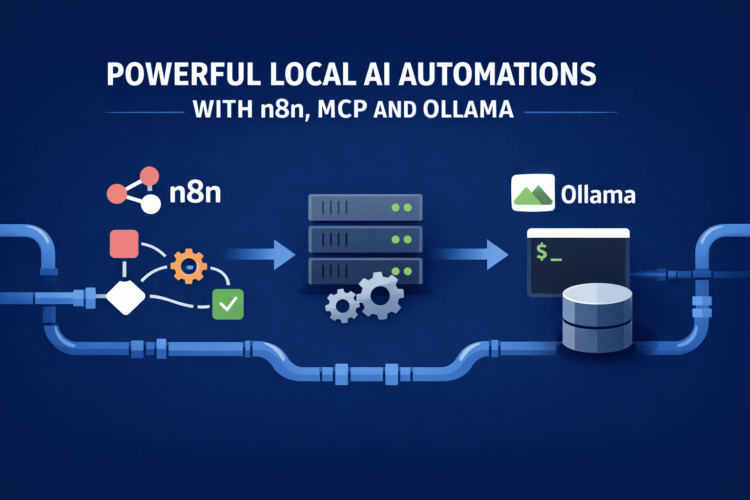

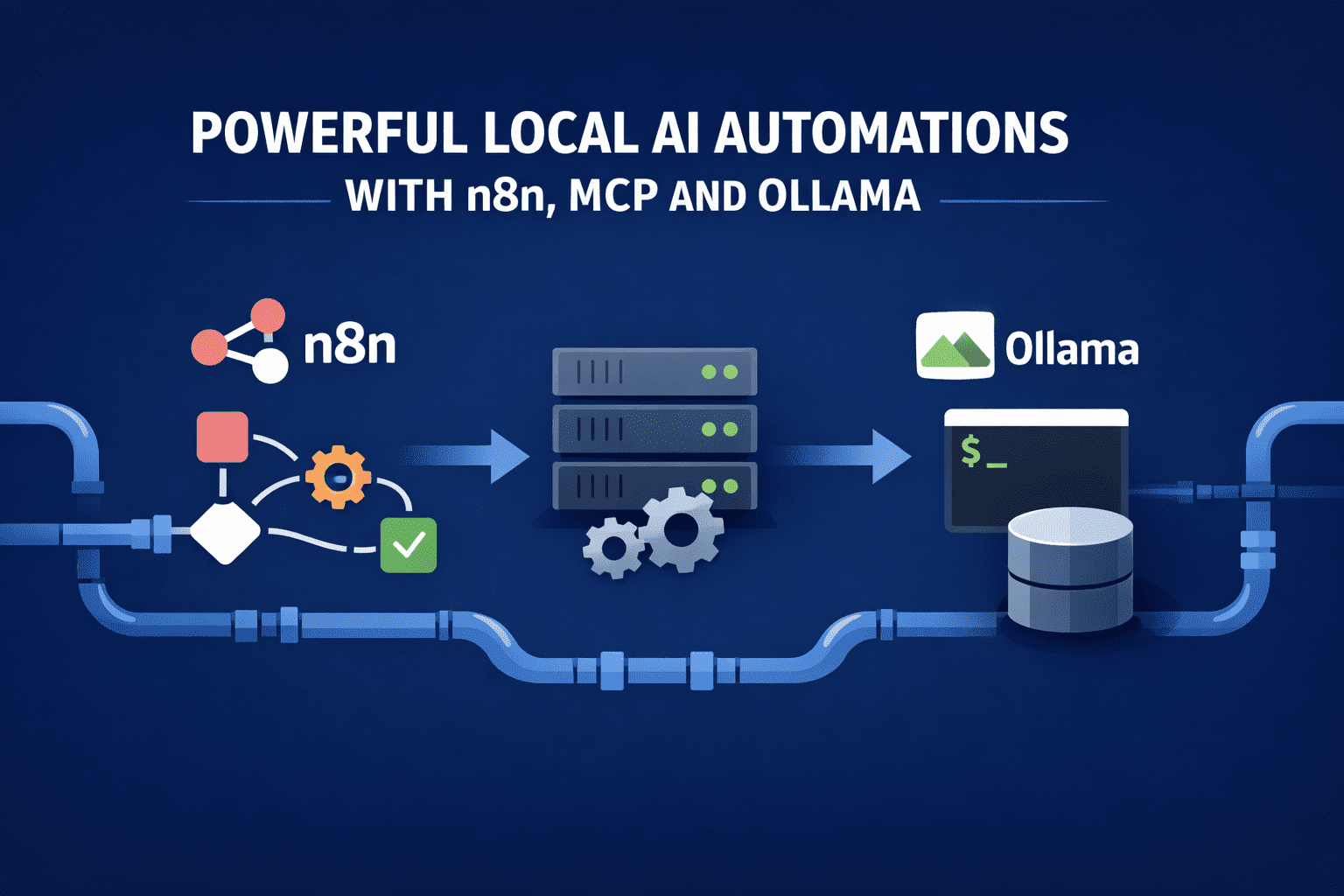

Running large language models (LLMs) locally only matters if they’re doing real work. The value of n8n, the Model Context Protocol (MCP), and Ollama isn’t architectural elegance, but the ability to automate tasks that would otherwise require engineers in the loop.

This stack works when every component has a concrete responsibility: n8n orchestrates, MCP constrains tool usage, and Ollama reasons over local data.

The ultimate goal is to run these automations on a single workstation or small server, replacing fragile scripts and expensive API-based systems.

# Automated Log Triage With Root-Cause Hypothesis Generation

This automation begins with n8n ingesting application logs every five minutes from a local directory or Kafka consumer. n8n performs deterministic preprocessing: grouping by service, deduplicating repeated stack traces, and extracting timestamps and error codes. Only the condensed log bundle is passed to Ollama.
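A minimal sketch of the deterministic preprocessing step, written as a standalone Python script that n8n could invoke. It assumes JSON-lines logs with hypothetical `ts`, `service`, `error_code`, and `stack` fields; your log schema will differ.

```python
import hashlib
import json
from collections import defaultdict


def fingerprint(rec: dict) -> str:
    """Short, stable hash used to collapse repeated stack traces (falls back to the error code)."""
    basis = rec.get("stack") or rec.get("error_code") or ""
    return hashlib.sha256(basis.strip().encode("utf-8")).hexdigest()[:12]


def condense(lines: list[str]) -> dict[str, list[dict]]:
    """Group JSON-lines log records by service and deduplicate repeated traces.

    Assumes hypothetical fields: ts (ISO-8601, same format everywhere), service,
    error_code, and an optional stack string.
    """
    bundle = defaultdict(dict)
    for line in lines:
        rec = json.loads(line)
        groups = bundle[rec["service"]]
        entry = groups.setdefault(fingerprint(rec), {
            "error_code": rec.get("error_code"),
            "first_seen": rec["ts"],
            "last_seen": rec["ts"],
            "count": 0,
            "stack": rec.get("stack", ""),
        })
        entry["count"] += 1
        # Uniformly formatted ISO-8601 strings sort chronologically.
        entry["first_seen"] = min(entry["first_seen"], rec["ts"])
        entry["last_seen"] = max(entry["last_seen"], rec["ts"])
    return {service: list(groups.values()) for service, groups in bundle.items()}


logs = [
    '{"ts": "2024-05-01T12:00:05Z", "service": "payments", "error_code": "E42", "stack": "KeyError: order_id"}',
    '{"ts": "2024-05-01T12:00:01Z", "service": "payments", "error_code": "E42", "stack": "KeyError: order_id"}',
    '{"ts": "2024-05-01T12:01:00Z", "service": "auth", "error_code": "E7"}',
]
print(json.dumps(condense(logs), indent=2))
```

Only the output of `condense`, not the raw lines, would be placed in the prompt.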

The local model receives a tightly scoped prompt asking it to cluster failures, identify the first causal event, and generate two to three plausible root-cause hypotheses. MCP exposes a single tool: query_recent_deployments. When the model requests it, n8n executes the query against a deployment database and returns the result. The model then updates its hypotheses and outputs structured JSON.
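The single-tool contract might look like the following sketch. The descriptor mirrors the `name`/`description`/`inputSchema` shape that MCP servers advertise for tools, and the handler returns a result shaped like an MCP `tools/call` response (text `content` plus an `isError` flag). The table layout, argument names, and the in-memory SQLite stand-in for the deployment database are assumptions; a real setup would serve this through an MCP server or whatever MCP integration your n8n version provides, not a bare function.

```python
import json
import sqlite3

# Descriptor shaped like an entry in an MCP tools/list response; fields are illustrative.
QUERY_RECENT_DEPLOYMENTS = {
    "name": "query_recent_deployments",
    "description": "List deployments of a service at or after an ISO-8601 timestamp.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "service": {"type": "string"},
            "since": {"type": "string", "description": "ISO-8601 timestamp"},
        },
        "required": ["service", "since"],
    },
}


def query_recent_deployments(conn: sqlite3.Connection, service: str, since: str) -> list[dict]:
    # Parameterized query: the model supplies values, never SQL text.
    rows = conn.execute(
        "SELECT service, version, deployed_at FROM deployments "
        "WHERE service = ? AND deployed_at >= ? ORDER BY deployed_at",
        (service, since),
    ).fetchall()
    return [dict(zip(("service", "version", "deployed_at"), row)) for row in rows]


def handle_tool_call(conn: sqlite3.Connection, name: str, arguments: dict) -> dict:
    """Execute one tool call and wrap the result like an MCP tools/call result."""
    if name != QUERY_RECENT_DEPLOYMENTS["name"]:
        # Anything outside the single exposed tool is refused.
        return {"content": [{"type": "text", "text": f"unknown tool: {name}"}], "isError": True}
    missing = [k for k in QUERY_RECENT_DEPLOYMENTS["inputSchema"]["required"] if k not in arguments]
    if missing:
        return {"content": [{"type": "text", "text": f"missing arguments: {missing}"}], "isError": True}
    result = query_recent_deployments(conn, arguments["service"], arguments["since"])
    return {"content": [{"type": "text", "text": json.dumps(result)}], "isError": False}


# Usage with an in-memory stand-in for the deployment database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE deployments (service TEXT, version TEXT, deployed_at TEXT)")
conn.executemany(
    "INSERT INTO deployments VALUES (?, ?, ?)",
    [("payments", "1.4.0", "2024-05-01T11:50:00Z"), ("payments", "1.3.9", "2024-04-30T09:00:00Z")],
)
reply = handle_tool_call(conn, "query_recent_deployments",
                         {"service": "payments", "since": "2024-05-01T00:00:00Z"})
print(reply["content"][0]["text"])
```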

n8n stores the output, posts a summary to an internal Slack channel, and opens a ticket only when confidence exceeds a defined threshold. No cloud LLM is involved, and the model never sees raw logs without preprocessing.
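Because the model returns structured JSON, n8n can validate it before acting on it. A sketch of that gate, assuming a hypothetical `{"hypotheses": [{"cause": ..., "confidence": ...}]}` shape and an arbitrary 0.75 threshold:

```python
import json

TICKET_THRESHOLD = 0.75  # hypothetical value; tune per team


def parse_triage(raw: str) -> list[dict]:
    """Validate the model's JSON; raise ValueError if it does not match the expected shape."""
    data = json.loads(raw)  # json.JSONDecodeError subclasses ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    hypotheses = data.get("hypotheses")
    if not isinstance(hypotheses, list) or not 2 <= len(hypotheses) <= 3:
        raise ValueError("expected two to three hypotheses")
    for h in hypotheses:
        conf = h.get("confidence") if isinstance(h, dict) else None
        if not isinstance(conf, (int, float)) or not 0.0 <= conf <= 1.0 or not isinstance(h.get("cause"), str):
            raise ValueError(f"malformed hypothesis: {h!r}")
    return hypotheses


def route(raw: str, threshold: float = TICKET_THRESHOLD) -> str:
    """Decide what n8n does with one triage result."""
    try:
        hypotheses = parse_triage(raw)
    except ValueError:
        return "reject"  # e.g. re-prompt the model or alert on-call
    top = max(h["confidence"] for h in hypotheses)
    # A Slack summary is always posted; a ticket only when confidence exceeds the threshold.
    return "slack_and_ticket" if top > threshold else "slack_only"
```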

# Continuous Data Quality Monitoring For Analytics Pipelines

n8n watches incoming batch tables in a local warehouse and runs schema diffs against historical baselines. When drift is detected, the workflow sends a compact description of the change to Ollama rather than the full dataset.
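The schema-diff step can stay entirely deterministic. This sketch uses SQLite as a stand-in for the local warehouse (an assumption; other engines expose the same information through their own catalog queries) and produces the kind of compact change description that would be sent to the model instead of rows.

```python
import json
import sqlite3


def current_schema(conn: sqlite3.Connection, table: str) -> dict[str, str]:
    """Column name -> declared type. `table` comes from workflow config, never from the model."""
    # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
    return {row[1]: row[2] for row in conn.execute(f"PRAGMA table_info({table})")}


def schema_diff(baseline: dict[str, str], current: dict[str, str]) -> dict:
    """Compact description of schema drift, suitable for a prompt."""
    shared = set(baseline) & set(current)
    return {
        "added": {c: current[c] for c in sorted(set(current) - set(baseline))},
        "removed": {c: baseline[c] for c in sorted(set(baseline) - set(current))},
        "type_changed": {
            c: [baseline[c], current[c]]
            for c in sorted(shared)
            if baseline[c].upper() != current[c].upper()
        },
    }


baseline = {"order_id": "INTEGER", "amount": "REAL", "currency": "TEXT"}
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount TEXT, region TEXT)")
diff = schema_diff(baseline, current_schema(conn, "orders"))
if any(diff.values()):
    print(json.dumps(diff))  # only this summary would be sent to Ollama
```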

The model is instructed to determine whether the drift is benign, suspicious, or breaking. MCP exposes two tools: sample_rows and compute_column_stats. The model selectively requests these tools, inspects the returned values, and produces a classification along with a human-readable explanation.
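A sketch of the two tools, again with SQLite standing in for the warehouse. The row cap, the column check, and the return shapes are assumptions; the point is that each tool returns a small, bounded answer and validates whatever the model passes in.

```python
import sqlite3
import statistics

MAX_SAMPLE = 20  # hypothetical cap on how many rows the model may see


def _columns(conn: sqlite3.Connection, table: str) -> set[str]:
    return {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}


def sample_rows(conn: sqlite3.Connection, table: str, limit: int = 5) -> list[tuple]:
    """Tool: return a small, capped sample of rows (table is fixed by the workflow)."""
    limit = max(1, min(int(limit), MAX_SAMPLE))
    return conn.execute(f"SELECT * FROM {table} LIMIT ?", (limit,)).fetchall()


def compute_column_stats(conn: sqlite3.Connection, table: str, column: str) -> dict:
    """Tool: summary statistics for one column chosen by the model."""
    if column not in _columns(conn, table):
        # Reject names that are not real columns before they reach SQL.
        raise ValueError(f"unknown column: {column!r}")
    values = [r[0] for r in conn.execute(f'SELECT "{column}" FROM {table}')]
    present = [v for v in values if v is not None]
    stats = {"rows": len(values), "nulls": len(values) - len(present), "distinct": len(set(present))}
    if present and all(isinstance(v, (int, float)) for v in present):
        stats.update(min=min(present), max=max(present), mean=statistics.fmean(present))
    return stats


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 20.0), (3, None)])
print(compute_column_stats(conn, "orders", "amount"))
```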

If the drift is assessed as breaking, n8n routinely pauses downstream pipelines and annotates the incident with the mannequin’s reasoning. Over time, groups accumulate a searchable archive of previous schema adjustments and choices, all generated domestically.

# Autonomous Dataset Labeling And Validation Loops For Machine Learning Pipelines

This automation is designed for teams training models on continuously arriving data, where manual labeling becomes the bottleneck. n8n monitors a local data drop location or database table and batches new, unlabeled records at fixed intervals.

Each batch is preprocessed deterministically to remove duplicates, normalize fields, and attach minimal metadata before inference ever happens.
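The preprocessing in this step is plain, deterministic code. One possible version, assuming records arrive as flat JSON-like dicts; the normalization rules and the metadata fields (`source`, `ingested_at`) are illustrative choices:

```python
import hashlib
import json
from datetime import datetime, timezone


def normalize(record: dict) -> dict:
    """Lowercase/trim keys and collapse whitespace in string values."""
    return {
        key.strip().lower(): " ".join(value.split()) if isinstance(value, str) else value
        for key, value in record.items()
    }


def prepare_batch(records: list[dict], source: str) -> list[dict]:
    """Deduplicate normalized records and attach minimal metadata."""
    seen: set[str] = set()
    batch = []
    ingested_at = datetime.now(timezone.utc).isoformat()
    for record in records:
        data = normalize(record)
        # Hash of the canonical JSON form identifies exact duplicates.
        digest = hashlib.sha256(json.dumps(data, sort_keys=True).encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        batch.append({"id": digest[:16], "data": data,
                      "meta": {"source": source, "ingested_at": ingested_at}})
    return batch


raw = [{"Text": "  refund   not received "}, {"text": "refund not received"}, {"text": "app crashes on login"}]
batch = prepare_batch(raw, source="drop_dir")
print(len(batch))
```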

Ollama receives only the cleaned batch and is instructed to generate labels with confidence scores, not free text. MCP exposes a constrained toolset so the model can validate its own outputs against historical distributions and sampling checks before anything is accepted. n8n then decides whether the labels are auto-approved, partially approved, or routed to humans.
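The three outcomes (auto-approved, partially approved, routed to humans) can be decided mechanically once the labels are structured. A sketch, assuming the model returns a JSON list of `{id, label, confidence}` objects; the label set and the 0.8 threshold are placeholders:

```python
import json

ALLOWED_LABELS = {"billing", "bug", "feature_request"}  # hypothetical label schema
CONFIDENCE_THRESHOLD = 0.8  # hypothetical


def _valid(item, batch_ids: set[str], seen: set[str]) -> bool:
    return (
        isinstance(item, dict)
        and isinstance(item.get("id"), str)
        and item["id"] in batch_ids
        and item["id"] not in seen
        and isinstance(item.get("label"), str)
        and item["label"] in ALLOWED_LABELS
        and isinstance(item.get("confidence"), (int, float))
        and 0.0 <= item["confidence"] <= 1.0
    )


def triage_labels(raw: str, batch_ids: set[str]) -> dict:
    """Split model labels into auto-accepted and human-review sets."""
    try:
        items = json.loads(raw)
    except json.JSONDecodeError:
        items = []
    if not isinstance(items, list):
        items = []
    accepted, review, seen = [], [], set()
    for item in items:
        if not _valid(item, batch_ids, seen):
            continue  # invalid or duplicate entries are skipped; the record goes to review below
        seen.add(item["id"])
        (accepted if item["confidence"] >= CONFIDENCE_THRESHOLD else review).append(item["id"])
    review.extend(sorted(batch_ids - seen))  # records the model skipped or labelled invalidly
    if not review:
        status = "auto_approved"
    elif not accepted:
        status = "routed_to_humans"
    else:
        status = "partially_approved"
    return {"status": status, "accepted": accepted, "review": review}


out = triage_labels(
    '[{"id": "a", "label": "bug", "confidence": 0.95},'
    ' {"id": "b", "label": "billing", "confidence": 0.55},'
    ' {"id": "c", "label": "spam", "confidence": 0.99}]',
    batch_ids={"a", "b", "c"},
)
print(out)
```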

Key components of the loop:

- Initial label generation: The local model assigns labels and confidence values based strictly on the provided schema and examples, producing structured JSON that n8n can validate without interpretation.

- Statistical drift verification: Through an MCP tool, the model requests label distribution stats from previous batches and flags deviations that suggest concept drift or misclassification.

- Low-confidence escalation: n8n automatically routes samples below a confidence threshold to human reviewers while accepting the rest, keeping throughput high without sacrificing accuracy.

- Feedback re-injection: Human corrections are fed back into the system as new reference examples, which the model can retrieve in future runs through MCP.
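For the drift-verification step, the MCP tool only needs to return historical label counts; the comparison itself can be a simple statistic. This sketch uses total variation distance, one reasonable choice among several (the article does not name a metric), with an arbitrary 0.2 alert threshold:

```python
from collections import Counter


def distribution(labels: list[str]) -> dict[str, float]:
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()} if total else {}


def total_variation(p: dict[str, float], q: dict[str, float]) -> float:
    """Half the L1 distance between two discrete distributions (0 = identical, 1 = disjoint)."""
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))


def drift_report(current: list[str], history: list[str], threshold: float = 0.2) -> dict:
    p, q = distribution(current), distribution(history)
    tvd = total_variation(p, q)
    return {
        "tvd": round(tvd, 3),
        "drift_suspected": tvd > threshold,
        "new_labels": sorted(set(p) - set(q)),  # labels never seen in earlier batches
    }


history = ["bug"] * 50 + ["billing"] * 50
print(drift_report(["bug"] * 9 + ["billing"], history))
```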

This creates a closed-loop labeling system that scales locally, improves over time, and removes humans from the critical path unless they’re genuinely needed.

# Self-Updating Research Briefs From Internal And External Sources

This automation runs on a nightly schedule. n8n pulls new commits from selected repositories, recent internal docs, and a curated set of saved articles. Each item is chunked and embedded locally.
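A sketch of the chunk-and-embed step. The character-window chunker is deliberately simple (token-aware splitting is common in practice), and `embed` assumes a local Ollama server on its default port exposing the `/api/embed` endpoint, with an embedding model such as `nomic-embed-text` already pulled; adjust both to your install.

```python
import json
import urllib.request


def chunk_text(text: str, size: int = 800, overlap: int = 100) -> list[str]:
    """Split text into overlapping character windows."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    chunks, start = [], 0
    while start < len(text):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break
        start += size - overlap  # step forward, keeping `overlap` characters of context
    return chunks


def embed(chunks: list[str], model: str = "nomic-embed-text",
          url: str = "http://localhost:11434/api/embed") -> list[list[float]]:
    """Embed chunks with a local Ollama server (model name and URL are assumptions)."""
    payload = json.dumps({"model": model, "input": chunks}).encode("utf-8")
    req = urllib.request.Request(url, data=payload, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["embeddings"]
```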

Ollama, whether run through the terminal or a GUI, is prompted to update an existing research brief rather than create a new one. MCP exposes retrieval tools that allow the model to query prior summaries and embeddings. The model identifies what has changed, rewrites only the affected sections, and flags contradictions or outdated claims.
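The retrieval tool can be as small as a cosine-similarity search over stored vectors. The store layout (`id`, `text`, `vector`) and the tool name `search_prior_summaries` are hypothetical; a larger corpus would more likely sit behind a vector index.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search_prior_summaries(query_vec: list[float], store: list[dict], k: int = 3) -> list[dict]:
    """Hypothetical MCP tool body: the k stored summaries most similar to the query."""
    scored = [(cosine(query_vec, entry["vector"]), entry) for entry in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [{"id": e["id"], "text": e["text"], "score": round(s, 4)} for s, e in scored[:k]]


store = [
    {"id": "brief-auth", "text": "Auth service moved to OIDC.", "vector": [1.0, 0.0]},
    {"id": "brief-billing", "text": "Billing batch runs nightly.", "vector": [0.0, 1.0]},
    {"id": "brief-mixed", "text": "Auth tokens now expire hourly.", "vector": [0.7, 0.7]},
]
print([hit["id"] for hit in search_prior_summaries([1.0, 0.1], store, k=2)])
```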

n8n commits the updated brief back to a repository and logs a diff. The result is a living document that evolves without manual rewrites, powered entirely by local inference.

# Automated Incident Postmortems With Evidence Linking

When an incident is closed, n8n assembles timelines from alerts, logs, and deployment events. Instead of asking a model to write a narrative blindly, the workflow feeds the timeline in strict chronological blocks.

The model is instructed to produce a postmortem with explicit citations to timeline events. MCP exposes a fetch_event_details tool that the model can call when context is missing. Each paragraph in the final report references concrete evidence IDs.

n8n rejects any output that lacks citations and re-prompts the model. The final document is consistent, auditable, and generated without exposing operational data externally.
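The reject-and-re-prompt rule is easy to enforce mechanically. A sketch, assuming paragraphs are separated by blank lines and evidence IDs look like `[EVT-17]` (both assumptions); it also catches citations to IDs that are not in the assembled timeline:

```python
import re

CITATION = re.compile(r"\[(EVT-\d+)\]")  # hypothetical evidence-ID format, e.g. [EVT-17]


def citation_problems(report: str, known_ids: set[str]) -> list[str]:
    """Return reasons to reject the draft; an empty list means it passes."""
    problems = []
    paragraphs = [p.strip() for p in report.split("\n\n") if p.strip()]
    for n, para in enumerate(paragraphs, start=1):
        if para.startswith("#"):
            continue  # headings need no citation
        cited = CITATION.findall(para)
        if not cited:
            problems.append(f"paragraph {n} has no citation")
        problems.extend(f"paragraph {n} cites unknown event {eid}" for eid in cited if eid not in known_ids)
    return problems


def reprompt_message(problems: list[str]) -> str:
    """Text n8n could send back to the model when the draft is rejected."""
    return (
        "Revise the postmortem. Every paragraph must cite timeline events as [EVT-n] "
        "using only IDs from the timeline. Problems: " + "; ".join(problems)
    )


draft = "Release 1.4.0 reached production at 11:50 [EVT-1].\n\nErrors spiked [EVT-2] [EVT-9].\n\nWe rolled back."
print(citation_problems(draft, known_ids={"EVT-1", "EVT-2"}))
```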

# Local Contract And Policy Review Automation

Legal and compliance teams run this automation on internal machines. n8n ingests new contract drafts and policy updates, strips formatting, and segments clauses.

Ollama is asked to compare each clause against an approved baseline and flag deviations. MCP exposes a retrieve_standard_clause tool, allowing the model to pull canonical language. The output includes exact clause references, risk level, and suggested revisions.

n8n routes high-risk findings to human reviewers and auto-approves unchanged sections. Sensitive documents never leave the local environment.
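Segmenting clauses and auto-approving unchanged ones is deterministic and can happen before the model sees anything. A sketch, assuming plain-text drafts whose clauses start a line with a number such as `1.` or `2.3` followed by a capitalized word, and a baseline stored as `{clause_number: text}`; the `difflib` similarity score is only extra context for the model and reviewers, not a risk rating.

```python
import difflib
import re

# A clause starts a line with "1.", "2.3", etc., followed by a capitalized word.
CLAUSE_START = re.compile(r"^(\d+(?:\.\d+)*)\.?\s+(?=[A-Z])", re.MULTILINE)


def segment_clauses(text: str) -> dict[str, str]:
    """Map clause number -> whitespace-normalized clause body."""
    matches = list(CLAUSE_START.finditer(text))
    clauses = {}
    for i, m in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        clauses[m.group(1)] = " ".join(text[m.end():end].split())
    return clauses


def partition(draft: dict[str, str], baseline: dict[str, str]) -> dict:
    """Auto-approve clauses identical to the baseline; everything else goes to the model."""
    unchanged, changed = [], []
    for cid, body in draft.items():
        base = " ".join(baseline.get(cid, "").split())
        if cid in baseline and base == body:
            unchanged.append(cid)
        else:
            similarity = difflib.SequenceMatcher(None, base, body).ratio()
            changed.append({"clause": cid, "similarity": round(similarity, 2), "text": body})
    return {"auto_approved": unchanged, "needs_review": changed}


draft_text = "1. Payment is due within 30 days.\n2. Either party may terminate with 90 days notice.\n"
baseline = {"1": "Payment is due within 30 days.", "2": "Either party may terminate with 30 days notice."}
result = partition(segment_clauses(draft_text), baseline)
print(result["auto_approved"], [c["clause"] for c in result["needs_review"]])
```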

# Tool-Using Code Review For Internal Repositories

This workflow triggers on pull requests. n8n extracts diffs and test results, then sends them to Ollama with instructions to focus only on logic changes and potential failure modes.

Through MCP, the model can call run_static_analysis and query_test_failures. It uses these results to ground its review comments. n8n posts inline comments only when the model identifies concrete, reproducible issues.
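The rule that n8n posts only evidence-backed comments can be expressed as a filter over the model's findings. A sketch, assuming the model attaches an `evidence` object to each finding and that the two tools return `(file, line)` hits and failing test names respectively (hypothetical shapes):

```python
def postable_comments(findings: list[dict], analysis_hits: set[tuple[str, int]],
                      failing_tests: set[str]) -> list[dict]:
    """Keep only findings backed by tool output.

    analysis_hits: (file, line) pairs reported by run_static_analysis (assumed shape).
    failing_tests: test names reported by query_test_failures (assumed shape).
    """
    kept = []
    for f in findings:
        evidence = f.get("evidence") if isinstance(f.get("evidence"), dict) else {}
        kind = evidence.get("type")
        if kind == "static_analysis" and (f.get("file"), f.get("line")) in analysis_hits:
            kept.append(f)
        elif kind == "test_failure" and isinstance(evidence.get("ref"), str) and evidence["ref"] in failing_tests:
            kept.append(f)
        # Anything else (style opinions, unsupported claims) is dropped.
    return kept


findings = [
    {"file": "billing.py", "line": 42, "message": "Possible None dereference", "evidence": {"type": "static_analysis"}},
    {"file": "billing.py", "line": 10, "message": "Prefer f-strings", "evidence": None},
    {"file": "api.py", "line": 7, "message": "Breaks refund flow",
     "evidence": {"type": "test_failure", "ref": "test_refund_ok"}},
]
kept = postable_comments(findings, analysis_hits={("billing.py", 42)}, failing_tests={"test_refund_partial"})
print([f["message"] for f in kept])
```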

The result is a code reviewer that doesn’t hallucinate style opinions and only comments when evidence supports the claim.

# Closing Thoughts

Each example limits the model’s scope, exposes only necessary tools, and relies on n8n for enforcement. Local inference makes these workflows fast enough to run continuously and cheap enough to keep always on. More importantly, it keeps reasoning close to the data and execution under strict control, which is where it belongs.

This is where n8n, MCP, and Ollama stop being infrastructure experiments and start functioning as a practical automation stack.

Nahla Davies is a software developer and tech writer. Before devoting her work full time to technical writing, she managed, among other intriguing things, to serve as a lead programmer at an Inc. 5,000 experiential branding organization whose clients include Samsung, Time Warner, Netflix, and Sony.

Picture by Editor

# Introduction

Working massive language fashions (LLMs) domestically solely issues if they’re doing actual work. The worth of n8n, the Mannequin Context Protocol (MCP), and Ollama isn’t architectural class, however the potential to automate duties that may in any other case require engineers within the loop.

This stack works when each element has a concrete duty: n8n orchestrates, MCP constrains software utilization, and Ollama causes over native information.

The final word purpose is to run these automations on a single workstation or small server, changing fragile scripts and costly API-based techniques.

# Automated Log Triage With Root-Trigger Speculation Technology

This automation begins with n8n ingesting utility logs each 5 minutes from an area listing or Kafka shopper. n8n performs deterministic preprocessing: grouping by service, deduplicating repeated stack traces, and extracting timestamps and error codes. Solely the condensed log bundle is handed to Ollama.

The native mannequin receives a tightly scoped immediate asking it to cluster failures, establish the primary causal occasion, and generate two to 3 believable root-cause hypotheses. MCP exposes a single software: query_recent_deployments. When the mannequin requests it, n8n executes the question in opposition to a deployment database and returns the outcome. The mannequin then updates its hypotheses and outputs structured JSON.

n8n shops the output, posts a abstract to an inner Slack channel, and opens a ticket solely when confidence exceeds an outlined threshold. No cloud LLM is concerned, and the mannequin by no means sees uncooked logs with out preprocessing.

# Steady Knowledge High quality Monitoring For Analytics Pipelines

n8n watches incoming batch tables in an area warehouse and runs schema diffs in opposition to historic baselines. When drift is detected, the workflow sends a compact description of the change to Ollama somewhat than the complete dataset.

The mannequin is instructed to find out whether or not the drift is benign, suspicious, or breaking. MCP exposes two instruments: sample_rows and compute_column_stats. The mannequin selectively requests these instruments, inspects returned values, and produces a classification together with a human-readable clarification.

If the drift is assessed as breaking, n8n routinely pauses downstream pipelines and annotates the incident with the mannequin’s reasoning. Over time, groups accumulate a searchable archive of previous schema adjustments and choices, all generated domestically.

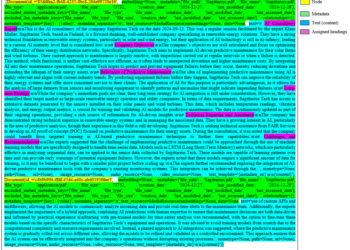

# Autonomous Dataset Labeling And Validation Loops For Machine Studying Pipelines

This automation is designed for groups coaching fashions on repeatedly arriving information the place handbook labeling turns into the bottleneck. n8n screens an area information drop location or database desk and batches new, unlabeled data at fastened intervals.

Every batch is preprocessed deterministically to take away duplicates, normalize fields, and fasten minimal metadata earlier than inference ever occurs.

Ollama receives solely the cleaned batch and is instructed to generate labels with confidence scores, not free textual content. MCP exposes a constrained toolset so the mannequin can validate its personal outputs in opposition to historic distributions and sampling checks earlier than something is accepted. n8n then decides whether or not the labels are auto-approved, partially permitted, or routed to people.

Key elements of the loop:

- Preliminary label technology: The native mannequin assigns labels and confidence values based mostly strictly on the offered schema and examples, producing structured JSON that n8n can validate with out interpretation.

- Statistical drift verification: Via an MCP software, the mannequin requests label distribution stats from earlier batches and flags deviations that counsel idea drift or misclassification.

- Low-confidence escalation: n8n routinely routes samples under a confidence threshold to human reviewers whereas accepting the remaining, conserving throughput excessive with out sacrificing accuracy.

- Suggestions re-injection: Human corrections are fed again into the system as new reference examples, which the mannequin can retrieve in future runs via MCP.

This creates a closed-loop labeling system that scales domestically, improves over time, and removes people from the important path until they’re genuinely wanted.

# Self-Updating Analysis Briefs From Inside And Exterior Sources

This automation runs on a nightly schedule. n8n pulls new commits from chosen repositories, current inner docs, and a curated set of saved articles. Every merchandise is chunked and embedded domestically.

Ollama, whether or not run via the terminal or a GUI, is prompted to replace an present analysis transient somewhat than create a brand new one. MCP exposes retrieval instruments that enable the mannequin to question prior summaries and embeddings. The mannequin identifies what has modified, rewrites solely the affected sections, and flags contradictions or outdated claims.

n8n commits the up to date transient again to a repository and logs a diff. The result’s a dwelling doc that evolves with out handbook rewrites, powered solely by native inference.

# Automated Incident Postmortems With Proof Linking

When an incident is closed, n8n assembles timelines from alerts, logs, and deployment occasions. As an alternative of asking a mannequin to put in writing a story blindly, the workflow feeds the timeline in strict chronological blocks.

The mannequin is instructed to supply a postmortem with specific citations to timeline occasions. MCP exposes a fetch_event_details software that the mannequin can name when context is lacking. Every paragraph within the closing report references concrete proof IDs.

n8n rejects any output that lacks citations and re-prompts the mannequin. The ultimate doc is constant, auditable, and generated with out exposing operational information externally.

# Native Contract And Coverage Evaluate Automation

Authorized and compliance groups run this automation on inner machines. n8n ingests new contract drafts and coverage updates, strips formatting, and segments clauses.

Ollama is requested to check every clause in opposition to an permitted baseline and flag deviations. MCP exposes a retrieve_standard_clause software, permitting the mannequin to tug canonical language. The output contains actual clause references, threat degree, and advised revisions.

n8n routes high-risk findings to human reviewers and auto-approves unchanged sections. Delicate paperwork by no means depart the native setting.

# Device-Utilizing Code Evaluate For Inside Repositories

This workflow triggers on pull requests. n8n extracts diffs and take a look at outcomes, then sends them to Ollama with directions to focus solely on logic adjustments and potential failure modes.

Via MCP, the mannequin can name run_static_analysis and query_test_failures. It makes use of these outcomes to floor its evaluate feedback. n8n posts inline feedback solely when the mannequin identifies concrete, reproducible points.

The result’s a code reviewer that doesn’t hallucinate fashion opinions and solely feedback when proof helps the declare.

# Closing Ideas

Every instance limits the mannequin’s scope, exposes solely vital instruments, and depends on n8n for enforcement. Native inference makes these workflows quick sufficient to run repeatedly and low-cost sufficient to maintain all the time on. Extra importantly, it retains reasoning near the information and execution beneath strict management — the place it belongs.

That is the place n8n, MCP, and Ollama cease being infrastructure experiments — and begin functioning as a sensible automation stack.

Nahla Davies is a software program developer and tech author. Earlier than devoting her work full time to technical writing, she managed—amongst different intriguing issues—to function a lead programmer at an Inc. 5,000 experiential branding group whose purchasers embrace Samsung, Time Warner, Netflix, and Sony.