Databricks has shaken the data market once again. The company launched a free edition of the Databricks platform with all of the functionalities included. It’s a great resource for learning and testing, to say the least.

With that in mind, I created an end-to-end project to help you learn the fundamentals of the main resources within Databricks.

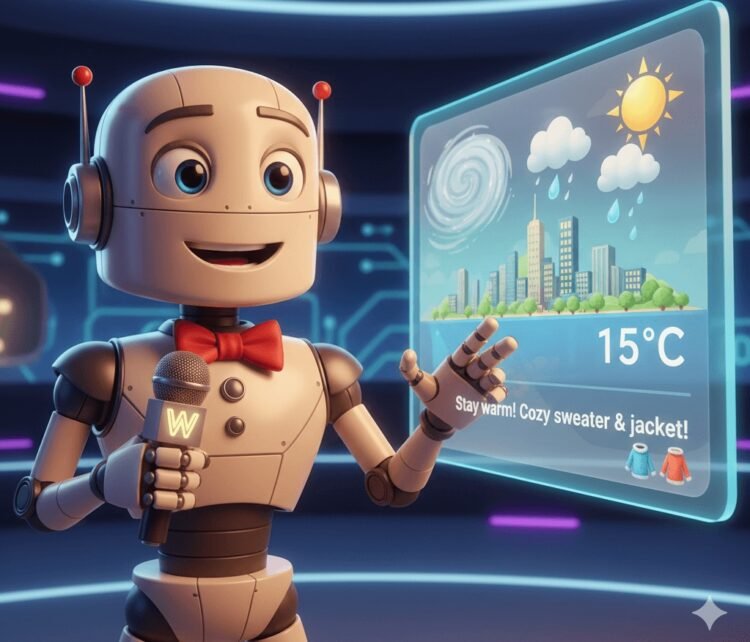

This project demonstrates a complete Extract, Transform, Load (ETL) workflow within Databricks. It integrates the OpenWeatherMap API for data retrieval and the OpenAI GPT-4o-mini model to provide personalized, weather-based dressing suggestions.

Let’s learn more about it.

The Project

The project implements a full data pipeline within Databricks, following these steps.

- Extract: Fetches current weather data for New York City via the OpenWeatherMap API [1].

- Transform: Converts UTC timestamps to New York local time and uses OpenAI’s [2] GPT-4o-mini to generate personalized dressing suggestions based on the temperature.

- Load: Persists the data into the Databricks Unity Catalog as both raw JSON files and a structured Delta table (Silver Layer).

- Orchestration: The notebook with this ETL code is added to a job and scheduled to run every hour in Databricks.

- Analytics: The silver layer feeds a Databricks Dashboard that displays relevant weather information alongside the LLM’s suggestions.

Here is the architecture.

Great. Now that we understand what we need to do, let’s move on to the how part of this tutorial.

Note: if you still don’t have a Databricks account, go to the Databricks Free Edition page [3], click Sign up for Free Edition, and follow the prompts on screen to get your free access.

Extract: Integrating API And Databricks

As I usually say, a data project needs data to begin, right? So our task here is integrating the OpenWeatherMap API to ingest data directly into a PySpark notebook within Databricks. This task may look complicated at first, but trust me, it isn’t.

On the Databricks initial page, create a new notebook using the +New button, then select Notebook.

For the Extract part, we will need:

1. The API Key from the OpenWeatherMap API.

To get that, go to the API’s signup page and complete the free registration process. Once logged in to the dashboard, click on the API Key tab, where you will be able to see it.

2. Import packages

# Imports
import requests
import json

Next, we’re going to create a Python class to modularize our code and make it production-ready as well.

- This class receives the API_KEY we just created, as well as the city and country for the weather fetch.

- Returns the response in JSON format.

# Creating a class to modularize our code
class Weather:

    # Define the constructor
    def __init__(self, API_KEY):
        self.API_KEY = API_KEY

    # Define a method to retrieve weather data
    def get_weather(self, city, country, units='imperial'):
        self.city = city
        self.country = country
        self.units = units

        # Make a GET request to an API endpoint that returns JSON data
        url = f"https://api.openweathermap.org/data/2.5/weather?q={city},{country}&APPID={self.API_KEY}&units={units}"
        response = requests.get(url)

        # Raise an error on a failed request; otherwise parse the JSON body and return it
        if response.status_code != 200:
            raise Exception(f"Error: {response.status_code} - {response.text}")
        return response.json()

Good. Now we can run this class. Notice we use dbutils.widgets.get(). This command reads the Parameters of the scheduled job, which we will see later in this article. It’s a best practice to keep the secrets safe.

# Get the API OpenWeatherMap key
API_KEY = dbutils.widgets.get('API_KEY')

# Instantiate the class
w = Weather(API_KEY=API_KEY)

# Get the weather data
nyc = w.get_weather(city='New York', country='US')
nyc

Here is the response.

{'coord': {'lon': -74.006, 'lat': 40.7143},
 'weather': [{'id': 804,
   'main': 'Clouds',
   'description': 'overcast clouds',
   'icon': '04d'}],
 'base': 'stations',
 'main': {'temp': 54.14,
  'feels_like': 53.44,
  'temp_min': 51.76,
  'temp_max': 56.26,
  'pressure': 992,
  'humidity': 89,
  'sea_level': 992,
  'grnd_level': 993},
 'visibility': 10000,
 'wind': {'speed': 21.85, 'deg': 270, 'gust': 37.98},
 'clouds': {'all': 100},
 'dt': 1766161441,
 'sys': {'type': 1,
  'id': 4610,
  'country': 'US',
  'sunrise': 1766146541,
  'sunset': 1766179850},
 'timezone': -18000,
 'id': 5128581,
 'name': 'New York',
 'cod': 200}

With that response in hand, we can move on to the Transformation part of our project, where we will clean and transform the data.
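One detail about the request is worth a quick sketch before moving on. The get_weather method builds the URL by hand, and the city name "New York" contains a space. The snippet below is only an illustration, not the code from the repository: it uses the standard library to encode the query parameters. The function name build_weather_url and the BASE_URL constant are my own, and the lowercase appid spelling follows the OpenWeatherMap documentation.

```python
from urllib.parse import urlencode

# Endpoint for current weather data (same endpoint the class calls)
BASE_URL = "https://api.openweathermap.org/data/2.5/weather"


def build_weather_url(api_key, city, country, units="imperial"):
    """Build the request URL with safely encoded query parameters.

    build_weather_url is a hypothetical helper used only for illustration.
    """
    params = {
        "q": f"{city},{country}",  # e.g. "New York,US"
        "appid": api_key,
        "units": units,
    }
    # urlencode escapes spaces, commas, and other reserved characters
    return f"{BASE_URL}?{urlencode(params)}"


# Placeholder key; a real key would come from the job parameters
print(build_weather_url("YOUR_API_KEY", "New York", "US"))
```

If you prefer to stay with requests, passing the same dictionary as requests.get(BASE_URL, params=params, timeout=10) should give an equivalent result, with the added benefit of a timeout so a scheduled run does not hang on a slow response.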

Transform: Formatting The Data

In this section, we will look at the clean and transform tasks performed over the raw data. We will start by selecting the pieces of information needed for our dashboard. This is simply getting data from a dictionary (or a JSON).

# Getting information
id = nyc['id']
timestamp = nyc['dt']
weather = nyc['weather'][0]['main']
temp = nyc['main']['temp']
tmin = nyc['main']['temp_min']
tmax = nyc['main']['temp_max']
country = nyc['sys']['country']
city = nyc['name']
sunrise = nyc['sys']['sunrise']
sunset = nyc['sys']['sunset']

Next, let’s transform the timestamps to the New York time zone, since they come in Greenwich time (UTC).

# Transform sunrise and sunset to datetime in NYC timezone
from datetime import datetime, timezone
from zoneinfo import ZoneInfo
import time

# Timestamp, Sunrise and Sunset to NYC timezone
target_timezone = ZoneInfo("America/New_York")
dt_utc = datetime.fromtimestamp(sunrise, tz=timezone.utc)
sunrise_nyc = str(dt_utc.astimezone(target_timezone).time()) # get only the sunrise time
dt_utc = datetime.fromtimestamp(sunset, tz=timezone.utc)
sunset_nyc = str(dt_utc.astimezone(target_timezone).time()) # get only the sunset time
dt_utc = datetime.fromtimestamp(timestamp, tz=timezone.utc)
time_nyc = str(dt_utc.astimezone(target_timezone))

Finally, we format it as a Spark dataframe.

# Create a dataframe from the variables
df = spark.createDataFrame([[id, time_nyc, weather, temp, tmin, tmax, country, city, sunrise_nyc, sunset_nyc]], schema=['id', 'timestamp','weather', 'temp', 'tmin', 'tmax', 'country', 'city', 'sunrise', 'sunset'])
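The three timestamp conversions above follow the same pattern, so they could be expressed once in a small helper. This is a minimal sketch, not the code from the repository: the name to_local is my own, and the epoch values are the ones from the sample response shown earlier.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

NYC = ZoneInfo("America/New_York")


def to_local(epoch_seconds, tz=NYC):
    """Convert a Unix timestamp (seconds since epoch, UTC) to an aware datetime in tz.

    to_local is a hypothetical helper used only for illustration.
    """
    return datetime.fromtimestamp(epoch_seconds, tz=timezone.utc).astimezone(tz)


# Values taken from the sample API response
sunrise_nyc = str(to_local(1766146541).time())  # time of day only
sunset_nyc = str(to_local(1766179850).time())   # time of day only
time_nyc = str(to_local(1766161441))            # full date, time, and UTC offset

print(sunrise_nyc, sunset_nyc, time_nyc)
```

Because the conversion goes through a named time zone rather than a fixed offset, daylight saving time is handled by the zone database instead of by the pipeline code.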

The final step in this section is adding the suggestion from an LLM. In this step, we are going to pick some of the data fetched from the API and pass it to the model, asking it to return a suggestion of how a person could dress to be prepared for the weather.

- You will need an OpenAI API Key.

- Pass the weather condition and the max and min temperatures (weather, tmax, tmin).

- Ask the LLM to return a suggestion about how to dress for the weather.

- Add the suggestion to the final dataframe.

%pip install openai --quiet

from openai import OpenAI
import pyspark.sql.functions as F
from pyspark.sql.functions import col

# Get OpenAI Key
OPENAI_API_KEY = dbutils.widgets.get('OPENAI_API_KEY')

client = OpenAI(
    # Pass the key explicitly, since it comes from the job parameters
    api_key=OPENAI_API_KEY
)

response = client.responses.create(
    model="gpt-4o-mini",
    instructions="You are a weatherman that gives suggestions about how to dress based on the weather. Answer in one sentence.",
    input=f"The weather is {weather}, with max temperature {tmax} and min temperature {tmin}. How should I dress?"
)

suggestion = response.output_text

# Add the suggestion to the df
df = df.withColumn('suggestion', F.lit(suggestion))
display(df)

Cool. We’re almost done with the ETL. Now it’s all about loading it. That’s the next section.
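Since this notebook will later run unattended, it may be worth guarding the LLM call so that a failed request does not stop the weather data from being saved. The sketch below is one possible approach and not part of the original project: suggestion_or_default is a hypothetical helper, and the fake_llm function only simulates an outage for the demo.

```python
def suggestion_or_default(generate, default="No suggestion available."):
    """Run generate() and return its text, or a default if it fails or is empty.

    generate is any zero-argument function that returns the suggestion text.
    """
    try:
        text = generate()
    except Exception as err:
        # Log the problem and keep the pipeline going
        print(f"Suggestion request failed: {err}")
        return default
    text = (text or "").strip()
    return text if text else default


# Demo with a stand-in for the model call (no network access needed)
def fake_llm():
    raise TimeoutError("simulated outage")


print(suggestion_or_default(fake_llm))
print(suggestion_or_default(lambda: "  Wear a warm coat and a scarf.  "))
```

In the notebook, the call that produces response.output_text would be wrapped in a zero-argument function and passed as generate, so the rest of the code keeps receiving a plain string.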

Load: Saving the Data and Creating the Silver Layer

The last piece of the ETL is loading the data. We will load it in two different ways.

- Persisting the raw files in a Unity Catalog Volume.

- Saving the transformed dataframe directly into the silver layer, which is a Delta Table ready for the Dashboard consumption.

Let’s create a catalog that will hold all the weather data that we get from the API.

-- Creating a Catalog
CREATE CATALOG IF NOT EXISTS pipeline_weather
COMMENT 'This is the catalog for the weather pipeline';

Next, we create a schema for the Lakehouse. This one will store the volume with the raw JSON files fetched.

-- Creating a Schema
CREATE SCHEMA IF NOT EXISTS pipeline_weather.lakehouse
COMMENT 'This is the schema for the weather pipeline';

Now, we create the volume for the raw files.

-- Let's create a volume
CREATE VOLUME IF NOT EXISTS pipeline_weather.lakehouse.raw_data
COMMENT 'This is the raw data volume for the weather pipeline';

We also create another schema to hold the Silver Layer Delta Table.

-- Creating Schema to hold transformed data
CREATE SCHEMA IF NOT EXISTS pipeline_weather.silver
COMMENT 'This is the schema for the weather pipeline';

Once we have everything set up, this is how our Catalog looks.

Now, let’s save the raw JSON response into our Raw Volume. To keep everything organized and prevent overwriting, we will attach a unique timestamp to each filename.

By appending these files to the volume rather than just overwriting them, we are creating a reliable “audit trail”. This acts as a safety net, meaning that if a downstream process fails or we run into data loss later, we can always go back to the source and re-process the original data whenever we need it.
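To make the audit trail idea concrete, here is a minimal sketch of writing the untouched API payload (the nyc dictionary from the Extract step) to its own timestamped file with plain Python. It is an alternative illustration, not the notebook code that follows, which writes the Spark dataframe instead. The helper name save_raw_payload is hypothetical, and I am assuming the volume is reachable through its /Volumes path with regular file operations.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def save_raw_payload(payload, folder):
    """Write one API payload to a new timestamped JSON file and return its path.

    save_raw_payload is a hypothetical helper used only for illustration.
    """
    # UTC timestamp, so file names sort chronologically regardless of time zone
    stamp = datetime.now(timezone.utc).strftime("%Y-%m-%d_%H-%M-%S")
    path = Path(folder) / f"weather_{stamp}.json"
    with open(path, "w", encoding="utf-8") as f:
        json.dump(payload, f)
    return path
```

In the notebook, this could be called as save_raw_payload(nyc, "/Volumes/pipeline_weather/lakehouse/raw_data"). Each run produces a new file, so nothing already stored is overwritten.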

# Get timestamp
stamp = datetime.now().strftime('%Y-%m-%d_%H-%M-%S')

# Path to save
json_path = f'/Volumes/pipeline_weather/lakehouse/raw_data/weather_{stamp}.json'

# Save the data into a json file
df.write.mode('append').json(json_path)

While we keep the raw JSON as our “source of truth,” saving the cleaned data into a Delta Table in the Silver layer is where the real magic happens. By using .mode("append") and the Delta format, we ensure our data is structured, schema-enforced, and ready for high-speed analytics or BI tools. This layer transforms messy API responses into a reliable, queryable table that grows with every pipeline run.

# Save the transformed data into a table (schema)
(
    df
    .write
    .format('delta')
    .mode("append")
    .saveAsTable('pipeline_weather.silver.weather')
)

Beautiful! With this all set, let’s check how our table looks now.

Let’s start automating this pipeline now.

Orchestration: Scheduling the Notebook to Run Automatically

Moving on with the project, it’s time to make this pipeline run on its own, with minimal supervision. For that, Databricks has the Jobs & Pipelines tab, where we can easily schedule jobs to run.

- Click the Jobs & Pipelines tab on the left panel.

- Find the Create button and select Job.

- Click on Notebook to add it to the Job.

- Configure it as shown below.

- Add the API Keys to the Parameters.

- Click Create task.

- Click Run Now to test if it works.

Once you click the Run Now button, it should start running the notebook and display the Succeeded message.
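The Parameters added to the task are what dbutils.widgets.get() reads inside the notebook. If a parameter is missing, the run fails somewhere in the middle of the code, so a small helper with a clear error message can make troubleshooting easier. This is a minimal sketch under my own assumptions: get_param is a hypothetical name, and the fallback to an environment variable is only there to make the notebook usable outside a job run.

```python
import os


def get_param(name, widget_getter=None):
    """Return a parameter from the job widgets, else from an environment variable.

    widget_getter is expected to behave like dbutils.widgets.get.
    """
    value = None
    if widget_getter is not None:
        try:
            value = widget_getter(name)
        except Exception:
            # The widget is not defined, for example outside a job run
            value = None
    if not value:
        value = os.environ.get(name)
    if not value:
        raise ValueError(f"Missing required parameter: {name}")
    return value
```

Inside Databricks, the call would look like get_param('API_KEY', dbutils.widgets.get), and the same pattern applies to OPENAI_API_KEY.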

If the job is working fine, it’s time to schedule it to run automatically.

- Click on Add trigger on the right side of the screen, right under the section Schedules & Triggers.

- Trigger type = Scheduled.

- Schedule type: select Advanced.

- Select Every 1 hour from the drop-downs.

- Save it.

Excellent. Our Pipeline is on auto-mode now! Every hour, the system will hit the OpenWeatherMap API, get fresh weather information for NYC, and save it to our Silver Layer Table.
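For readers who prefer configuration as code, the same job could be described as a settings document, similar to what the Databricks Jobs API accepts. The sketch below only builds and prints that document and does not call any API. The job name, task key, and notebook path are placeholders I made up, and the key values are dummies. The schedule uses a Quartz cron expression that fires at minute zero of every hour.

```python
import json

# Hypothetical job settings mirroring the UI steps above
job_settings = {
    "name": "weather_pipeline",  # placeholder job name
    "tasks": [
        {
            "task_key": "run_weather_etl",  # placeholder task key
            "notebook_task": {
                # Placeholder path: point this at your own notebook
                "notebook_path": "/Workspace/Users/you@example.com/weather_etl",
                # These names match the dbutils.widgets.get() calls in the notebook
                "base_parameters": {
                    "API_KEY": "<openweathermap-key>",
                    "OPENAI_API_KEY": "<openai-key>",
                },
            },
        }
    ],
    "schedule": {
        # Quartz fields: second minute hour day-of-month month day-of-week
        "quartz_cron_expression": "0 0 * * * ?",
        "timezone_id": "America/New_York",
        "pause_status": "UNPAUSED",
    },
}

print(json.dumps(job_settings, indent=2))
```

Keeping the settings in a file like this makes the schedule easy to review and reproduce, although the UI steps above are enough for this project.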

Analytics: Building a Dashboard for Data-Driven Decisions

The last piece of this puzzle is creating the Analytics deliverable, which will show the weather information and provide the user with actionable information about how to dress for the weather outside.

- Click on the Dashboards tab on the left side panel.

- Click on the Create dashboard button.

- It will open a blank canvas for us to work on.

Now, dashboards work based on data fetched from SQL queries. Therefore, before we start adding text and graphics to the canvas, we first need to create some metrics that will be the variables feeding the dashboard cards and graphics.

So, click on the +Create from SQL button to start a metric and give it a name. For example, for the Location metric, which retrieves the latest fetched city name, I use the query that follows.

-- Get the latest city name fetched
SELECT city
FROM pipeline_weather.silver.weather
ORDER BY timestamp DESC
LIMIT 1

And we must create one SQL query for each metric. You can see all of them in the GitHub repository [4].
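Several of these metrics follow the same "latest value of one column" shape, so the query text could be generated instead of typed by hand. The sketch below is only an illustration and the actual queries in the repository may differ: latest_value_query is a hypothetical helper, and the allowed column names are the ones used in the dataframe built during the Transform step.

```python
TABLE = "pipeline_weather.silver.weather"

# Columns of the silver table, as defined in the Transform step
COLUMNS = {
    "id", "timestamp", "weather", "temp", "tmin", "tmax",
    "country", "city", "sunrise", "sunset", "suggestion",
}


def latest_value_query(column, table=TABLE):
    """Return a SQL query that fetches the most recent value of one column."""
    if column not in COLUMNS:
        # Only known column names are interpolated into the SQL text
        raise ValueError(f"Unknown column: {column}")
    return (
        f"SELECT {column}\n"
        f"FROM {table}\n"
        "ORDER BY timestamp DESC\n"
        "LIMIT 1"
    )


# Print one query per metric, ready to paste into the dashboard
for metric in ("city", "temp", "suggestion"):
    print(latest_value_query(metric))
    print()
```

Each printed query can be pasted into a new +Create from SQL dataset and named after the metric it feeds.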

Next, we click on the Dashboard tab and start dragging and dropping elements onto the canvas.

When you click on the Text element, it lets you insert a box into the canvas and edit the text. When you click on the graphic element, it inserts a placeholder for a graphic and opens the right-side menu for selecting the variables and configuration.

Okay. After all the elements are added, the dashboard will look like this.

So nice! And that concludes our project.

Before You Go

You can easily replicate this project in about an hour, depending on your experience with the Databricks ecosystem. While it’s a quick build, it packs a lot in terms of the core engineering skills you will get to exercise:

- Architectural Design: You’ll learn how to structure a modern Lakehouse environment from the ground up.

- Seamless Data Integration: You’ll bridge the gap between external web APIs and the Databricks platform for real-time data ingestion.

- Clean, Modular Code: We move beyond simple scripts by using Python classes and functions to keep the codebase organized and maintainable.

- Automation & Orchestration: You’ll get hands-on experience scheduling jobs to ensure your project runs reliably on autopilot.

- Delivering Real Value: The goal isn’t just to move data; it’s to provide value. By transforming raw weather metrics into actionable dressing suggestions via AI, we turn “cold data” into a helpful service for the end user.

If you liked this content, find my contacts and more about me on my website.

GitHub Repository

Here is the repository for this project.

https://github.com/gurezende/Databricks-Weather-Pipeline

References

[1. OpenWeatherMap API](https://openweathermap.org/)

[2. OpenAI Platform](https://platform.openai.com/)

[3. Databricks Free Edition](https://www.databricks.com/learn/free-edition)

[4. GitHub Repository](https://github.com/gurezende/Databricks-Weather-Pipeline)