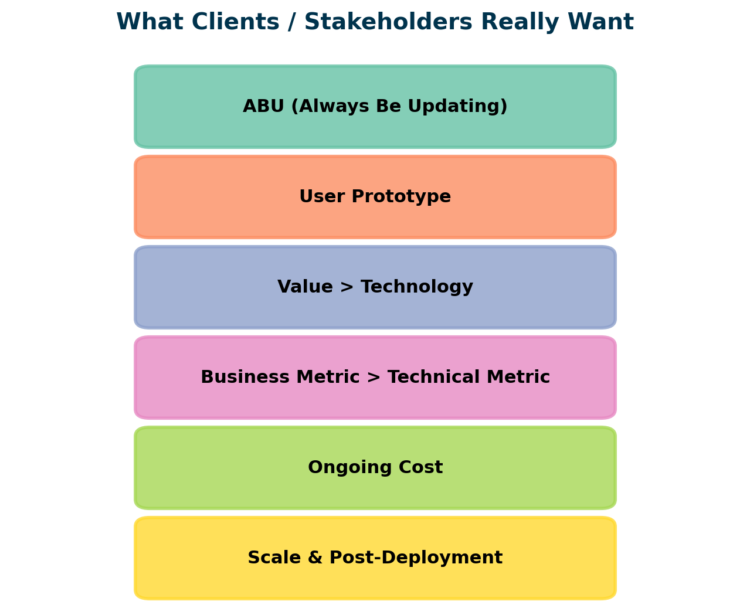

Clients and stakeholders don’t want surprises.

What they expect is clarity, consistent communication, and transparency. They want results, but they also want you to stay grounded and aligned with the project’s goals as a developer or product manager. Just as important, they want full visibility into the process.

In this blog post, I’ll share practical principles and tips to help keep AI projects on track. These insights come from over 15 years of managing and deploying AI initiatives, and this post is a follow-up to my blog post “Tips for setting expectations in AI projects”.

When working with AI projects, uncertainty isn’t just a side effect; it can make or break the entire initiative.

Throughout the sections of this post, I’ll include practical items you can put into action immediately.

Let’s dive in!

ABU (Always Be Updating)

In sales, there’s a famous rule called ABC (Always Be Closing). The idea is simple: every interaction should move the client closer to a deal. In AI projects, we have another motto: ABU (Always Be Updating).

This rule means exactly what it says: never leave stakeholders in the dark. Even when there’s little or no progress, you need to communicate it quickly. Silence creates uncertainty, and uncertainty kills trust.

A straightforward way to apply ABU is with a short weekly email to every stakeholder. Keep it consistent, concise, and focused on four key points:

- Breakthroughs in performance or key milestones achieved during the week;

- Issues with deliverables, or changes to last week’s plan that affect stakeholders’ expectations;

- Updates on the team or resources involved;

- Current progress on the agreed success metrics.

This rhythm keeps everyone aligned without overwhelming them with noise. The key insight is that people don’t actually hate bad news; they just hate bad surprises. If you stick with ABU and manage expectations week by week, you build credibility and protect the project when challenges inevitably arise.

Put the Product in Front of the Users

In AI projects, it’s easy to fall into the trap of building for yourself instead of for the people who will actually use the product or solution you’re building.

Too often, I’ve seen teams get excited about features that matter to them but mean little to the end user.

So, don’t assume anything. Put the product in front of users as early and as often as possible. Real feedback is irreplaceable.

A practical way to do this is through lightweight prototypes or limited pilots. Even when the product is far from finished, showing it to users helps you test assumptions and prioritize features. When you start the project, commit to a prototype date as early as possible.

Don’t Fall Into the Technology Trap

Engineers love technology; it’s part of the passion for the role. But in AI projects, technology is only an enabler, never the end goal. Just because something is technically possible (or looks impressive in a demo) doesn’t mean it solves the real problems of your customers or stakeholders.

So the rule is very simple, yet difficult to follow: don’t start with the tech, start with the need. Every function or piece of code should trace back to a clear user problem.

A practical way to apply this principle is to validate problems before solutions. Spend time with customers, map their pain points, and ask: “If this technology worked perfectly, would it actually matter to them?”

Cool features won’t save a product that doesn’t solve a problem. But when you anchor technology in real needs, adoption follows naturally.

Engineers often focus on optimizing technology or building cool features. But the best engineers (10x engineers) combine that technical strength with the rare ability to empathize with stakeholders.

Business Metrics Over Technical Metrics

It’s easy to get lost in technical metrics: accuracy, F1 score, ROC-AUC, precision, recall. Clients and stakeholders usually don’t care if your model is 0.5% more accurate; they care if it reduces churn, increases revenue, or saves time and costs. The worst part is that clients and stakeholders often believe technical metrics are what matter, when in a business context they rarely are. And it’s on you to convince them otherwise.

If your churn prediction model hits 92% accuracy, but the marketing team can’t design effective campaigns from its outputs, the metric means nothing. On the other hand, if a “less accurate” model helps reduce customer churn by 10% because it’s explainable, that’s a success.

A practical way to apply this is to define business metrics at the start of the project. Ask:

- What’s the financial or operational goal? (Example: reduce call center handling time by 20%.)

- Which technical metrics best correlate with that outcome?

- How will we communicate results to non-technical stakeholders?

Sometimes the right metric isn’t accuracy at all. For example, in fraud detection, catching 70% of fraud cases with minimal false positives might be more valuable than a model that squeezes out 90% but blocks thousands of legitimate transactions.
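To make the fraud example concrete, here is a minimal sketch that translates recall and false-positive rate into a single business number. Every figure in it (transaction volumes, average fraud loss, cost of wrongly blocking a legitimate transaction, and the false-positive rates of both models) is a made-up assumption for illustration, and `FraudModel` and `net_value` are hypothetical names, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class FraudModel:
    name: str
    recall: float               # share of fraud cases the model catches
    false_positive_rate: float  # share of legitimate transactions it blocks


def net_value(model, n_fraud, n_legit, avg_fraud_loss, cost_per_false_block):
    """Fraud losses avoided minus the cost of blocking legitimate transactions."""
    saved = model.recall * n_fraud * avg_fraud_loss
    friction = model.false_positive_rate * n_legit * cost_per_false_block
    return saved - friction


# Illustrative assumptions only; replace with figures agreed with the business.
N_FRAUD = 1_000               # fraudulent transactions per month
N_LEGIT = 1_000_000           # legitimate transactions per month
AVG_FRAUD_LOSS = 200.0        # average loss per missed fraud case
COST_PER_FALSE_BLOCK = 15.0   # support cost and lost goodwill per wrongly blocked transaction

models = [
    FraudModel("catches 70%", recall=0.70, false_positive_rate=0.001),
    FraudModel("catches 90%", recall=0.90, false_positive_rate=0.02),
]

results = {
    m.name: net_value(m, N_FRAUD, N_LEGIT, AVG_FRAUD_LOSS, COST_PER_FALSE_BLOCK)
    for m in models
}

for name, value in results.items():
    print(f"{name}: net monthly value = {value:,.0f}")
```

Under these assumed numbers, the model with lower recall comes out ahead because the cost of blocked legitimate transactions outweighs the extra fraud caught. With different costs the ranking could flip, which is exactly why those costs should be agreed with stakeholders up front.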

Ownership and Handover

Who owns the solution once it goes live? If it succeeds, will the client have reliable access to it at all times? What happens when your team is no longer working on the project?

These questions often get put off, but they define the long-term impact of your work. You need to plan for handover from day one. That means documenting processes, transferring knowledge, and ensuring the client’s team can maintain and operate the model without your constant involvement.

Delivering an ML model is only half the job; post-deployment is an important phase that often gets lost in translation between business and tech.

Cost and Budget Visibility

How much will the solution cost to run? Are you using cloud infrastructure, LLMs, or other techniques that carry variable expenses the customer must understand?

From the start, you need to give stakeholders full visibility into cost drivers. This means breaking down infrastructure costs, licensing fees, and, especially with GenAI, usage expenses like token consumption.

A practical way to manage this is to set up clear cost-tracking dashboards or alerts and review them regularly with the client. For LLMs, estimate expected token usage under different scenarios (average query vs. heavy use) so there are no surprises later.
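A scenario estimate like this can be a few lines of arithmetic. The sketch below is one possible way to do it; the per-1,000-token prices, query volumes, and token counts are placeholder assumptions rather than any provider’s real pricing, and `monthly_llm_cost` is a hypothetical helper.

```python
def monthly_llm_cost(queries_per_day, input_tokens, output_tokens,
                     price_in_per_1k, price_out_per_1k, days=30):
    """Estimated monthly spend for one usage scenario."""
    cost_per_query = (
        (input_tokens / 1000) * price_in_per_1k
        + (output_tokens / 1000) * price_out_per_1k
    )
    return queries_per_day * days * cost_per_query


# Placeholder prices per 1,000 tokens; check your provider's current price list.
PRICE_IN = 0.001
PRICE_OUT = 0.002

# Hypothetical usage scenarios to walk through with the client.
scenarios = {
    "average use": {"queries_per_day": 1_000, "input_tokens": 500, "output_tokens": 250},
    "heavy use": {"queries_per_day": 10_000, "input_tokens": 2_000, "output_tokens": 1_000},
}

estimates = {
    name: monthly_llm_cost(price_in_per_1k=PRICE_IN, price_out_per_1k=PRICE_OUT, **usage)
    for name, usage in scenarios.items()
}

for name, cost in estimates.items():
    print(f"{name}: about {cost:,.2f} per month")
```

Showing the gap between the average and heavy scenarios early gives the client a realistic range to budget against instead of a single optimistic number.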

Clients can accept costs, but they won’t accept hidden costs or costs that balloon unexpectedly as usage grows. Transparency on budget allows clients to plan realistically for scaling the solution.

Scale

Speaking of scale...

Scale is a different game altogether. It’s the stage where an AI solution can deliver the most business value, but also where most projects fail. Building a model in a notebook is one thing, but deploying it to handle real-world traffic, data, and user demands is another.

So be clear about how you will scale your solution. This is where data engineering and MLOps come in. Focus on the topics related to ensuring the entire pipeline (data ingestion, model training, deployment, monitoring) can grow with demand while staying reliable and cost-efficient.

Some important areas to consider when talking about scale are:

- Software engineering practices: Version control, CI/CD pipelines, containerization, and automated testing to ensure your solution can evolve without breaking.

- MLOps capabilities: Automated retraining, monitoring for data drift and concept drift, and alerting systems that keep the model accurate over time.

- Infrastructure choices: Cloud vs. on-premises, horizontal scaling, cost controls, and whether you need specialized hardware.
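As an illustration of what a data drift check can look like, here is a minimal sketch using the Population Stability Index (PSI), one common technique among several and not necessarily the one your stack should use. The data is synthetic, the `psi` function is a hypothetical helper, and the 0.1 and 0.25 thresholds are a widely used rule of thumb rather than a standard.

```python
import math
import random


def psi(reference, current, n_bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if the reference is constant

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), eps) for c in counts]

    ref, cur = bin_shares(reference), bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_status(score, warn=0.1, alert=0.25):
    """Map a PSI score to a label using rule-of-thumb thresholds (assumed)."""
    if score >= alert:
        return "alert"
    return "warn" if score >= warn else "ok"


# Synthetic example: training-time data vs. two production samples.
random.seed(0)
training = [random.gauss(0, 1) for _ in range(5000)]
stable = [random.gauss(0, 1) for _ in range(5000)]
shifted = [random.gauss(1, 1) for _ in range(5000)]

for label, sample in (("stable", stable), ("shifted", shifted)):
    score = psi(training, sample)
    print(f"{label}: PSI = {score:.3f} -> {drift_status(score)}")
```

In a real pipeline a check like this would run on a schedule per feature and feed the alerting system, with thresholds tuned to how sensitive the model is to each input.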

An AI solution or project that performs well in isolation is not enough. Real value comes when the solution can scale to thousands of users, adapt to new data, and continue delivering business impact long after the initial deployment.

Here are the practical tips we’ve seen in this post:

- Send a short weekly email to all stakeholders with breakthroughs, issues, team updates, and progress on metrics.

- Commit to an early prototype or pilot to test assumptions with end users.

- Validate problems first: don’t start with tech, start with user needs. User interviews are a great way to do this (if possible, get away from your desk and join the users in whatever job they’re doing for a day).

- Define business metrics upfront and tie technical progress back to them.

- Plan for handover from day one: document, train the client team, and ensure ownership is clear.

- Set up a dashboard or alerts to track costs (especially for cloud and token-based GenAI solutions).

- Build with scalability in mind: CI/CD, monitoring for drift, modular pipelines, and infrastructure that can grow.

Do you have any other tips worth sharing? Write them down in the comments or feel free to contact me via LinkedIn!