Feature Scaling in Practice: What Works and What Doesn’t

Image by Editor | ChatGPT

Introduction

In machine learning, the difference between a high-performing model and one that struggles often comes down to small details. One of the most overlooked steps in this process is feature scaling. While it may seem minor, how you scale data can affect model accuracy, training speed, and stability. However, not all scaling methods are equally effective in every scenario. Some techniques improve performance and keep features on comparable scales, while others may unintentionally distort the underlying relationships in the data.

This article explores what works in practice when it comes to feature scaling, and what doesn’t.

What’s Feature Scaling?

Feature scaling is a data preprocessing technique used in machine learning to normalize or standardize the range of independent variables (features).

Because features in a dataset can have very different units and scales (e.g., age in years vs. income in dollars), models that rely on distance or gradient calculations can be biased toward features with larger numeric ranges. Feature scaling puts features on comparable scales so that each one can contribute proportionally to the model.
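To make the bias concrete, here is a small sketch in plain Python. The three people and their values are invented for illustration. With raw units, Euclidean distance is driven almost entirely by income, so a 35-year age gap barely registers; after min-max scaling each column to [0, 1] (using just these three points), age counts again and the nearest neighbor changes.

```python
import math

# Hypothetical people: (age in years, income in dollars)
people = {
    "alice": (25, 50_000),
    "bob": (60, 50_500),
    "carol": (26, 51_000),
}


def min_max_columns(rows):
    """Rescale every column of `rows` to [0, 1] using that column's min and max."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    spans = [max(c) - min(c) for c in cols]
    return [tuple((v - lo) / s for v, lo, s in zip(row, lows, spans)) for row in rows]


def nearest(name, points):
    """Return the name of the point closest to `name` by Euclidean distance."""
    others = [k for k in points if k != name]
    return min(others, key=lambda k: math.dist(points[name], points[k]))


scaled = dict(zip(people, min_max_columns(list(people.values()))))

print(nearest("alice", people))  # bob   (raw units: income dominates)
print(nearest("alice", scaled))  # carol (scaled: age matters too)
```

Whether Bob or Carol should count as more similar depends on the problem; the point is only that, without scaling, the answer is decided by whichever feature happens to have the largest numbers.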

Why Feature Scaling Matters

- Improves Model Performance: Algorithms trained with gradient descent converge faster when features are normalized, since the optimizer doesn’t need to “zig-zag” across unevenly scaled directions

- Interpretability: Standardized features (mean 0, variance 1) make it easier to compare the relative importance of coefficients in linear models

- Better Accuracy: Distance- and kernel-based models such as k-nearest neighbors (KNN), k-means, and support vector machines (SVMs) perform more reliably with scaled features

- Faster Convergence: Neural networks and other gradient-based optimizers reach good solutions more quickly when features are scaled
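The convergence claim can be checked with a minimal sketch: plain batch gradient descent on a no-intercept least-squares problem, run once on raw features and once on standardized ones. The dataset, the 1,000-iteration budget, the stopping rule (loss below 1e-10 of its starting value), and the step-size rule (1 divided by the trace of X^T X / n, which is safe but conservative) are all assumptions made for illustration, not a recommended training setup.

```python
import statistics


def predict(w, row):
    return sum(wj * xj for wj, xj in zip(w, row))


def half_mse(w, X, y):
    """Half mean squared error of a linear model without an intercept."""
    return sum((predict(w, row) - t) ** 2 for row, t in zip(X, y)) / (2 * len(X))


def gd_iterations(X, y, max_iter=1000, tol=1e-10):
    """Run batch gradient descent from w = 0 and return the iteration at which the
    loss first falls to tol * (initial loss), or max_iter if it never does."""
    n, d = len(X), len(X[0])
    # Step size 1 / trace(X^T X / n): the trace bounds the largest curvature,
    # so the loss never diverges, but the step shrinks as raw magnitudes grow.
    lr = n / sum(x * x for row in X for x in row)
    w = [0.0] * d
    target = tol * half_mse(w, X, y)
    for it in range(max_iter):
        if half_mse(w, X, y) <= target:
            return it
        residuals = [predict(w, row) - t for row, t in zip(X, y)]
        grad = [sum(r * row[j] for r, row in zip(residuals, X)) / n for j in range(d)]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return max_iter


def standardize(col):
    mu, sigma = statistics.mean(col), statistics.pstdev(col)
    return [(v - mu) / sigma for v in col]


# Hypothetical data: age (years), income (dollars), and a target that is an exact
# linear function of both, so the lowest achievable loss is zero in either case.
ages = [25, 32, 47, 51, 62]
incomes = [40_000, 52_000, 61_000, 83_000, 70_000]
y = [3 * a + 0.0005 * inc for a, inc in zip(ages, incomes)]

X_raw = [[a, inc] for a, inc in zip(ages, incomes)]
X_std = [list(row) for row in zip(standardize(ages), standardize(incomes))]
y_centered = [t - statistics.mean(y) for t in y]  # centering replaces the intercept

raw_iters = gd_iterations(X_raw, y)
std_iters = gd_iterations(X_std, y_centered)
print(raw_iters, std_iters)
```

On this toy data the raw-feature run uses up the whole iteration budget without reaching the target, while the standardized run stops after a small fraction of it. The raw problem is badly conditioned: the income column's large values force a tiny step size, and progress along the age direction slows to a crawl.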

Common Feature Scaling Techniques

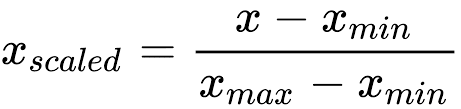

1. Normalization

Normalization (often called min-max scaling) is one of the simplest and most widely used feature scaling techniques. It rescales feature values to a fixed range, typically [0, 1], although it can be adjusted to any custom range [a, b].

Formula:

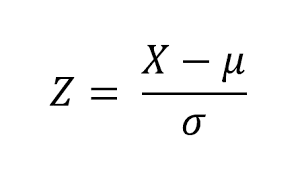
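Assuming the formula above is the usual min-max formula, the general version for a target range [a, b] is x' = a + (x - min(x)) * (b - a) / (max(x) - min(x)), which reduces to (x - min) / (max - min) for [0, 1]. A minimal pure-Python sketch follows; mapping a constant feature to `a` is an assumption of this sketch, since the formula itself would divide by zero in that case.

```python
def min_max_scale(values, a=0.0, b=1.0):
    """Rescale `values` linearly so that min(values) -> a and max(values) -> b."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Constant feature: the formula would divide by zero, so map everything to a.
        return [a for _ in values]
    return [a + (v - lo) * (b - a) / (hi - lo) for v in values]


print(min_max_scale([10, 20, 30]))         # [0.0, 0.5, 1.0]
print(min_max_scale([10, 20, 30], -1, 1))  # [-1.0, 0.0, 1.0]
```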

2. Standardization

Standardization is a widely used scaling technique that transforms features so that they have a mean of 0 and a standard deviation of 1. Unlike min-max scaling, it does not bound values within a fixed range; instead, it centers features and scales them to unit variance.

Formula:

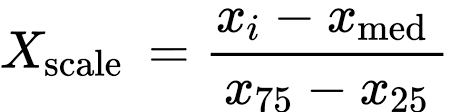
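A minimal sketch of z-score standardization, z = (x - mean) / std, using Python's `statistics` module. It uses the population standard deviation (`pstdev`, i.e. ddof=0), which is also what scikit-learn's `StandardScaler` uses; returning zeros for a constant feature is an assumption of this sketch.

```python
import statistics


def standardize(values):
    """Return z-scores: subtract the mean, divide by the population standard deviation."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        # Constant feature: every value equals the mean, so every z-score is 0.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


z = standardize([2, 4, 4, 4, 5, 5, 7, 9])  # mean 5, population std 2
print(z)  # [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```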

3. Robust Scaling

Robust scaling is a feature scaling technique that uses the median and the interquartile range (IQR) instead of the mean and standard deviation. This makes it robust to outliers, since extreme values have much less influence on the median and IQR than on the min, max, mean, and standard deviation that min-max scaling and standardization rely on.

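Formula:

$$x' = \frac{x - \operatorname{median}(x)}{\operatorname{IQR}(x)}, \qquad \operatorname{IQR}(x) = Q_3(x) - Q_1(x)$$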
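A minimal sketch of robust scaling, (x - median) / (Q3 - Q1), with the `statistics` module. Quartile conventions differ between tools; this sketch assumes `method="inclusive"` in `statistics.quantiles`, which corresponds to linear interpolation between data points (NumPy's default percentile method). The small dataset with one outlier is made up for illustration.

```python
import statistics


def robust_scale(values):
    """Center on the median and divide by the interquartile range (Q3 - Q1)."""
    med = statistics.median(values)
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    if iqr == 0:
        # Degenerate spread: avoid dividing by zero (a choice made for this sketch).
        return [0.0 for _ in values]
    return [(v - med) / iqr for v in values]


data = [1, 2, 3, 4, 100]  # four typical values plus one outlier
print(robust_scale(data))  # [-1.0, -0.5, 0.0, 0.5, 48.5]
```

The four typical values keep a useful spread (from -1.0 to 0.5) while the outlier simply lands far away. Min-max scaling the same list would instead squeeze the first four values into roughly [0, 0.03], because the outlier alone sets the maximum.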

4. Max-Abs Scaling

Max-abs scaling rescales each feature individually so that its maximum absolute value becomes 1.0, while preserving the sign of the data. As a result, all values are mapped into the range [-1, 1].

Formula:

$$x' = \frac{x}{\max |x|}$$
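A minimal sketch of max-abs scaling, x' = x / max|x|. Because it only divides and never shifts the data, zeros stay exactly zero, which is why this method is often suggested for sparse data. The all-zero fallback is an assumption of this sketch.

```python
def max_abs_scale(values):
    """Divide every value by the largest absolute value, keeping signs and zeros."""
    m = max(abs(v) for v in values)
    if m == 0:
        # All-zero feature: nothing to rescale.
        return [0.0 for _ in values]
    return [v / m for v in values]


print(max_abs_scale([-4, 2, 0, 8]))  # [-0.5, 0.25, 0.0, 1.0]
```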

Limitations of Feature Scaling

- Not Always Necessary: Tree-based models are largely insensitive to feature scaling, because their splits depend only on the ordering of values within each feature; applying normalization or standardization in these cases adds computation without improving results

- Loss of Interpretability: Scaled values are harder to interpret than raw feature values, which can complicate communication with non-technical stakeholders

- Method-Dependent: Different scaling techniques can yield different results depending on the algorithm and dataset, and an ill-suited choice can degrade performance
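The first point can be illustrated with a toy decision stump written by hand (a simplification, not any library's tree implementation). It tries thresholds between adjacent sorted values, so any strictly increasing transform, min-max scaling included, leaves the chosen split unchanged. The incomes and labels are invented.

```python
def best_split(xs, ys):
    """Toy decision stump: predict 1 above a threshold and 0 at or below it, try a
    threshold between each pair of adjacent sorted values, and return the indices
    on the left (<= threshold) side of the split with the fewest errors."""
    values = sorted(set(xs))
    best_left, best_err = None, None
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        err = sum((x > t) != bool(y) for x, y in zip(xs, ys))
        if best_err is None or err < best_err:
            best_left = {i for i, x in enumerate(xs) if x <= t}
            best_err = err
    return best_left


incomes = [18_000, 25_000, 40_000, 52_000, 75_000, 120_000]
labels = [0, 0, 0, 1, 1, 1]

lo, hi = min(incomes), max(incomes)
scaled = [(v - lo) / (hi - lo) for v in incomes]  # min-max scaling

print(sorted(best_split(incomes, labels)))  # [0, 1, 2]
print(sorted(best_split(scaled, labels)))   # [0, 1, 2]
```

Scaling would only matter for a tree if it changed the order of values within a feature, and min-max scaling, standardization, robust scaling, and max-abs scaling all preserve that order.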

Conclusion

Feature scaling is an important preprocessing step that can improve the performance of machine learning models, but its effectiveness depends on the algorithm and the data. Models that rely on distances or gradient descent typically require scaling, while tree-based methods usually don’t benefit from it. Always fit your scaler on the training data only (or within each fold for cross-validation and time-series splits) and then apply it to the validation and test sets to avoid data leakage. When applied carefully and tested across different approaches, feature scaling can lead to faster convergence, greater stability, and more reliable results.
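The fit-on-training-data rule can be sketched with a tiny scaler class. `SimpleStandardScaler` is hypothetical and made up for illustration; its `fit`/`transform` names only mirror a common convention. The key step is that the test data is transformed with statistics computed on the training data alone.

```python
import statistics


class SimpleStandardScaler:
    """Hypothetical minimal standardizer: learns mean/std in fit(), reuses them in transform()."""

    def fit(self, values):
        self.mean_ = statistics.mean(values)
        # Fall back to 1.0 for a constant feature so transform() never divides by zero.
        self.std_ = statistics.pstdev(values) or 1.0
        return self

    def transform(self, values):
        return [(v - self.mean_) / self.std_ for v in values]


train = [2, 4, 4, 4, 5, 5, 7, 9]
test = [11, 13]

scaler = SimpleStandardScaler().fit(train)  # statistics come from training data only
train_scaled = scaler.transform(train)
test_scaled = scaler.transform(test)        # reuses the training mean and std
print(scaler.mean_, scaler.std_, test_scaled)  # 5 2.0 [3.0, 4.0]

# The leaky alternative: fitting on train + test lets test values shift the statistics.
leaky = SimpleStandardScaler().fit(train + test)
print(leaky.mean_ != scaler.mean_)  # True
```

In k-fold cross-validation the same fit/transform pair would be repeated inside every fold, and for time series the scaler would be fit only on observations that precede the validation window.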