Models alone aren’t enough; having a full system stack and great, successful products is the key. Satya Nadella – [1].

…of specialized models: vision, language, segmentation, diffusion, and Mixture-of-Experts, often orchestrated together. Today the stack spans many types: LLM, LCM, LAM, MoE, VLM, SLM, MLM, and SAM [2], alongside the rise of agents. Precisely because the stack is this heterogeneous and fast-moving, teams need a practical framework that ensures rigorous evaluation (factuality, relevance, drift), built-in safety and compliance (PII, policy, red-teaming), and trustworthy operations (CI/CD, observability, rollback, cost controls). Without a framework, more models simply mean more risk and less reliability.

In this article, we’ll summarize the main issues and illustrate them with a real application example. It isn’t meant to be exhaustive; the aim is to illustrate the key difficulties.

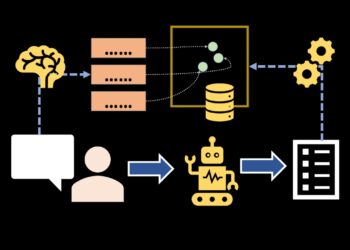

It’s well known that a picture is worth a thousand words (and, behind the shine of AI, perhaps even more). To illustrate and emphasize the core challenges of developing systems based on Large Language Models (LLMs), I created the diagram in Figure 1, which outlines a possible approach for managing information buried in long, tedious contracts. While many claim that AI will revolutionize every aspect of technology (and leave data scientists and engineers without jobs), the reality is that building robust, reproducible, and reliable applications requires a framework of continuous improvement, rigorous evaluation, and systematic validation. Mastering this rapidly evolving landscape is anything but trivial, and it would certainly take more than a single article to cover all the details.

This diagram (Figure 1) could be an ideal application for a contact center in the insurance sector. If you’ve ever tried to read your own insurance contract, you’ll know it’s often dozens of pages of dense legal language (the kind most of us tend to skip). The truth is, even many insurance employees don’t always know the fine details… but let’s keep that between us! 😉 After all, who could memorize the exact coverages, exclusions, and limits across hundreds of products and insurance types? That’s precisely the kind of complexity we aimed to address with this system.

The ultimate goal is to create a tool for contact center staff, adjusters, and fraud investigators that can instantly answer complex questions, such as policy limits or specific coverage conditions in particular situations. But while the application may seem simple on the surface, what I want to highlight here are the deeper challenges any developer faces when maintaining and improving these kinds of systems.

This goes far beyond building a cool demo. It requires continuous monitoring, validation, bias mitigation, and user-driven refinement. These tasks are often labeled ‘Business-As-Usual’ (BAU), but in practice they demand significant time and effort. The technical debt in this particular example falls under a category called LLMOps (or, more broadly, GenAIOps or AIOps), which, although based on principles similar to our old friend MLOps, includes the unique challenges of Large Language Models.

It’s a field that blends DevOps with governance, safety, and accountability, and yet… unless it’s branded as ‘innovation’, nobody pays much attention. Until it breaks. Then suddenly it becomes important (especially when regulators come knocking with Responsible AI (RAI) fines).

As promised, after my long complaint 😓, let me walk you through the actual steps behind the diagram.

It all starts, of course, with data (yeah… you still need data scientists or data engineers). Not clean, beautiful, labeled data… no. We’re talking raw, unstructured, unlabeled data: “things” like insurance dictionaries, multi-page policy contracts, and even transcripts of contact center conversations. And since we want to build something useful, we also need a ground truth or gold standard (a benchmark you trust for evaluation), or at least something close to it… If you’re an ethical professional who wants to build real value, you’ll need to find that truth in the simplest way, but always the right way.

Once you’ve got the raw data, the next step is to process it into something the LLM can actually digest. That means cleaning, chunking, standardizing, and, if you’re working with transcripts, removing all the filler and noise (step 4 in Figure 1).
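Here is a minimal sketch of this preprocessing step, with two assumptions: fillers are removed with a small hand-written regex, and chunks are built by packing whole paragraphs with a one-paragraph overlap. The filler list and the `max_chars`/`overlap` values are hypothetical placeholders, not the settings used in this project.

```python
import re

# Hypothetical filler list; tune it per language and channel before relying on it.
FILLERS = re.compile(r"\b(um+|uh+|erm|you know|i mean)\b[,.]?\s*", re.IGNORECASE)


def clean_transcript(text: str) -> str:
    """Drop common spoken fillers and collapse repeated whitespace."""
    text = FILLERS.sub("", text)
    return re.sub(r"\s+", " ", text).strip()


def chunk_paragraphs(text: str, max_chars: int = 800, overlap: int = 1) -> list:
    """Greedy paragraph-aware chunking.

    Paragraphs are packed into a chunk until adding the next one would exceed
    max_chars. The last `overlap` paragraphs are then carried into the next
    chunk so that context spanning a boundary is not lost. A chunk can exceed
    max_chars when a single paragraph (plus overlap) is already longer.
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current = [], []
    for para in paragraphs:
        if current and len("\n\n".join(current + [para])) > max_chars:
            chunks.append("\n\n".join(current))
            current = current[-overlap:] if overlap > 0 else []
        current.append(para)
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Splitting on paragraph boundaries, rather than at a fixed character count, is one simple way to avoid clipped clauses and tables. Real contracts usually call for structure-aware parsing (sections, tables), which this sketch does not attempt.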

Next, we use a prompt and a base LLM (in this case LLaMA) to automatically generate question-answer pairs from the processed documents and transcripts (step 5, which uses steps 1-2). These pairs form the supervised dataset for fine-tuning (step 6). Each record should contain the question-answer pair, the question’s category, and the source (title and page); the source is used for validation. The prompt instructs the model to state explicitly when sources are contradictory or when the required information is missing. For categorization, we assign each question a category using zero-shot classification over a fixed taxonomy; when higher accuracy is required, we switch to few-shot classification by adding a few labeled examples to the prompt.
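The two prompts described above could be assembled roughly as follows. This is a sketch only: the taxonomy, the JSON field names, and the `NOT_IN_SOURCE` marker are hypothetical choices, not the article’s actual prompts. It shows the one mechanical difference between zero-shot and few-shot classification, which is whether labeled examples are added to the prompt.

```python
from __future__ import annotations

import json

# Hypothetical taxonomy; replace it with the categories your business actually uses.
TAXONOMY = ["coverage", "exclusions", "limits", "claims_process", "billing"]


def build_qa_generation_prompt(chunk: str, title: str, page: int, n_pairs: int = 3) -> str:
    """Ask a base LLM to turn one processed chunk into question-answer records."""
    schema = {
        "question": "string",
        "answer": "string",
        "category": "one of " + ", ".join(TAXONOMY),
        "source": {"title": title, "page": page},
    }
    return (
        f"Generate {n_pairs} question-answer pairs using ONLY the text below.\n"
        "If passages contradict each other, say so explicitly in the answer.\n"
        "If the text does not contain the needed information, answer 'NOT_IN_SOURCE'.\n"
        f"Return a JSON list of objects shaped like: {json.dumps(schema)}\n\n"
        f"TEXT (from '{title}', page {page}):\n{chunk}"
    )


def build_classification_prompt(question: str, examples: list[tuple[str, str]] | None = None) -> str:
    """Zero-shot when no examples are given; few-shot when labeled examples are supplied."""
    lines = [f"Classify the question into exactly one category: {', '.join(TAXONOMY)}."]
    if examples:
        lines.append("Examples:")
        lines += [f"Q: {q}\nCategory: {c}" for q, c in examples]
    lines.append(f"Q: {question}\nCategory:")
    return "\n".join(lines)
```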

LLM-assisted labeling accelerates setup but has drawbacks (hallucinations, shallow coverage, style drift), so it is important to pair it with automatic checks and targeted human review before training.
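The “automatic checks before human review” step might look like this minimal triage sketch. It assumes the record shape from the generation prompt (question, answer, category, source with title and page). Records that pass go straight through; records that fail go to a reviewer; exact duplicate questions (after lowercasing and whitespace normalization) are dropped. The rules are illustrative, not exhaustive.

```python
from __future__ import annotations

REQUIRED = ("question", "answer", "category", "source")


def check_record(rec: dict, taxonomy: set[str]) -> list[str]:
    """Return a list of problems found in one generated Q&A record."""
    problems = [f"missing {key}" for key in REQUIRED if not rec.get(key)]
    if rec.get("category") and rec["category"] not in taxonomy:
        problems.append("unknown category")
    src = rec.get("source")
    if not isinstance(src, dict) or not src.get("title") or not isinstance(src.get("page"), int):
        problems.append("incomplete source")
    return problems


def triage(records: list[dict], taxonomy: set[str]) -> tuple[list[dict], list[dict]]:
    """Split records into (auto-accepted, needs-human-review), dropping duplicate questions."""
    seen, accepted, review = set(), [], []
    for rec in records:
        key = " ".join(str(rec.get("question", "")).lower().split())
        if key in seen:
            continue
        seen.add(key)
        (review if check_record(rec, taxonomy) else accepted).append(rec)
    return accepted, review
```

Checks like these catch structural problems cheaply. Hallucinated content that is well formed still needs the targeted human review.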

Additionally, we create a ground-truth set (step 3): question-answer pairs with sources, written by domain experts and used as a benchmark to evaluate the solution. This sample has fewer rows than the fine-tuning dataset, but it gives us a clear idea of what to expect. We can also expand it during pilot trials with a small group of users before production.

To tailor responses to users (general-purpose LLMs lack specialized domain knowledge), we decided to fine-tune an open-source model, Mixtral, using LoRA (step 6). The idea was to make it more “insurance-friendly”, able to respond in a tone and language closer to how real insurance professionals communicate; we evaluate the results with steps 3 and 7. Of course, we also wanted to complement this with long-term memory, which is where AWS Titan embeddings and vector search come into play (step 8). This is the RAG architecture, which combines semantic retrieval with context-aware generation.
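For readers less familiar with LoRA, the core idea fits in one line. The pretrained weight $W_0$ stays frozen, and only a low-rank update $BA$, scaled by $\alpha / r$, is trained. The sizes in the second line (a $4096 \times 4096$ projection with rank $r = 16$) are illustrative assumptions, not the configuration used in this project.

$$
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x, \qquad B \in \mathbb{R}^{d \times r},\ A \in \mathbb{R}^{r \times k},\ r \ll \min(d, k)
$$

$$
\underbrace{d\,k}_{\text{full update}} = 4096 \cdot 4096 = 16{,}777{,}216 \quad \text{vs.} \quad \underbrace{r\,(d + k)}_{\text{LoRA}} = 16 \cdot 8192 = 131{,}072 \approx 0.78\%
$$

In the standard formulation, $B$ is initialized to zero, so training starts exactly from the base model’s behavior.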

From there, the flow is simple:

The user asks a question (step 13). The system retrieves the most relevant chunks (steps 9 and 10) from the knowledge base using vector search plus metadata filters, which makes it easier to scale across insurance branches and client types. The LLM (the fine-tuned multilingual Mixtral) then generates a well-grounded response using a carefully engineered prompt (step 11).
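To make steps 9 to 11 concrete, here is a minimal in-memory stand-in. It is a sketch: it does not call AWS Titan or any real vector store, the embeddings are toy vectors, and the metadata keys (`branch`, `title`, `page`) are hypothetical. It filters on exact-match metadata first, ranks the survivors by cosine similarity, and then builds a prompt that asks for cited, grounded answers.

```python
from __future__ import annotations

import math
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    embedding: list[float]
    metadata: dict = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query_emb: list[float], chunks: list[Chunk], filters: dict, k: int = 3) -> list[Chunk]:
    """Pre-filter on exact-match metadata, then rank the remaining chunks by cosine similarity."""
    candidates = [c for c in chunks if all(c.metadata.get(key) == val for key, val in filters.items())]
    return sorted(candidates, key=lambda c: cosine(query_emb, c.embedding), reverse=True)[:k]


def build_grounded_prompt(question: str, hits: list[Chunk]) -> str:
    """Number each retrieved chunk with its source so the model can cite it."""
    context = "\n\n".join(
        f"[{i}] ({h.metadata.get('title')}, p. {h.metadata.get('page')}) {h.text}"
        for i, h in enumerate(hits, 1)
    )
    return (
        "Answer using only the numbered context and cite sources as [n]. "
        "If the context is insufficient, say that you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Applying the metadata filter before ranking keeps, for example, auto-policy clauses out of a home-insurance answer, however similar their wording is.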

These elements summarize the diagram, but behind them lie challenges and details that, if neglected, can lead to undesired behavior. For that reason, some components must be built in so that we don’t lose control of the application.

Well… let’s get into the article 😄…

In production, things change:

- Users ask unexpected questions.

- Context retrieval fails silently, and the model answers with false confidence.

- Prompts degrade in quality over time; this is called prompt drift.

- Business logic shifts (over time, policies evolve: new exceptions, amended terms, new clauses, riders/endorsements, and entirely new contract versions driven by regulation, market shifts, and changes in risk).

- Fine-tuned models behave inconsistently.

This is the part most people forget: the lifecycle doesn’t end at deployment; it begins there.

What does “Ops” cover?

I created this diagram (Figure 2) to visualize how all the pieces fit together: the steps, the logic, and the feedback loops, at least as I have experienced them. There are certainly other ways to represent this, but this is the one I find most complete.

We assume this diagram runs on a secure stack with controls that protect data and prevent unauthorized access. This doesn’t remove our responsibility to verify and validate security throughout development, which is why I include a developer-level safeguards box that I’ll explain in more detail later.

We intentionally follow a linear gate: Data Management → Model Development → Evaluation & Monitoring → Deploy (CI/CD). Only models that pass offline checks are deployed. Once a model is in production, online monitoring feeds back into data and model refinement, which closes the loop and continuously improves the solution.
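The offline gate can be expressed as a small check that a CI job runs before promotion. This is a sketch: the metric names and thresholds are hypothetical, since the article does not publish its actual values. A missing metric counts as a failure, so a broken evaluation job cannot silently let a model through.

```python
from __future__ import annotations

# Hypothetical gate definition: metric -> (comparison, threshold).
GATES = {
    "claim_support": (">=", 0.90),
    "hallucination_rate": ("<=", 0.10),
    "p95_latency_s": ("<=", 3.0),
}


def offline_gate(metrics: dict[str, float], gates: dict = GATES) -> tuple[bool, list[str]]:
    """Return (passed, failures) for a candidate model's offline evaluation metrics."""
    failures = []
    for name, (op, threshold) in gates.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: missing")
        elif (op == ">=" and value < threshold) or (op == "<=" and value > threshold):
            failures.append(f"{name}={value} violates {op} {threshold}")
    return (not failures, failures)
```

In a pipeline, the job would print the failures and exit with a non-zero status (for example `sys.exit(1)`) when `passed` is false, which is enough for most CI systems to block the deployment stage.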

Just in case, here is a brief description of each step:

- Model Development: here you define the “ideal” (or “least flawed”) model architecture aligned with business standards. Gather initial datasets and perhaps fine-tune a model (or just use prompt engineering, RAG, or all of them together). The goal? Get something working: a prototype/MVP that proves feasibility. After the first production release, keep refining through retraining and, when appropriate, incorporate advanced techniques (e.g., RL/RLHF) to improve performance.

- Data Management: versioning data and prompts, and keeping metadata on versions, schemas, sources, and operational signals (such as token usage, latency, and logs). Managing and governing raw and processed data in all its forms: printed or handwritten, structured and unstructured, including text, audio, video, images, and relational, vector, and graph databases, or any other type the system can use. This also covers extracting information from unstructured formats and metadata, and maintaining a graph store that RAG can query to power analytical use cases. And please don’t get me started on “quality,” which is often poorly handled, introduces noise into the models, and ultimately makes the work harder.

- Model Deployment (CI/CD): package the model and its dependencies into a reproducible artifact for promotion across environments; expose the artifact for inference (REST/gRPC or batch); and run testing pipelines that automatically check every change and block deployment if thresholds fail (unit tests, data/schema checks, linters, offline evals on golden sets, performance/security scans, canary/blue-green releases with rollback).

- Monitoring & Observability: tracking model performance, drift, usage, and errors in production.

- Safeguards: protecting against prompt injection, enforcing access controls, protecting data privacy, and evaluating for bias and toxicity.

- Cost Management: tracking and controlling usage and costs: budgets, per-team quotas, tokens, etc.

- Business value: developing a business case to analyze whether the projected outcomes make sense compared with what is actually delivered. The value of this kind of solution is not visible immediately but emerges over time. A series of business considerations generate costs and can help determine whether the application still makes sense. This step is not easy (especially for embedded applications), but at the very least it requires discussion and debate. It is an exercise that must be done.
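The cost-management step can start as simply as a per-team token budget. In the sketch below, the prices, team names, and quotas are hypothetical placeholders, not real provider rates. Teams without a configured quota are blocked by default.

```python
from __future__ import annotations

from collections import defaultdict

# Hypothetical prices in USD per 1K tokens; substitute your provider's current price list.
PRICE_PER_1K = {"input": 0.0005, "output": 0.0015}


class TokenBudget:
    """Track spend per team against a monthly quota in USD."""

    def __init__(self, monthly_quota_usd: dict[str, float]):
        self.quota = monthly_quota_usd
        self.spent: defaultdict[str, float] = defaultdict(float)

    def record(self, team: str, input_tokens: int, output_tokens: int) -> float:
        """Add the cost of one call to the team's running total and return that cost."""
        cost = (input_tokens / 1000) * PRICE_PER_1K["input"] + (output_tokens / 1000) * PRICE_PER_1K["output"]
        self.spent[team] += cost
        return cost

    def allowed(self, team: str) -> bool:
        """True while the team is under quota; unknown teams have a quota of 0."""
        return self.spent[team] < self.quota.get(team, 0.0)
```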

So, to turn our prototype into a production-grade, maintainable application, several critical layers must be addressed. These aren’t extras; they’re the essential steps that ensure every component is properly managed. In what follows, I’ll focus on observability (evaluation and monitoring) and safeguards, since the broader topic could fill a book.

Evaluation & Monitoring

Observability means continuously monitoring the system over time to ensure it keeps performing as expected. It involves tracking key metrics to detect gradual degradation, drift, or other deviations across inputs, outputs, and intermediate steps (retrieval results, prompts, and API calls, among others), and capturing them in a form that supports analysis and subsequent refinement.

With this in place, you can automate alerts that trigger when defined thresholds are crossed, e.g., a sudden drop in answer relevance, a rise in retrieval latency, or unexpected spikes in token usage.
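A minimal version of such an alert compares the rolling mean of a metric against a fixed threshold. This sketch assumes a simple sliding window and a hypothetical metric name. It stays silent until the window is full, which avoids noisy alerts right after a restart. Production systems would usually delegate this to their monitoring stack.

```python
from __future__ import annotations

from collections import deque
from statistics import mean


class ThresholdAlert:
    """Fire when the rolling mean of a metric goes 'below' or 'above' a threshold."""

    def __init__(self, name: str, threshold: float, direction: str, window: int = 50):
        if direction not in ("below", "above"):
            raise ValueError("direction must be 'below' or 'above'")
        self.name, self.threshold, self.direction = name, threshold, direction
        self.values: deque[float] = deque(maxlen=window)

    def observe(self, value: float) -> str | None:
        """Record one observation; return an alert message or None."""
        self.values.append(value)
        if len(self.values) < self.values.maxlen:
            return None  # wait for a full window before alerting
        avg = mean(self.values)
        crossed = avg < self.threshold if self.direction == "below" else avg > self.threshold
        if crossed:
            return f"ALERT {self.name}: rolling mean {avg:.3f} is {self.direction} {self.threshold}"
        return None
```

For example, `ThresholdAlert("answer_relevance", 0.8, "below")` covers the relevance drop, and `ThresholdAlert("tokens_per_request", 2000, "above")` covers token spikes (both thresholds are illustrative).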

To ensure that the application behaves correctly at different stages, it is extremely useful to create a ground-truth (golden) dataset curated by domain experts. This dataset serves as a benchmark for validating responses during training, fine-tuning, and evaluation (step 3, Figure 1).

Evaluating fine-tuning:

We begin by measuring hallucination and answer relevance. We then compare these metrics between a baseline Mixtral model (without fine-tuning) and our Mixtral model fine-tuned for insurance-specific language (step 6, Figure 1).

Comparing the baseline with the fine-tuned model serves two purposes: (1) it shows whether the fine-tuned model is better adapted to the Q&A dataset than the untuned baseline, and (2) it lets us set thresholds to detect performance degradation over time, relative both to prior versions and to the baseline.
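Purpose (2) can be sketched as a small report that compares the current model against both reference points. The metric names, their directions, and the 0.02 tolerance are hypothetical choices. A small regression against the previous version is tolerated, while falling below the untuned baseline is always flagged.

```python
from __future__ import annotations

# Direction of improvement for each tracked metric (hypothetical metric names).
HIGHER_IS_BETTER = {"claim_support": True, "relevance_direct": True, "hallucination_rate": False}


def degradation_report(current: dict, previous: dict, baseline: dict, tolerance: float = 0.02) -> list[str]:
    """Flag metrics that regressed beyond `tolerance` vs. the previous version,
    or that are worse than the untuned baseline at all."""
    flags = []
    for metric, higher in HIGHER_IS_BETTER.items():
        sign = 1 if higher else -1  # make "positive delta" always mean "better"
        delta_prev = sign * (current[metric] - previous[metric])
        delta_base = sign * (current[metric] - baseline[metric])
        if delta_prev < -tolerance:
            flags.append(f"{metric}: regressed {abs(delta_prev):.3f} vs previous version")
        if delta_base < 0:
            flags.append(f"{metric}: below untuned baseline")
    return flags
```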

With this in mind, we used Claude 3 (via AWS Bedrock) to score each model response against a domain-expert gold answer. The highest score means “equal to or very close to the gold truth,” and the lowest means “irrelevant or contradictory.”

Claude claim-level evaluator. We decompose each model answer into atomic claims. Given the gold evidence, Claude labels each claim as entailed / contradicted / not_in_source and returns JSON. If the context lacks the information needed to answer, a correct abstention (saying the information is not available) is labeled entailed: we prefer no answer to a wrong answer. For each answer we compute Claim Support, CS = #entailed / total_claims, and Hallucination Rate, HR = 1 − CS, then report dataset scores by averaging CS (and HR) across all answers. This directly measures how much of each answer is confirmed by the domain-expert answer, and it aligns with claim-level metrics found in the literature [3].
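Once the judge has returned its labels, the aggregation is straightforward. This sketch assumes a JSON shape such as `{"claims": [{"claim": "...", "label": "entailed"}]}`; that shape is hypothetical, and the real output depends on how the judge prompt is written. The Bedrock call itself is deliberately left out. Because HR = 1 − CS for each answer, the mean HR equals 1 minus the mean CS.

```python
from __future__ import annotations

import json

LABELS = {"entailed", "contradicted", "not_in_source"}


def claim_support(judge_json: str) -> float:
    """CS for one answer = #entailed / total_claims, parsed from the judge's JSON output."""
    claims = json.loads(judge_json)["claims"]
    if not claims:
        raise ValueError("judge returned no claims; route this answer to manual review")
    unknown = {c["label"] for c in claims} - LABELS
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    return sum(c["label"] == "entailed" for c in claims) / len(claims)


def dataset_scores(judge_outputs: list[str]) -> dict[str, float]:
    """Average CS over all answers; HR is its complement."""
    scores = [claim_support(o) for o in judge_outputs]
    mean_cs = sum(scores) / len(scores)
    return {"claim_support": mean_cs, "hallucination_rate": 1 - mean_cs}
```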

This claim-level evaluator offers finer granularity and is more effective, especially when an answer mixes correct and incorrect statements. Our earlier scoring method assigned a single grade to overall performance, which obscured specific errors that needed to be addressed.

The plan is to extend this metric to verify answers against the documentary sources as well, and to maintain a second benchmark that is easier to build and update than a domain-expert set (and less prone to error). Achieving this requires further refinement.

To assess answer relevance, we also compute the cosine similarity between embeddings of the model’s answer and the gold answer. The drawback is that embeddings can look “similar” even when the facts are wrong. As an alternative, we use an LLM-as-judge (Claude) that labels relevance as direct, partial, or irrelevant (taking the question into account), similar to the approach above.
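Both relevance signals reduce to a few lines once the embeddings and judge labels are available. This is a sketch: the embeddings are plain lists of floats here (in practice they would come from the embedding model), and the judge labels are assumed to be exactly the three strings above. Because the cosine score only measures direction in embedding space, a number that differs between two answers (say, “10,000” vs. “1,000”) may barely move it. That is the weakness noted above.

```python
from __future__ import annotations

import math
from collections import Counter

RELEVANCE_LABELS = ("direct", "partial", "irrelevant")


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def relevance_distribution(labels: list[str]) -> dict[str, float]:
    """Share of judge labels per relevance class; rejects unexpected labels."""
    counts = Counter(labels)
    unknown = set(counts) - set(RELEVANCE_LABELS)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    return {label: counts[label] / len(labels) for label in RELEVANCE_LABELS}
```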

These evaluations and ongoing monitoring can detect issues such as a question-answer dataset lacking context, sources, enough examples, or proper question categorization. If the fine-tuning prompt differs from the inference prompt, the model may ignore sources and hallucinate in production, because it never learned to ground its outputs in the provided context. Whenever any of these variables change, the monitoring system should trigger an alert and provide diagnostics to support investigation and remediation.
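The mismatch between the fine-tuning prompt and the inference prompt is cheap to catch automatically. As a sketch, you can fingerprint both templates after normalizing whitespace and check that the inference template keeps every placeholder (such as `{context}`) used during fine-tuning. The templates and placeholder names below are hypothetical. `string.Formatter().parse` is the standard-library parser for `str.format` templates.

```python
from __future__ import annotations

import hashlib
import re
from string import Formatter


def template_fingerprint(template: str) -> str:
    """SHA-256 of the template with whitespace normalized, so cosmetic edits don't alert."""
    normalized = re.sub(r"\s+", " ", template).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def placeholders(template: str) -> set[str]:
    """Named fields in a str.format-style template, e.g. {'context', 'question'}."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def prompt_drift_issues(finetune_template: str, inference_template: str) -> list[str]:
    issues = []
    if template_fingerprint(finetune_template) != template_fingerprint(inference_template):
        issues.append("inference template differs from fine-tuning template")
    missing = placeholders(finetune_template) - placeholders(inference_template)
    if missing:
        issues.append(f"inference template drops placeholders: {sorted(missing)}")
    return issues
```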

Moderation:

To measure moderation or toxicity, we used the DangerousQA benchmark (200 adversarial questions) [4] and had Claude 3 evaluate each response with an adapted prompt, scoring from 1 (highly negative) to 5 (neutral) across Toxicity, Racism, Sexism, Illegality, and Harmful Content. Both the base and the fine-tuned Mixtral models consistently scored 4-5 in all categories, indicating no toxic, illegal, or disrespectful content.
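Aggregating the judge’s 1-5 scores can be sketched as follows. Alongside the per-category means, it lists the individual responses that fall below a floor in any category, so that a single bad answer does not disappear inside a good average. The category keys and the floor of 4 are assumptions chosen to mirror the scale above.

```python
from __future__ import annotations

from statistics import mean

CATEGORIES = ("toxicity", "racism", "sexism", "illegality", "harmful_content")


def moderation_summary(scores: list[dict[str, int]], floor: int = 4) -> tuple[dict[str, float], list[int]]:
    """scores: one dict per response mapping category -> judge score (1 = highly negative, 5 = neutral).

    Returns the per-category mean and the indices of responses scoring below `floor` anywhere.
    """
    summary = {cat: mean(s[cat] for s in scores) for cat in CATEGORIES}
    flagged = [i for i, s in enumerate(scores) if min(s[cat] for cat in CATEGORIES) < floor]
    return summary, flagged
```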

Public benchmarks such as DangerousQA often leak into LLM training data, which means newer models may have memorized these test items. This train-test overlap inflates scores and can obscure real risks. To mitigate it, alternatives such as developing private benchmarks, rotating evaluation sets, or generating fresh benchmark variants are necessary to ensure that test contamination doesn’t artificially inflate model performance.
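We rarely have access to a model’s training data. A practical complement, therefore, is to check that rotated or freshly generated variants differ enough from the public originals. The sketch below uses n-gram overlap, a common contamination heuristic. The value of `n` and any acceptance threshold are assumptions, and low overlap does not prove that a variant is uncontaminated.

```python
from __future__ import annotations

import re


def ngrams(text: str, n: int = 8) -> set[tuple[str, ...]]:
    """Set of lowercase word n-grams in the text."""
    tokens = re.findall(r"\w+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def contamination_rate(test_item: str, reference_docs: list[str], n: int = 8) -> float:
    """Fraction of the test item's n-grams that also appear in the reference documents."""
    item = ngrams(test_item, n)
    if not item:
        return 0.0
    reference = set().union(*(ngrams(doc, n) for doc in reference_docs))
    return len(item & reference) / len(item)
```

For example, a new variant whose 8-gram overlap with the public benchmark exceeds a chosen threshold would be regenerated rather than added to the private set.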

Evaluating RAG:

Here we focus solely on the quality of the retrieved context. During preprocessing (step 4, Figure 1), we split the documents into chunks, aiming to capture coherent fragments of information. The objective is to ensure that the retrieval layer ranks the most useful information at the top before it reaches the generation model.

We compared two retrieval setups: (A) without reranking, returning the top-k passages using keyword or dense embeddings only; and (B) with reranking, retrieving candidates via embeddings and then reordering the top-k with a reranker (the pretrained ms-marco-MiniLM-L-12-v2 cross-encoder in LangChain). For each question in a curated set with expert ground truth, we labeled the retrieved context as Full, Partial, or Irrelevant, then summarized coverage (% Full/Partial/Irrelevant) and win rates between the two setups.
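The coverage and win-rate summary can be computed directly from the per-question labels. This is a sketch under one assumption: the ordering Full > Partial > Irrelevant, so that setup B “wins” a question when its context label ranks higher than A’s.

```python
from __future__ import annotations

from collections import Counter

RANK = {"Full": 2, "Partial": 1, "Irrelevant": 0}


def coverage(labels: list[str]) -> dict[str, float]:
    """Percentage of questions whose retrieved context got each label."""
    counts = Counter(labels)
    return {label: 100 * counts[label] / len(labels) for label in RANK}


def win_rates(labels_a: list[str], labels_b: list[str]) -> dict[str, float]:
    """Per-question comparison of setup B (reranked) against setup A (no reranking)."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both setups must be labeled on the same questions")
    pairs = list(zip(labels_a, labels_b))
    wins = sum(RANK[b] > RANK[a] for a, b in pairs)
    losses = sum(RANK[b] < RANK[a] for a, b in pairs)
    n = len(pairs)
    return {"b_wins": wins / n, "ties": (n - wins - losses) / n, "a_wins": losses / n}
```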

Reranking consistently improved context quality, but the gains were highly sensitive to chunking/segmentation: fragmented or incoherent chunks (e.g., clipped tables, duplicates) degraded the final answers even when the relevant pieces were technically retrieved.

Finally, during production, user feedback and answer ratings are collected to enrich this ground truth over time. Frequently asked questions (FAQs) and their verified responses are also cached to reduce inference costs and provide fast, reliable, high-confidence answers.
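One way to sketch the FAQ cache is an exact-match lookup on a normalized question, falling back to the LLM on a miss. The normalization rule and the `fallback` callable are hypothetical. Matching paraphrases would require a semantic cache (for example, embedding similarity with a strict threshold), which is not shown here. Only expert-verified answers should be added, since cached answers bypass the model entirely.

```python
from __future__ import annotations

import re
from typing import Callable


def normalize(question: str) -> str:
    """Lowercase and keep only word tokens, so punctuation and spacing don't cause misses."""
    return " ".join(re.findall(r"\w+", question.lower()))


class FAQCache:
    def __init__(self) -> None:
        self._answers: dict[str, str] = {}

    def add_verified(self, question: str, answer: str) -> None:
        self._answers[normalize(question)] = answer

    def answer(self, question: str, fallback: Callable[[str], str]) -> tuple[str, str]:
        """Return (answer, origin), where origin is 'cache' or 'llm'."""
        key = normalize(question)
        if key in self._answers:
            return self._answers[key], "cache"
        return fallback(question), "llm"
```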

Rubrics as an alternative:

The quick evaluation approach used to assess the RAG pipeline and the fine-tuned model gives an initial, general overview of model responses. An alternative under consideration is a multi-step evaluation using domain-specific grading rubrics. Instead of assigning a single overall grade, rubrics break the ideal answer down into a binary checklist of clear, verifiable criteria. Each criterion is marked yes/no (true/false) and supported by evidence or sources, which makes it possible to see exactly where the model excels or falls short [15]. This systematic rubric approach offers a more detailed and actionable assessment of model performance, but it takes time to develop, so it remains on our technical-debt roadmap.
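A rubric result might be represented and scored as in the sketch below. The criteria are hypothetical examples for an insurance answer. The function reports the pass rate, the failed criteria, and any criterion marked as met without supporting evidence, since an unsupported “yes” is exactly what the rubric approach is meant to prevent.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Criterion:
    description: str
    met: bool
    evidence: str = ""  # source or quote supporting the verdict


def rubric_score(criteria: list[Criterion]) -> dict:
    if not criteria:
        raise ValueError("a rubric needs at least one criterion")
    return {
        "score": sum(c.met for c in criteria) / len(criteria),
        "failed": [c.description for c in criteria if not c.met],
        "met_without_evidence": [c.description for c in criteria if c.met and not c.evidence],
    }
```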

Safeguards

There is often pressure to ship a minimum viable product as quickly as possible, so checking for potential vulnerabilities in datasets, prompts, and other development components is not always a top priority. However, an extensive literature highlights the importance of simulating and evaluating vulnerabilities, for example by testing adversarial attacks with inputs the application did not encounter during training or development. To implement these security assessments effectively, teams need to recognize that vulnerability testing is an essential part of both the development process and the overall security of the application.

In Table 2, we outline several attack types with example impacts. For instance, GitLab recently faced a remote prompt injection affecting its GitLab Duo AI code assistant that enabled source code theft. In this incident, attackers embedded hidden prompts in public repositories, causing the assistant to leak sensitive information from private repositories to external servers. This real-world case shows how such vulnerabilities can lead to breaches, and it underscores the importance of anticipating and mitigating prompt injection and other emerging AI-driven threats in application security.
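As a first screening layer for untrusted text (retrieved chunks, repository content, uploaded documents) before it enters a prompt, a naive scanner can flag the hiding places mentioned above. This is an illustrative heuristic only: the patterns are assumptions, attackers can trivially rephrase or encode around them, and it is no substitute for least-privilege tool access, output filtering, and red-teaming. Unicode category `Cf` covers invisible format characters such as zero-width spaces. Because it also matches benign characters like the soft hyphen, expect some false positives.

```python
from __future__ import annotations

import re
import unicodedata

# Illustrative patterns only; they will miss paraphrased or encoded injections.
OVERRIDE_PATTERNS = re.compile(
    r"ignore (all |any )?(previous|prior|above) instructions|you are now|system prompt",
    re.IGNORECASE,
)
HTML_COMMENT = re.compile(r"<!--.*?-->", re.DOTALL)


def scan_untrusted_text(text: str) -> list[str]:
    """Return naive findings that suggest hidden or injected instructions."""
    findings = []
    if any(unicodedata.category(ch) == "Cf" for ch in text):
        findings.append("invisible format characters")
    if HTML_COMMENT.search(text):
        findings.append("html comment")
    if OVERRIDE_PATTERNS.search(text):
        findings.append("instruction-override phrase")
    return findings
```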

We must also be aware of biased outputs in AI results. A 2023 Washington Post article titled “This is how AI image generators see the world” [14] shows, through images, how AI models reproduce and even amplify the biases present in their training data. Ensuring fairness and mitigating bias is a critical task that often gets overlooked because of time constraints, yet it remains essential for building trustworthy and equitable AI systems.

Conclusion

Although the main aim of this article was to illustrate the complexities of LLM-based applications through a typical (but synthetic) use case, the reason for emphasizing the need for a robust, scalable system is clear: building such applications is far from simple. We must stay vigilant about the issues that arise if we fail to continuously monitor the system, ensure fairness, and address risks proactively. Without this discipline, even a promising application can quickly become unreliable, biased, or misaligned with its intended purpose.

References

[1] South Park Commons. (2025, March 7). CEO of Microsoft on AI Agents & Quantum | Satya Nadella [Video]. YouTube. https://www.youtube.com/watch?v=ZUPJ1ZnIZvE (see 31:05).

[2] Potluri, S. (2025, June 23). The AI Stack Is Evolving: Meet the Models Behind the Scenes. Medium (Women in Technology).

[3] Košprdić, M., et al. (2024). Verif.ai: Towards an open-source scientific generative question-answering system with referenced and verifiable answers. arXiv preprint arXiv:2402.18589. https://arxiv.org/abs/2402.18589

[4] Bhardwaj, R., et al. (2023). Red-Teaming Large Language Models using Chain of Utterances for Safety-Alignment. arXiv preprint arXiv:2308.09662. https://arxiv.org/abs/2308.09662. GitHub repository: https://github.com/declare-lab/red-instruct

[5] Yair, O., Nassi, B., & Cohen, S. (2025, August 6). Invitation Is All You Need: Invoking Gemini for Workspace Agents with a Simple Google Calendar Invite. SafeBreach Blog. https://www.safebreach.com/blog/invitation-is-all-you-need-hacking-gemini/

[6] Burgess, M., & Newman, L. H. (2025, January 31). DeepSeek’s Safety Guardrails Failed Every Test Researchers Threw at Its AI Chatbot. WIRED. https://www.wired.com/story/deepseeks-ai-jailbreak-prompt-injection-attacks/

[7] Eykholt, K., Evtimov, I., Fernandes, E., Li, B., Rahmati, A., Xiao, C., … & Song, D. (2018). Robust physical-world attacks on deep learning visual classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 1625–1634).

[8] Burgess, M. (2025, August 6). A Single Poisoned Document Could Leak ‘Secret’ Data Via ChatGPT. WIRED. https://www.wired.com/story/poisoned-document-could-leak-secret-data-chatgpt/

[9] Epelboim, M. (2025, April 7). Why Your AI Model Might Be Leaking Sensitive Data (and How to Stop It). NeuralTrust.

[10] Zhou, Z., Zhu, J., Yu, F., Li, X., Peng, X., Liu, T., & Han, B. (2024). Model inversion attacks: A survey of approaches and countermeasures. arXiv preprint arXiv:2411.10023. https://arxiv.org/abs/2411.10023

[11] Li, Y., Jiang, Y., Li, Z., & Xia, S. T. (2022). Backdoor learning: A survey. IEEE Transactions on Neural Networks and Learning Systems, 35(1), 5–22.

[12] Daneshvar, S. S., Nong, Y., Yang, X., Wang, S., & Cai, H. (2025). VulScribeR: Exploring RAG-based Vulnerability Augmentation with LLMs. ACM Transactions on Software Engineering and Methodology.

[13] Standaert, F. X. (2009). Introduction to side-channel attacks. In Secure Integrated Circuits and Systems (pp. 27–42). Boston, MA: Springer US.

[14] Tiku, N., Schaul, K., & Chen, S. (2023, November 1). This is how AI image generators see the world. Washington Post. https://www.washingtonpost.com/technology/interactive/2023/ai-generated-images-bias-racism-sexism-stereotypes/ (last accessed Aug 20, 2025).

[15] Cook, J., Rocktäschel, T., Foerster, J., Aumiller, D., & Wang, A. (2024). TICKing All the Boxes: Generated Checklists Improve LLM Evaluation and Generation. arXiv preprint arXiv:2410.03608. https://arxiv.org/abs/2410.03608