On this article, you’ll study three dependable methods — ordinal encoding, one-hot encoding, and goal (imply) encoding — for turning categorical options into model-ready numbers whereas preserving their which means.

Matters we are going to cowl embody:

- When and methods to apply ordinal (label-style) encoding for actually ordered classes.

- Utilizing one-hot encoding safely for nominal options and understanding its trade-offs.

- Making use of goal (imply) encoding for high-cardinality options with out leaking the goal.

Time to get to work.

3 Sensible Methods to Encode Categorical Options for Machine Studying

Picture by Editor

Introduction

Should you spend any time working with real-world information, you shortly notice that not every thing is available in neat, clear numbers. In reality, many of the attention-grabbing points, the issues that outline individuals, locations, and merchandise, are captured by classes. Take into consideration a typical buyer dataset: you’ve received fields like Metropolis, Product Sort, Schooling Degree, and even Favourite Coloration. These are all examples of categorical options, that are variables that may tackle certainly one of a restricted, fastened variety of values.

The issue? Whereas our human brains seamlessly course of the distinction between “Purple” and “Blue” or “New York” and “London,” the machine studying fashions we use to make predictions can’t. Fashions like linear regression, resolution timber, or neural networks are essentially mathematical features. They function by multiplying, including, and evaluating numbers. They should calculate distances, slopes, and possibilities. Once you feed a mannequin the phrase “Advertising and marketing,” it doesn’t see a job title; it simply sees a string of textual content that has no numerical worth it may well use in its equations. This lack of ability to course of textual content is why your mannequin will crash immediately if you happen to attempt to practice it on uncooked, non-numeric labels.

The first objective of function engineering, and particularly encoding, is to behave as a translator. Our job is to transform these qualitative labels into quantitative, numerical options with out dropping the underlying which means or relationships. If we do it proper, the numbers we create will carry the predictive energy of the unique classes. As an illustration, encoding should be sure that the quantity representing a high-level Schooling Degree is quantitatively “increased” than the quantity representing a decrease stage, or that the numbers representing completely different Cities mirror their distinction in buy habits.

To sort out this problem, we now have developed good methods to carry out this translation. We’ll begin with essentially the most intuitive strategies, the place we merely assign numbers primarily based on rank or create separate binary flags for every class. Then, we’ll transfer on to a robust method that makes use of the goal variable itself to construct a single, dense function that captures a class’s true predictive affect. By understanding this development, you’ll be outfitted to decide on the proper encoding technique for any categorical information you encounter.

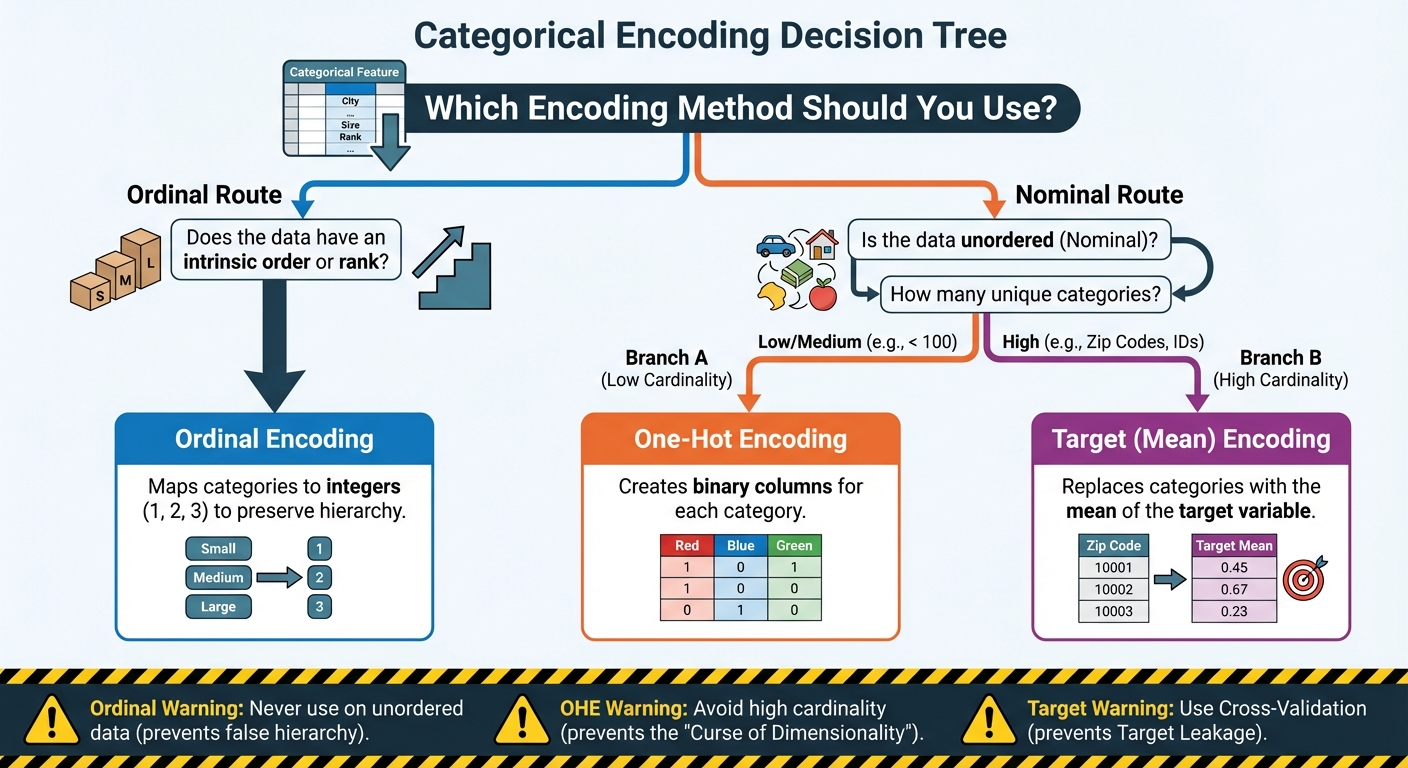

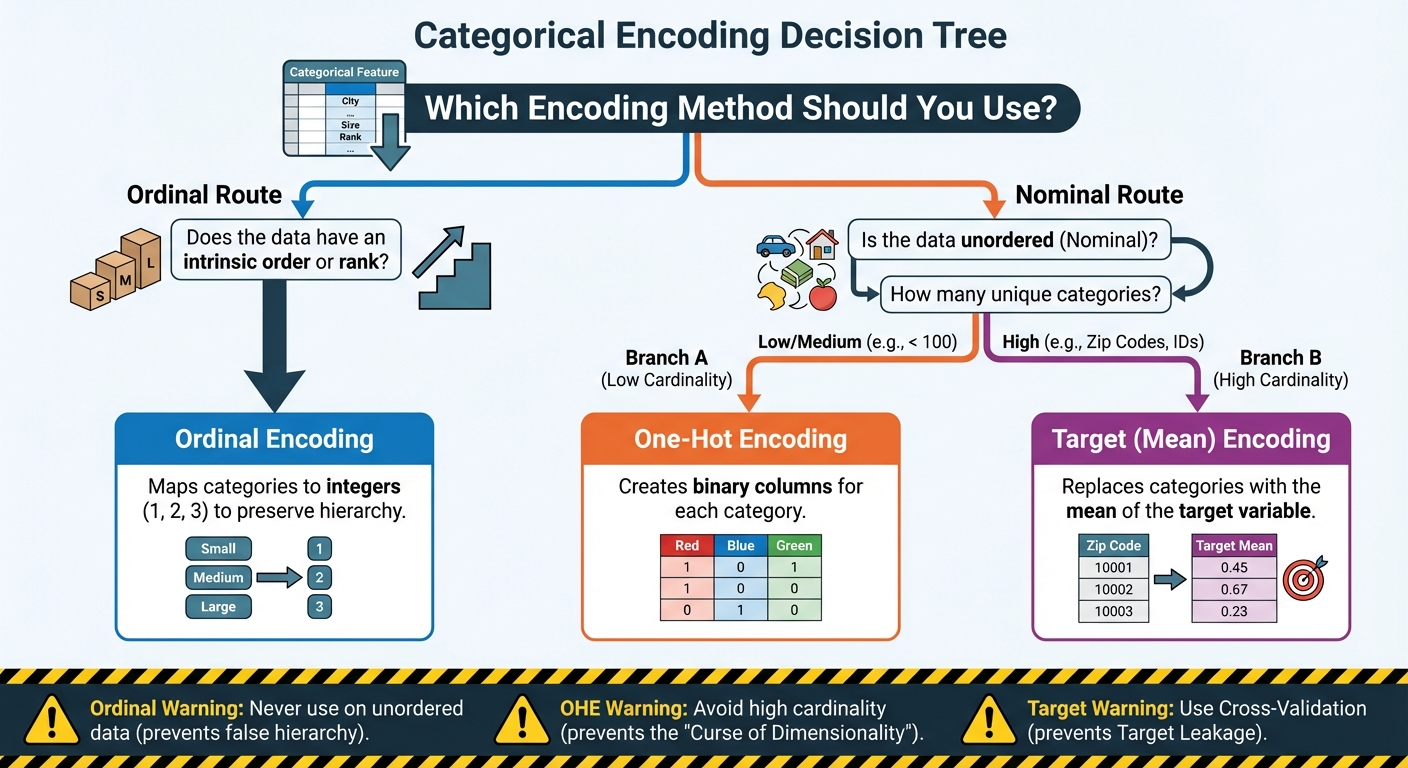

3 Sensible Methods to Encode Categorical Options for Machine Studying: A Flowchart (click on to enlarge)

Picture by Editor

1. Preserving Order: Ordinal and Label Encoding

The primary, and easiest, translation method is designed for categorical information that isn’t only a assortment of random names, however a set of labels with an intrinsic rank or order. That is the important thing perception. Not all classes are equal; some are inherently “increased” or “extra” than others.

The most typical examples are options that characterize some kind of scale or hierarchy:

- Schooling Degree: (Excessive College => Faculty => Grasp’s => PhD)

- Buyer Satisfaction: (Very Poor => Poor => Impartial => Good => Wonderful)

- T-shirt Dimension: (Small => Medium => Massive)

Once you encounter information like this, the simplest technique to encode it’s to make use of Ordinal Encoding (typically informally known as “label encoding” when mapping classes to integers).

The Mechanism

The method is easy: you map the classes to integers primarily based on their place within the hierarchy. You don’t simply assign numbers randomly; you explicitly outline the order.

For instance, if in case you have T-shirt sizes, the mapping would appear to be this:

| Unique Class | Assigned Numerical Worth |

|---|---|

| Small (S) | 1 |

| Medium (M) | 2 |

| Massive (L) | 3 |

| Additional-Massive (XL) | 4 |

By doing this, you might be educating the machine that an XL (4) is numerically “extra” than an S (1), which appropriately displays the real-world relationship. The distinction between an M (2) and an L (3) is mathematically the identical because the distinction between an L (3) and an XL (4), a unit enhance in measurement. This ensuing single column of numbers is what you feed into your mannequin.

Introducing a False Hierarchy

Whereas Ordinal Encoding is the proper alternative for ordered information, it carries a significant threat when misapplied. It’s essential to by no means apply it to nominal (non-ordered) information.

Contemplate encoding a listing of colours: Purple, Blue, Inexperienced. Should you arbitrarily assign them: Purple = 1, Blue = 2, Inexperienced = 3, your machine studying mannequin will interpret this as a hierarchy. It’ll conclude that “Inexperienced” is twice as massive or essential as “Purple,” and that the distinction between “Blue” and “Inexperienced” is identical because the distinction between “Purple” and “Blue.” That is virtually actually false and can severely mislead your mannequin, forcing it to study non-existent numerical relationships.

The rule right here is easy and agency: use Ordinal Encoding solely when there’s a clear, defensible rank or sequence between the classes. If the classes are simply names with none intrinsic order (like kinds of fruit or cities), it’s essential to use a unique encoding method.

Implementation and Code Clarification

We are able to implement this utilizing the OrdinalEncoder from scikit-learn. The hot button is that we should explicitly outline the order of the classes ourselves.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

from sklearn.preprocessing import OrdinalEncoder import numpy as np

# Pattern information representing buyer training ranges information = np.array([[‘High School’], [‘Bachelor’s’], [‘Master’s’], [‘Bachelor’s’], [‘PhD’]])

# Outline the specific order for the encoder # This ensures that ‘Bachelor’s’ is appropriately ranked under ‘Grasp’s’ education_order = [ [‘High School’, ‘Bachelor’s’, ‘Master’s’, ‘PhD’] ]

# Initialize the encoder and go the outlined order encoder = OrdinalEncoder(classes=education_order)

# Match and rework the info encoded_data = encoder.fit_transform(information)

print(“Unique Knowledge:n”, information.flatten()) print(“nEncoded Knowledge:n”, encoded_data.flatten()) |

Within the code above, the crucial half is setting the classes parameter when initializing OrdinalEncoder. By passing the precise record education_order, we inform the encoder that ‘Excessive College’ comes first, then ‘Bachelor’s’, and so forth. The encoder then assigns the corresponding integers (0, 1, 2, 3) primarily based on this practice sequence. If we had skipped this step, the encoder might need assigned the integers primarily based on alphabetical order, which might destroy the significant hierarchy we wished to protect.

2. Eliminating Rank: One-Scorching Encoding (OHE)

As we mentioned, Ordinal Encoding solely works in case your classes have a transparent rank. However what about options which are purely nominal, which means they’ve names, however no inherent order? Take into consideration issues like Nation, Favourite Animal, or Gender. Is “France” higher than “Japan”? Is “Canine” mathematically higher than “Cat”? Completely not.

For these non-ordered options, we want a technique to encode them numerically with out introducing a false sense of hierarchy. The answer is One-Scorching Encoding (OHE), which is by far essentially the most broadly used and most secure encoding method for nominal information.

The Mechanism

The core concept behind OHE is easy: as a substitute of changing a single class column with a single quantity, it’s changed with a number of binary columns. For each distinctive class in your unique function, you create a brand-new column. These new columns are sometimes known as dummy variables.

For instance, in case your unique Coloration function has three distinctive classes (Purple, Blue, Inexperienced), OHE will create three new columns: Color_Red, Color_Blue, and Color_Green.

In any given row, solely a type of columns can be “sizzling” (a price of 1), and the remainder can be 0.

| Unique Coloration | Color_Red | Color_Blue | Color_Green |

|---|---|---|---|

| Purple | 1 | 0 | 0 |

| Blue | 0 | 1 | 0 |

| Inexperienced | 0 | 0 | 1 |

This technique is good as a result of it utterly solves the hierarchy drawback. The mannequin now treats every class as a totally separate, impartial function. “Blue” is not numerically associated to “Purple”; it simply exists in its personal binary column. That is the most secure and most dependable default alternative when you already know your classes haven’t any order.

The Commerce-off

Whereas OHE is the usual for options with low to medium cardinality (i.e., a small to reasonable variety of distinctive values, sometimes below 100), it shortly turns into an issue when coping with high-cardinality options.

Cardinality refers back to the variety of distinctive classes in a function. Contemplate a function like Zip Code in the US, which may simply have over 40,000 distinctive values. Making use of OHE would pressure you to create 40,000 brand-new binary columns. This results in two main points:

- Dimensionality: You abruptly balloon the width of your dataset, creating a large, sparse matrix (a matrix containing principally zeros). This dramatically slows down the coaching course of for many algorithms.

- Overfitting: Many classes will solely seem a couple of times in your dataset. The mannequin may assign an excessive weight to certainly one of these uncommon, particular columns, basically memorizing its one look slightly than studying a common sample.

When a function has hundreds of distinctive classes, OHE is solely impractical. This limitation forces us to look past OHE and leads us on to our third, extra superior method for coping with information at a large scale.

Implementation and Code Clarification

In Python, the OneHotEncoder from scikit-learn or the get_dummies() perform from pandas are the usual instruments. The pandas technique is usually simpler for fast transformation:

|

import pandas as pd

# Pattern information with a nominal function: Coloration information = pd.DataFrame({ ‘ID’: [1, 2, 3, 4, 5], ‘Coloration’: [‘Red’, ‘Blue’, ‘Red’, ‘Green’, ‘Blue’] })

# 1. Apply One-Scorching Encoding utilizing pandas get_dummies df_encoded = pd.get_dummies(information, columns=[‘Color’], prefix=‘Is’)

print(df_encoded) |

On this code, we go our DataFrame information and specify the column we wish to rework (Coloration). The prefix='Is' merely provides a clear prefix (like ‘Is_Red‘) to the brand new columns for higher readability. The output DataFrame retains the ID column and replaces the one Coloration column with three new, impartial binary options: Is_Red, Is_Blue, and Is_Green. A row that was initially ‘Purple’ now has a 1 within the Is_Red column and a 0 within the others, attaining the specified numerical separation with out imposing rank.

3. Harnessing Predictive Energy: Goal (Imply) Encoding

As we established, One-Scorching Encoding fails spectacularly when a function has excessive cardinality, hundreds of distinctive values like Product ID, Zip Code, or E-mail Area. Creating hundreds of sparse columns is computationally inefficient and results in overfitting. We want a method that may compress these hundreds of classes right into a single, dense column with out dropping their predictive sign.

The reply lies in Goal Encoding, additionally regularly known as Imply Encoding. As a substitute of relying solely on the function itself, this technique strategically makes use of the goal variable (Y) to find out the numerical worth of every class.

The Idea and Mechanism

The core concept is to encode every class with the typical worth of the goal variable for all information factors belonging to that class.

As an illustration, think about you are attempting to foretell if a transaction is fraudulent (Y=1 for fraud, Y=0 for authentic). In case your categorical function is Metropolis:

- You group all transactions by Metropolis

- For every metropolis, you calculate the imply of the Y variable (the typical fraud charge)

- The town of “Miami” might need a median fraud charge of 0.10 (or 10%), and “Boston” might need 0.02 (2%)

- You substitute the explicit label “Miami” in each row with the quantity 0.10, and “Boston” with 0.02

The result’s a single, dense numerical column that instantly embeds the predictive energy of that class. The mannequin immediately is aware of that rows encoded with 0.10 are ten instances extra prone to be fraudulent than rows encoded with 0.01. This drastically reduces dimensionality whereas maximizing data density.

The Benefit and The Important Hazard

The benefit of Goal Encoding is evident: it solves the high-cardinality drawback by changing hundreds of sparse columns with only one dense, highly effective function.

Nevertheless, this technique is usually known as “essentially the most harmful encoding method” as a result of this can be very susceptible to Goal Leakage.

Goal leakage happens if you inadvertently embody data in your coaching information that might not be accessible at prediction time, resulting in artificially excellent (and ineffective) mannequin efficiency.

The Deadly Mistake: Should you calculate the typical fraud charge for Miami utilizing all the info, together with the row you might be at present encoding, you might be leaking the reply. The mannequin learns an ideal correlation between the encoded function and the goal variable, basically memorizing the coaching information as a substitute of studying generalizable patterns. When deployed on new, unseen information, the mannequin will fail spectacularly.

Stopping Leakage

To make use of Goal Encoding safely, it’s essential to be sure that the goal worth for the row being encoded isn’t used within the calculation of its function worth. This requires superior methods:

- Cross-Validation (Okay-Fold): Probably the most strong strategy is to make use of a cross-validation scheme. You cut up your information into Okay folds. When encoding one fold (the “holdout set”), you calculate the goal imply solely utilizing the info from the opposite Okay-1 folds (the “coaching set”). This ensures the function is generated from out-of-fold information.

- Smoothing: For classes with only a few information factors, the calculated imply might be unstable. Smoothing is utilized to “shrink” the imply of uncommon classes towards the worldwide common of the goal variable, making the function extra strong. A typical smoothing components typically includes weighting the class imply with the worldwide imply primarily based on the pattern measurement.

Implementation and Code Clarification

Implementing protected Goal Encoding often requires customized features or superior libraries like category_encoders, as scikit-learn’s core instruments don’t supply built-in leakage safety. The important thing precept is calculating the means outdoors of the first information being encoded.

For demonstration, we’ll use a conceptual instance, specializing in the results of the calculation:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

import pandas as pd

# Pattern information information = pd.DataFrame({ ‘Metropolis’: [‘Miami’, ‘Boston’, ‘Miami’, ‘Boston’, ‘Boston’, ‘Miami’], # Goal (Y): 1 = Fraud, 0 = Official ‘Fraud_Target’: [1, 0, 1, 0, 0, 0] })

# 1. Calculate the uncooked imply (for demonstration solely — that is UNSAFE leakage) # Actual-world use requires out-of-fold means for security! mean_encoding = information.groupby(‘Metropolis’)[‘Fraud_Target’].imply().reset_index() mean_encoding.columns = [‘City’, ‘City_Encoded_Value’]

# 2. Merge the encoded values again into the unique information df_encoded = information.merge(mean_encoding, on=‘Metropolis’, how=‘left’)

# Output the calculated means for illustration miami_mean = df_encoded[df_encoded[‘City’] == ‘Miami’][‘City_Encoded_Value’].iloc[0] boston_mean = df_encoded[df_encoded[‘City’] == ‘Boston’][‘City_Encoded_Value’].iloc[0]

print(f“Miami Encoded Worth: {miami_mean:.4f}”) print(f“Boston Encoded Worth: {boston_mean:.4f}”) print(“nFinal Encoded Knowledge (Conceptual Leakage Instance):n”, df_encoded) |

On this conceptual instance, “Miami” has three data with goal values [1, 1, 0], giving a median (imply) of 0.6667. “Boston” has three data [0, 0, 0], giving a median of 0.0000. The uncooked metropolis names are changed by these float values, dramatically growing the function’s predictive energy. Once more, to make use of this in an actual challenge, the City_Encoded_Value would have to be calculated rigorously utilizing solely the subset of information not being skilled on, which is the place the complexity lies.

Conclusion

We’ve coated the journey of reworking uncooked, summary classes into the numerical language that machine studying fashions demand. The distinction between a mannequin that works and one which excels typically comes right down to this function engineering step.

The important thing takeaway is that no single method is universally superior. As a substitute, the fitting alternative relies upon fully on the character of your information and the variety of distinctive classes you might be coping with.

To shortly summarize the three good approaches we’ve detailed:

- Ordinal Encoding: That is your answer when you will have an intrinsic rank or hierarchy amongst your classes. It’s environment friendly, including just one column to your dataset, nevertheless it have to be reserved completely for ordered information (like sizes or ranges of settlement) to keep away from introducing deceptive numerical relationships.

- One-Scorching Encoding (OHE): That is the most secure default when coping with nominal information the place order doesn’t matter and the variety of classes is small to medium. It prevents the introduction of false rank, however you have to be cautious of utilizing it on options with hundreds of distinctive values, as it may well balloon the dataset measurement and decelerate coaching.

- Goal (Imply) Encoding: That is the highly effective reply for high-cardinality options that might overwhelm OHE. By encoding the class with its imply relationship to the goal variable, you create a single, dense, and extremely predictive function. Nevertheless, as a result of it makes use of the goal variable, it calls for excessive warning and have to be carried out utilizing cross-validation or smoothing to forestall catastrophic goal leakage.

On this article, you’ll study three dependable methods — ordinal encoding, one-hot encoding, and goal (imply) encoding — for turning categorical options into model-ready numbers whereas preserving their which means.

Matters we are going to cowl embody:

- When and methods to apply ordinal (label-style) encoding for actually ordered classes.

- Utilizing one-hot encoding safely for nominal options and understanding its trade-offs.

- Making use of goal (imply) encoding for high-cardinality options with out leaking the goal.

Time to get to work.

3 Sensible Methods to Encode Categorical Options for Machine Studying

Picture by Editor

Introduction

Should you spend any time working with real-world information, you shortly notice that not every thing is available in neat, clear numbers. In reality, many of the attention-grabbing points, the issues that outline individuals, locations, and merchandise, are captured by classes. Take into consideration a typical buyer dataset: you’ve received fields like Metropolis, Product Sort, Schooling Degree, and even Favourite Coloration. These are all examples of categorical options, that are variables that may tackle certainly one of a restricted, fastened variety of values.

The issue? Whereas our human brains seamlessly course of the distinction between “Purple” and “Blue” or “New York” and “London,” the machine studying fashions we use to make predictions can’t. Fashions like linear regression, resolution timber, or neural networks are essentially mathematical features. They function by multiplying, including, and evaluating numbers. They should calculate distances, slopes, and possibilities. Once you feed a mannequin the phrase “Advertising and marketing,” it doesn’t see a job title; it simply sees a string of textual content that has no numerical worth it may well use in its equations. This lack of ability to course of textual content is why your mannequin will crash immediately if you happen to attempt to practice it on uncooked, non-numeric labels.

The first objective of function engineering, and particularly encoding, is to behave as a translator. Our job is to transform these qualitative labels into quantitative, numerical options with out dropping the underlying which means or relationships. If we do it proper, the numbers we create will carry the predictive energy of the unique classes. As an illustration, encoding should be sure that the quantity representing a high-level Schooling Degree is quantitatively “increased” than the quantity representing a decrease stage, or that the numbers representing completely different Cities mirror their distinction in buy habits.

To sort out this problem, we now have developed good methods to carry out this translation. We’ll begin with essentially the most intuitive strategies, the place we merely assign numbers primarily based on rank or create separate binary flags for every class. Then, we’ll transfer on to a robust method that makes use of the goal variable itself to construct a single, dense function that captures a class’s true predictive affect. By understanding this development, you’ll be outfitted to decide on the proper encoding technique for any categorical information you encounter.

3 Sensible Methods to Encode Categorical Options for Machine Studying: A Flowchart (click on to enlarge)

Picture by Editor

1. Preserving Order: Ordinal and Label Encoding

The primary, and easiest, translation method is designed for categorical information that isn’t only a assortment of random names, however a set of labels with an intrinsic rank or order. That is the important thing perception. Not all classes are equal; some are inherently “increased” or “extra” than others.

The most typical examples are options that characterize some kind of scale or hierarchy:

- Schooling Degree: (Excessive College => Faculty => Grasp’s => PhD)

- Buyer Satisfaction: (Very Poor => Poor => Impartial => Good => Wonderful)

- T-shirt Dimension: (Small => Medium => Massive)

Once you encounter information like this, the simplest technique to encode it’s to make use of Ordinal Encoding (typically informally known as “label encoding” when mapping classes to integers).

The Mechanism

The method is easy: you map the classes to integers primarily based on their place within the hierarchy. You don’t simply assign numbers randomly; you explicitly outline the order.

For instance, if in case you have T-shirt sizes, the mapping would appear to be this:

| Unique Class | Assigned Numerical Worth |

|---|---|

| Small (S) | 1 |

| Medium (M) | 2 |

| Massive (L) | 3 |

| Additional-Massive (XL) | 4 |

By doing this, you might be educating the machine that an XL (4) is numerically “extra” than an S (1), which appropriately displays the real-world relationship. The distinction between an M (2) and an L (3) is mathematically the identical because the distinction between an L (3) and an XL (4), a unit enhance in measurement. This ensuing single column of numbers is what you feed into your mannequin.

Introducing a False Hierarchy

Whereas Ordinal Encoding is the proper alternative for ordered information, it carries a significant threat when misapplied. It’s essential to by no means apply it to nominal (non-ordered) information.

Contemplate encoding a listing of colours: Purple, Blue, Inexperienced. Should you arbitrarily assign them: Purple = 1, Blue = 2, Inexperienced = 3, your machine studying mannequin will interpret this as a hierarchy. It’ll conclude that “Inexperienced” is twice as massive or essential as “Purple,” and that the distinction between “Blue” and “Inexperienced” is identical because the distinction between “Purple” and “Blue.” That is virtually actually false and can severely mislead your mannequin, forcing it to study non-existent numerical relationships.

The rule right here is easy and agency: use Ordinal Encoding solely when there’s a clear, defensible rank or sequence between the classes. If the classes are simply names with none intrinsic order (like kinds of fruit or cities), it’s essential to use a unique encoding method.

Implementation and Code Clarification

We are able to implement this utilizing the OrdinalEncoder from scikit-learn. The hot button is that we should explicitly outline the order of the classes ourselves.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

from sklearn.preprocessing import OrdinalEncoder import numpy as np

# Pattern information representing buyer training ranges information = np.array([[‘High School’], [‘Bachelor’s’], [‘Master’s’], [‘Bachelor’s’], [‘PhD’]])

# Outline the specific order for the encoder # This ensures that ‘Bachelor’s’ is appropriately ranked under ‘Grasp’s’ education_order = [ [‘High School’, ‘Bachelor’s’, ‘Master’s’, ‘PhD’] ]

# Initialize the encoder and go the outlined order encoder = OrdinalEncoder(classes=education_order)

# Match and rework the info encoded_data = encoder.fit_transform(information)

print(“Unique Knowledge:n”, information.flatten()) print(“nEncoded Knowledge:n”, encoded_data.flatten()) |

Within the code above, the crucial half is setting the classes parameter when initializing OrdinalEncoder. By passing the precise record education_order, we inform the encoder that ‘Excessive College’ comes first, then ‘Bachelor’s’, and so forth. The encoder then assigns the corresponding integers (0, 1, 2, 3) primarily based on this practice sequence. If we had skipped this step, the encoder might need assigned the integers primarily based on alphabetical order, which might destroy the significant hierarchy we wished to protect.

2. Eliminating Rank: One-Scorching Encoding (OHE)

As we mentioned, Ordinal Encoding solely works in case your classes have a transparent rank. However what about options which are purely nominal, which means they’ve names, however no inherent order? Take into consideration issues like Nation, Favourite Animal, or Gender. Is “France” higher than “Japan”? Is “Canine” mathematically higher than “Cat”? Completely not.

For these non-ordered options, we want a technique to encode them numerically with out introducing a false sense of hierarchy. The answer is One-Scorching Encoding (OHE), which is by far essentially the most broadly used and most secure encoding method for nominal information.

The Mechanism

The core concept behind OHE is easy: as a substitute of changing a single class column with a single quantity, it’s changed with a number of binary columns. For each distinctive class in your unique function, you create a brand-new column. These new columns are sometimes known as dummy variables.

For instance, in case your unique Coloration function has three distinctive classes (Purple, Blue, Inexperienced), OHE will create three new columns: Color_Red, Color_Blue, and Color_Green.

In any given row, solely a type of columns can be “sizzling” (a price of 1), and the remainder can be 0.

| Unique Coloration | Color_Red | Color_Blue | Color_Green |

|---|---|---|---|

| Purple | 1 | 0 | 0 |

| Blue | 0 | 1 | 0 |

| Inexperienced | 0 | 0 | 1 |

This technique is good as a result of it utterly solves the hierarchy drawback. The mannequin now treats every class as a totally separate, impartial function. “Blue” is not numerically associated to “Purple”; it simply exists in its personal binary column. That is the most secure and most dependable default alternative when you already know your classes haven’t any order.

The Commerce-off

Whereas OHE is the usual for options with low to medium cardinality (i.e., a small to reasonable variety of distinctive values, sometimes below 100), it shortly turns into an issue when coping with high-cardinality options.

Cardinality refers back to the variety of distinctive classes in a function. Contemplate a function like Zip Code in the US, which may simply have over 40,000 distinctive values. Making use of OHE would pressure you to create 40,000 brand-new binary columns. This results in two main points:

- Dimensionality: You abruptly balloon the width of your dataset, creating a large, sparse matrix (a matrix containing principally zeros). This dramatically slows down the coaching course of for many algorithms.

- Overfitting: Many classes will solely seem a couple of times in your dataset. The mannequin may assign an excessive weight to certainly one of these uncommon, particular columns, basically memorizing its one look slightly than studying a common sample.

When a function has hundreds of distinctive classes, OHE is solely impractical. This limitation forces us to look past OHE and leads us on to our third, extra superior method for coping with information at a large scale.

Implementation and Code Clarification

In Python, the OneHotEncoder from scikit-learn or the get_dummies() perform from pandas are the usual instruments. The pandas technique is usually simpler for fast transformation:

|

import pandas as pd

# Pattern information with a nominal function: Coloration information = pd.DataFrame({ ‘ID’: [1, 2, 3, 4, 5], ‘Coloration’: [‘Red’, ‘Blue’, ‘Red’, ‘Green’, ‘Blue’] })

# 1. Apply One-Scorching Encoding utilizing pandas get_dummies df_encoded = pd.get_dummies(information, columns=[‘Color’], prefix=‘Is’)

print(df_encoded) |

On this code, we go our DataFrame information and specify the column we wish to rework (Coloration). The prefix='Is' merely provides a clear prefix (like ‘Is_Red‘) to the brand new columns for higher readability. The output DataFrame retains the ID column and replaces the one Coloration column with three new, impartial binary options: Is_Red, Is_Blue, and Is_Green. A row that was initially ‘Purple’ now has a 1 within the Is_Red column and a 0 within the others, attaining the specified numerical separation with out imposing rank.

3. Harnessing Predictive Energy: Goal (Imply) Encoding

As we established, One-Scorching Encoding fails spectacularly when a function has excessive cardinality, hundreds of distinctive values like Product ID, Zip Code, or E-mail Area. Creating hundreds of sparse columns is computationally inefficient and results in overfitting. We want a method that may compress these hundreds of classes right into a single, dense column with out dropping their predictive sign.

The reply lies in Goal Encoding, additionally regularly known as Imply Encoding. As a substitute of relying solely on the function itself, this technique strategically makes use of the goal variable (Y) to find out the numerical worth of every class.

The Idea and Mechanism

The core concept is to encode every class with the typical worth of the goal variable for all information factors belonging to that class.

As an illustration, think about you are attempting to foretell if a transaction is fraudulent (Y=1 for fraud, Y=0 for authentic). In case your categorical function is Metropolis:

- You group all transactions by Metropolis

- For every metropolis, you calculate the imply of the Y variable (the typical fraud charge)

- The town of “Miami” might need a median fraud charge of 0.10 (or 10%), and “Boston” might need 0.02 (2%)

- You substitute the explicit label “Miami” in each row with the quantity 0.10, and “Boston” with 0.02

The result’s a single, dense numerical column that instantly embeds the predictive energy of that class. The mannequin immediately is aware of that rows encoded with 0.10 are ten instances extra prone to be fraudulent than rows encoded with 0.01. This drastically reduces dimensionality whereas maximizing data density.

The Benefit and The Important Hazard

The benefit of Goal Encoding is evident: it solves the high-cardinality drawback by changing hundreds of sparse columns with only one dense, highly effective function.

Nevertheless, this technique is usually known as “essentially the most harmful encoding method” as a result of this can be very susceptible to Goal Leakage.

Goal leakage happens if you inadvertently embody data in your coaching information that might not be accessible at prediction time, resulting in artificially excellent (and ineffective) mannequin efficiency.

The Deadly Mistake: Should you calculate the typical fraud charge for Miami utilizing all the info, together with the row you might be at present encoding, you might be leaking the reply. The mannequin learns an ideal correlation between the encoded function and the goal variable, basically memorizing the coaching information as a substitute of studying generalizable patterns. When deployed on new, unseen information, the mannequin will fail spectacularly.

Stopping Leakage

To make use of Goal Encoding safely, it’s essential to be sure that the goal worth for the row being encoded isn’t used within the calculation of its function worth. This requires superior methods:

- Cross-Validation (Okay-Fold): Probably the most strong strategy is to make use of a cross-validation scheme. You cut up your information into Okay folds. When encoding one fold (the “holdout set”), you calculate the goal imply solely utilizing the info from the opposite Okay-1 folds (the “coaching set”). This ensures the function is generated from out-of-fold information.

- Smoothing: For classes with only a few information factors, the calculated imply might be unstable. Smoothing is utilized to “shrink” the imply of uncommon classes towards the worldwide common of the goal variable, making the function extra strong. A typical smoothing components typically includes weighting the class imply with the worldwide imply primarily based on the pattern measurement.

Implementation and Code Clarification

Implementing protected Goal Encoding often requires customized features or superior libraries like category_encoders, as scikit-learn’s core instruments don’t supply built-in leakage safety. The important thing precept is calculating the means outdoors of the first information being encoded.

For demonstration, we’ll use a conceptual instance, specializing in the results of the calculation:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

import pandas as pd

# Pattern information information = pd.DataFrame({ ‘Metropolis’: [‘Miami’, ‘Boston’, ‘Miami’, ‘Boston’, ‘Boston’, ‘Miami’], # Goal (Y): 1 = Fraud, 0 = Official ‘Fraud_Target’: [1, 0, 1, 0, 0, 0] })

# 1. Calculate the uncooked imply (for demonstration solely — that is UNSAFE leakage) # Actual-world use requires out-of-fold means for security! mean_encoding = information.groupby(‘Metropolis’)[‘Fraud_Target’].imply().reset_index() mean_encoding.columns = [‘City’, ‘City_Encoded_Value’]

# 2. Merge the encoded values again into the unique information df_encoded = information.merge(mean_encoding, on=‘Metropolis’, how=‘left’)

# Output the calculated means for illustration miami_mean = df_encoded[df_encoded[‘City’] == ‘Miami’][‘City_Encoded_Value’].iloc[0] boston_mean = df_encoded[df_encoded[‘City’] == ‘Boston’][‘City_Encoded_Value’].iloc[0]

print(f“Miami Encoded Worth: {miami_mean:.4f}”) print(f“Boston Encoded Worth: {boston_mean:.4f}”) print(“nFinal Encoded Knowledge (Conceptual Leakage Instance):n”, df_encoded) |

On this conceptual instance, “Miami” has three data with goal values [1, 1, 0], giving a median (imply) of 0.6667. “Boston” has three data [0, 0, 0], giving a median of 0.0000. The uncooked metropolis names are changed by these float values, dramatically growing the function’s predictive energy. Once more, to make use of this in an actual challenge, the City_Encoded_Value would have to be calculated rigorously utilizing solely the subset of information not being skilled on, which is the place the complexity lies.

Conclusion

We’ve coated the journey of reworking uncooked, summary classes into the numerical language that machine studying fashions demand. The distinction between a mannequin that works and one which excels typically comes right down to this function engineering step.

The important thing takeaway is that no single method is universally superior. As a substitute, the fitting alternative relies upon fully on the character of your information and the variety of distinctive classes you might be coping with.

To shortly summarize the three good approaches we’ve detailed:

- Ordinal Encoding: That is your answer when you will have an intrinsic rank or hierarchy amongst your classes. It’s environment friendly, including just one column to your dataset, nevertheless it have to be reserved completely for ordered information (like sizes or ranges of settlement) to keep away from introducing deceptive numerical relationships.

- One-Scorching Encoding (OHE): That is the most secure default when coping with nominal information the place order doesn’t matter and the variety of classes is small to medium. It prevents the introduction of false rank, however you have to be cautious of utilizing it on options with hundreds of distinctive values, as it may well balloon the dataset measurement and decelerate coaching.

- Goal (Imply) Encoding: That is the highly effective reply for high-cardinality options that might overwhelm OHE. By encoding the class with its imply relationship to the goal variable, you create a single, dense, and extremely predictive function. Nevertheless, as a result of it makes use of the goal variable, it calls for excessive warning and have to be carried out utilizing cross-validation or smoothing to forestall catastrophic goal leakage.