On this article, you’ll be taught what information leakage is, the way it silently inflates mannequin efficiency, and sensible patterns for stopping it throughout frequent workflows.

Subjects we are going to cowl embrace:

- Figuring out goal leakage and eradicating target-derived options.

- Stopping practice–take a look at contamination by ordering preprocessing appropriately.

- Avoiding temporal leakage in time sequence with correct function design and splits.

Let’s get began.

3 Delicate Methods Knowledge Leakage Can Damage Your Fashions (and The way to Forestall It)

Picture by Editor

Introduction

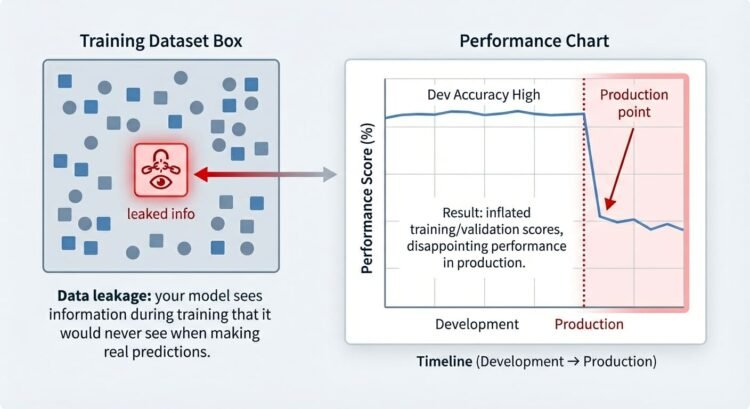

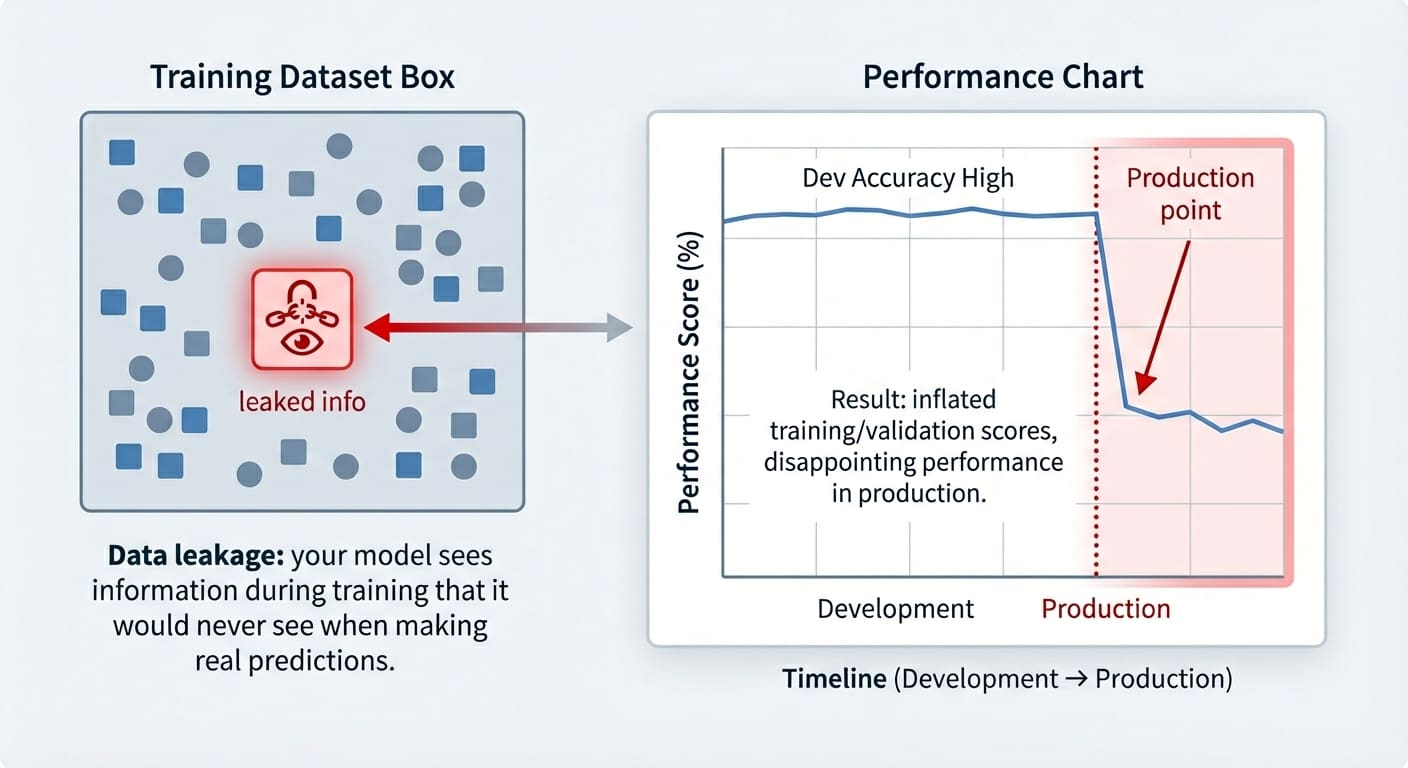

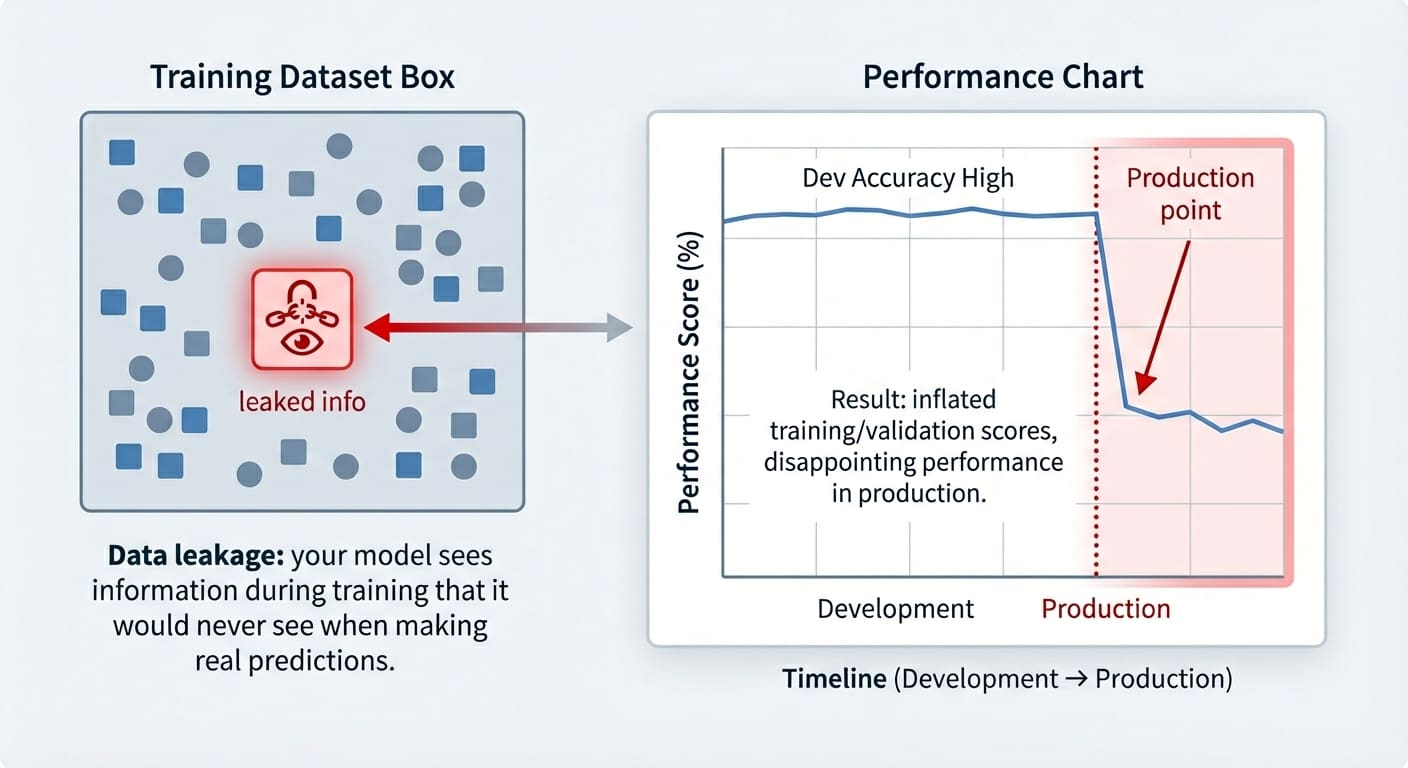

Knowledge leakage is an typically unintended drawback which will occur in machine studying modeling. It occurs when the info used for coaching incorporates info that “shouldn’t be identified” at this stage — i.e. this info has leaked and turn into an “intruder” inside the coaching set. Consequently, the educated mannequin has gained a form of unfair benefit, however solely within the very quick run: it’d carry out suspiciously nicely on the coaching examples themselves (and validation ones, at most), nevertheless it later performs fairly poorly on future unseen information.

This text reveals three sensible machine studying situations wherein information leakage could occur, highlighting the way it impacts educated fashions, and showcasing methods to stop this concern in every state of affairs. The info leakage situations coated are:

- Goal leakage

- Practice-test break up contamination

- Temporal leakage in time sequence information

Knowledge Leakage vs. Overfitting

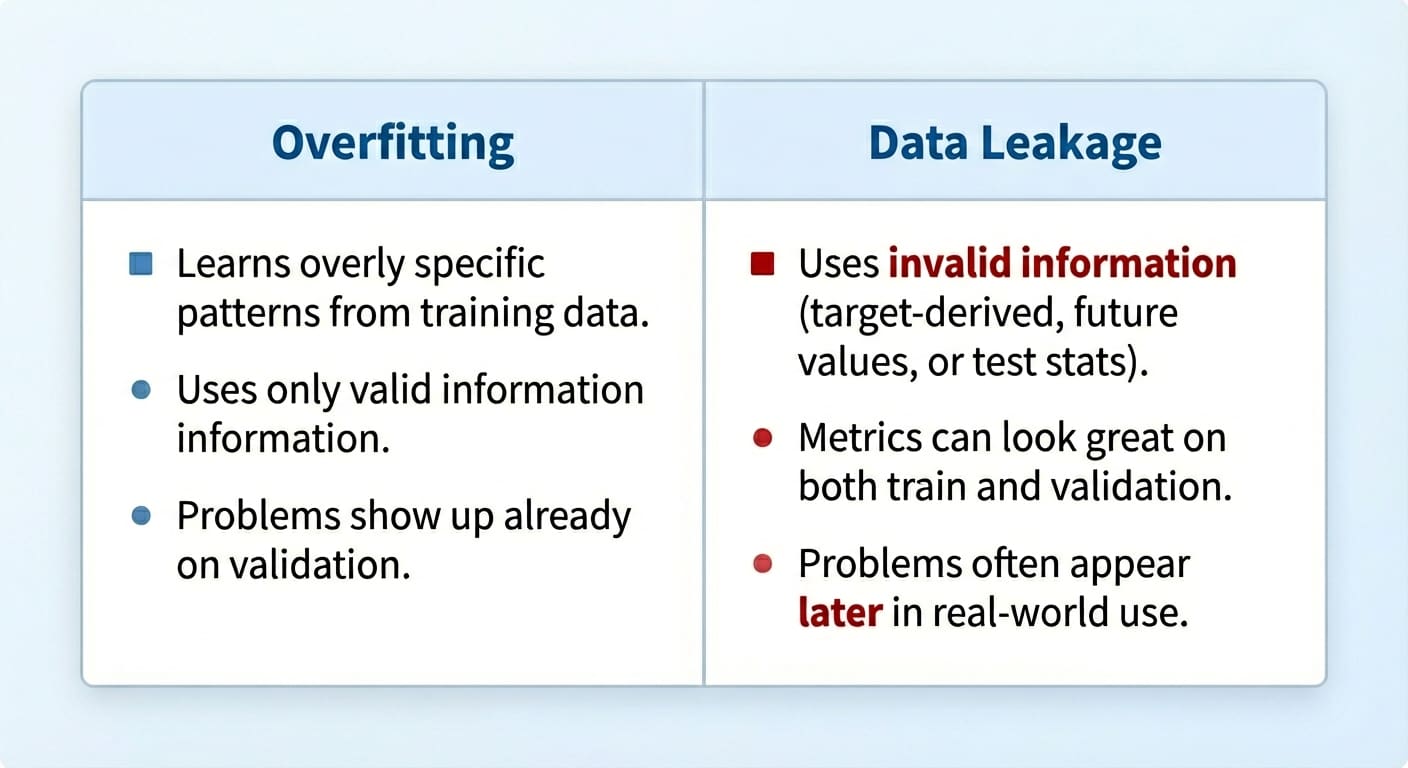

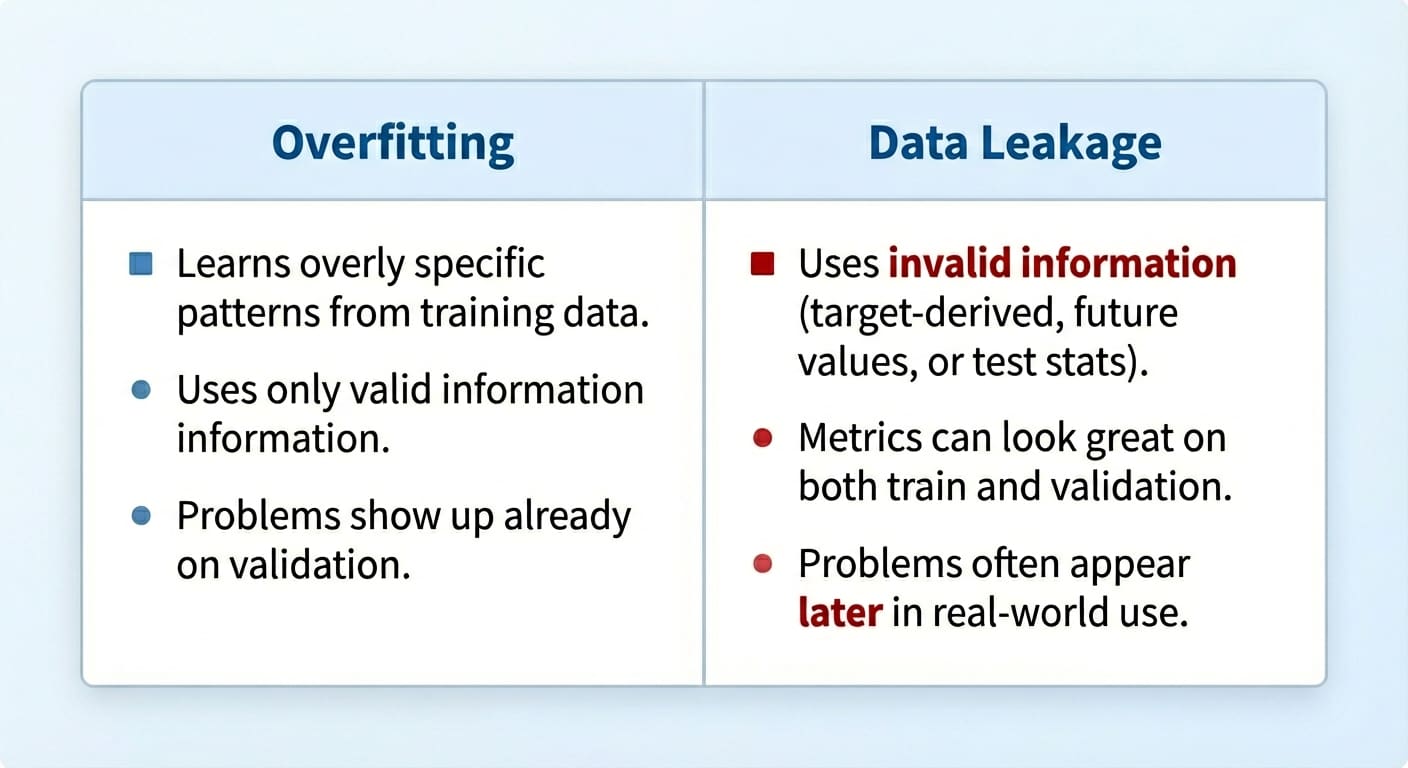

Despite the fact that information leakage and overfitting can produce similar-looking outcomes, they’re totally different issues.

Overfitting arises when a mannequin memorizes overly particular patterns from the coaching set, however the mannequin isn’t essentially receiving any illegitimate info it shouldn’t know on the coaching stage — it’s simply studying excessively from the coaching information.

Knowledge leakage, against this, happens when the mannequin is uncovered to info it mustn’t have throughout coaching. Furthermore, whereas overfitting usually arises as a poorly generalizing mannequin on the validation set, the results of knowledge leakage could solely floor at a later stage, typically already in manufacturing when the mannequin receives really unseen information.

Knowledge leakage vs. overfitting

Picture by Editor

Let’s take a better have a look at 3 particular information leakage situations.

State of affairs 1: Goal Leakage

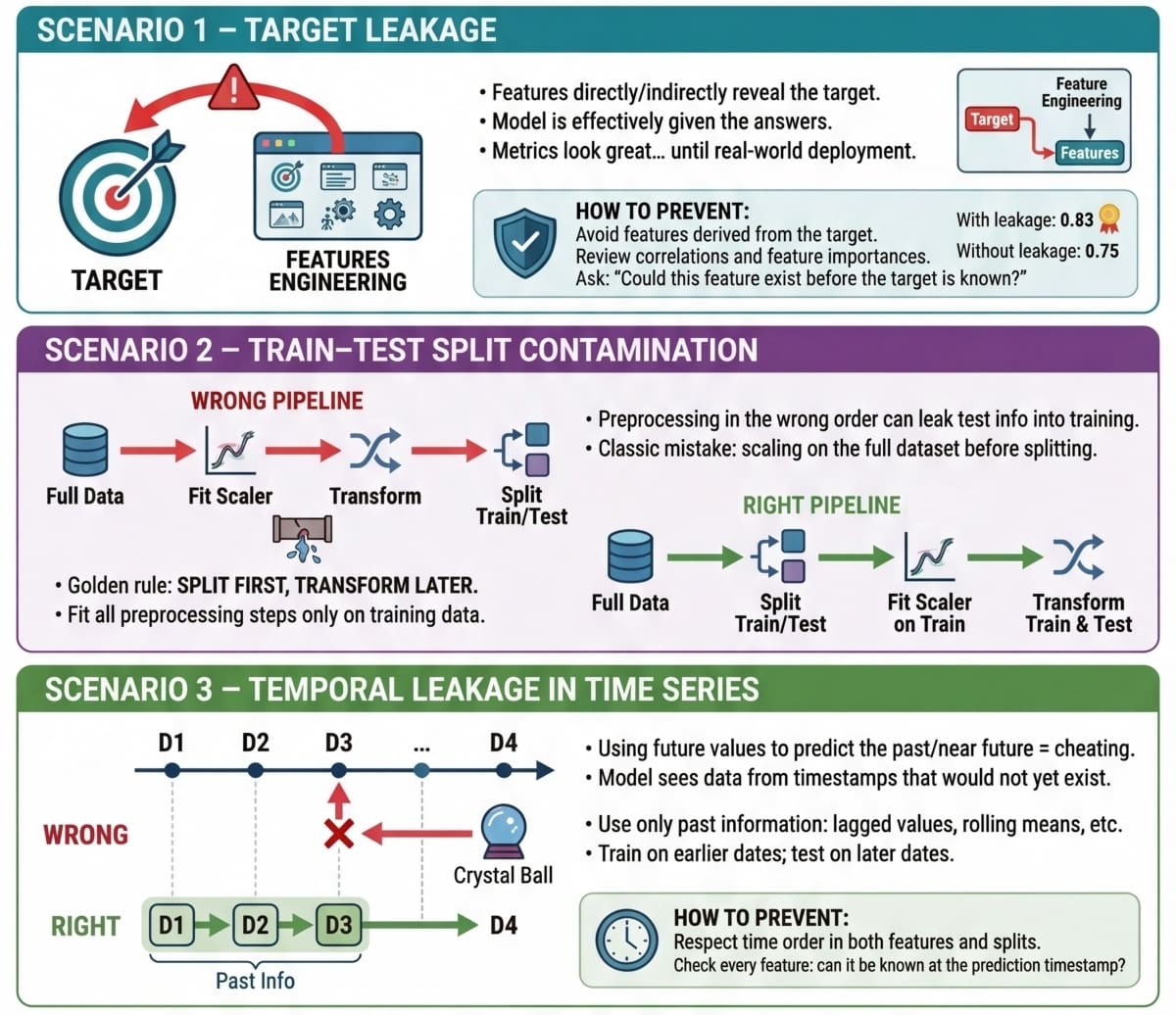

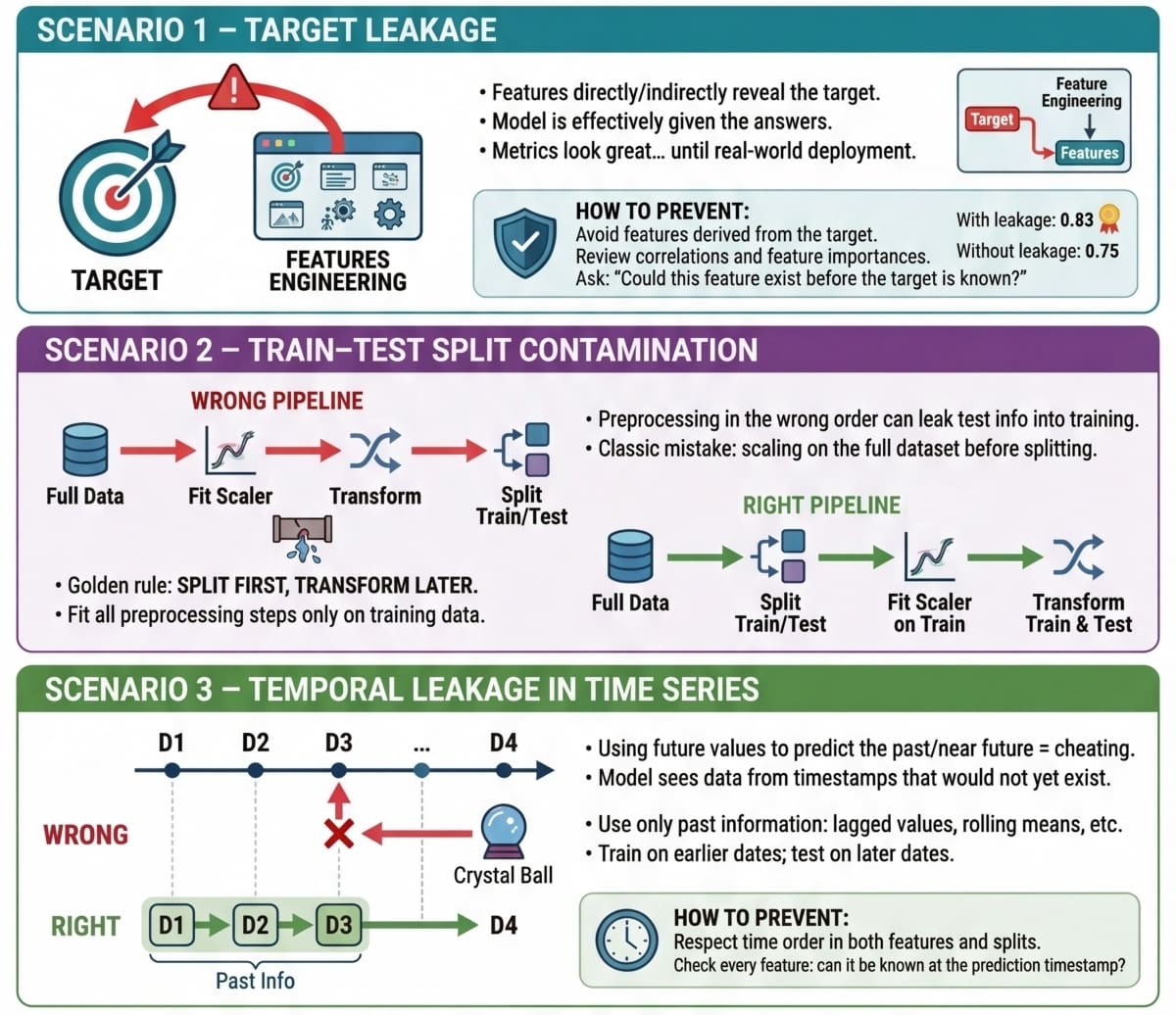

Goal leakage happens when options include info that instantly or not directly reveals the goal variable. Generally this may be the results of a wrongly utilized function engineering course of wherein target-derived options have been launched within the dataset. Passing coaching information containing such options to a mannequin is corresponding to a pupil dishonest on an examination: a part of the solutions they need to provide you with by themselves has been supplied to them.

The examples on this article use scikit-learn, Pandas, and NumPy.

Let’s see an instance of how this drawback could come up when coaching a dataset to foretell diabetes. To take action, we are going to deliberately incorporate a predictor function derived from the goal variable, 'goal' (after all, this concern in observe tends to occur accidentally, however we’re injecting it on objective on this instance for instance how the issue manifests!):

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

from sklearn.datasets import load_diabetes import pandas as pd import numpy as np from sklearn.linear_model import LogisticRegression from sklearn.model_selection import train_test_break up

X, y = load_diabetes(return_X_y=True, as_frame=True) df = X.copy() df[‘target’] = (y > y.median()).astype(int) # Binary final result

# Add leaky function: associated to the goal however with some random noise df[‘leaky_feature’] = df[‘target’] + np.random.regular(0, 0.5, dimension=len(df))

# Practice and take a look at mannequin with leaky function X_leaky = df.drop(columns=[‘target’]) y = df[‘target’]

X_train, X_test, y_train, y_test = train_test_split(X_leaky, y, random_state=0, stratify=y) clf = LogisticRegression(max_iter=1000).match(X_train, y_train) print(“Check accuracy with leakage:”, clf.rating(X_test, y_test)) |

Now, to check accuracy outcomes on the take a look at set with out the “leaky function”, we are going to take away it and retrain the mannequin:

|

# Eradicating leaky function and repeating the method X_clean = df.drop(columns=[‘target’, ‘leaky_feature’]) X_train, X_test, y_train, y_test = train_test_split(X_clean, y, random_state=0, stratify=y) clf = LogisticRegression(max_iter=1000).match(X_train, y_train) print(“Check accuracy with out leakage:”, clf.rating(X_test, y_test)) |

Chances are you’ll get a consequence like:

|

Check accuracy with leakage: 0.8288288288288288 Check accuracy with out leakage: 0.7477477477477478 |

Which makes us marvel: wasn’t information leakage speculated to break our mannequin, because the article title suggests? In reality, it’s, and that is why information leakage could be tough to identify till it could be late: as talked about within the introduction, the issue typically manifests as inflated accuracy each in coaching and in validation/take a look at units, with the efficiency downfall solely noticeable as soon as the mannequin is uncovered to new, real-world information. Methods to stop it ideally embrace a mix of steps like fastidiously analyzing correlations between the goal and the remainder of the options, checking function weights in a newly educated mannequin and seeing if any function has an excessively giant weight, and so forth.

State of affairs 2: Practice-Check Cut up Contamination

One other very frequent information leakage state of affairs typically arises once we don’t put together the info in the suitable order, as a result of sure, order issues in information preparation and preprocessing. Particularly, scaling the info earlier than splitting it into coaching and take a look at/validation units could be the right recipe to by chance (and really subtly) incorporate take a look at information info — by way of the statistics used for scaling — into the coaching course of.

These fast code excerpts primarily based on the favored wine dataset present the unsuitable vs. proper technique to apply scaling and splitting (it’s a matter of order, as you’ll discover!):

|

import pandas as pd from sklearn.datasets import load_wine from sklearn.model_selection import train_test_split from sklearn.preprocessing import StandardScaler from sklearn.linear_model import LogisticRegression

X, y = load_wine(return_X_y=True, as_frame=True)

# WRONG: scaling the total dataset earlier than splitting could trigger leakage scaler = StandardScaler().match(X) X_scaled = scaler.rework(X)

X_train, X_test, y_train, y_test = train_test_split(X_scaled, y, test_size=0.3, random_state=42, stratify=y)

clf = LogisticRegression(max_iter=2000).match(X_train, y_train) print(“Accuracy with leakage:”, clf.rating(X_test, y_test)) |

The precise strategy:

|

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42, stratify=y)

scaler = StandardScaler().match(X_train) # the scaler solely “learns” from coaching information… X_train_scaled = scaler.rework(X_train) X_test_scaled = scaler.rework(X_test) # … however, after all, it’s utilized to each partitions

clf = LogisticRegression(max_iter=2000).match(X_train_scaled, y_train) print(“Accuracy with out leakage:”, clf.rating(X_test_scaled, y_test)) |

Relying on the particular drawback and dataset, making use of the suitable or unsuitable strategy will make little or no distinction as a result of typically the test-specific leaked info could statistically be similar to that within the coaching information. Don’t take this with no consideration in all datasets and, as a matter of fine observe, at all times break up earlier than scaling.

State of affairs 3: Temporal Leakage in Time Sequence Knowledge

The final leakage state of affairs is inherent to time sequence information, and it happens when details about the longer term — i.e. info to be forecasted by the mannequin — is in some way leaked into the coaching set. For instance, utilizing future values to foretell previous ones in a inventory pricing state of affairs isn’t the suitable strategy to construct a forecasting mannequin.

This instance considers a synthetically generated small dataset of every day inventory costs, and we deliberately add a brand new predictor variable that leaks in details about the longer term that the mannequin shouldn’t pay attention to at coaching time. Once more, we do that on objective right here for instance the difficulty, however in real-world situations this isn’t too uncommon to occur attributable to components like inadvertent function engineering processes:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 |

import pandas as pd import numpy as np from sklearn.linear_model import LogisticRegression

np.random.seed(0) dates = pd.date_range(“2020-01-01”, intervals=300)

# Artificial information technology with some patterns to introduce temporal predictability pattern = np.linspace(100, 150, 300) seasonality = 5 * np.sin(np.linspace(0, 10*np.pi, 300))

# Autocorrelated small noise: earlier day information partly influences subsequent day noise = np.random.randn(300) * 0.5 for i in vary(1, 300): noise[i] += 0.7 * noise[i–1]

costs = pattern + seasonality + noise df = pd.DataFrame({“date”: dates, “worth”: costs})

# WRONG CASE: introducing leaky function (next-day worth) df[‘future_price’] = df[‘price’].shift(–1) df = df.dropna(subset=[‘future_price’])

X_leaky = df[[‘price’, ‘future_price’]] y = (df[‘future_price’] > df[‘price’]).astype(int)

X_train, X_test = X_leaky.iloc[:250], X_leaky.iloc[250:] y_train, y_test = y.iloc[:250], y.iloc[250:]

clf = LogisticRegression(max_iter=500) clf.match(X_train, y_train) print(“Accuracy with leakage:”, clf.rating(X_test, y_test)) |

If we wished to complement our time sequence dataset with new, significant options for higher prediction, the suitable strategy is to include info describing the previous, relatively than the longer term. Rolling statistics are an effective way to do that, as proven on this instance, which additionally reformulates the predictive job into classification as a substitute of numerical forecasting:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

# New goal: next-day path (enhance vs lower) df[‘target’] = (df[‘price’].shift(–1) > df[‘price’]).astype(int)

# Added function associated to the previous: 3-day rolling imply df[‘rolling_mean’] = df[‘price’].rolling(3).imply()

df_clean = df.dropna(subset=[‘rolling_mean’, ‘target’]) X_clean = df_clean[[‘rolling_mean’]] y_clean = df_clean[‘target’]

X_train, X_test = X_clean.iloc[:250], X_clean.iloc[250:] y_train, y_test = y_clean.iloc[:250], y_clean.iloc[250:]

from sklearn.linear_model import LogisticRegression clf = LogisticRegression(max_iter=500) clf.match(X_train, y_train) print(“Accuracy with out leakage:”, clf.rating(X_test, y_test)) |

As soon as once more, you might even see inflated outcomes for the unsuitable case, however be warned: issues could flip the other way up as soon as in manufacturing if there was impactful information leakage alongside the way in which.

Knowledge leakage situations summarized

Picture by Editor

Wrapping Up

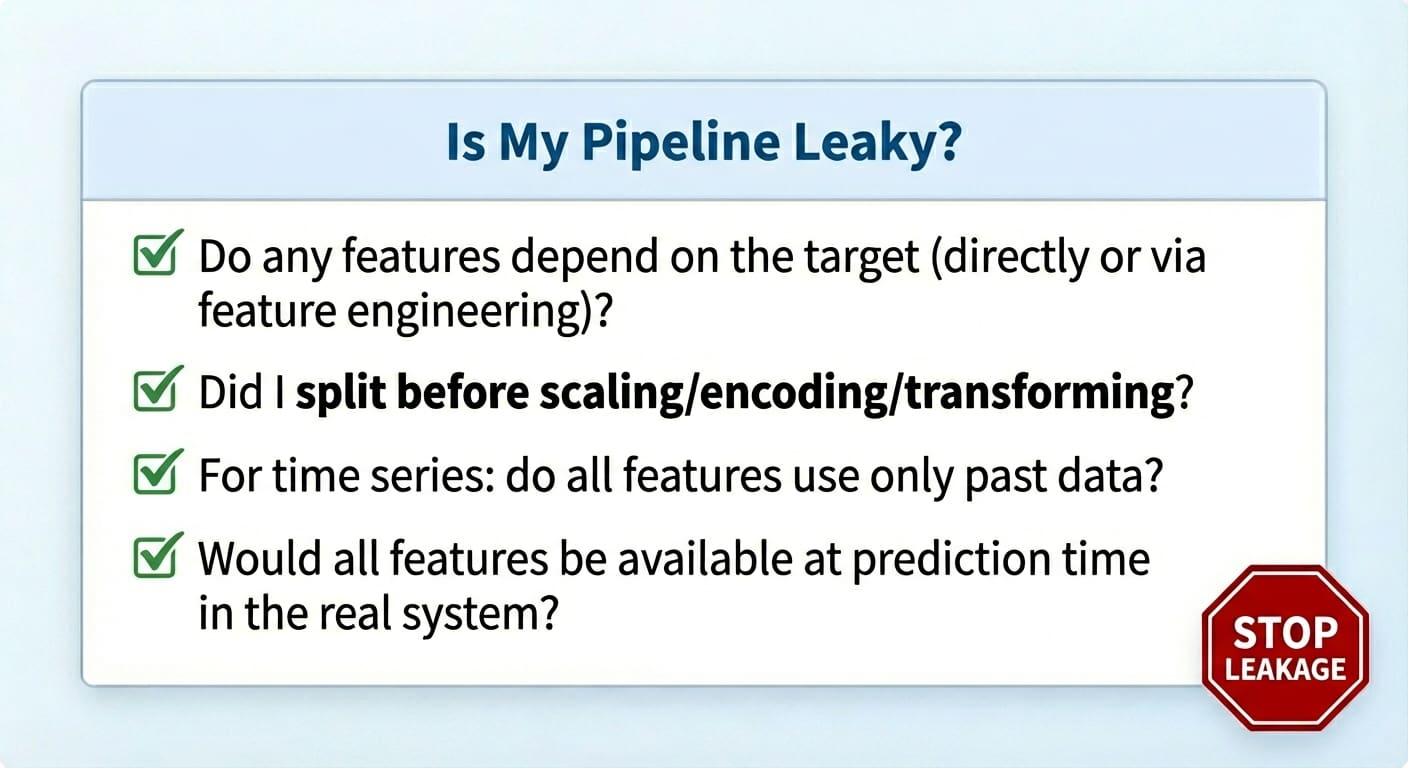

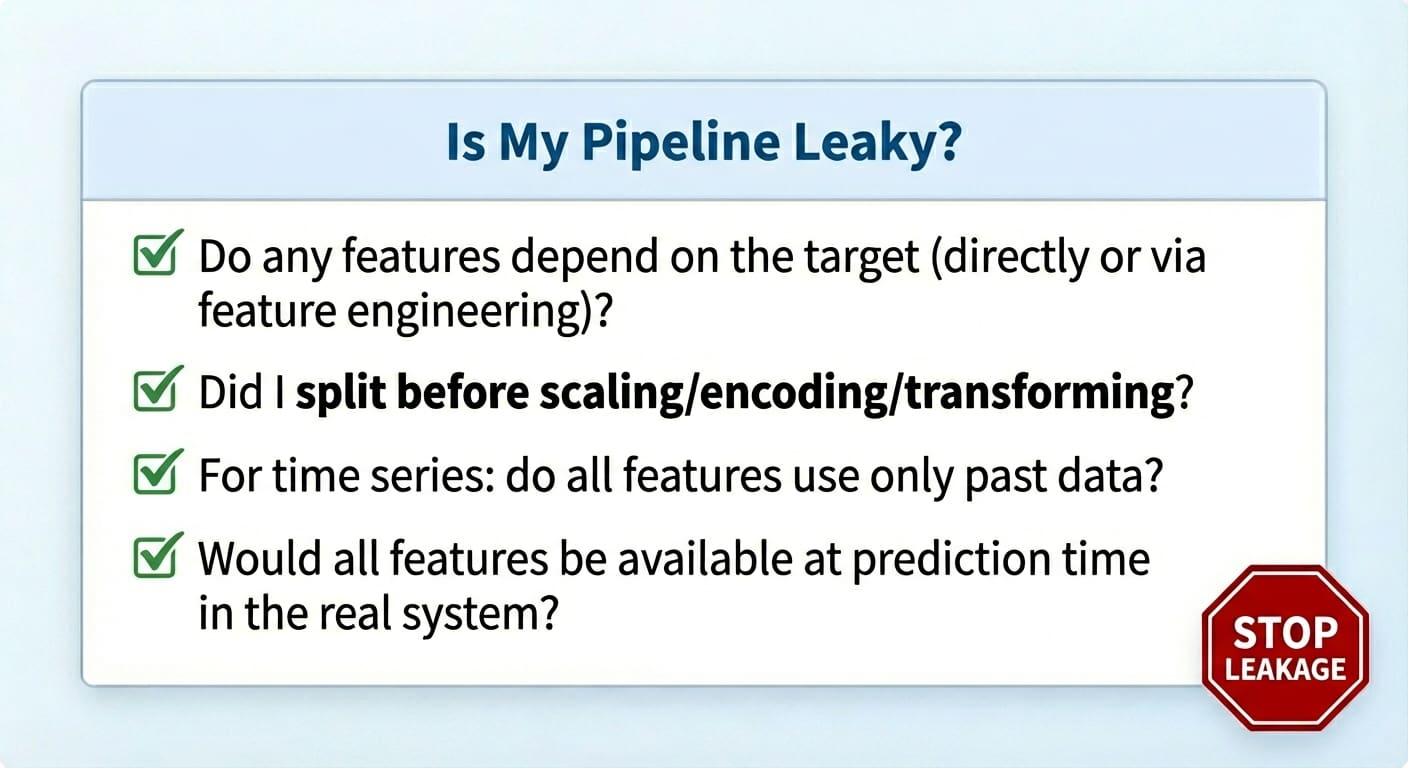

This text confirmed, by way of three sensible situations, some kinds wherein information leakage could manifest throughout machine studying modeling processes, outlining their affect and methods to navigate these points, which, whereas apparently innocent at first, could later wreak havoc (actually!) whereas in manufacturing.

Knowledge leakage guidelines

Picture by Editor

On this article, you’ll be taught what information leakage is, the way it silently inflates mannequin efficiency, and sensible patterns for stopping it throughout frequent workflows.

Subjects we are going to cowl embrace:

- Figuring out goal leakage and eradicating target-derived options.

- Stopping practice–take a look at contamination by ordering preprocessing appropriately.

- Avoiding temporal leakage in time sequence with correct function design and splits.

Let’s get began.

3 Delicate Methods Knowledge Leakage Can Damage Your Fashions (and The way to Forestall It)

Picture by Editor

Introduction

Knowledge leakage is an typically unintended drawback which will occur in machine studying modeling. It occurs when the info used for coaching incorporates info that “shouldn’t be identified” at this stage — i.e. this info has leaked and turn into an “intruder” inside the coaching set. Consequently, the educated mannequin has gained a form of unfair benefit, however solely within the very quick run: it’d carry out suspiciously nicely on the coaching examples themselves (and validation ones, at most), nevertheless it later performs fairly poorly on future unseen information.

This text reveals three sensible machine studying situations wherein information leakage could occur, highlighting the way it impacts educated fashions, and showcasing methods to stop this concern in every state of affairs. The info leakage situations coated are:

- Goal leakage

- Practice-test break up contamination

- Temporal leakage in time sequence information

Knowledge Leakage vs. Overfitting

Despite the fact that information leakage and overfitting can produce similar-looking outcomes, they’re totally different issues.

Overfitting arises when a mannequin memorizes overly particular patterns from the coaching set, however the mannequin isn’t essentially receiving any illegitimate info it shouldn’t know on the coaching stage — it’s simply studying excessively from the coaching information.

Knowledge leakage, against this, happens when the mannequin is uncovered to info it mustn’t have throughout coaching. Furthermore, whereas overfitting usually arises as a poorly generalizing mannequin on the validation set, the results of knowledge leakage could solely floor at a later stage, typically already in manufacturing when the mannequin receives really unseen information.

Knowledge leakage vs. overfitting

Picture by Editor

Let’s take a better have a look at 3 particular information leakage situations.

State of affairs 1: Goal Leakage

Goal leakage happens when options include info that instantly or not directly reveals the goal variable. Generally this may be the results of a wrongly utilized function engineering course of wherein target-derived options have been launched within the dataset. Passing coaching information containing such options to a mannequin is corresponding to a pupil dishonest on an examination: a part of the solutions they need to provide you with by themselves has been supplied to them.

The examples on this article use scikit-learn, Pandas, and NumPy.

Let’s see an instance of how this drawback could come up when coaching a dataset to foretell diabetes. To take action, we are going to deliberately incorporate a predictor function derived from the goal variable, 'goal' (after all, this concern in observe tends to occur accidentally, however we’re injecting it on objective on this instance for instance how the issue manifests!):

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

from sklearn.datasets import load_diabetes import pandas as pd import numpy as np from sklearn.linear_model import LogisticRegression from sklearn.model_selection import train_test_break up

X, y = load_diabetes(return_X_y=True, as_frame=True) df = X.copy() df[‘target’] = (y > y.median()).astype(int) # Binary final result

# Add leaky function: associated to the goal however with some random noise df[‘leaky_feature’] = df[‘target’] + np.random.regular(0, 0.5, dimension=len(df))

# Practice and take a look at mannequin with leaky function X_leaky = df.drop(columns=[‘target’]) y = df[‘target’]

X_train, X_test, y_train, y_test = train_test_split(X_leaky, y, random_state=0, stratify=y) clf = LogisticRegression(max_iter=1000).match(X_train, y_train) print(“Check accuracy with leakage:”, clf.rating(X_test, y_test)) |

Now, to check accuracy outcomes on the take a look at set with out the “leaky function”, we are going to take away it and retrain the mannequin:

|

# Eradicating leaky function and repeating the method X_clean = df.drop(columns=[‘target’, ‘leaky_feature’]) X_train, X_test, y_train, y_test = train_test_split(X_clean, y, random_state=0, stratify=y) clf = LogisticRegression(max_iter=1000).match(X_train, y_train) print(“Check accuracy with out leakage:”, clf.rating(X_test, y_test)) |

Chances are you’ll get a consequence like:

|

Check accuracy with leakage: 0.8288288288288288 Check accuracy with out leakage: 0.7477477477477478 |

Which makes us marvel: wasn’t information leakage speculated to break our mannequin, because the article title suggests? In reality, it’s, and that is why information leakage could be tough to identify till it could be late: as talked about within the introduction, the issue typically manifests as inflated accuracy each in coaching and in validation/take a look at units, with the efficiency downfall solely noticeable as soon as the mannequin is uncovered to new, real-world information. Methods to stop it ideally embrace a mix of steps like fastidiously analyzing correlations between the goal and the remainder of the options, checking function weights in a newly educated mannequin and seeing if any function has an excessively giant weight, and so forth.

State of affairs 2: Practice-Check Cut up Contamination

One other very frequent information leakage state of affairs typically arises once we don’t put together the info in the suitable order, as a result of sure, order issues in information preparation and preprocessing. Particularly, scaling the info earlier than splitting it into coaching and take a look at/validation units could be the right recipe to by chance (and really subtly) incorporate take a look at information info — by way of the statistics used for scaling — into the coaching course of.

These fast code excerpts primarily based on the favored wine dataset present the unsuitable vs. proper technique to apply scaling and splitting (it’s a matter of order, as you’ll discover!):

|

import pandas as pd from sklearn.datasets import load_wine from sklearn.model_selection import train_test_split from sklearn.preprocessing import StandardScaler from sklearn.linear_model import LogisticRegression

X, y = load_wine(return_X_y=True, as_frame=True)

# WRONG: scaling the total dataset earlier than splitting could trigger leakage scaler = StandardScaler().match(X) X_scaled = scaler.rework(X)

X_train, X_test, y_train, y_test = train_test_split(X_scaled, y, test_size=0.3, random_state=42, stratify=y)

clf = LogisticRegression(max_iter=2000).match(X_train, y_train) print(“Accuracy with leakage:”, clf.rating(X_test, y_test)) |

The precise strategy:

|

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42, stratify=y)

scaler = StandardScaler().match(X_train) # the scaler solely “learns” from coaching information… X_train_scaled = scaler.rework(X_train) X_test_scaled = scaler.rework(X_test) # … however, after all, it’s utilized to each partitions

clf = LogisticRegression(max_iter=2000).match(X_train_scaled, y_train) print(“Accuracy with out leakage:”, clf.rating(X_test_scaled, y_test)) |

Relying on the particular drawback and dataset, making use of the suitable or unsuitable strategy will make little or no distinction as a result of typically the test-specific leaked info could statistically be similar to that within the coaching information. Don’t take this with no consideration in all datasets and, as a matter of fine observe, at all times break up earlier than scaling.

State of affairs 3: Temporal Leakage in Time Sequence Knowledge

The final leakage state of affairs is inherent to time sequence information, and it happens when details about the longer term — i.e. info to be forecasted by the mannequin — is in some way leaked into the coaching set. For instance, utilizing future values to foretell previous ones in a inventory pricing state of affairs isn’t the suitable strategy to construct a forecasting mannequin.

This instance considers a synthetically generated small dataset of every day inventory costs, and we deliberately add a brand new predictor variable that leaks in details about the longer term that the mannequin shouldn’t pay attention to at coaching time. Once more, we do that on objective right here for instance the difficulty, however in real-world situations this isn’t too uncommon to occur attributable to components like inadvertent function engineering processes:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 |

import pandas as pd import numpy as np from sklearn.linear_model import LogisticRegression

np.random.seed(0) dates = pd.date_range(“2020-01-01”, intervals=300)

# Artificial information technology with some patterns to introduce temporal predictability pattern = np.linspace(100, 150, 300) seasonality = 5 * np.sin(np.linspace(0, 10*np.pi, 300))

# Autocorrelated small noise: earlier day information partly influences subsequent day noise = np.random.randn(300) * 0.5 for i in vary(1, 300): noise[i] += 0.7 * noise[i–1]

costs = pattern + seasonality + noise df = pd.DataFrame({“date”: dates, “worth”: costs})

# WRONG CASE: introducing leaky function (next-day worth) df[‘future_price’] = df[‘price’].shift(–1) df = df.dropna(subset=[‘future_price’])

X_leaky = df[[‘price’, ‘future_price’]] y = (df[‘future_price’] > df[‘price’]).astype(int)

X_train, X_test = X_leaky.iloc[:250], X_leaky.iloc[250:] y_train, y_test = y.iloc[:250], y.iloc[250:]

clf = LogisticRegression(max_iter=500) clf.match(X_train, y_train) print(“Accuracy with leakage:”, clf.rating(X_test, y_test)) |

If we wished to complement our time sequence dataset with new, significant options for higher prediction, the suitable strategy is to include info describing the previous, relatively than the longer term. Rolling statistics are an effective way to do that, as proven on this instance, which additionally reformulates the predictive job into classification as a substitute of numerical forecasting:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

# New goal: next-day path (enhance vs lower) df[‘target’] = (df[‘price’].shift(–1) > df[‘price’]).astype(int)

# Added function associated to the previous: 3-day rolling imply df[‘rolling_mean’] = df[‘price’].rolling(3).imply()

df_clean = df.dropna(subset=[‘rolling_mean’, ‘target’]) X_clean = df_clean[[‘rolling_mean’]] y_clean = df_clean[‘target’]

X_train, X_test = X_clean.iloc[:250], X_clean.iloc[250:] y_train, y_test = y_clean.iloc[:250], y_clean.iloc[250:]

from sklearn.linear_model import LogisticRegression clf = LogisticRegression(max_iter=500) clf.match(X_train, y_train) print(“Accuracy with out leakage:”, clf.rating(X_test, y_test)) |

As soon as once more, you might even see inflated outcomes for the unsuitable case, however be warned: issues could flip the other way up as soon as in manufacturing if there was impactful information leakage alongside the way in which.

Knowledge leakage situations summarized

Picture by Editor

Wrapping Up

This text confirmed, by way of three sensible situations, some kinds wherein information leakage could manifest throughout machine studying modeling processes, outlining their affect and methods to navigate these points, which, whereas apparently innocent at first, could later wreak havoc (actually!) whereas in manufacturing.

Knowledge leakage guidelines

Picture by Editor